1. Tabled

1.1. Students inconsistently engage with async content

1.2. 8140 Outline - example

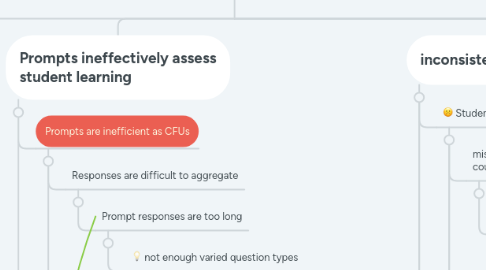

2. Prompts ineffectively assess student learning

2.1. Prompts are inefficient as CFUs

2.1.1. Responses are difficult to aggregate

2.1.1.1. Prompt responses are too long

2.1.1.1.1. not enough varied question types

2.1.2. Individual responses to open-ended questions take too long to parse

2.1.2.1. Prompts don't encourage collaboration

2.1.2.1.1. Faculty unsure whether benefits of group responses to BLTs outweigh logistical concerns of getting students to collaborate during async content

2.2. Prompts are ineffective as CFUs

2.2.1. Questions are too open-ended

2.2.1.1. Initial intent of prompts was meta-cognitive, not Checking for Understanding (CFU)

2.2.1.1.1. Design of BLTs wasn't explicit about meeting both demands

3. Curriculum/Async not organized or communicated to promote learning

3.1. Poor presentation of the async video elements

3.1.1. Length of async content doesn't support prompts - too long

3.1.2. Coherence of async content doesn't support prompts

3.2. Lack of coherence of course content presentation - LMS/Syllabus/Live sessions.

3.2.1. Curricular elements may be poorly organized

3.2.1.1. Objectives aren't clear enough within weeks

3.2.1.1.1. Objectives aren't communicated at the outset of the async content

3.2.1.1.2. Initial course design may not have been explicit enough to allow for this level of intention

3.2.2. Important Curricular resources/documents aren't available in all locations

3.2.2.1. faculty weren't aware of LMS capability

3.2.2.2. LMS files pod doesn't allow for organization/folder structure

3.2.2.3. Significant scope of work

3.2.2.3.1. does each course at full implementation need a TA?

3.2.2.4. Faculty need better routines for course semester setup

3.2.2.4.1. Lack explicit set of process steps

3.2.2.4.2. New process

3.2.3. curricular references or materials are introduced in async without mention or connection to syllabus or other LMS locations - seems disjointed

4. Omitted or ineffective Faculty processes or structures

4.1. Faculty don't communicate coherently across touch-points - Live/LMS/Syllabus

4.1.1. reminders

4.1.1.1. didn't fully appreciate frustration

4.1.1.2. Were unaware of the impact of LMS progression as a 'communication' medium; see need to enforce consistency with syllabus

4.1.1.3. were unaware of some strategies

4.1.1.3.1. previewing in async then posting to wall

4.1.2. preview async objectives

4.1.2.1. Insufficient course organization

4.1.2.2. Lack of clarity on mechanism to communicate objectives

4.1.3. preview BLTs - to promote learning

4.2. Need better routines to make use of CFUs

4.2.1. new faculty

4.2.2. Time intensive

4.2.2.1. overall workload

4.2.2.2. Prompts are inefficient to parse

4.2.2.2.1. Too many prompts

4.2.2.2.2. wrong prompt type

4.2.2.3. Sense of efficacy

4.2.2.3.1. accuracy of time projections

4.2.2.3.2. Lack of efficient strategies

4.2.2.4. Compensation is insufficient for the workload

4.2.3. Multiple instructors may make utilizing feedback less effective

4.3. Faculty need to actively monitor and engage with shared spaces (LMS) to maintain positive, accessible use

4.4. Faculty didn't communicate BLT expectations fully

4.4.1. didn't model good practices

4.4.2. time

4.4.3. Quality

4.4.4. Should we highlight each week what we'll parse more closely?

4.5. Haven't explicitly incorporated submissions

4.5.1. Live Plans not developed with CFUs explicitly in mind

4.5.1.1. lack of curriculum organization

4.5.1.2. remove waste

4.5.2. need new routines once new CFUs are developed

5. Provisional root causes

5.1. lack of balance of BLTs for different purposes

5.2. Ineffective communication of curricular progress and BLT previews inhibit CFUs

5.3. Student workload needs more flexibility, better coordination

5.4. Lack of organization/communication of curricular progression and BLTs

5.5. Live session design not fully integrated with BLTs

5.6. Lower priority

6. inconsistent student engagement

6.1. Students get behind

6.1.1. misalignment of major assignments across courses

6.1.1.1. Lack of structural process to coordinate across instructors

6.1.1.1.1. Prize faculty autonomy

6.1.1.1.2. Challenge is new in new program

6.1.2. Demanding work/home lives

6.1.2.1. Inconsistent demands outside of school

6.1.3. Inflexible due dates for major assignments

6.1.3.1. Didn't foresee challenges of workload

6.1.3.2. Haven't spent time revising major assignments (since last revision)

6.1.3.2.1. Could help solve a problem of spreading out grading demands

6.2. Student responses are inconsistently thoughtful

6.2.1. Async process differences

6.2.1.1. Best practices vs. personal preference

6.2.1.1.1. watch then read

6.2.1.1.2. read then watch

6.2.1.1.3. Have no idea on what to do here

6.2.1.2. Communicated expectations for BLTs

6.2.1.2.1. Deadlines but not quality

6.2.1.3. The prompts are harder to engage with effectively on mobile devices - can't type effectively to respond to longer prompts.

6.2.2. Academic skills/preparation

6.2.2.1. Note taking skills

6.2.2.2. Process reading

6.2.2.3. Lack of formal process for collecting best practices and communicating to students

6.2.3. Differing levels of relevance/interest in source material

6.2.3.1. Inconsistent engagement from lack of relevance, given the education-specific nature of the course

6.2.3.1.1. Faculty have sector bias

6.3. Students can't get remediation during async content - surface questions

6.3.1. LMS mechanisms feel 'dead'

6.3.1.1. Norms not installed

6.3.1.2. Behavior hasn't been modeled

6.3.1.3. Wall feels too general for clarifying questions

6.3.1.4. Students might prefer 1-to-1 channel for clarifying questions

6.3.1.5. Might not be sustainable to offer a clarification mechanism, through faculty, on that tight a timeline

6.3.1.5.1. too many doctoral students

6.3.1.6. Individual behaviors can have a negative impact for the whole group

6.3.2. Lack of peer collaboration

6.3.2.1. New students

6.3.2.1.1. No relationship prior to course launch

6.3.2.1.2. Takes longer to build relationships in on-line format

6.3.2.2. Not directing students to collaborate outside of live sessions early in the course.

6.3.2.3. Students have access to classrooms but don't initially utilize them

6.3.2.3.1. Lack of comfort with technology

6.3.3. Faculty support isn't realistic at scale or on this time horizon - may not be necessary that often since the live session is coming?

7. Data needs

7.1. Course access

7.1.1. Timing relative to class start

7.1.2. Timing relative to session start

7.2. Video viewing

7.2.1. Time spent viewing

7.2.2. Repeated viewing

7.2.3. Audio setting

7.2.4. Speed setting

7.3. 2VU access relative to class start

7.4. Platform access type

7.4.1. Mobile vs Laptop

7.5. BLTs

7.5.1. Prompt responses

7.6. Student Interview

7.6.1. Read/watch order

7.6.1.1. Can you even specify?

7.7. Outcomes

7.7.1. Comprehension?

7.8. Drivers

7.8.1. CFUs working?

7.8.1.1. faculty self-report

7.8.2. Student engagement

7.8.2.1. On-line engagement metric

7.8.2.2. prompt metadata

7.8.2.3. video metadata

7.8.2.4. Live session attendance/polling

7.8.3. Faculty Process