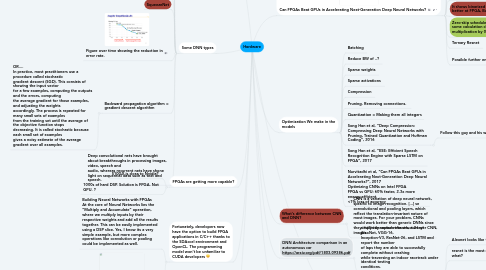

1. Some DNN types

1.1. GoogleNeet 22 layers

1.2. Resnet 125 layers

1.2.1. BinaryConnect at NIPS'15

1.2.2. Sparse CNN at CVPR 15

1.2.3. Pruning NIPS 15

1.2.4. Ternary Resnet at ICASSP'17

1.3. XNORNet

1.4. Ternary Connect ICL16

1.5. DeepComp ICLR16

1.6. SqueezeNet

1.7. Figure over time showing the reduction in error rate.

1.8. Backward propagation algorithm = gradient descent algorithm

1.8.1. OR.... In practice, most practitioners use a procedure called stochastic gradient descent (SGD). This consists of showing the input vector for a few examples, computing the outputs and the errors, computing the average gradient for those examples, and adjusting the weights accordingly. The process is repeated for many small sets of examples from the training set until the average of the objective function stops decreasing. It is called stochastic because each small set of examples gives a noisy estimate of the average gradient over all examples.

1.9. Deep convolutional nets have brought about breakthroughs in processing images, video, speech and audio, whereas recurrent nets have shone light on sequential data such as text and speech.

2. FPGAs are getting more capable?

2.1. S10GX is close to Nvidia?

2.2. 1000s of hard DSP. Solution is FPGA. Not GPU. ?

3. Systolic arrays are used in hardware

3.1. Systolic arrays are examples of MISD architecture. Multiple instruction single data.https://en.wikipedia.org/wiki/Systolic_array

3.2. GEMM : General matric multiply engines

3.2.1. A design example

4. Fortunately, developers now have the option to build FPGA applications in C/C++ thanks to the SDAccel environment and OpenCL. The programming model won’t be unfamiliar to CUDA developers :)

4.1. Building Neural Networks with FPGAs At the core of Neural Networks lies the “Multiply and Accumulate” operation, where we multiply inputs by their respective weights and add all the results together. This can be easily implemented using a DSP slice. Yes, I know its a very simple example, but more complex operations like convolution or pooling could be implemented as well.

4.2. NVIDIA Deep Learning Accelerator

5. Nvidia M40

5.1. Two years old

5.2. China Competition[,..]for the project refers specifically to Nvidia: the ministry says it wants a chip that delivers performance and energy efficiency 20 times better than that of Nvidia’s M40 chip, branded as an “accelerator” for neural networks.

5.2.1. ...

5.2.2. 64 TFLOPS of traditional half-precision or 128 TOPS using the 8-bit integer metric commonly used in machine learning algorithms Cambricon, Makers of Huawei's Kirin NPU IP, Build A Big AI Chip and PCIe Card

5.2.2.1. MLUv01 architecture

5.2.2.2. Pic

5.2.3. http://www.fhi.ox.ac.uk/wp-content/uploads/Deciphering_Chinas_AI-Dream.pdf

5.2.4. Cambricon Reaches for the Cloud With a Custom AI Accelerator, Talks 7nm IPs

6. Na. Their methodology is not the real car. A small robotic car toy. Plus wireless image transfer? No. Never efficient like on board computer. How much data you transfer? ETC ETC. NO.

7. Can FPGAs Beat GPUs in Accelerating Next-Generation Deep Neural Networks?

7.1. Eriko Nurvi

7.2. Mentions you can use Torch in cuBLAS or Cuda libraries for DNN? I need to double check this.

7.3. FGPA estimates are done with Quartus and PowerPlay.

7.4. FPGAs are better in Perf/Watt. It is expected...Customizized.

7.4.1. It is even better at performance at low precision.

7.5. It shows binarized low precision GEMM is better at FPGA, But how often is this used?

7.6. Zero-skip scheduler. Allows you to skip some calculation checks, basically multiplication by 0.

7.7. Ternary Resnet

7.8. Possible further enhancements

7.8.1. Math transformations such as FTT.

7.8.2. Winograd?

8. Optimization We make in the models

8.1. Batching

8.2. Reduce BW of ..?

8.3. Sparse weights

8.4. Sparse activations

8.5. Compression

8.6. Pruning. Removing connections.

8.7. Quantization = Making them all integers

8.8. Song Han et al, “Deep Compression: Compressing Deep Neural Networks with Pruning, Trained Quantization and Huffman Coding”, 2016

8.8.1. Follow this guy and his work.

8.9. Song Han et al, “ESE: Efficient Speech Recognition Engine with Sparse LSTM on FPGA”, 2017

8.10. Nurvitadhi et al, “Can FPGAs Beat GPUs in Accelerating Next-Generation Deep Neural Networks?”, 2017 Optimizing CNNs on Intel FPGA FPGA vs GPU: 60% faster, 2.3x more energy-efficient <1% loss of accuracy

9. What's difference between CNN and DNN?

9.1. CNN is a variation of deep neural network, spesific to image recognition. [...] se convolutional and pooling layers, which reflect the translation-invariant nature of most images. For your problem, CNNs would work better than generic DNNs since they implicitly capture the structure of images.

10. DNN Architecture comparison in an autonomous car https://arxiv.org/pdf/1803.09386.pdf

10.1. a fully-connected network, a 2-layer CNN, AlexNet, VGG-16, Inception-V3, ResNet-26, and LSTM and report the number of laps they are able to successfully complete without crashing while traversing an indoor racetrack under identical testing conditions.

10.1.1. Alexnet looks like the best one

10.1.2. resnet is the most efficient one. Powerise or what?