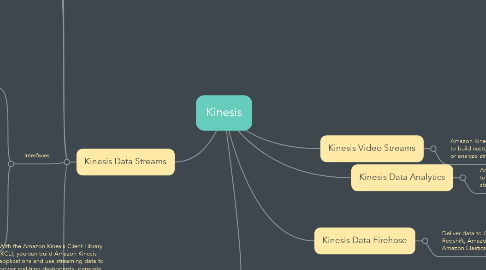

1. Kinesis Data Streams

1.1. Usage Patterns

1.1.1. Real-time data analytics

1.1.2. Log and data feed intake and processing

1.1.3. Real-time metrics and reporting

1.2. Cost Model

1.2.1. Each shard supports up to 5 read transactions per second, up to a maximum total of 2 MB of data read per second. Each shard also supports up to 1,000 write transactions per second, up to a maximum total of 1 MB of data written per second.
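
The per-shard limits above can be turned into a rough shard count estimate. The following is a minimal sketch, assuming steady throughput and only the three limits quoted in 1.2.1; the function name and its parameters are illustrative and not part of any AWS SDK.

```python
import math

# Per-shard limits as quoted in the cost model notes.
WRITE_MB_PER_SHARD = 1.0
WRITE_RECORDS_PER_SHARD = 1000
READ_MB_PER_SHARD = 2.0


def estimate_shards(write_mb_per_sec, records_per_sec, read_mb_per_sec):
    """Return the smallest shard count that satisfies all three limits."""
    by_write_bytes = math.ceil(write_mb_per_sec / WRITE_MB_PER_SHARD)
    by_write_count = math.ceil(records_per_sec / WRITE_RECORDS_PER_SHARD)
    by_read_bytes = math.ceil(read_mb_per_sec / READ_MB_PER_SHARD)
    # A stream always has at least one shard.
    return max(1, by_write_bytes, by_write_count, by_read_bytes)
```

For example, `estimate_shards(3.5, 2500, 7.0)` is driven by both the write and read byte limits. The estimate ignores traffic spikes and uneven key distribution, so treat it as a lower bound.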

1.3. Interfaces

1.3.1. Producers

1.3.1.1. AWS Kinesis PUT API

1.3.1.2. AWS SDK or toolkit abstraction

1.3.1.3. Amazon Kinesis Producer Library (KPL) - see "Developing Producers Using the Amazon Kinesis Producer Library" in the Amazon Kinesis Data Streams documentation

1.3.1.4. Amazon Kinesis Agent

1.3.1.4.1. Kinesis Agent is a stand-alone Java software application that offers an easy way to collect and send data to Kinesis Data Streams. The agent continuously monitors a set of files and sends new data to your stream. The agent handles file rotation, checkpointing, and retry upon failures. It delivers all of your data in a reliable, timely, and simple manner. It also emits Amazon CloudWatch metrics to help you better monitor and troubleshoot the streaming process.
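
As an illustration of the SDK route listed under Producers, here is a minimal sketch of writing one record with a simple retry loop. It assumes `client` behaves like `boto3.client("kinesis")` and uses its `put_record` call; the helper name `send_event`, the JSON encoding, and the retry count are assumptions made for this example. The KPL and the Kinesis Agent handle batching and retries in their own, more complete ways.

```python
import json


def send_event(client, stream_name, event, partition_key, max_attempts=3):
    """Serialize `event` as JSON and write it to the stream, retrying on errors.

    `client` is expected to behave like boto3.client("kinesis").
    """
    data = json.dumps(event).encode("utf-8")
    last_error = None
    for _ in range(max_attempts):
        try:
            return client.put_record(
                StreamName=stream_name,
                Data=data,
                PartitionKey=partition_key,
            )
        except Exception as exc:  # a real producer would catch narrower errors and back off
            last_error = exc
    raise last_error
```

A call might look like `send_event(boto3.client("kinesis"), "example-stream", {"temp": 21}, "device-42")`, where the stream name and partition key are placeholders.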

1.3.2. Consumers

1.3.2.1. Amazon Kinesis Data Streams application - An application that you build to read and process data records from Kinesis data streams. You can run it on Amazon EC2 instances and use Auto Scaling to scale it.

1.3.2.2. KCL - For processing data that has already been put into an Amazon Kinesis stream, there are client libraries provided to build and operate real-time streaming data processing applications. The KCL acts as an intermediary between Amazon Kinesis Data Streams and your business applications which contain the specific processing logic. There is also integration to read from an Amazon Kinesis stream into Apache Storm via the Amazon Kinesis Storm Spout.
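
To make the consumer side concrete, the sketch below reads one shard with the low-level API calls `get_shard_iterator` and `get_records`. It assumes `client` behaves like `boto3.client("kinesis")`; the helper name `read_shard`, the batch limit, and the `handle_record` callback are assumptions for illustration. This is not how the KCL is used: the KCL adds checkpointing, load balancing across workers, and shard discovery on top of calls like these.

```python
def read_shard(client, stream_name, shard_id, handle_record, max_batches=10):
    """Read up to `max_batches` batches from one shard, oldest record first."""
    iterator = client.get_shard_iterator(
        StreamName=stream_name,
        ShardId=shard_id,
        ShardIteratorType="TRIM_HORIZON",
    )["ShardIterator"]
    processed = 0
    for _ in range(max_batches):
        if iterator is None:  # no further iterator: the shard is closed and fully read
            break
        response = client.get_records(ShardIterator=iterator, Limit=100)
        for record in response["Records"]:
            handle_record(record["Data"])
            processed += 1
        iterator = response.get("NextShardIterator")
    return processed
```

A long-running consumer would also pause between `get_records` calls so that it stays within the per-shard read transaction limit.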

1.4. Anti Patterns

1.4.1. Small scale consistent throughput - Even though Kinesis Data Streams works for streaming data at 200 KB/sec or less, it is designed and optimized for larger data throughputs.

1.4.2. Long-term data storage and analytics - Kinesis Data Streams is not suited for long-term data storage. By default, data is retained for 24 hours, and you can extend the retention period to up to 7 days. Move any data that needs to be stored for longer than 7 days into another durable storage service such as Amazon S3, Amazon Glacier, Amazon Redshift, or DynamoDB.

2. Kinesis Client Library

2.1. With the Amazon Kinesis Client Library (KCL), you can build Amazon Kinesis applications and use streaming data to power real-time dashboards, generate alerts, and implement dynamic pricing and advertising. You can also emit data from Kinesis Data Streams and Kinesis Video Streams to other AWS services such as Amazon Simple Storage Service (Amazon S3), Amazon Redshift, Amazon Elastic MapReduce (Amazon EMR), and AWS Lambda.

3. Kinesis Data Firehose

3.1. Delivers data to Amazon S3, Amazon Redshift, Amazon Kinesis Data Analytics, and Amazon Elasticsearch Service

4. Kinesis Data Analytics

4.1. Amazon Kinesis Data Analytics enables you to process and analyze streaming data with standard SQL.

5. Kinesis Video Streams

5.1. Amazon Kinesis Video Streams enables you to build custom applications that process or analyze streaming video.

6. Lambda

6.1. Triggers

6.1.1. S3

6.1.2. DynamoDB

6.1.3. Kinesis Data Streams (KDS)

6.1.4. SNS

6.1.5. CloudWatch

6.2. Anti Patterns

6.2.1. Long Running Applications

6.2.2. Dynamic Websites

6.2.3. Stateful Applications

6.3. Usage Patterns

6.3.1. Real-time file processing

6.3.2. Real-time stream processing

6.3.3. ETL - You can use Lambda to run code that transforms data and loads it from one data repository into another.

6.3.4. Replace Cron

6.3.5. Process AWS Events
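
The stream processing and ETL patterns above can be sketched as a Lambda handler triggered by Kinesis Data Streams. Kinesis events deliver each record's payload base64-encoded under `record["kinesis"]["data"]`. The `transform` function, the JSON payload format, and the `name` field are hypothetical and exist only for this example, and the load step is left as a comment.

```python
import base64
import json


def transform(payload):
    """Hypothetical transformation step: upper-case the `name` field."""
    return {**payload, "name": str(payload.get("name", "")).upper()}


def handler(event, context):
    """Decode each Kinesis record in the event, transform it, and return the results."""
    results = []
    for record in event["Records"]:
        raw = base64.b64decode(record["kinesis"]["data"])
        results.append(transform(json.loads(raw)))
    # A real ETL function would write `results` to the target repository here.
    return results
```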

6.4. Performance

6.4.1. The first invocation can incur additional latency (the cold start problem).
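
One common way to limit the cost of a cold start is to do expensive setup once at module load, so that later invocations in the same execution environment reuse it. The sketch below illustrates that idea only; `_load_resources` is a stand-in for real setup work such as creating SDK clients, and the counter exists purely to make the reuse visible.

```python
import time

_INIT_COUNT = 0


def _load_resources():
    """Stand-in for expensive setup such as creating SDK clients or loading config."""
    global _INIT_COUNT
    _INIT_COUNT += 1
    return {"loaded_at": time.time()}


# Runs once per execution environment, during the cold start.
RESOURCES = _load_resources()


def handler(event, context):
    """Warm invocations reuse RESOURCES instead of rebuilding them."""
    return {"init_count": _INIT_COUNT, "loaded_at": RESOURCES["loaded_at"]}
```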