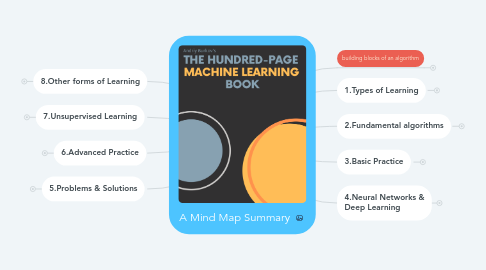

1. 8.Other forms of Learning

1.1. metric learning

1.1.1. generalizing the distance calculation using a metric A and learning the best parameters from data

1.2. Learning to Recommend

1.2.1. content-based

1.2.2. collaborative

1.3. word embedding

1.3.1. word2vec

1.3.1.1. skipgram

2. 7.Unsupervised Learning

2.1. Density Estimation

2.1.1. similar to kernel regression. Estimates the PDF using a gaussian kernel.

2.2. clustering

2.2.1. labeling unlabeled data

2.2.2. K-means

2.2.2.1. number of clusters (k) is determined a priori

2.2.2.2. k centroids are randomly chosen at first, then for each point the distance to the centroids is calculated and labels are assigned based on minimum distance. Then in every iteration the location of the centroid is updated.

2.2.2.3. the clusters are hyperspheres

2.2.2.4. it is non-deterministic: each run can lead to a different clustering.

2.2.3. DBSCAN

2.2.3.1. is density based and groups together points that are packed together

2.2.3.2. has 2 hyperparameters ϵ and n

2.2.3.3. can create arbitrary shaped clusters

2.2.4. HDBSCAN

2.2.4.1. only has one hyperparameter n: minimum number of points to put in a cluster

2.2.4.2. slightly slower than modern k-means algorithms, but is more effective.

2.2.5. determining the optimal k

2.2.5.1. splitting the data set into training and test sets and using "prediction strength" to determine k.

2.2.5.2. experimentally, a reasonable number of clusters is the largest k that has PS>0.8.

2.2.5.3. other methods

2.2.5.3.1. gap statistic

2.2.5.3.2. elbow method

2.2.5.3.3. average silhouette method

2.2.6. Gaussian Mixture model

2.2.6.1. soft clustering algorithm (probabilistic)

2.2.6.2. finds cluster membership by maximizing a Gaussian likelihood

2.2.6.3. clusters can be non-spherical (in contrast to k-means)

2.2.7. spectral clustering

2.2.8. hierarchical clustering

2.3. Dimensionality Reduction

2.3.1. PCA

2.3.1.1. transforming the data into a new coordinate system such that the first coordinate is along the highest variance, the second coordinate is along the second highest variance etc.

2.3.2. UMAP

2.3.3. t-SNE

2.4. outlier detection

2.4.1. autoencoder

2.4.2. one-class classifier

3. 6.Advanced Practice

3.1. Imbalanced Dataset

3.1.1. solutions

3.1.1.1. oversampling: making multiple copies of the underrepresented class

3.1.1.2. undersampling: randomly removing copies of the majority class

3.1.1.3. create synthetic examples

3.1.1.3.1. SMOTE

3.1.1.3.2. ADASYN

3.1.1.4. decision trees, random forests, and gradient boosting often perform well with imbalanced datasets.

3.2. Combining Models

3.2.1. averaging

3.2.1.1. for regression models, or classification models that return a classification score

3.2.2. majority vote

3.2.2.1. for classification models

3.2.3. stacking

3.2.3.1. a meta-model that takes the output of the base models as input

3.3. Training Neural Networks

3.3.1. image

3.3.1.1. standardize pixels

3.3.2. text

3.3.2.1. tokenize - bag of words

3.3.2.2. word embedding

3.3.3. start with 1 or 2 layers and gradually increase the size

3.4. Advanced Regularization

3.4.1. dropout

3.4.1.1. randomly dropping some of the data at each training epoch (similar to jackknife)

3.4.2. early stopping

3.4.2.1. assessing the performance of the preliminary model on the validation set at the end of each epoch

3.4.3. batch normalization/standardization

3.4.3.1. normalizing the output of each layer before passing it to the next layer as input

3.4.4. data augmentation

3.4.4.1. synthesizing examples by applying transformations such as rotation, zooming, darkening, etc to the data the training data (especially image data) but keeping the original labels.

3.5. Transfer Learning

3.5.1. Using a neural net trained on some data set to predict examples from another one (the two data sets can be from different distributions).

3.6. Algorithmic Efficiency

3.6.1. avoid for loops whenever possible (use numpy instead)

3.6.2. if the order doesn't matter, use sets instead of loops

3.6.3. use dicts in python for fast key look-up

3.6.4. for iterating over a vast number of elements, use generators and

3.6.5. use cProfile, multiprocessing, PyPy, Numba

4. 5.Problems & Solutions

4.1. Kernel Regression

4.1.1. non-parametric regression that can be used for nonlinear data. (only has a hyper-parameter b)

4.2. multi-class classification

4.2.1. if the algorithm is naturally binary (e.g. SVM) it can be generalized for multi-class classification using the one versus test.

4.3. one-class classification

4.3.1. used for detecting anomalies, outliers and novelties

4.3.1.1. one-class Gaussian

4.3.1.2. on-class kNN

4.3.1.3. one-class k-means

4.3.1.4. one-class SVM

4.4. multi-label classification

4.4.1. can be converted to multi-class classification

4.5. Ensemble Learning

4.5.1. combining an ensemble of weak (but fast) learners to make a high accuracy meta-model

4.5.2. Boosting

4.5.2.1. using slightly different models on the same training data. each model tries to fix the errors made by the previous model iteratively. The final model is the certain combination of the weak models built iteratively.

4.5.2.1.1. gradient boosting

4.5.3. Bagging

4.5.3.1. Each model uses a slightly different copy of the training data to built multiple weak models and then combines them. Example:

4.5.3.1.1. Random Forest

4.6. Active Learning

4.6.1. Applied when obtaining labeled data is costly

4.6.1.1. density and uncertainty based

4.6.1.1.1. model is trained with few labeled data. a score is calculated for unlabeled data (density x uncertainty). The data with highest scores are then labeled by an expert.

4.6.1.2. Support Vector based

4.6.1.2.1. a decision boundary is formed using labeled data. an expert annotates the unlabeled data closest to the boundary.

4.7. Semi-Supervised Learning

4.7.1. only a small fraction of the data is labeled.

4.8. auto-encoder

4.8.1. a network consisting of an encoder and decoder, which learns a representation of data with reduced dimensionality (learns structures in data in an unsupervised manner).

4.9. One-shot learning

4.9.1. uses a triple loss function (max of the distance from the anchor to the positive and negative examples.)

4.9.2. e.g. face-recognition

4.10. zero-shot learning

4.10.1. word embedding

5. building blocks of an algorithm

5.1. loss function

5.2. optimization criterion (cost function)

5.3. optimization routine (training)

6. 1.Types of Learning

6.1. Supervised

6.1.1. regression (continuous)

6.1.2. classification (categorical)

6.2. Unsupervised

6.2.1. clustering

6.2.2. dimensionality reduction

6.3. Semi-supervised

7. 2.Fundamental algorithms

7.1. Linear Regression

7.1.1. loss function: the square error

7.1.2. minimizes the loss function by setting the gradient equal to zero

7.1.3. advantages

7.1.3.1. simple

7.1.3.2. interpretable

7.1.3.3. rarely overfits

7.1.4. disadvantages

7.1.4.1. over simplifies real world problems because:

7.1.4.1.1. assumes linearity in data

7.1.4.1.2. assumes that the errors are normally distributed

7.1.4.2. prone to outliers

7.2. Logistic Regression

7.2.1. is not a regression, but a classification algorithm

7.2.2. assigns + & - labels to data using the sigmoid function

7.2.3. maximizes likelihood using gradient descent

7.2.4. advantages

7.2.4.1. efficient and fast

7.2.4.2. not prone to overfitting after regularization?

7.2.4.3. works well for single decision boundaries (vs decision tree)

7.2.4.4. data doesn't need to be scaled (normalized)

7.2.5. disadvantages

7.2.5.1. can't solve nonlinear problems (the decision boundary is linear)

7.2.5.2. Feature engineering is very important

7.2.5.3. builds the model with the data at once; has to be rebuilt with new data

7.3. Decision Tree

7.3.1. divides features into branches based on minimizing the entropy (maximizing information gain)

7.3.2. solves overfitting using pruning

7.3.2.1. pruning means going over the tree once it's built and removing branches that don't contribute significantly.

7.3.3. advantages

7.3.3.1. handles both continuous and discrete (categorical) data

7.3.3.2. handels missing data

7.3.3.3. easily interpretable

7.3.4. disadvantages

7.3.4.1. decisions are made locally, so the solution is not necessarily optimal

7.3.4.2. Is prone to overfitting

7.3.4.3. builds the model with the data at once; has to be rebuilt with new data

7.4. SVM

7.4.1. separates data into different classes using a decision boundary

7.4.2. advantages

7.4.2.1. effective in high dimensional spaces

7.4.2.2. with the appropriate kernel, it can solve complex problems

7.4.3. disadvantages

7.4.3.1. poor performance when number of features is larger than the number of samples

7.4.3.2. choosing a good kernel is not easy

7.4.3.3. long training time?

7.4.3.4. builds the model with the data at once; has to be rebuilt with new data

7.5. kNN

7.5.1. is a non-parametric model: for new data points, uses nearest data points in the training set to guess a label.

7.5.2. distance metric is the hyperparameter but it can be inferred from the data.

7.5.3. advantages

7.5.3.1. robust to noise

7.5.3.2. no training needed

7.5.3.3. learns complex models easily

7.5.4. disadvantages

7.5.4.1. the value of k needs to be determined

7.5.4.2. doesn't perform well in high demensions

7.5.4.3. not clear which distance metric to use

7.5.4.4. memory intensive

8. 3.Basic Practice

8.1. Feature Engineering

8.1.1. transforming raw data into a dataset

8.1.2. one-hot encoding

8.1.2.1. all features set to zero except the target feature (similar to bag of words)

8.1.2.2. turns categorical data into machine understandable values

8.1.3. binning

8.1.3.1. converting a continuous variable into discrete bins

8.1.3.2. helps the algorithm to learn using fewer examples

8.1.4. Normalization

8.1.4.1. converting the range of data into a standard interval e.g. [0,1] or [-1,1]

8.1.4.2. prevents features with larger numerical values from overweighting the algorithm

8.1.4.3. avoids numerical overflow

8.1.5. standardization

8.1.5.1. rescaling data so that in follows a normal dist. with mean=0 and var=1

8.1.5.2. preferable to normalization for

8.1.5.2.1. unsupervised algorithms

8.1.5.2.2. if the data naturally follows a normal distribution

8.1.5.2.3. if the data has outliers

8.1.6. missing values

8.1.6.1. can be dealt with by

8.1.6.1.1. removing the entries with missing values

8.1.6.1.2. interpolation or other data substitution algorithms

8.1.6.1.3. data imputation

8.2. Learning Algorithm Selection

8.2.1. Explainability

8.2.1.1. Neural nets and ensemble models are accurate "black boxes": make few errors but not easily interpretable.

8.2.1.2. kNN, Regression models, Decision Trees are not as accurate but easily interpretable

8.2.2. In Memory vs Out of memory

8.2.2.1. if the dataset doesn't fit in memory, incremental learning algorithms are preferable

8.2.3. number of features

8.2.3.1. neural nets and gradient boosting can handle large number of features, but something like SVM is modest in capacity

8.2.4. categorical vs numerical features

8.2.4.1. some algorithms can only handle numerical data

8.2.5. nonlinearity in data

8.2.5.1. algorithms such as SVM with linear kernel, linear and logistic regression are good choices for linear data.

8.2.6. training speed

8.2.6.1. some algorithms are slow learners (neural nets). linear and logistic regression and decision trees are much faster. random forests can benefit from multiple CPUs.

8.2.7. prediction speed

8.2.7.1. linear and logistic regression and SVMs and some kinds of neural nets are fast at prediction. Others such as kNN, ensemble algorithms and deep neural nets are slower.

8.3. Three sets

8.3.1. training set

8.3.1.1. to train the learning algorithm

8.3.1.2. 70-15-15 % for small data sets

8.3.1.3. 95-2.5-2.5 % for large data sets

8.3.2. validation set

8.3.2.1. to find the best learning algorithm

8.3.2.2. to find the best hyper-parameters of the algorithm

8.3.3. test set

8.3.3.1. to asses the performance of the model before delivery and production

8.4. Overfitting/Underfitting

8.4.1. Underfitting: high bias

8.4.1.1. the model makes many mistakes on the training dataset

8.4.1.1.1. the model is too simple

8.4.1.1.2. The features are not informative enough

8.4.2. Overfitting: high variance

8.4.2.1. the model predicts the training data too well but performs poorly on the holdout data sets

8.4.2.1.1. model is too complex

8.4.2.1.2. too many features and small number of examples

8.4.2.1.3. MSE on the test data is substantially higher than the training data

8.4.2.2. solutions

8.4.2.2.1. trying simpler models

8.4.2.2.2. reducing dimensionality

8.4.2.2.3. adding more training data

8.4.2.2.4. regularizing the model

8.5. Model Performance Assessment

8.5.1. Confusion Matrix

8.5.1.1. summarizes the results of classification

8.5.2. Precision

8.5.2.1. TP/(TP+FP)

8.5.2.2. How many of the identified positives are actually positives (the proportion of relevant items to all items returned)

8.5.2.2.1. high precision means that when you catch an item, it's actually what you want.

8.5.3. Recall (sensitivity)

8.5.3.1. TP/(TP+FN)

8.5.3.2. How many of the actual positives were identified (proportion of relevant items to all relevant items that could have been returned)

8.5.4. Accuracy

8.5.4.1. (TP+TN)/total

8.5.4.1.1. can be generalized to be cost sensitive (assign costs/weights to FP and FN)

8.5.4.2. How many did we get right, out of everything

8.5.4.3. Only works for balanced datasets

8.5.5. Receiver Operating Characteristic (ROC)

8.5.5.1. can be used to assess classifiers that have a confidence score (probability/prediction threshold)

8.5.5.2. a plot of TPR(TP/TP+FN) vs FPR(FP/FP+TN)

8.5.5.2.1. for threshold=0, both TPR=FPR=1

8.5.5.2.2. for threshold=1, both TPR=FPR=0

8.5.5.3. The higher the area under the curve, the better the classifier.

8.5.6. Hyper-parameter tuning

8.5.6.1. Brute Force Grid Search

8.5.6.1.1. use grid search with logarithmic scale

8.5.6.2. Random Search

8.5.6.3. Bayesian Optimization

8.5.6.4. Gradient Based

8.5.6.5. Evolutionary

8.5.7. Cross Validation

8.5.7.1. k-fold validation: split data into k sections, each time one section (fold) is used as the test set.

9. 4.Neural Networks & Deep Learning

9.1. Neural Nets

9.1.1. are generalized Logistic Regression

9.1.2. e.g. multi-layer perceptron (Feed Forward model)

9.2. Deep Learning

9.2.1. Neural Nets with more than 2 non-output layers

9.2.2. Convolutional Neural Net

9.2.2.1. used in image and text processing

9.2.2.2. each unit convolves a trainable filter with the input image

9.2.3. Recurrent Neural Nets

9.2.3.1. used to label, generate and classify sequences in text and speech processing