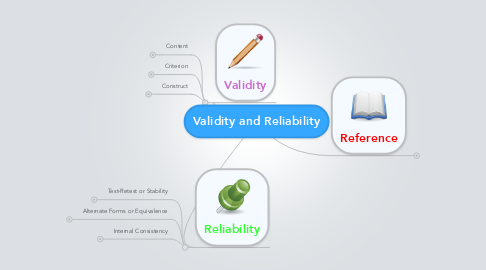

1. Reference

1.1. Kubiszyn, T., & Borich, G. (2010). Educational testing and measurement: Classroom application and practice (9th ed.). Hoboken, NJ: John Wiley & Sons.

2. Validity

2.1. Content

2.1.1. "The content validity evidence for a test is established by inspecting test questions to see whether they correspond to what the user decides should be covered by the test" (Kubiszyn & Borich, 2010, p. 330). The assessor should ensure that the test questions match both the material that was taught and the learning outcomes the students are expected to achieve.

2.2. Criterion

2.2.1. With criterion-related validity evidence, "scores from a test are correlated with an external criterion" (Kubiszyn & Borich, 2010, p. 330). The external criterion should measure the same knowledge or skills as the test. For example, scores from a month's worth of weekly vocabulary quizzes could be compared with scores on a final vocabulary test given at the end of the month. A student who did well on the weekly quizzes would be expected to do well on the final test, so a strong positive correlation between the two sets of scores supports criterion-related validity.
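Criterion-related validity evidence is usually summarized as a correlation coefficient. The sketch below computes Pearson's r between each student's quiz average and final test score for the vocabulary example. The `pearson_r` helper and all scores are hypothetical, and Python is only an illustrative choice.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two score lists of the same length (n >= 2)")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of cross-products of each student's deviations from the two means
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    # Sums of squared deviations for each list
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(ss_x * ss_y)

# Hypothetical data: each student's average on the weekly vocabulary quizzes
# and the same student's score on the end-of-month vocabulary test.
quiz_averages = [92, 85, 78, 70, 64, 88]
final_test = [95, 82, 75, 72, 60, 90]

r = pearson_r(quiz_averages, final_test)
print(f"criterion-related validity coefficient r = {r:.2f}")
```

A value of r close to +1 means students kept roughly the same standing on both measures. A value near 0 would suggest the final test is not measuring what the quizzes measured.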

2.3. Construct

2.3.1. "A test has construct validity evidence if its relationship to other information corresponds well with some theory" (Kubiszyn & Borich, 2010, p. 332). In other words, the assessor uses a theory to predict how the test scores should relate to other information, then checks whether the actual scores match that prediction.

3. Reliability

3.1. Test-Retest or Stability

3.1.1. "The test is given twice and the correlation between the first set of scores and the second set of scores is determined" (Kubiszyn & Borich, 2010, p. 341). As the name suggests, the same test-takers take the same test twice, and the two sets of scores are correlated with each other. One problem with this method is that the first sitting can influence the second: test-takers who remember the questions may score differently the second time for reasons unrelated to the test's reliability, unless enough time passes between sittings for them to forget the test.

3.2. Alternate Forms or Equivalence

3.2.1. "If there are two equivalent forms of a test, these forms can be used to obtain an estimate of the reliability of the scores from the test" (Kubiszyn & Borich, 2010, p. 343). This method is similar to test-retest, but because the second administration uses a different form rather than the same test, it avoids the problem of students recognizing items from the first sitting. The same students take both forms, and the two sets of scores are correlated. The drawback is that the assessor must write two good, equivalent tests, which can be considerably more work.
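For alternate forms, the reliability estimate is again the correlation between the two sets of scores. The sketch below also reports each form's mean and standard deviation, on the assumption (ours, not a procedure taken from the source) that roughly equal means and spreads are a quick sanity check that the forms really are comparable. The names `pearson_r` and `compare_forms` and all scores are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev

def pearson_r(x, y):
    """Pearson correlation between two equal-length score lists."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))

def compare_forms(form_a, form_b):
    """Summarize two forms taken by the same students (same order in both lists)."""
    if len(form_a) != len(form_b) or len(form_a) < 2:
        raise ValueError("each student needs a score on both forms")
    return {
        "mean_a": mean(form_a), "mean_b": mean(form_b),
        "sd_a": stdev(form_a), "sd_b": stdev(form_b),
        # The alternate-forms reliability estimate itself
        "r": pearson_r(form_a, form_b),
    }

# Hypothetical scores for six students who took both Form A and Form B.
form_a = [88, 72, 95, 60, 79, 84]
form_b = [85, 75, 93, 62, 80, 81]

summary = compare_forms(form_a, form_b)
for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```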

3.3. Internal Consistency

3.3.1. "If the test in question is designed to measure a single basic concept, it is reasonable to assume that people who get one item right will be more likely to get other, similar items right" (Kubiszyn & Borich, 2010, p. 343). In other words, the test should be consistent within itself: if many of its questions cover the same topic, a student who answers one of them correctly should be more likely to answer the others correctly as well.
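The quoted passage does not name a particular statistic. One widely used internal consistency estimate for items scored right/wrong is Kuder-Richardson formula 20 (KR-20): KR-20 = k/(k - 1) × (1 - Σpq / σ²), where k is the number of items, p is the proportion of students answering an item correctly, q = 1 - p, and σ² is the variance of the students' total scores. Below is a minimal sketch, assuming every item is scored 1 or 0; the response data are hypothetical.

```python
from statistics import pvariance

def kr20(responses):
    """Kuder-Richardson formula 20 for items scored 1 (right) or 0 (wrong).

    responses: one list per student, one 0/1 entry per item.
    """
    n_students = len(responses)
    k = len(responses[0])  # number of items
    if k < 2 or n_students < 2:
        raise ValueError("need at least two items and two students")
    # p = proportion of students answering each item correctly; q = 1 - p
    p = [sum(student[i] for student in responses) / n_students for i in range(k)]
    sum_pq = sum(pi * (1 - pi) for pi in p)
    # Population variance of total scores, consistent with p*q (also a population variance)
    total_var = pvariance([sum(student) for student in responses])
    if total_var == 0:
        raise ValueError("total scores must vary across students")
    return (k / (k - 1)) * (1 - sum_pq / total_var)

# Hypothetical answers: five students, four items on the same concept.
answers = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]

print(f"KR-20 = {kr20(answers):.2f}")
```

Here, students who got harder items right also got the easier ones right, which is the pattern the quoted passage describes. With only four items, though, KR-20 stays well below 1 even for this perfectly consistent pattern.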