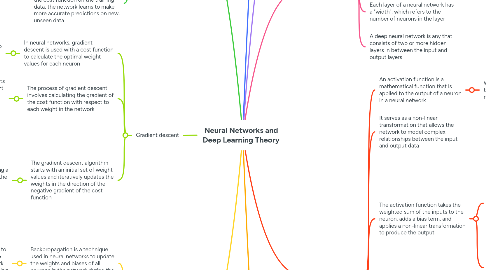

1. Cost functions

1.1. In a neural network, a cost function (also called a loss function or objective function) is used to evaluate the network's outputs during the training process

1.1.1. The cost function measures how well the network's predictions match the true output labels of the training data

1.2. During training, the network makes predictions on the input data, and the cost function is used to calculate the difference between these predictions and the true output labels

1.2.1. The goal of the training process is to minimize the value of the cost function, which can be achieved by adjusting the network's weights and biases

1.3. A common cost function for regression tasks is the mean squared error (MSE), which calculates the average squared difference between the predicted output and the true output

1.3.1. Other common cost functions include categorical cross-entropy loss and binary cross-entropy loss, which are typically used for classification tasks
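
As a minimal sketch of the cost functions named above, the following uses plain Python lists. The function names and the `eps` clamp (used to avoid taking the log of zero) are illustrative assumptions, not part of the original notes.

```python
import math

def mean_squared_error(y_true, y_pred):
    """Average of the squared differences between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    """Average negative log-likelihood for 0/1 labels and predicted probabilities."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)  # clamp so log() never sees 0 (assumed safeguard)
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(y_true)

def categorical_cross_entropy(one_hot_true, probs, eps=1e-12):
    """Negative log of the probability assigned to the true class (single sample)."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(one_hot_true, probs))

mse = mean_squared_error([1.0, 2.0, 3.0], [1.5, 2.0, 2.0])
bce = binary_cross_entropy([1, 0], [0.9, 0.2])
cce = categorical_cross_entropy([0, 1, 0], [0.1, 0.7, 0.2])
```

With these inputs, `mse` is about 0.4167, `bce` about 0.1643, and `cce` about 0.3567. In each case a lower value means the predictions are closer to the true labels.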

1.4. Once the cost has been calculated, a technique called backpropagation computes the gradients of the cost function with respect to each weight and bias in the network

1.4.1. An optimizer such as gradient descent then uses these gradients to update the parameters in a way that reduces the value of the cost function

1.5. By repeatedly adjusting the network's parameters to minimize the cost function on the training data, the network aims to learn patterns that also produce accurate predictions on new, unseen data

2. Gradient descent

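2.1. In neural networks, gradient descent is an optimization algorithm that uses the gradient of the cost function to search for good weight values for each neuron

2.1.1. The goal of gradient descent is to find a set of weights that minimizes the cost function, although for non-convex cost functions it may settle in a local minimum rather than the global one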

2.2. The process of gradient descent involves calculating the gradient of the cost function with respect to each weight in the network

2.2.1. The gradient is a vector that points in the direction of steepest ascent of the cost function, and its magnitude indicates the rate of change of the cost function with respect to the weight

2.3. The gradient descent algorithm starts with an initial set of weight values and iteratively updates the weights in the direction of the negative gradient of the cost function

2.3.1. This update is performed using a learning rate, which controls the size of the steps taken in the weight space

2.3.1.1. If the learning rate is too high, the weight values may oscillate and fail to converge to a minimum. If the learning rate is too low, the convergence may be slow
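
The effect of the learning rate can be illustrated with a small sketch. It assumes a toy one-weight cost function, `(w - 3)^2`, chosen only because its minimum (at `w = 3`) is known; the function names and the specific learning rates are illustrative.

```python
def cost(w):
    """Toy cost with a single weight; its minimum is at w = 3 (assumed example)."""
    return (w - 3.0) ** 2

def gradient(w):
    """Derivative of the toy cost with respect to w."""
    return 2.0 * (w - 3.0)

def gradient_descent(w0, learning_rate, steps):
    """Repeatedly step in the direction of the negative gradient."""
    w = w0
    for _ in range(steps):
        w = w - learning_rate * gradient(w)
    return w

w_good = gradient_descent(0.0, learning_rate=0.1, steps=100)     # converges close to 3
w_slow = gradient_descent(0.0, learning_rate=0.001, steps=100)   # still far from 3
w_diverge = gradient_descent(0.0, learning_rate=1.1, steps=100)  # overshoots further each step
```

After 100 steps, `w_good` is very close to 3, `w_slow` has only reached roughly 0.54, and `w_diverge` has oscillated with growing amplitude and ended far from the minimum.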

2.3.1.2. Rather than using a fixed learning rate, adaptive gradient descent methods adjust the effective step size during training, typically taking larger steps early on and smaller steps as the gradient approaches zero

2.3.1.2.1. Adam (Adaptive Moment Estimation) is a widely used adaptive optimizer, introduced in a paper by Kingma and Ba that was presented at ICLR in 2015. If you see Adam mentioned, it simply means that an adaptive form of gradient descent is being used to find good weight values for each neuron more efficiently
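
To make the idea of an adaptive step concrete, here is a minimal single-weight sketch of the Adam update rule. The hyperparameter defaults (`beta1=0.9`, `beta2=0.999`, `eps=1e-8`) follow the values commonly quoted for Adam; the toy gradient, learning rate, and step count are assumptions for illustration only.

```python
import math

def adam_minimize(gradient, w0, learning_rate=0.1, beta1=0.9, beta2=0.999,
                  eps=1e-8, steps=500):
    """Minimize a one-weight cost using the Adam update rule (illustrative sketch)."""
    w = w0
    m = 0.0  # running average of gradients (first moment)
    v = 0.0  # running average of squared gradients (second moment)
    for t in range(1, steps + 1):
        g = gradient(w)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        m_hat = m / (1 - beta1 ** t)  # bias correction for the zero initialisation
        v_hat = v / (1 - beta2 ** t)
        # The step is scaled by the gradient history, so it adapts per parameter
        w = w - learning_rate * m_hat / (math.sqrt(v_hat) + eps)
    return w

# Toy cost (w - 3)^2, whose gradient is 2 * (w - 3); minimum at w = 3
w_adam = adam_minimize(lambda w: 2.0 * (w - 3.0), w0=0.0)
```

In a real network the same update is applied to every weight and bias independently, which is why each parameter effectively gets its own step size.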

3. Backpropagation

3.1. Backpropagation is a technique used in neural networks to compute the gradients needed to update the weights and biases of all neurons in the network during the training process

3.1.1. These gradients allow training to minimize the error between the predicted output of the network and the true output of the training data

3.2. The backpropagation algorithm works by first computing the output of the network for a given input, and then calculating the error between this output and the true output of the training data using a cost function

3.2.1. The cost function is typically chosen to be a differentiable function such as mean squared error or cross-entropy

3.3. Next, the error is backpropagated through the network, meaning that the error is propagated backwards from the output layer to the input layer

3.3.1. This involves computing the gradient of the cost function with respect to the weights and biases of each neuron in the network

3.3.1.1. The gradient is calculated using the chain rule of calculus, which expresses the derivative of the cost with respect to a weight in an earlier layer as a product of the local derivatives along the path from that weight to the output

3.4. Once the gradients have been computed, the weights and biases of each neuron are updated using an optimization algorithm such as gradient descent, which adjusts the weights and biases in the direction that minimizes the cost function

3.5. The process of backpropagation and weight and bias updates is repeated iteratively on batches of training data until the cost stops improving, for example when it converges to a minimum on the training data

3.5.1. At this point, the network has learned to make accurate predictions on the training data, and can be used to make predictions on new, unseen data, where its accuracy should be checked separately
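
The forward pass, backward pass, and update steps described above can be sketched for a deliberately tiny network: one input, one sigmoid hidden neuron, and one linear output neuron, with a squared-error cost on a single training example. The architecture, starting parameters, and learning rate are assumptions chosen to keep the chain rule visible; the analytic gradients are compared with a finite-difference estimate as a sanity check.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(params, x):
    """Forward pass: input -> sigmoid hidden neuron -> linear output neuron."""
    w1, b1, w2, b2 = params
    z1 = w1 * x + b1
    a1 = sigmoid(z1)
    y_hat = w2 * a1 + b2
    return z1, a1, y_hat

def cost(params, x, y):
    """Squared error for a single training example."""
    return (forward(params, x)[2] - y) ** 2

def backward(params, x, y):
    """Backward pass: apply the chain rule from the output back to the input."""
    w1, b1, w2, b2 = params
    z1, a1, y_hat = forward(params, x)
    d_yhat = 2.0 * (y_hat - y)        # dC/dy_hat
    d_w2 = d_yhat * a1                # dC/dw2 = dC/dy_hat * dy_hat/dw2
    d_b2 = d_yhat
    d_a1 = d_yhat * w2                # error propagated back to the hidden neuron
    d_z1 = d_a1 * a1 * (1.0 - a1)     # sigmoid'(z1) = a1 * (1 - a1)
    d_w1 = d_z1 * x
    d_b1 = d_z1
    return [d_w1, d_b1, d_w2, d_b2]

def numerical_gradient(params, x, y, h=1e-6):
    """Central finite differences, used only to check the analytic gradients."""
    grads = []
    for i in range(len(params)):
        up = list(params)
        up[i] += h
        down = list(params)
        down[i] -= h
        grads.append((cost(up, x, y) - cost(down, x, y)) / (2 * h))
    return grads

params = [0.5, -0.3, 0.8, 0.1]  # w1, b1, w2, b2 (arbitrary starting values)
x, y = 1.5, 1.0
analytic = backward(params, x, y)
numeric = numerical_gradient(params, x, y)

# Gradient descent using the backpropagated gradients
initial_cost = cost(params, x, y)
for _ in range(200):
    grads = backward(params, x, y)
    params = [p - 0.1 * g for p, g in zip(params, grads)]
final_cost = cost(params, x, y)
```

The `analytic` and `numeric` gradients should agree closely, and `final_cost` should be far smaller than `initial_cost`. Real frameworks automate the backward pass, but the underlying chain-rule bookkeeping is the same idea.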

4. Perceptron model

4.1. A perceptron is the simplest type of artificial neural network: a single artificial neuron (or a single layer of such neurons) that maps its inputs directly to an output

4.2. It is a linear classifier that can be used for binary classification tasks

4.3. The perceptron takes input features, multiplies them by their corresponding weights, sums the results, and applies an activation function to produce an output

4.3.1. There is also another value known as the bias, which is added to the weighted sum before the activation function is applied

4.3.1.1. The bias is an additional input to the perceptron that allows it to learn decision boundaries that don't pass through the origin (0, 0) in the input space

4.3.1.1.1. An absence of a bias limits the expressiveness of the model, making it unable to learn more complex decision boundaries

4.4. The output of the perceptron is a binary value that indicates the predicted class label of the input data

4.5. During training, the perceptron updates its weights based on the errors in the predictions it makes

4.6. The training algorithm used by the perceptron is called the perceptron learning rule, which involves adjusting the weights based on the difference between the predicted output and the actual output

4.7. The perceptron learning rule is a form of supervised learning, where the model learns from labeled examples

4.8. The perceptron is a foundational concept in neural network theory, and has paved the way for the development of more complex neural network architectures
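
A minimal sketch of the perceptron and its learning rule follows, trained on the logical AND function. The AND data set, the step activation with a threshold at zero, the learning rate, and the number of epochs are all assumptions chosen for illustration.

```python
def predict(weights, bias, inputs):
    """Weighted sum plus bias, followed by a step activation (binary output)."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if z > 0 else 0

def train_perceptron(samples, learning_rate=0.1, epochs=20):
    """Perceptron learning rule: nudge weights by (target - prediction) * input."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)  # -1, 0, or +1
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias

# Labeled examples for logical AND (linearly separable, so a perceptron can learn it)
and_gate = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
weights, bias = train_perceptron(and_gate)
predictions = [predict(weights, bias, inputs) for inputs, _ in and_gate]
```

After training, `predictions` matches the AND targets `[0, 0, 0, 1]`. The learned bias ends up negative, which shifts the decision boundary away from the origin as described in 4.3.1.1.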

5. Biological neuron

5.1. Simplified way to visualise a neuron

5.1.1. In this diagram, the cell body (which contains the nucleus) is drawn as the body of the neuron, while the dendrites are shown as lines branching out from the body

5.1.1.1. Think of the cell body as performing some function on the dendrite inputs, and the output of that function flows out via the axon

5.1.2. The single axon output is depicted as a single line extending downward from the body of the neuron

5.1.3. This is a highly simplified representation of a neuron, but it captures the basic idea of how signals flow through a neuron: inputs arrive at the dendrites and are integrated in the body, and then the output is transmitted through the axon to other neurons

6. Neural networks

6.1. A single neuron (same concept as a perceptron) cannot be used to solve complex problems, so we need to combine neurons via interconnected layers, to form a network of neurons

6.1.1. Multi-layer, fully connected neural network

6.2. Each layer of a neural network has a "width", which refers to the number of neurons in the layer

6.3. A deep neural network is commonly defined as one that has two or more hidden layers between the input and output layers
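
The ideas of layers, width, and depth can be sketched as a forward pass through a small fully connected network. The layer widths, the random initial weights, and the use of ReLU at every layer (including the output, purely for simplicity) are assumptions for illustration.

```python
import random

def make_layer(n_inputs, width, rng):
    """One fully connected layer: `width` neurons, each with n_inputs weights and a bias."""
    return [([rng.uniform(-1, 1) for _ in range(n_inputs)], rng.uniform(-1, 1))
            for _ in range(width)]

def relu(z):
    return max(0.0, z)

def forward(network, inputs):
    """Feed the inputs through each layer in turn; every neuron sees all previous outputs."""
    activations = inputs
    for layer in network:
        activations = [relu(sum(w * a for w, a in zip(weights, activations)) + bias)
                       for weights, bias in layer]
    return activations

rng = random.Random(0)       # fixed seed so the sketch is repeatable
layer_widths = [3, 4, 4, 2]  # input width, two hidden layers of width 4, output width 2
network = [make_layer(n_in, width, rng)
           for n_in, width in zip(layer_widths[:-1], layer_widths[1:])]
outputs = forward(network, [0.5, -1.2, 0.3])
```

With two hidden layers, this network would count as "deep" under the definition in 6.3. The weights are untrained, so the output values themselves are not meaningful.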

7. Activation functions

7.1. An activation function is a mathematical function that is applied to the weighted sum computed by a neuron in a neural network to produce that neuron's output

7.1.1. We can think of this as something that happens inside the neuron nucleus

7.2. It serves as a non-linear transformation that allows the network to model complex relationships between the input and output data

7.3. The neuron computes the weighted sum of its inputs and adds a bias term; the activation function then applies a non-linear transformation to this result to produce the output

7.3.1. For a single input, we can think of the weighted sum plus bias as: x*w+b

7.3.1.1. The bias (b) can be thought of as setting a threshold: the product of the input (x) and weight (w) needs to exceed -b (the negative of the bias) before it has an effect

7.3.1.1.1. For example, with a step or ReLU activation, if bias (b) is set to -10, the weighted input (x*w) won't have any effect until it exceeds 10

7.3.1.2. But the activation function (f) itself does not perform x*w+b, it just takes the result (z = x*w+b) as its input, so an activation function can be expressed as: f(z)

7.3.2. The output of the activation function is then passed to the next layer of the network as input
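
The bias-as-threshold example can be checked with a short sketch. It assumes a single input, a weight of 2.0, and a step activation that fires only when z is greater than zero; these choices are illustrative.

```python
def step(z):
    """Step activation: outputs 1 only when z is strictly positive."""
    return 1 if z > 0 else 0

def neuron(x, w, b, activation):
    """Compute z = x*w + b, then pass z to the activation function f(z)."""
    z = x * w + b
    return activation(z)

# With w = 2.0 the weighted inputs x*w are 2, 8, 10 and 12; the bias is -10
outputs = [neuron(x, 2.0, -10.0, step) for x in [1.0, 4.0, 5.0, 6.0]]
```

Here `outputs` is `[0, 0, 0, 1]`: only the last input produces a weighted input (12) that exceeds 10, so only that one gets past the bias.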

7.4. The choice of activation function can have a significant impact on the performance of the neural network

7.4.1. Sigmoid function

7.4.1.1. This function maps the output to a value between 0 and 1, which can be interpreted as a probability

7.4.1.2. It is often used in the output layer of binary classification problems

7.4.2. Tanh (Hyperbolic Tangent) function

7.4.2.1. This function maps the output to a value between -1 and 1, so it is centred on zero and can produce negative as well as positive outputs

7.4.3. ReLU (Rectified Linear Unit) function

7.4.3.1. This function sets any negative values in the output to zero, while leaving positive values unchanged

7.4.3.2. It is commonly used in the hidden layers of neural networks and has been shown to work well in practice

7.4.4. Softmax function

7.4.4.1. This function maps the output to a probability distribution over multiple classes, which is useful in multi-class classification problems

7.4.4.1.1. Each multi-class output gets a probability between 0 and 1 and the sum of all multi-class output probabilities is 1
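
The four activation functions listed above can be written in a few lines each. This is a sketch using only the standard library; subtracting the maximum inside `softmax` is a common numerical-stability trick and an addition to the notes, not something they describe.

```python
import math

def sigmoid(z):
    """Squashes z into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def tanh(z):
    """Squashes z into the range (-1, 1)."""
    return math.tanh(z)

def relu(z):
    """Zero for negative z, unchanged for positive z."""
    return max(0.0, z)

def softmax(zs):
    """Turns a list of scores into probabilities that sum to 1."""
    shift = max(zs)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(z - shift) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]

probabilities = softmax([2.0, 1.0, 0.1])
```

The largest score receives the largest probability, and the entries of `probabilities` sum to 1 (up to floating-point rounding).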

8. Multi-class classification

8.1. Multi-class classification in neural networks is a type of machine learning problem where the goal is to classify input data into one of several possible classes

8.1.1. In this problem, the input data is typically represented as a vector of features, and the goal is to predict the class label of the input data based on these features

8.2. In a neural network architecture designed for multi-class classification, the output layer has multiple neurons, where each neuron corresponds to a different class label

8.2.1. The output of each neuron represents the probability that the input data belongs to that class

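8.2.1.1. There are 2 main types of multi-class problem:

8.2.1.1.1. Non-exclusive classes (an input can belong to several classes at once, also known as multi-label classification)

8.2.1.1.2. Mutually exclusive classes (each input belongs to exactly one class)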
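
The difference between the two types shows up in how the output probabilities are turned into a prediction. The sketch below assumes that mutually exclusive classes are decided by picking the most probable class, and that non-exclusive classes are decided by thresholding each output independently at 0.5; the function names, labels, and threshold are illustrative.

```python
def predict_exclusive(probabilities, labels):
    """Mutually exclusive classes: return the single most probable label."""
    best = max(range(len(labels)), key=lambda i: probabilities[i])
    return labels[best]

def predict_non_exclusive(probabilities, labels, threshold=0.5):
    """Non-exclusive classes: return every label whose probability passes the threshold."""
    return [label for label, p in zip(labels, probabilities) if p >= threshold]

labels = ["A", "B", "C"]
single = predict_exclusive([0.1, 0.7, 0.2], labels)        # probabilities sum to 1
several = predict_non_exclusive([0.9, 0.2, 0.6], labels)   # probabilities are independent
```

Here `single` is `"B"` and `several` is `["A", "C"]`. Note that in the non-exclusive case the output probabilities do not need to sum to 1.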

8.3. There are several approaches to multi-class classification in neural networks, including one-vs-all (OvA), softmax regression, and hierarchical classification

8.3.1. The choice of approach depends on the specific problem and the number of classes involved

8.4. Multi-class classification is an important task in many fields, such as image recognition, natural language processing, and speech recognition

8.5. One-hot encoding

8.5.1. One-hot encoding is a technique used in multi-class classification problems to represent categorical variables as binary vectors

8.5.1.1. In the context of neural networks, it is used to represent the class labels of the input data

8.5.2. In one-hot encoding, each class label is represented as a binary vector where all elements are zero, except for the element corresponding to the class label, which is set to one

8.5.2.1. For example, if there are three classes (A, B, and C), then class A can be represented as [1, 0, 0], class B can be represented as [0, 1, 0], and class C can be represented as [0, 0, 1]

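8.5.2.1.1. For non-exclusive classes, an input belonging to both A and C can be represented as [1, 0, 1] (strictly a multi-hot rather than one-hot vector, since more than one element is set to one)

8.5.3. One-hot encoded variables are also referred to as dummy variables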
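
The encoding in the A, B, C example can be reproduced with a short sketch. The helper names `one_hot` and `multi_hot` are hypothetical and chosen only for this illustration.

```python
def one_hot(label, classes):
    """Binary vector with a single 1 at the position of `label`."""
    return [1 if c == label else 0 for c in classes]

def multi_hot(labels, classes):
    """Binary vector with a 1 for every class present in `labels` (non-exclusive case)."""
    return [1 if c in labels else 0 for c in classes]

classes = ["A", "B", "C"]
encoded_a = one_hot("A", classes)
encoded_b = one_hot("B", classes)
encoded_c = one_hot("C", classes)
encoded_a_and_c = multi_hot({"A", "C"}, classes)
```

This yields `[1, 0, 0]`, `[0, 1, 0]`, and `[0, 0, 1]` for the single classes, and `[1, 0, 1]` for the combination of A and C.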