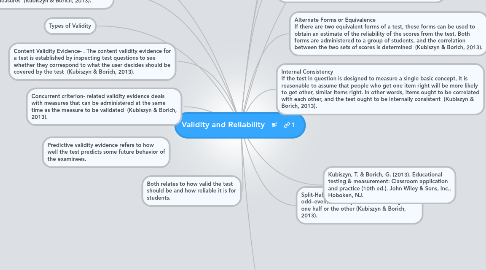

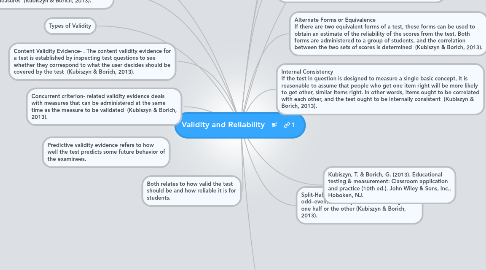

Validity and Reliability

by Kemberlyn Rickett

1. A test has validity evidence if we can demonstrate that it measures what it says it measures (Kubiszyn & Borich, 2013).

2. Concurrent criterion-related validity evidence deals with measures that can be administered at the same time as the measure to be validated (Kubiszyn & Borich, 2013).

3. Validity

4. Types of Validity

5. Content validity evidence for a test is established by inspecting the test questions to see whether they correspond to what the user decides should be covered by the test (Kubiszyn & Borich, 2013).

6. Predictive validity evidence refers to how well the test predicts some future behavior of the examinees.

7. Both concepts relate to how valid the test should be and how reliable it is for students.

8. Reliability
