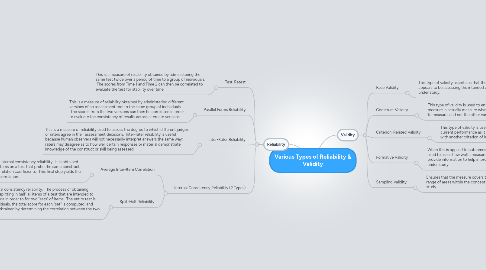

1. Reliability

1.1. Test-Retest

1.1.1. This is a measure of reliability obtained by administering the same test twice over a period of time to a group of individuals. The scores from Time 1 and Time 2 can then be correlated to evaluate the test for stability over time.

1.2. Parallel Forms Reliability

1.2.1. This is a measure of reliability obtained by administering different versions of an assessment tool to the same group of individuals. The scores from the two versions can then be correlated in order to evaluate the consistency of results across alternate versions.

1.3. Inter-Rater Reliability

1.3.1. This is a measure of reliability used to assess the degree to which different judges or raters agree in their assessment decisions. Inter-rater reliability is useful because human observers will not necessarily interpret answers the same way; raters may disagree as to how well certain responses or material demonstrate knowledge of the construct or skill being assessed.
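One simple way to quantify rater agreement is sketched below. The ratings are invented, and the two statistics shown (raw percent agreement and Cohen's kappa, which corrects for chance agreement) are common choices assumed here for illustration; the notes do not name a specific statistic.

```python
from collections import Counter

# Hypothetical ratings from two raters on the same eight responses.
rater_a = ["pass", "pass", "fail", "pass", "fail", "pass", "fail", "pass"]
rater_b = ["pass", "fail", "fail", "pass", "fail", "pass", "pass", "pass"]

n = len(rater_a)

# Observed agreement: share of responses both raters scored identically.
observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n

# Agreement expected by chance, from each rater's marginal category counts.
counts_a = Counter(rater_a)
counts_b = Counter(rater_b)
categories = set(counts_a) | set(counts_b)
expected = sum(counts_a[c] * counts_b[c] for c in categories) / n**2

# Cohen's kappa: agreement beyond chance, scaled so that 1 is perfect agreement.
kappa = (observed - expected) / (1 - expected)

print(f"percent agreement = {observed:.2f}, kappa = {kappa:.3f}")
```

Here the raters agree on 6 of 8 responses, but because both rate "pass" most of the time, a good part of that agreement could arise by chance, which is why kappa comes out noticeably lower than the raw agreement.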

1.4. Internal Consistency Reliability (2 Types)

1.4.1. Average Inter-Item Correlation

1.4.1.1. This is a subtype of internal consistency reliability. It is obtained by taking all of the items on a test that probe the same construct, determining the correlation coefficient for each pair of items, and then averaging all of these coefficients. This final step yields the average inter-item correlation.

1.4.2. Split-Half Reliability

1.4.2.1. This is another subtype of internal consistency reliability. The process of obtaining split-half reliability begins by "splitting in half" all items of a test that are intended to probe the same area of knowledge in order to form two "sets" of items. The entire test is administered to a group of individuals, the total score for each "set" is computed, and finally the split-half reliability is obtained by determining the correlation between the two total "set" scores.

2. Validity

2.1. Face Validity

2.1.1. This type of validity ascertains that the measure appears to be assessing the intended construct under study.

2.2. Construct Validity

2.2.1. This type of validity is used to ensure that the measure is actually measuring what it is intended to measure, and not other variables.

2.3. Criterion-Related Validity

2.3.1. This type of validity is used to predict future or current performance; it correlates test results with another criterion of interest.

2.4. Formative Validity

2.4.1. When this is applied to outcomes assessment, it is used to assess how well a measure is able to provide information to help improve the program under study.

2.5. Sampling Validity

2.5.1. This type of validity ensures that the measure covers the broad range of areas within the concept under study.