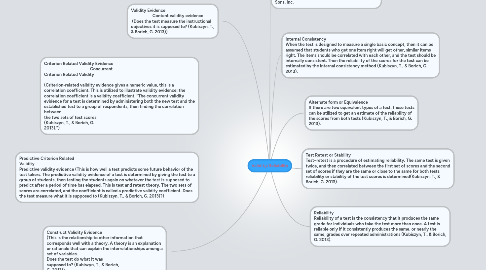

Validity/Reliability

by Eleanor Gonyea

1. Validity Evidence Content validity evidence (Does the test measure the instructional objectives it is supposed to measure? (Kubiszyn & Borich, 2013))

2. Criterion-Related Validity Evidence Concurrent Criterion-Related Validity (Criterion-related validity evidence yields a numeric value: a correlation coefficient. When it is used as validity evidence, this correlation coefficient is called a validity coefficient. “The concurrent validity evidence for a test is determined by administering both the new test and the established test to a group of respondents, then finding the correlation between the two sets of test scores” (Kubiszyn & Borich, 2013).)
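As a rough illustration of the correlation step described above, the sketch below computes a Pearson correlation between scores on a new test and an established test. The function name `pearson_r` and the score lists are hypothetical and only show the arithmetic; the source does not specify which correlation formula to use, so Pearson's r is an assumption here.

```python
import math

def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Deviations of each score from its own test's mean.
    dev_x = [a - mean_x for a in x]
    dev_y = [b - mean_y for b in y]
    cross = sum(a * b for a, b in zip(dev_x, dev_y))
    ss_x = sum(a * a for a in dev_x)
    ss_y = sum(b * b for b in dev_y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("scores on each test must vary")
    return cross / math.sqrt(ss_x * ss_y)

# Made-up scores for the same five respondents on both tests.
new_test = [72, 85, 90, 65, 78]
established_test = [70, 88, 93, 60, 75]

validity_coefficient = pearson_r(new_test, established_test)
print(round(validity_coefficient, 2))
```

A coefficient near 1 would suggest that the new test ranks respondents much as the established test does; a value near 0 would suggest little concurrent validity evidence.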

3. Predictive Criterion-Related Validity Predictive validity evidence (This is how well a test predicts some future behavior of the test takers. The predictive validity evidence of a test is determined by giving the test to a group of students, then, after a period of time has elapsed, measuring the students on whatever the test is supposed to predict. Although two measurements are taken at different times, this is not the test–retest procedure, because the second measurement is the criterion rather than a repeat of the same test. The two sets of scores are correlated, and the resulting coefficient is called a predictive validity coefficient. Does the test measure what it is supposed to? (Kubiszyn & Borich, 2013))

4. Construct Validity Evidence (A test has construct validity evidence if its relationship to other information corresponds well with a theory. A theory is an explanation or rationale that accounts for the interrelationships among a set of variables. Does the test do what it was supposed to? (Kubiszyn & Borich, 2013))

5. Reliability The reliability of a test is the consistency with which it produces the same grade for individuals who take the test more than once. A test is reliable only if it consistently produces the same, or nearly the same, grades over repeated administrations (Kubiszyn & Borich, 2013).

6. Test–Retest or Stability Test–retest is a procedure for estimating reliability. The same test is given twice to the same group, and the first set of scores is correlated with the second set. If the two sets of scores are the same or nearly the same, the correlation is high, and it serves as the estimate of the reliability, or stability, of the test scores (Kubiszyn & Borich, 2013).
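A minimal sketch of the test–retest idea, assuming scores are stored per student so that only students who sat both administrations are compared. The function name `retest_reliability`, the student names, and the scores are all hypothetical, and Pearson's r is an assumed choice of correlation.

```python
import math

def retest_reliability(first, second):
    """Correlate scores of students who appear in both administrations.

    `first` and `second` map a student id to that student's score.
    """
    students = sorted(set(first) & set(second))
    if len(students) < 2:
        raise ValueError("need at least 2 students who took the test twice")
    x = [first[s] for s in students]
    y = [second[s] for s in students]
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("scores must vary within each administration")
    return cross / math.sqrt(ss_x * ss_y)

# Made-up data: "eli" missed the first administration and is left out.
first_administration = {"ana": 80, "ben": 65, "cy": 92, "dee": 74}
second_administration = {"ana": 82, "ben": 63, "cy": 90, "dee": 77, "eli": 70}

stability = retest_reliability(first_administration, second_administration)
print(round(stability, 2))
```

Matching on student id before correlating is a design choice of this sketch: the coefficient is only meaningful when each pair of scores belongs to the same person.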

7. Alternate Forms or Equivalence If there are two equivalent forms of a test, both forms can be administered to the same group, and the correlation between the two sets of scores gives an estimate of the reliability of the scores from both forms (Kubiszyn & Borich, 2013).

8. Internal Consistency When a test is designed to measure a single basic concept, it can be assumed that students who get one item right will tend to get other, similar items right. The items should then be correlated with each other, and the test should be internally consistent. In that case, the reliability of the test scores can be estimated by the internal consistency method (Kubiszyn & Borich, 2013).
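One common internal consistency estimate is Cronbach's alpha, sketched below; the source does not name a specific formula, so choosing alpha is an assumption, as are the function name `cronbach_alpha` and the made-up right/wrong item data. With items scored 0 or 1, as here, this calculation matches the KR-20 formula.

```python
from statistics import pvariance

def cronbach_alpha(item_scores):
    """Cronbach's alpha for a table with one row per student, one column per item."""
    k = len(item_scores[0])
    if k < 2 or len(item_scores) < 2:
        raise ValueError("need at least 2 items and 2 students")
    # Transpose rows (students) into columns (items).
    columns = list(zip(*item_scores))
    item_variance_sum = sum(pvariance(col) for col in columns)
    total_variance = pvariance([sum(row) for row in item_scores])
    if total_variance == 0:
        raise ValueError("total scores must vary across students")
    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)

# Made-up data: 5 students, 4 items, 1 = right, 0 = wrong.
responses = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]

alpha = cronbach_alpha(responses)
print(round(alpha, 2))  # 0.8 for this made-up data
```

The more the items rise and fall together across students, the larger the total-score variance is relative to the summed item variances, and the closer alpha gets to 1.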

9. Kubiszyn, T., & Borich, G. (2013). Educational testing and measurement: Classroom application and practice (10th ed.). Danvers, MA: John Wiley & Sons, Inc.