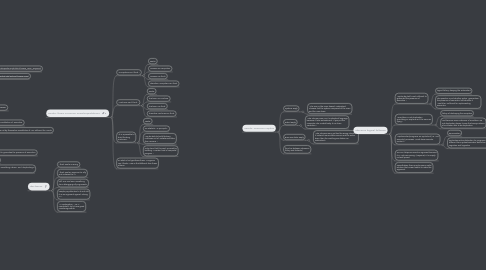

1. Searle: three common misinterpretations

1.1. "Computers can't think."

1.1.1. Searle:

1.1.2. Humans are computers.

1.1.3. Humans can think.

1.1.4. Therefore, computers can think.

1.2. "Machines can't think."

1.2.1. Searle:

1.2.2. The brain is a machine.

1.2.3. The brain can think.

1.2.4. Therefore machines can think.

1.3. "It is impossible to build thinking machines."

1.3.1. Searle:

1.3.2. No obstacle "in principle".

1.3.3. May be able to build thinking machines out of "substances other than neurons."

1.3.4. His only claim is that this can't be achieved by building "a certain kind of computer program."

1.4. So what is his hypothesis then? "Programs can't think?" How is that different from these others?

2. Statement of the argument

2.1. John Searle, Scholarpedia

2.1.1. http://www.scholarpedia.org/article/Chinese_room_argument

2.1.2. http://plato.stanford.edu/entries/chinese-room/

2.2. 60 Second Chinese Room

2.3. Premise 1: Implemented programs are syntactical processes.

2.4. Premise 2: Minds have semantic content.

2.5. Premise 3: Syntax by itself is neither sufficient for, nor constitutive of, semantics.

2.6. Conclusion: Therefore, the implemented programs are not by themselves constitutive of, nor sufficient for, minds.

2.7. "In short, Strong Artificial Intelligence is false."

2.8. Principle 1

2.8.1. Syntax is not semantics.

2.8.2. Syntax by itself is not sufficient to guarantee the presence of semantics.

2.9. Principle 2

2.9.1. Simulation is not duplication.

2.9.2. There is a difference between "simulating a brain" and "duplicating a brain".

3. Searle: common replies

3.1. Systems Reply

3.1.1. "The man in the room doesn't understand Chinese, but the system composed of the room and the man does."

3.2. Robot Reply

3.2.1. "The Chinese room can't understand language because it lacks embodiment. If you put the computer into a robot body, it can learn semantics."

3.3. Brain Simulator Reply

3.3.1. "The Chinese room just has the wrong structure. If, instead, we simulate neurons and the entire brain, then the resulting simulation can understand."

3.4. (fine line between strawman fallacy and dialectic)

4. Obvious logical fallacies

4.1. "Syntax by itself is not sufficient to guarantee the presence of semantics."

4.1.1. logical fallacy: denying the antecedent

4.1.2. The question is not whether syntax "guarantees" the presence of semantics, but whether it "could be" sufficient for implementing semantics.
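One way to make the distinction in 4.1.2 explicit, offered only as a sketch: let $\mathrm{Syn}(p)$ mean "process $p$ is purely syntactic" and $\mathrm{Sem}(p)$ mean "process $p$ has semantic content." Both predicate names are introduced here for illustration and do not come from Searle. The weak claim (syntax does not guarantee semantics) does not entail the strong claim (no syntactic process has semantics):

$$
\neg\,\forall p\,\bigl(\mathrm{Syn}(p)\rightarrow\mathrm{Sem}(p)\bigr)
\;\;\not\Rightarrow\;\;
\forall p\,\bigl(\mathrm{Syn}(p)\rightarrow\neg\,\mathrm{Sem}(p)\bigr)
$$

For comparison, the general schema of denying the antecedent is the invalid inference

$$
P \rightarrow Q,\;\; \neg P \;\;\nvdash\;\; \neg Q .
$$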

4.2. "Simulation is not duplication. Simulating an airplane isn't the same as flying."

4.2.1. fallacy of destroying the exception

4.2.2. Just because some instances of simulation are not duplication doesn't mean that every instance of simulation fails to be duplication.

4.3. "Implemented programs are syntactical (i.e., not semantic) processes. Minds have semantic content."

4.3.1. equivocation

4.3.2. syntax/semantics distinction for programs is different from syntax/semantics distinction in cognition and linguistics

4.4. You can't disprove Searle's argument because it is "not even wrong" (a phrase usually attributed to Pauli, not Feynman); it is simply not well posed.

4.5. Nevertheless, there may be some useful intuition that caused Searle to make this argument.

5. NLP Reply

5.1. Think about a Searle-like implementation

5.1.1. incoming strings are pattern-matched against a catalog of patterns, and an appropriate reply is selected

5.2. would this work?

5.2.1. metaphor

5.2.1.1. "Prices are increasing."

5.2.1.2. "Prices are rising."

5.2.1.3. "Prices are like a moon rocket."

5.2.1.4. "Prices are like a Concorde taking off."

5.2.1.5. "Prices are like a helium balloon cut loose."

5.2.2. You can't understand language without "semantics"; you need a model of the world.
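The catalog approach from 5.1.1 can be sketched in a few lines to see where it breaks. This is a minimal illustration, not a description of any real system: the `CATALOG` entries, the canned replies, and the `reply` function are all hypothetical. It handles the two literal sentences but falls through on every metaphor, because nothing in the catalog relates rockets, Concordes, or balloons to "going up."

```python
import re

# Hypothetical catalog of (pattern, canned reply) pairs; entries are illustrative only.
CATALOG = [
    (re.compile(r"^prices are (increasing|rising)\.?$", re.IGNORECASE),
     "Yes, things are getting more expensive."),
    (re.compile(r"^prices are (decreasing|falling)\.?$", re.IGNORECASE),
     "Yes, things are getting cheaper."),
]

FALLBACK = "I do not understand."


def reply(sentence: str) -> str:
    """Return the reply of the first catalog pattern that matches, else FALLBACK."""
    text = sentence.strip()
    for pattern, answer in CATALOG:
        if pattern.match(text):
            return answer
    return FALLBACK


if __name__ == "__main__":
    for s in [
        "Prices are increasing.",
        "Prices are rising.",
        "Prices are like a moon rocket.",
        "Prices are like a Concorde taking off.",
        "Prices are like a helium balloon cut loose.",
    ]:
        print(f"{s!r} -> {reply(s)!r}")
```

Adding one pattern per metaphor does not scale, since new metaphors can be coined freely; covering them seems to require knowing what rockets and balloons do, which is the world model mentioned in 5.2.2.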

5.3. Searle is right

5.3.1. You need (linguistic) semantics; (linguistic) syntax is not enough.

5.4. Searle is wrong

5.4.1. Linguistic semantics can be implemented via syntax, since arbitrary computations can be implemented via syntax (cf. Church's lambda calculus).
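As a small illustration of computation carried out by pure symbol manipulation, here is a sketch of Church numerals from the lambda calculus, written with Python lambdas. The names `zero`, `succ`, `add`, `mul`, and `to_int` are chosen here for illustration. Numbers are represented only as nested function applications; `to_int` exists solely so that a reader can inspect the result.

```python
# Church numerals: the number n is "apply f to x, n times".
zero = lambda f: lambda x: x
succ = lambda n: lambda f: lambda x: f(n(f)(x))

# Arithmetic defined purely by function application, with no built-in numbers.
add = lambda m: lambda n: lambda f: lambda x: m(f)(n(f)(x))
mul = lambda m: lambda n: lambda f: m(n(f))


def to_int(n):
    """Decode a Church numeral into a Python int, for inspection only."""
    return n(lambda k: k + 1)(0)


two = succ(succ(zero))
three = succ(two)

if __name__ == "__main__":
    print(to_int(add(two)(three)))
    print(to_int(mul(two)(three)))
```

This shows only that arithmetic can be encoded syntactically; whether the same holds for linguistic semantics is the claim made in 5.4.1, not something the sketch establishes.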

5.5. Causes

5.5.1. When Searle came up with his argument, AI consisted of rule-based expert systems and non-statistical NLP.

5.6. Lessons learned?

5.6.1. AI and NLP probably require some pretty good internal modeling of the real world.

5.6.2. "Imagine putting a football on top of a brick and tapping the football in different places; what might happen?" "Imagine putting a hungry cat and a hungry weasel into a cage. What happens?"