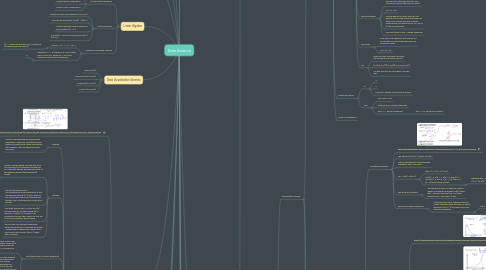

1. NLP

1.1. Text preparation

1.1.1. Remove punctuation

1.1.2. Lower case

1.1.3. Tokenize words

1.1.4. Remove stop words

1.1.5. Remove blanks

1.1.6. Remove single letter words

1.1.7. Remove/translate non-English words

1.1.8. Stemming/Lemmatization

1.1.8.1. Snowball ()
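The preparation steps above can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not a toolkit implementation: the stop-word list is a tiny hypothetical one, tokenization is a simple whitespace split, and the non-English and stemming steps are left out (in NLTK, Snowball stemming is available via `nltk.stem.SnowballStemmer("english")`).

```python
import re
import string

# Hypothetical, deliberately tiny stop-word list for illustration only;
# real projects would use a toolkit's list (e.g. from NLTK or spaCy).
STOP_WORDS = {"the", "a", "an", "and", "is", "of", "to", "in"}


def prepare_text(text):
    """Apply the preparation steps in order and return a list of tokens."""
    # Remove punctuation (ASCII punctuation only)
    text = text.translate(str.maketrans("", "", string.punctuation))
    # Lower case
    text = text.lower()
    # Tokenize: split on every single whitespace character, so runs of
    # spaces produce empty "blank" tokens
    tokens = re.split(r"\s", text)
    # Remove stop words
    tokens = [t for t in tokens if t not in STOP_WORDS]
    # Remove blanks
    tokens = [t for t in tokens if t]
    # Remove single-letter words
    tokens = [t for t in tokens if len(t) > 1]
    return tokens


print(prepare_text("The cat, the  dog and I went to Paris!"))
```

The order matters: punctuation is stripped before tokenizing so that "cat," and "cat" become the same token.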

1.2. Tool-kits

1.2.1. NLTK (Python)

1.2.2. spaCy (Python)

1.3. Text classification

1.3.1. 1. Prepare text data (see text preparation)

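1.3.2. 2. CountVectorize the text into a matrix with one row per document and one column per feature (word), holding the word counts

1.3.3. 3. Apply TF-IDF (Term Frequency-Inverse Document Frequency) weighting, so that words that appear in many documents count for less and (with normalization) documents of different lengths are comparable

1.3.4. 4. Split the data set into features X (the vectorized text) and target y (the category label of each text)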

1.3.5. 5. Deploy standard ML classification process (model, evaluate, iterate/tune)
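Steps 2 to 4 above can be sketched in plain Python as below. This uses one common TF-IDF variant (tf = count / document length, idf = log(N / document frequency)); the documents and labels are hypothetical and assumed to be already prepared. In practice scikit-learn's `CountVectorizer` and `TfidfVectorizer` do this; `TfidfVectorizer` uses a smoothed idf and L2 row normalization by default, so its numbers differ from this sketch.

```python
import math
from collections import Counter


def count_vectorize(docs):
    """Step 2: vocabulary plus a document-term count matrix (one row per doc)."""
    vocab = sorted({word for doc in docs for word in doc})
    counts = [Counter(doc) for doc in docs]
    matrix = [[c[word] for word in vocab] for c in counts]
    return vocab, matrix


def tfidf(matrix):
    """Step 3: tf = count / document length, idf = log(N / document frequency)."""
    n_docs = len(matrix)
    n_terms = len(matrix[0])
    doc_freq = [sum(1 for row in matrix if row[j] > 0) for j in range(n_terms)]
    idf = [math.log(n_docs / doc_freq[j]) for j in range(n_terms)]
    weighted = []
    for row in matrix:
        length = sum(row)
        weighted.append([
            (count / length) * idf[j] if length else 0.0
            for j, count in enumerate(row)
        ])
    return weighted


# Hypothetical, already-prepared documents and their category labels
docs = [["cat", "sat", "mat"], ["dog", "sat"], ["cat", "cat", "dog"]]
labels = ["pets", "farm", "pets"]

vocab, counts = count_vectorize(docs)
X = tfidf(counts)  # Step 4: features (vectorized text) ...
y = labels         # ... and target (category label)
```

Here "mat" appears in only one document, so in the first document it gets a higher weight than "cat", which appears in two.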

1.4. Topic modelling

1.5. Entity recognition

2. Network Analysis

2.1. Metrics

2.1.1. Centrality

2.1.2. Betweenness

2.2. Data format

2.2.1. Node_df = NAME, NODE_ATTRIBUTE_1, ..., NODE_ATTRIBUTE_N; Relation_df = FROM, TO, EDGE_ATTRIBUTE_1, ..., EDGE_ATTRIBUTE_N
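As a sketch of how the metrics above can be computed from data in this Node_df / Relation_df layout, the plain-Python code below builds an undirected adjacency list, then computes degree centrality (one common definition of centrality) and unnormalized betweenness with Brandes' algorithm. The data is hypothetical, and edge attributes are ignored (the graph is treated as unweighted). In practice networkx offers `degree_centrality` and `betweenness_centrality` (the latter normalizes by default).

```python
from collections import deque

# Hypothetical data in the Node_df / Relation_df layout
node_df = [{"NAME": "A"}, {"NAME": "B"}, {"NAME": "C"}, {"NAME": "D"}]
relation_df = [
    {"FROM": "A", "TO": "B"},
    {"FROM": "B", "TO": "C"},
    {"FROM": "C", "TO": "D"},
]

# Undirected adjacency list: node name -> set of neighbour names
adj = {row["NAME"]: set() for row in node_df}
for rel in relation_df:
    adj[rel["FROM"]].add(rel["TO"])
    adj[rel["TO"]].add(rel["FROM"])


def degree_centrality(adj):
    """Fraction of the other nodes each node is directly connected to."""
    n = len(adj)
    return {v: len(neigh) / (n - 1) for v, neigh in adj.items()}


def betweenness_centrality(adj):
    """Unnormalized betweenness (Brandes' algorithm, unweighted, undirected)."""
    bc = {v: 0.0 for v in adj}
    for s in adj:
        # BFS from s, counting shortest paths (sigma) and recording predecessors
        stack, preds = [], {v: [] for v in adj}
        sigma = {v: 0 for v in adj}
        dist = {v: -1 for v in adj}
        sigma[s], dist[s] = 1, 0
        queue = deque([s])
        while queue:
            v = queue.popleft()
            stack.append(v)
            for w in adj[v]:
                if dist[w] < 0:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        # Accumulate dependencies in order of decreasing distance from s
        delta = {v: 0.0 for v in adj}
        while stack:
            w = stack.pop()
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1 + delta[w])
            if w != s:
                bc[w] += delta[w]
    # Each undirected pair was counted once from each end
    return {v: b / 2 for v, b in bc.items()}


print(degree_centrality(adj))
print(betweenness_centrality(adj))
```

On the path A-B-C-D, the two middle nodes lie on every shortest path between the ends, so they get the highest betweenness.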

3. Front-End (Web application tools)

3.1. Flask (python)

3.2. Shiny (R)

3.3. Dash (Python)

3.4. Tableau

3.5. Carto

3.6. Angular/React (JS)

4. Linear Algebra

4.1. Vector/Matrix operations

4.1.1. Matrix/Matrix Addition

4.1.2. Matrix/Matrix Multiplication

4.1.3. Matrix/Vector Multiplication

4.2. Matrix properties

4.2.1. Matrix multiplication is not commutative (in general A*B != B*A)

4.2.2. Matrix multiplication is associative ((A*B)*C = A*(B*C))

4.2.3. Multiplication by the identity matrix is commutative (A*I = I*A = A)

4.2.4. SHAPE(M) is ALWAYS given as Rows, Columns (R, C), e.g. (2, 3) is 2 rows by 3 columns

4.3. Inverse & Transposed Matrices

4.3.1. Inverse: A*A^-1 = A^-1*A = I

4.3.1.1. (A^-1 is the inverse matrix of A, though not all matrices have an inverse)

4.3.2. Transpose: A -> AT, where A is an m*n matrix and AT is the n*m matrix with ATji = Aij. Basically, the first column of A becomes the first row of AT.

4.3.2.1. X
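The operations and properties above can be checked with a small plain-Python sketch, representing a matrix as a list of rows. The matrices are hypothetical examples, and `inverse_2x2` only handles the 2 x 2 case (larger inverses would normally come from a library such as NumPy).

```python
def shape(A):
    """(rows, columns)."""
    return (len(A), len(A[0]))


def mat_add(A, B):
    assert shape(A) == shape(B), "addition needs matrices of the same shape"
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def mat_mul(A, B):
    """(m x n) times (n x p) -> (m x p)."""
    assert shape(A)[1] == shape(B)[0], "inner dimensions must match"
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]


def mat_vec(A, v):
    """(m x n) matrix times an n-vector -> m-vector."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]


def identity(n):
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]


def transpose(A):
    # Row i of A becomes column i of the result
    return [list(col) for col in zip(*A)]


def inverse_2x2(A):
    """Inverse of a 2 x 2 matrix; fails when the determinant is 0 (no inverse)."""
    (a, b), (c, d) = A
    det = a * d - b * c
    if det == 0:
        raise ValueError("matrix is singular (has no inverse)")
    return [[d / det, -b / det], [-c / det, a / det]]


A = [[1, 2], [3, 4]]
B = [[0, 1], [1, 0]]
C = [[1, 2, 3], [4, 5, 6]]

print(mat_mul(A, B), mat_mul(B, A))  # different results: not commutative
print(mat_mul(mat_mul(A, B), C) == mat_mul(A, mat_mul(B, C)))  # associative
print(mat_mul(A, identity(2)) == mat_mul(identity(2), A) == A)
print(shape(C), shape(transpose(C)))
print(mat_mul(A, inverse_2x2(A)))  # A * A^-1 = I
```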

5. Time-series analysis

6. Data Visualisation libraries

6.1. GGPLOT2(R)

6.2. MATPLOTLIB(PYTHON)

6.3. SEABORN(PYTHON)

6.4. PLOTLY(PYTHON)

7. Anomaly detection

7.1. https://raw.githubusercontent.com/ritchieng/machine-learning-stanford/master/w9_anomaly_recommender/anomaly_detection8.png

7.2. Can be treated as an unsupervised problem (looking for points with a low p(x), i.e. many standard deviations away from the mean in many of the features), but is mostly set up as a supervised problem, with a set of examples labelled as anomalies

7.2.1. Premise

7.2.1.1. Premise: assume each feature follows a normal distribution. Estimate the mean (μ) and standard deviation (σ) of each feature, use these to compute p(x) as new derived features, and then use p(x) to predict anomalies (a low p(x) means an anomaly)

7.2.2. Process

7.2.2.1. Create a 'good training' set with 60% of all non-anomaly (y=0) examples and use this to create p(x) derived features from each of the original features (see formula p(x) below).

7.2.2.1.1. If you complete this process and still find anomalous (y=1) samples that are not detected, it is a good idea to look at these specific examples to see whether new derived features can be created to help detect them

7.2.2.2. Split the remaining 40% of non-anomalous records into two 20% portions: combine the first with 50% of the anomalous records (y=1) to form a cross-validation (CV) set, and the second with the other 50% of the anomalous records to form a test set

7.2.2.3. Use the 'good training' set to create the p(x) derived features, use the CV set to predict y=0 (good) or y=1 (anomaly), choose the threshold ε on p(x) and tune the model, and finally use the test set to evaluate performance

7.2.2.4. We can then use standard supervised performance metrics to evaluate the model, though because the classes are imbalanced we must use a more robust metric (like F1) rather than accuracy!
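The 60/20/20 split of normal examples and 50/50 split of anomalies described above can be sketched as follows. The function name and the data are hypothetical; the middle set (20% of normals plus half of the anomalies, used to tune the model) is what is usually called the cross-validation set.

```python
import random


def split_for_anomaly_detection(normal, anomalies, seed=0):
    """Split y=0 (normal) and y=1 (anomaly) examples:
    60% of normals -> 'good training' set (unlabelled, used only to fit p(x));
    20% of normals + 50% of anomalies -> cross-validation set;
    20% of normals + 50% of anomalies -> test set.
    """
    rng = random.Random(seed)
    normal, anomalies = list(normal), list(anomalies)  # copies; callers' lists untouched
    rng.shuffle(normal)
    rng.shuffle(anomalies)
    n60 = len(normal) * 6 // 10
    n80 = len(normal) * 8 // 10
    half = len(anomalies) // 2
    good_train = normal[:n60]
    cv = [(x, 0) for x in normal[n60:n80]] + [(x, 1) for x in anomalies[:half]]
    test = [(x, 0) for x in normal[n80:]] + [(x, 1) for x in anomalies[half:]]
    return good_train, cv, test


# Hypothetical data: 100 normal readings and 10 anomalous ones
normal = [[float(i)] for i in range(100)]
anomalies = [[1000.0 + i] for i in range(10)]
good_train, cv, test = split_for_anomaly_detection(normal, anomalies)
print(len(good_train), len(cv), len(test))
```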

7.2.3. Pros (supervised / anomaly detection)

7.2.3.1. AD is preferable when we have a very small set of positive (y=1) examples (we want to keep all of these for evaluating the model, and can 'spend' many y=0 examples on fitting the p(x) model)

7.2.3.2. When anomalies may follow many different 'patterns' so fitting a standard supervised model may not be able to find a good separation boundary, but the pattern of their probability distribution (i.e. the fact they are very different from normal) will be a constant pattern

7.2.4. Examples

7.2.4.1. Spam detection

7.2.4.2. Manufacturing checks

7.2.4.3. Machine/data monitoring

7.2.5. Formula for p(x)

7.2.5.1. Using the set of y=0 data points, model each original feature as a normal distribution: calculate its sample mean and standard deviation, and use them to compute p(x) as a new derived feature

7.2.5.1.1. https://yyqing.me/2017/2017-08-09/anomaly-detection.png

7.2.5.2. Assumes features are normally distributed (x ~ N(μ, σ²))

7.2.5.2.1. To check this assumption more-or-less holds true it is highly recommended to graph the features first

7.2.5.2.2. Even if this does not hold true AD algorithms generally work OK
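A minimal plain-Python sketch of the p(x) model described above: fit a mean and variance per feature on y=0 examples, multiply the per-feature normal densities, and flag x as an anomaly when p(x) < ε. The data and the threshold `epsilon` are hypothetical; in the process above ε would be chosen on the cross-validation set, for example to maximize F1. The variance uses 1/m (the maximum-likelihood estimate).

```python
import math


def fit_gaussian(X):
    """Estimate per-feature mean and variance from y=0 (normal) examples."""
    m, n = len(X), len(X[0])
    mu = [sum(row[j] for row in X) / m for j in range(n)]
    var = [sum((row[j] - mu[j]) ** 2 for row in X) / m for j in range(n)]
    return mu, var


def p(x, mu, var):
    """p(x) = product over features j of the normal density N(x_j; mu_j, var_j)."""
    prob = 1.0
    for xj, mj, vj in zip(x, mu, var):
        prob *= math.exp(-((xj - mj) ** 2) / (2 * vj)) / math.sqrt(2 * math.pi * vj)
    return prob


def is_anomaly(x, mu, var, epsilon):
    return p(x, mu, var) < epsilon


# Hypothetical 'good training' data with two features
X_normal = [[1.0, 10.0], [2.0, 12.0], [3.0, 14.0]]
mu, var = fit_gaussian(X_normal)
print(is_anomaly([2.0, 12.0], mu, var, epsilon=1e-3))
print(is_anomaly([10.0, 40.0], mu, var, epsilon=1e-3))
```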

7.2.6. Multivariate Gaussian Distribution (AD)

7.2.6.1. Premise

7.2.6.1.1. Standard AD models each feature with its own univariate Gaussian and multiplies the results, which treats the features as independent: the contours of p(x) around the mean are circles or axis-aligned ellipses. Often a more complex shape around the mean fits better (e.g. when features are correlated); to get this we use the multivariate Gaussian formula, with a full covariance matrix, to calculate p(x)

7.2.6.2. Formula

7.2.6.2.1. https://notesonml.files.wordpress.com/2015/06/ml51.png
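For reference, the standard multivariate Gaussian density (presumably what the linked image shows) is given below, with x ∈ ℝⁿ, mean vector μ and n × n covariance matrix Σ estimated from the m training examples. When Σ is diagonal, with entries σj², it reduces to the product of univariate densities used by standard AD.

```latex
p(x; \mu, \Sigma) = \frac{1}{(2\pi)^{n/2} \, |\Sigma|^{1/2}}
  \exp\!\left( -\tfrac{1}{2} (x - \mu)^{T} \Sigma^{-1} (x - \mu) \right),
\qquad
\mu = \frac{1}{m} \sum_{i=1}^{m} x^{(i)}, \quad
\Sigma = \frac{1}{m} \sum_{i=1}^{m} \big(x^{(i)} - \mu\big)\big(x^{(i)} - \mu\big)^{T}
```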

7.2.6.3. Advantages

7.2.6.4. Disadvantages

8. Recommendation engines

8.1. Content based

8.2. Collaborative filtering

9. Labeling Data

9.1. Manual Labeling

9.1.1. Calculate approximate time it would take (e.g. 10s to label one, ergo...)

9.2. Crowd Source

9.2.1. E.g. Amazon Mechanical Turk / Chiron

9.3. Synthetic Labeling

9.3.1. Introducing distortions into a smaller training set to amplify it (but only distortions we would expect to find in the real data, not just random noise)

10. Data Preparation

10.1. Unbalanced Classes

10.1.1. Collect more data

10.1.2. Change performance metrics

10.1.2.1. Confusion matrix

10.1.2.2. Precision

10.1.2.3. Recall

10.1.2.4. F1

10.1.2.5. Kappa

10.1.2.6. ROC Curves
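The sketch below computes several of the metrics above from binary predictions (ROC curves need predicted scores, so they are left out). The example shows why accuracy misleads on imbalanced classes: a model that always predicts the majority class scores 95% accuracy but 0 recall, F1 and kappa. The data is hypothetical, and reporting 0.0 when a denominator is 0 is a convention chosen here.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (tp, fp, fn, tn) for a binary problem."""
    tp = fp = fn = tn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive:
            if t == positive:
                tp += 1
            else:
                fp += 1
        elif t == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def metrics(y_true, y_pred, positive=1):
    tp, fp, fn, tn = confusion_counts(y_true, y_pred, positive)
    n = tp + fp + fn + tn
    accuracy = (tp + tn) / n
    # Precision/recall are undefined when their denominator is 0; report 0.0 then
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    # Cohen's kappa: agreement beyond what chance (pe) would give
    pe = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / n ** 2
    kappa = (accuracy - pe) / (1 - pe) if pe != 1 else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1, "kappa": kappa}


# Hypothetical imbalanced case: 95 negatives, 5 positives, model always predicts 0
y_true = [0] * 95 + [1] * 5
y_pred = [0] * 100
print(metrics(y_true, y_pred))
```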

10.1.3. Resampling data

10.1.3.1. Up sampling

10.1.3.1.1. 'Oversampling'

10.1.3.2. Down sampling
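Random up sampling and down sampling can be sketched in plain Python as below (function names are hypothetical). Up sampling duplicates existing minority rows, unlike SMOTE, which creates new synthetic points. As a general precaution, resample only the training split so the test set keeps the real class balance. Libraries such as imbalanced-learn provide `RandomOverSampler`, `RandomUnderSampler` and `SMOTE`.

```python
import random


def _group_by_class(X, y):
    groups = {}
    for xi, yi in zip(X, y):
        groups.setdefault(yi, []).append(xi)
    return groups


def random_oversample(X, y, seed=0):
    """Up sampling: duplicate random rows of smaller classes up to the largest class size."""
    rng = random.Random(seed)
    groups = _group_by_class(X, y)
    target = max(len(rows) for rows in groups.values())
    X_out, y_out = [], []
    for label, rows in groups.items():
        rows = rows + [rng.choice(rows) for _ in range(target - len(rows))]
        X_out += rows
        y_out += [label] * len(rows)
    return X_out, y_out


def random_undersample(X, y, seed=0):
    """Down sampling: keep a random subset of each class, sized to the smallest class."""
    rng = random.Random(seed)
    groups = _group_by_class(X, y)
    target = min(len(rows) for rows in groups.values())
    X_out, y_out = [], []
    for label, rows in groups.items():
        rows = rng.sample(rows, target)
        X_out += rows
        y_out += [label] * len(rows)
    return X_out, y_out


# Hypothetical imbalanced data: 8 rows of class 0, 2 rows of class 1
X = [[i] for i in range(10)]
y = [0] * 8 + [1] * 2
X_over, y_over = random_oversample(X, y)
X_under, y_under = random_undersample(X, y)
```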

10.1.4. Generate Synthetic samples

10.1.4.1. SMOTE

10.1.5. Try different algorithms

10.1.6. Try Penalized Models

10.1.6.1. e.g. penalized-LDA

10.1.6.2. Weka CostSensitive wrappers

10.1.7. Try different approaches

10.1.7.1. Anomaly detection

10.1.7.2. Change detection

10.1.8. Get creative

10.1.8.1. Split into smaller problems

10.2. Scaling/normalizing (feature scaling)

10.2.1. Best for numeric variables that are on very different scales (e.g. height = 1.78 m, score = 10,000, shoe size = 5).

10.2.2. This makes gradient descent work much better: with features on similar scales the cost contours are less elongated, so it goes back and forth less on its way to the minimum.

10.2.3. Many variations, but generally we want to get all features into approximately a -1 < x < 1 range

10.2.4. MEAN NORMALIZATION: (X - Xu) / (Xmax - Xmin)

10.2.4.1. The normalized feature will have a mean of ~0

10.2.4.2. Can also use the standard deviation as the denominator: (X - Xu) / s (standardization)
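Both scaling formulas above can be sketched in plain Python; the feature values are hypothetical, and the standard deviation here is the population one (dividing by n). When scaling real data, compute the mean, range or standard deviation on the training set and reuse those same values on new data.

```python
import math


def mean_normalize(values):
    """(x - mean) / (max - min): result has mean 0 and a range of width 1."""
    mean = sum(values) / len(values)
    value_range = max(values) - min(values)
    return [(v - mean) / value_range for v in values]


def standardize(values):
    """(x - mean) / standard deviation (population sd): result has mean 0 and sd 1."""
    mean = sum(values) / len(values)
    sd = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))
    return [(v - mean) / sd for v in values]


# Hypothetical feature on a large scale (e.g. scores)
scores = [2000.0, 4000.0, 6000.0, 10000.0]
print(mean_normalize(scores))
print(standardize(scores))
```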

10.3. Feature construction

11. Optimization algorithms

12. Data gathering

12.1. APIs

12.2. Web Scrapers

12.2.1. Selenium/PhantomJS

12.2.1.1. Good when info is behind JS or when you need to interact with the browser (e.g. login as a human)

12.2.2. BeautifulSoup

12.2.2.1. Simple HTML parser that you can use directly in a Python script (usually together with an HTTP library such as requests to fetch the pages)

12.2.3. Scrapy

12.2.3.1. The most developed and efficient scraper for large-scale crawling. Also offers lots of functionality for customization (e.g. IP masking), though it needs to be set up with the correct directory and class structure.

12.3. Manual Labeling

12.3.1. Manual

12.3.2. Services

12.3.2.1. Mechanical turk (etc.)

12.3.3. Exotic sampling

12.4. Major file types

12.4.1. CSV

12.4.2. JSON

13. High-level (HL) programming languages

13.1. R

13.2. Python

13.2.1. Vectorization

13.2.1.1. Matrix / for loops

13.2.1.1.1. Matrix operations applied across an entire dataset are much more efficient than a for loop, because the whole computation runs in optimized, compiled linear-algebra routines over contiguous, pre-allocated memory, instead of executing one interpreted Python operation (and creating new objects) for each element
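The sketch below compares an explicit loop with a vectorized NumPy matrix-vector product for the same hypothesis h(x) = θ·x over every row of a dataset. The dataset and parameters are random, hypothetical values; timings vary by machine, but the vectorized version is typically orders of magnitude faster while giving the same result.

```python
import time

import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(100_000, 3))   # hypothetical dataset: 100k rows, 3 features
theta = np.array([0.5, -1.0, 2.0])  # hypothetical parameters


def predict_loop(X, theta):
    """h(x) = theta . x for each row, one Python operation at a time."""
    preds = np.empty(len(X))
    for i in range(len(X)):
        total = 0.0
        for j in range(len(theta)):
            total += X[i, j] * theta[j]
        preds[i] = total
    return preds


def predict_vectorized(X, theta):
    """The same computation as a single matrix-vector product."""
    return X @ theta


start = time.perf_counter()
slow = predict_loop(X, theta)
loop_seconds = time.perf_counter() - start

start = time.perf_counter()
fast = predict_vectorized(X, theta)
vec_seconds = time.perf_counter() - start

print(f"loop: {loop_seconds:.3f}s, vectorized: {vec_seconds:.5f}s")
```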

13.3. Octave

14. Statistics

14.1. Distributions

14.1.1. Gaussian (normal) distribution

14.1.1.1. Described by the mean (μ) and variance (σ²): the curve is centred on the mean, and about 95% of the values lie within 2σ of it (1.96σ for exactly 95%)

14.1.1.1.1. https://upload.wikimedia.org/wikipedia/commons/7/74/Normal_Distribution_PDF.svg

14.1.1.2. 'Bell shaped curve'

14.1.1.3. The total area under the probability density curve is 1
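The properties above can be checked numerically with the standard library: the share of values within kσ of the mean is erf(k/√2), and a simple midpoint-rule integral of the density comes out at 1. The integration range and step count are arbitrary choices for this sketch.

```python
import math


def normal_pdf(x, mu=0.0, sigma=1.0):
    """Density of N(mu, sigma^2) at x."""
    return math.exp(-((x - mu) ** 2) / (2 * sigma ** 2)) / (sigma * math.sqrt(2 * math.pi))


def prob_within(k):
    """P(|X - mu| < k * sigma) for any normal distribution, via the error function."""
    return math.erf(k / math.sqrt(2))


def integrate(f, a, b, steps=20_000):
    """Midpoint-rule numerical integral of f over [a, b]."""
    h = (b - a) / steps
    return h * sum(f(a + (i + 0.5) * h) for i in range(steps))


print(round(prob_within(2), 4))        # share of values within 2 sigma
print(round(prob_within(1.96), 4))     # share of values within 1.96 sigma
print(integrate(normal_pdf, -10, 10))  # total area under the curve
```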

14.2. Statistical tests

14.2.1. t-test

14.2.2. ANOVA

15. Data Project Management

15.1. CRISP-DM

15.1.1. 1. Business understanding

15.1.2. 2. Data understanding

15.1.3. 3. Data preparation

15.1.4. 4. Modelling

15.1.5. 5. Evaluation

15.1.6. 6. Deployment

15.1.7. https://pbs.twimg.com/media/DNF5vACVQAAxOWD.jpg

15.2. Ceiling Analysis

15.2.1. Assess which part of the pipeline it is most valuable to spend your time on

15.2.2. To do this, override each module/step in turn with perfect output (e.g. replace its predictions with the correct labels) and measure how much the accuracy of the overall system improves; the module that gives the biggest gain is where extra effort pays off most

16. Machine Learning

16.1. Generic ML approaches

16.1.1. ML Diagnostics (assess algorithms)

16.1.1.1. Over-fitting (high variance)

16.1.1.1.1. The hypothesis equation is 'over fit' to the training data (e.g. a complex polynomial equation that passes through each data point), meaning it performs very well in training but fails to generalize well in testing

16.1.1.2. Under-fitting (high bias)

16.1.1.2.1. The hypothesis equation is 'under fit', meaning it over-generalizes the problem (e.g. using a basic linear separation line for a polynomial problem), so it cannot identify more complex cases well

16.1.1.3. Approaches

16.1.1.3.1. Cross-validation

16.1.1.3.2. Learning curves

16.1.1.3.3. General diagnostic options

16.1.2. Generic ML algorithm Methodology

16.1.2.1. Input: x, the input variable that predicts y

16.1.2.2. target: y, a labelled outcome

16.1.2.3. hypothesis: h(x), the function of x that the model uses to predict y (e.g. h(x) = θ0 + θ1x for linear regression)

16.1.2.4. Parameter: θ, the parameter(s) we choose with the objective of minimising the cost function

16.1.2.5. Cost function: J(θ) a function of the parameters that we try to reduce to get a good prediction (e.g. MSE). We can plot this to see the minimum point.

16.1.2.5.1. https://raw.githubusercontent.com/ritchieng/machine-learning-stanford/master/w1_linear_regression_one_variable/2_params.png

16.1.2.5.2. e.g. RMSE

16.1.2.6. Goal: minimize J(θ), the goal of the algorithm to minimize the error of the cost function through changing the parameters

16.1.2.7. Gradient descent (cost reduction mechanism): repeat until convergence θj := θj - α ∂J(θ)/∂θj

16.1.2.7.1. := is the assignment operator (a := b means set a to the value of b)

16.1.2.7.2. α = learning rate = how big a step to take. If it is too small, the many tiny steps take a long time; if it is too large, gradient descent can fail to converge, or even diverge. The actual step size also depends on the slope of the derivative, so the steps get smaller as we approach convergence even with a fixed α.

16.1.2.7.3. All parameters θj must be updated simultaneously (compute every new value from the old θ before assigning any of them)!

16.1.2.7.4. ∂J(θ)/∂θj = the partial derivative of the cost with respect to θj, i.e. the slope of the tangent to the cost curve at the current point. If the slope is positive, subtracting α times a positive number makes θj smaller; if the slope is negative, θj gets larger. This continues until we reach a point where the derivative is 0 (a local minimum).

16.1.2.7.5. Sometimes called "Batch" gradient descent, as each step looks at all the available examples in the training set (compared to stochastic or mini-batch gradient descent, which use one example or a subset of examples per step)

16.1.2.7.6. Pros: works well even when you have a large number of features - so scales well.

16.1.2.7.7. Cons: you need to choose a learning rate (α) and you need to do lots of iterations

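16.1.2.7.8. There are, however, other ways of solving this problem (e.g. the normal equation for linear regression, or more advanced optimizers such as conjugate gradient, BFGS and L-BFGS)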

16.1.2.8. Prediction: a predicted value of y for a new sample x, using the θ learned by minimizing the cost function on the training set
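The methodology above can be sketched as batch gradient descent for one-variable linear regression, with h(x) = θ0 + θ1x and cost J(θ) = 1/(2m) Σ (h(x) - y)² (half the MSE). Both parameters are updated simultaneously from the same errors. The data (generated from y = 2 + 3x), learning rate and iteration count are hypothetical choices for illustration.

```python
def cost(theta0, theta1, xs, ys):
    """J(theta) = 1/(2m) * sum of squared errors."""
    m = len(xs)
    return sum((theta0 + theta1 * x - y) ** 2 for x, y in zip(xs, ys)) / (2 * m)


def gradient_descent(xs, ys, alpha=0.1, iterations=2000):
    theta0 = theta1 = 0.0
    m = len(xs)
    for _ in range(iterations):
        errors = [theta0 + theta1 * x - y for x, y in zip(xs, ys)]
        grad0 = sum(errors) / m                             # dJ/dtheta0
        grad1 = sum(e * x for e, x in zip(errors, xs)) / m  # dJ/dtheta1
        # Simultaneous update: both gradients were computed from the old thetas
        theta0, theta1 = theta0 - alpha * grad0, theta1 - alpha * grad1
    return theta0, theta1


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 + 3.0 * x for x in xs]
theta0, theta1 = gradient_descent(xs, ys)
print(theta0, theta1, cost(theta0, theta1, xs, ys))

# Prediction for a new sample x = 10
print(theta0 + theta1 * 10)
```

With a much larger α (here, above roughly 0.3) the updates overshoot and diverge, which is the failure mode described for too big a learning rate.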

16.1.3. The phenomenon of increasing training data

16.1.3.1. X 2001

16.1.3.2. More training data only helps if the features X hold enough information to predict y (e.g. predicting a missing word in a specific sentence, compared to predicting house prices from the square footage alone, which is not possible even for human experts)

16.2. Supervised (predictive models)

16.2.1. Classification models

16.2.1.1. Performance Metrics

16.2.1.1.1. Confusion matrix http://www.dataschool.io/content/images/2015/01/confusion_matrix2.png

16.2.1.1.2. Simple Metrics

16.2.1.1.3. Advanced Metrics

16.2.1.1.4. Other considerations

16.2.1.2. Classification Model Types

16.2.1.2.1. Logistic Regression

16.2.1.2.2. SVMs

16.2.1.2.3. KNN

16.2.1.2.4. Decision Trees

16.2.1.2.5. Random Forest

16.2.1.2.6. XGBoost

16.2.1.3. Classification types

16.2.1.3.1. Binary class

16.2.1.3.2. Multi class

16.2.2. Regression models

16.2.2.1. Performance Metrics / Cost function

16.2.2.1.1. We can measure the accuracy of our hypothesis function by using a cost function. This takes an average of the differences (actually a fancier version of an average, e.g. the mean of the squared differences) between the hypothesis's outputs for the inputs x and the actual outputs y.

16.2.2.1.3. Cost functions
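For linear regression, the squared-error cost described above is usually written as below (the factor 1/2 only simplifies the derivative), together with the partial derivatives that gradient descent uses and the RMSE often reported instead.

```latex
J(\theta_0, \theta_1) = \frac{1}{2m} \sum_{i=1}^{m} \left( h_\theta\big(x^{(i)}\big) - y^{(i)} \right)^2,
\qquad h_\theta(x) = \theta_0 + \theta_1 x

\frac{\partial J}{\partial \theta_0} = \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta\big(x^{(i)}\big) - y^{(i)} \right),
\qquad
\frac{\partial J}{\partial \theta_1} = \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta\big(x^{(i)}\big) - y^{(i)} \right) x^{(i)}

\mathrm{RMSE} = \sqrt{ \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta\big(x^{(i)}\big) - y^{(i)} \right)^2 }
```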

16.2.2.2. Regression Model Types

16.2.2.2.1. Linear Regression

16.2.2.2.2. Decision Trees for Regression

16.2.2.2.3. Random Forest for Regression

16.2.3. Reinforcement models

16.2.3.1. Performance Metrics

16.2.3.2. Neural Networks

16.2.3.2.1. Architectures

16.2.4. Ensemble modeling

16.2.4.1. Definition

16.2.4.1.1. Ensembling is a technique of combining the predictions of two or more algorithms (of similar or dissimilar types), called base learners, into a single prediction

16.2.4.2. Types

16.2.4.2.1. Averaging:

16.2.4.2.2. Majority vote:

16.2.4.2.3. Weighted average:
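The three combination types above can be sketched as small functions over the base learners' outputs for a single example. The predictions and weights are hypothetical; in practice weights are often chosen from each model's validation performance.

```python
from collections import Counter


def average(predictions):
    """Averaging: mean of the models' numeric predictions (e.g. regression outputs or probabilities)."""
    return sum(predictions) / len(predictions)


def majority_vote(labels):
    """Majority vote: most common class label; ties go to the label that appears first."""
    return Counter(labels).most_common(1)[0][0]


def weighted_average(predictions, weights):
    """Weighted average: models judged more reliable get larger weights."""
    return sum(p * w for p, w in zip(predictions, weights)) / sum(weights)


# Hypothetical outputs from base learners for a single example
print(average([0.2, 0.4, 0.6]))
print(majority_vote(["spam", "ham", "spam"]))
print(weighted_average([0.2, 0.8], weights=[3, 1]))
```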

16.2.4.3. Methods

16.2.4.3.1. Bagging

16.2.4.3.2. Boosting

16.2.4.3.3. Stacking

16.2.4.4. Advantages/Disadvantages of ensembling

16.2.4.4.1. Advantages

16.2.4.4.2. Disadvantages

16.3. Unsupervised (descriptive models)

16.3.1. Clustering

16.3.1.1. K-means (often confused with KNN, which is a supervised classification method)

16.3.1.1.1. Process
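A minimal plain-Python sketch of the k-means clustering process (k-means is the standard centroid-based clustering algorithm; KNN proper is a supervised classifier): alternate between assigning each point to its nearest centroid and moving each centroid to the mean of its points, until the centroids stop changing. Initializing with the first k points is a simplifying assumption; k-means++ is the usual better choice. The points are hypothetical.

```python
import math


def kmeans(points, k, iterations=100):
    # Simplifying assumption: use the first k points as initial centroids
    centroids = [list(p) for p in points[:k]]
    clusters = []
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid's cluster
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: math.dist(p, centroids[c]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster
        new_centroids = [
            [sum(coord) / len(cluster) for coord in zip(*cluster)] if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
        if new_centroids == centroids:  # converged
            break
        centroids = new_centroids
    return centroids, clusters


points = [(0, 0), (10, 10), (0, 1), (1, 0), (10, 11), (11, 10)]
centroids, clusters = kmeans(points, k=2)
print(centroids)
```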

16.3.1.2. DBscan

16.3.1.3. Auto-encoders (Neural Nets)

16.3.2. Dimensionality reduction

16.3.2.1. PCA

16.3.2.1.1. Reduce the dimensions of a dataset by finding the directions (principal components) along which the data varies most, and projecting onto them so that correlated variables can be expressed in a lower-dimensional space
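A minimal PCA sketch with NumPy, computing the principal components from the SVD of the centred data (one standard way to do PCA; scikit-learn's `PCA` class is the usual library option). The data is hypothetical: the second column is exactly twice the first, so one component captures all the variance and the original data can be reconstructed from it.

```python
import numpy as np


def pca(X, n_components):
    """Project X onto its first n_components principal components."""
    mean = X.mean(axis=0)
    X_centered = X - mean
    # SVD of the centred data: rows of Vt are the principal directions,
    # ordered by decreasing singular value (i.e. decreasing variance)
    _, S, Vt = np.linalg.svd(X_centered, full_matrices=False)
    components = Vt[:n_components]
    explained_variance = S ** 2 / (len(X) - 1)  # variance along every direction
    Z = X_centered @ components.T               # reduced representation
    return Z, components, mean, explained_variance


# Hypothetical data: the second column is exactly twice the first
X = np.array([[1.0, 2.0], [2.0, 4.0], [3.0, 6.0], [4.0, 8.0]])
Z, components, mean, explained_variance = pca(X, n_components=1)
X_reconstructed = Z @ components + mean
print(Z.ravel(), explained_variance)
```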

17. Big Data

17.1. Big Data technologies

17.1.1. Hadoop

17.1.2. Spark

17.2. ML on large datasets

17.2.1. Gradient descent

17.2.1.1. Stochastic gradient descent