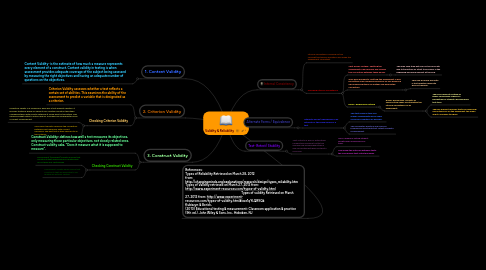

1. Content Validity

1.1. Content validity is an estimate of how well a measure represents every element of a construct. In testing, an assessment has content validity when it adequately covers the subject being assessed: it measures the right objectives and has an adequate number of questions on each objective.
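Content validity is established mainly by reviewing the items against the objectives. However, the coverage part of the definition can be checked mechanically once every item is tagged with the objective it measures. The sketch below is a minimal illustration under that assumption. The function names, the example objectives, and the `min_items=2` threshold are hypothetical, and what counts as an adequate number of items remains a judgment call for the test author.

```python
from collections import Counter


def coverage_gaps(item_objectives, objectives, min_items=2):
    """Return {objective: item count} for objectives with fewer than min_items items.

    item_objectives: the objective each test item measures, in test order.
    objectives: the objectives the assessment is supposed to cover.
    min_items: hypothetical threshold for "an adequate number of questions".
    """
    counts = Counter(item_objectives)  # objectives with no items count as 0
    return {obj: counts[obj] for obj in objectives if counts[obj] < min_items}


def off_target_items(item_objectives, objectives):
    """Return the 1-based numbers of items that measure something outside the objectives."""
    wanted = set(objectives)
    return [n for n, obj in enumerate(item_objectives, start=1) if obj not in wanted]


objectives = ["fractions", "decimals", "percent"]
items = ["fractions", "fractions", "decimals", "geometry", "fractions"]
print(coverage_gaps(items, objectives))     # {'decimals': 1, 'percent': 0}
print(off_target_items(items, objectives))  # [4]
```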

2. Criterion Validity

2.1. Criterion validity assesses whether test scores reflect a certain set of abilities as shown by an outside measure. It examines how well the assessment predicts, or agrees with, a variable that is designated as the criterion.

2.2. Checking Criterion Validity

2.2.1. Predictive validity is a measure of how well a test predicts future performance. It involves testing a group of subjects on a certain construct and then comparing their scores with criterion results obtained at some point in the future. It measures to what extent a future level of a variable can be predicted from a current measurement.

2.2.2. Concurrent validity measures the correlation between scores on the test and scores on an established measure obtained at about the same time. The established measure is then referred to as the criterion.

3. Construct Validity

3.1. Construct validity defines how well a test measures its objectives, measuring only those particular objectives and not closely related ones. Construct validity asks, “Does it measure what it is supposed to measure?”

3.2. Checking Construct Validity

3.2.1. Discriminant (divergent) validity checks that measures of constructs that should have no relationship are in fact unrelated.

3.2.2. Convergent Validity helps ensure that constructs that are expected to be related are actually related.

4. References

4.1. Types of reliability. Retrieved March 28, 2012, from http://changingminds.org/explanations/research/design/types_reliability.htm

4.2. Types of validity. Retrieved March 27, 2012, from http://www.experiment-resources.com/types-of-validity.html

4.3. Kubiszyn, T., & Borich, G. (2010). Educational testing & measurement: Classroom application & practice (9th ed.). Hoboken, NJ: John Wiley & Sons, Inc.

5. Internal Consistency

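5.1. Internal consistency estimates reliability from a single administration of an assessment. It is based on the concept that items measuring the same thing should produce consistent results across the assessment.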

5.2. Checking Internal Consistency

5.2.1. Split-half method: splitting the assessment into two equal halves and finding the correlation between students' scores on the two halves.

5.2.1.1. This may save time. However, it may not be accurate when the test is split into a first and a second half, because many tests are easier at the beginning and more difficult at the end.

5.2.2. Odd-even reliability: splitting the assessment in half by placing the even-numbered items in one half and the odd-numbered items in the other, then finding the correlation between the two half scores.

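5.2.2.1. This may be more accurate than a first-half/second-half split when question difficulty is not randomly distributed, for example when questions get harder toward the end.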

5.2.3. Kuder-Richardson Methods

5.2.3.1. The Kuder-Richardson methods are several formulas for estimating the internal consistency of an assessment from a single administration, without splitting the test into halves.

5.2.3.1.1. The more involved formula (KR-20) requires the proportion of students passing each test item.

5.2.3.1.2. The simpler formula (KR-21) requires only the number of items on the assessment and the mean and variance of the total scores.
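The two Kuder-Richardson formulas described above are commonly labelled KR-20 and KR-21. This sketch assumes items scored 1 (right) or 0 (wrong). It uses the population variance of total scores in both formulas. Some textbooks use the sample variance instead, which changes the results slightly. The function names and the small data set are illustrative.

```python
from statistics import mean, pvariance


def kr20(item_scores):
    """KR-20 = k/(k-1) * (1 - sum(p*q) / variance of total scores).

    item_scores: one list per student, each item scored 1 (right) or 0 (wrong).
    p is the proportion of students passing an item and q = 1 - p.
    Needs at least 2 items and total scores that are not all identical.
    """
    n_students = len(item_scores)
    k = len(item_scores[0])
    var_total = pvariance([sum(row) for row in item_scores])
    pq_sum = 0.0
    for i in range(k):
        p = sum(row[i] for row in item_scores) / n_students  # proportion passing item i
        pq_sum += p * (1 - p)
    return (k / (k - 1)) * (1 - pq_sum / var_total)


def kr21(k, mean_total, var_total):
    """KR-21 = k/(k-1) * (1 - M*(k - M) / (k * variance)); needs only k, M and the variance.

    Never larger than KR-20 on the same 0/1 data; the two agree only when all
    items are equally difficult.
    """
    return (k / (k - 1)) * (1 - mean_total * (k - mean_total) / (k * var_total))


# Hypothetical 3-item quiz taken by 4 students
scores = [[1, 1, 1], [1, 1, 0], [1, 0, 0], [0, 0, 0]]
totals = [sum(row) for row in scores]
print(round(kr20(scores), 2), round(kr21(3, mean(totals), pvariance(totals)), 2))  # 0.75 0.6
```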

6. Test-Retest / Stability

6.1. Test-retest is a way of estimating reliability by giving a test to the same group of individuals twice and correlating the two sets of scores.

6.1.1. Useful for noting student growth over long periods of time.

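6.1.2. The longer the interval between tests, the lower the test-retest reliability estimate tends to be. This is because learning and other changes between administrations affect the second set of scores.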

7. Alternate Forms / Equivalence

7.1. Alternate-forms (equivalence) reliability is estimated by giving two equivalent forms of a test to the same group and correlating the two sets of scores.

7.1.1. This method requires two very similar assessments, given under conditions that are as similar as possible.

7.1.2. Because students do not answer the same items twice, this avoids the practice and memory problems present in test-retest reliability measurement.