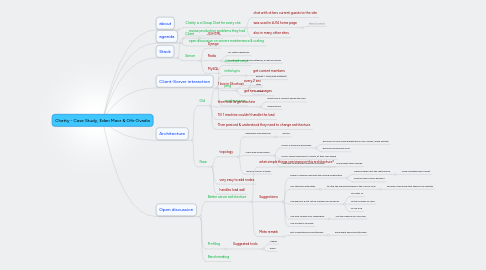

1. About

1.1. Chatty is a group chat for any site

1.1.1. chat with the other guests currently on the site

1.1.2. was used on the #J14 home page

1.1.2.1. http://j14.org.il/

1.1.3. also on many other sites

2. Agenda

2.1. review the production problems they had

2.2. open discussion on server maintenance & scaling

3. Stack

3.1. Client

3.1.1. JS/HTML

3.2. Server

3.2.1. Django

3.2.2. Redis

3.2.2.1. for faster operations

3.2.2.2. to lower the load that the poll pings put on the database

3.2.3. MySQL

4. Client-Server interaction

4.1. download script

4.2. initial sync

4.2.1. get current members

4.3. ping

4.3.1. every 2 sec

4.3.2. get new messages

4.4. send message
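
The interaction above can be sketched as a small in-memory model. This is only an illustration, not Chatty's actual code: the `Room` class, its method names, and the integer message ids are all assumptions made for the example. It shows the initial sync returning the current members, the ping returning only messages newer than the last one the client saw, and a rough estimate of the request rate produced by a 2-second poll interval.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class Room:
    """Hypothetical in-memory stand-in for one site's chat room."""
    members: set = field(default_factory=set)
    messages: list = field(default_factory=list)  # (id, sender, text) tuples
    _ids: count = field(default_factory=lambda: count(1))

    def sync(self, user):
        """Initial sync: register the guest, return current members
        and the id of the latest message (0 if there are none)."""
        self.members.add(user)
        last_id = self.messages[-1][0] if self.messages else 0
        return sorted(self.members), last_id

    def send(self, user, text):
        """Send message: store it under the next sequential id."""
        msg_id = next(self._ids)
        self.messages.append((msg_id, user, text))
        return msg_id

    def ping(self, last_seen_id):
        """Ping: return only the messages newer than last_seen_id."""
        return [m for m in self.messages if m[0] > last_seen_id]


def pings_per_second(guests, interval_sec=2.0):
    """Rough request rate when every guest polls once per interval."""
    return guests / interval_sec
```

With these assumptions, 1,000 concurrent guests polling every 2 seconds produce about 500 ping requests per second, which is why the ping path is the one worth keeping cheap.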

5. Architecture

5.1. Old

5.1.1. 1 box in Slicehost

5.1.1.1. apache + mod_wsgi instances

5.1.1.2. redis

5.1.1.3. MySQL

5.1.2. then moved to a larger machine

5.1.2.1. every time it couldn't handle the load

5.1.2.2. several times

5.1.3. Until a single machine couldn't handle the load at all

5.1.4. Then panicked & understood they needed to change the architecture

5.2. New

5.2.1. topology

5.2.1.1. Rackspace load balancer

5.2.1.1.1. a hosted service

5.2.1.2. Many web server boxes

5.2.1.2.1. NGINX & gunicorn processes

5.2.1.2.2. NGINX buffers responses & feeds them to clients at each client's own speed

5.2.1.2.3. Very easy to work with Gunicorn & Django

5.2.1.3. 1 box for MySQL & Redis

5.2.2. very easy to add nodes

5.2.3. handles load well

6. Open discussion

6.1. Better server architecture

6.1.1. what simple things can improve this architecture?

6.1.2. Suggestions

6.1.2.1. There's a problem because of the polling architecture

6.1.2.1.1. Maybe Django isn't the right choice

6.1.2.1.2. Gunicorn has async workers

6.1.2.2. Can optimize with ETags

6.1.2.2.1. to stop the request handling at the NGINX level

6.1.2.3. Use a physical & not a virtual machine for the DB server

6.1.2.3.1. for faster IO

6.1.2.3.2. run top & check the load

6.1.2.3.3. or use sar

6.1.2.4. Use pure Django on App Engine

6.1.2.4.1. use the Channel API for push

6.1.2.5. Use Socket.io for push
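
The ETag suggestion above relies on conditional requests: the server tags each response with an `ETag`, the client sends it back in `If-None-Match`, and an unchanged resource is answered with an empty `304 Not Modified`. The sketch below shows only that mechanism in plain Python. The function names and the choice of hashing the response body are assumptions made for the example, and it does not show how the check would be pushed down to the NGINX level.

```python
import hashlib


def make_etag(body: bytes) -> str:
    """Derive a quoted ETag from the response body (hypothetical scheme)."""
    return '"' + hashlib.sha256(body).hexdigest() + '"'


def conditional_response(body: bytes, if_none_match=None):
    """Return (status, headers, payload) for a poll request.

    If the client's If-None-Match value equals the current ETag,
    nothing has changed, so reply 304 with an empty payload.
    """
    etag = make_etag(body)
    if if_none_match == etag:
        return 304, {"ETag": etag}, b""
    return 200, {"ETag": etag}, body
```

For a chat poll, most pings find no new messages, so most of them could be answered with the short 304 reply instead of a full response.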

6.1.3. Meta remark

6.1.3.1. first understand your bottleneck

6.1.3.1.1. so you know what should be attacked

6.2. Profiling

6.2.1. Suggested tools

6.2.1.1. Nagios

6.2.1.2. Zabbix