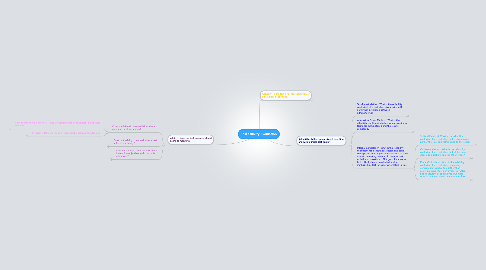

1. Validity: Does the test measure what it claims to measure?

1.1. Criterion-Related: How well does the test match an outside variable?

1.1.1. Concurrent: How do the scores from two tests administered at about the same time compare?

1.1.1.1. Used to validate a new test against an established one when the new test would be more advantageous (e.g., shorter). Measured by correlation coefficients. Anchored to an established assessment. Little variability because of the short time range, so coefficients tend to be higher.

1.1.2. Predictive: How well does a test predict a behavioral outcome?

1.1.2.1. Measured by a correlation coefficient. Anchored to the predicted outcome. Greater variability because of the longer time range, so coefficients tend to be smaller.
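Both kinds of criterion-related validity above are reported as a correlation coefficient. As a minimal sketch, assuming the Pearson correlation is the coefficient in use (the outline does not name one), the calculation could look like this. The function name `pearson_r` and the score lists are hypothetical, made up purely for illustration.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of cross-products and sums of squared deviations from the means.
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when scores do not vary")
    return cov / sqrt(var_x * var_y)


# Hypothetical scores for five students (illustrative data only).
new_test = [12, 15, 9, 18, 14]            # shorter, new test
established_test = [48, 55, 40, 62, 50]   # established assessment

validity_coefficient = pearson_r(new_test, established_test)
print(round(validity_coefficient, 3))
```

For predictive validity the second list would hold the later outcome measure instead of the established test; the arithmetic is unchanged.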

1.2. Construct Validity: How well does a test adhere to a theory?

1.2.1. Not anchored to pre-measured variables. Evaluated with many correlation coefficients.

1.3. Content Validity: Does the test match the instructional objectives and standards it addresses?

1.3.1. Mostly used for achievement tests. Measured by judgment. Anchored to objectives and standards.

2. Accuracy: Is the test a fair representation of skill, ability, or aptitude?

3. Reliability: Is the test consistent over time and with multiple test-takers?

3.1. Test-Retest Method: What is the reliability coefficient of a test when it is given twice with no further instruction between administrations?

3.1.1. Usually affected by memory recall if the retest is given too soon, and by extra instruction if the two administrations are too far apart.

3.2. Alternate Forms Method: What is the reliability coefficient of a test when two similar forms are administered and the results compared?

3.2.1. Both forms must be administered under similar conditions. It is hard to come up with two good tests. Mostly done by publishers, in conjunction with another purpose (e.g., security).

3.3. Internal Consistency: What is the reliability coefficient for a test that assesses one basic concept and whose questions correlate with each other? Limited by the number of concepts and the variation of questions. Not good for speeded tests. Best when evaluated alongside other methods (e.g., Test-Retest or Alternate Forms).

3.3.1. Split-half Method: What is the reliability coefficient for a test when its items are split into two halves and each student's scores on the two halves are correlated?

3.3.1.1. Items of varying difficulty must be spread throughout the test so that the two halves are comparable. Fewer test questions reduce reliability.

3.3.2. Odd-even Method: What is the reliability coefficient for a test when the odd-numbered questions form one half and the even-numbered questions form the other?

3.3.2.1. Very similar to the split-half method; however, difficulty levels can rise as the test progresses. Fewer test questions reduce reliability.
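The odd-even split can be sketched in a few lines. This is an illustration under stated assumptions, not a prescribed procedure: items are scored 1 (correct) or 0 (incorrect), the half-test scores are correlated with a Pearson coefficient, and the Spearman-Brown formula, which the outline does not mention, is used as one common way to adjust for each half having fewer questions than the whole test. The function names and the score matrix are hypothetical.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when scores do not vary")
    return cov / sqrt(var_x * var_y)


def odd_even_reliability(item_scores):
    """Return (half-test correlation, Spearman-Brown adjusted estimate).

    item_scores: one row per student, one 0/1 entry per item.
    """
    # Index 0, 2, 4, ... holds items 1, 3, 5, ... (the odd-numbered items).
    odd_totals = [sum(row[0::2]) for row in item_scores]
    even_totals = [sum(row[1::2]) for row in item_scores]
    r_half = pearson_r(odd_totals, even_totals)
    if r_half <= -1:
        raise ValueError("Spearman-Brown adjustment is undefined at r = -1")
    # Spearman-Brown: estimate for the full-length test from the half-test r.
    r_full = 2 * r_half / (1 + r_half)
    return r_half, r_full


# Hypothetical results: 5 students, 6 items (illustrative data only).
scores = [
    [1, 1, 1, 1, 1, 1],
    [1, 1, 1, 1, 0, 0],
    [1, 0, 1, 0, 0, 0],
    [1, 1, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
]

r_half, r_full = odd_even_reliability(scores)
print(round(r_half, 3), round(r_full, 3))
```

A first-half versus second-half split would only change the two slicing lines; the odd-even slices keep the halves comparable when difficulty rises through the test.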

3.3.3. Kuder-Richardson: What is the reliability coefficient for a test when comparing comparable tests by item rather than solely on overall test performance? Often not as accurate as split-halves, but more so than test-retest and alternate-form.

3.3.3.1. Evaluated by student performance per item.

3.3.3.2. Evaluated based on number of items, mean, and variance (not as accurate as student performance per item method).
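The two Kuder-Richardson variants above are commonly known as KR-20 (per-item performance) and KR-21 (number of items, mean, and variance). The sketch below assumes items scored 1 or 0 and uses the population variance of total scores; both choices are assumptions for illustration, and the function names and data are hypothetical.

```python
from statistics import mean, pvariance


def kr20(item_scores):
    """KR-20 from a matrix with one row per student and one 0/1 entry per item."""
    n_students = len(item_scores)
    k = len(item_scores[0])  # number of items
    totals = [sum(row) for row in item_scores]
    var_total = pvariance(totals)
    if var_total == 0:
        raise ValueError("KR-20 is undefined when total scores do not vary")
    # p = proportion answering an item correctly, q = 1 - p.
    sum_pq = 0.0
    for i in range(k):
        p = sum(row[i] for row in item_scores) / n_students
        sum_pq += p * (1 - p)
    return (k / (k - 1)) * (1 - sum_pq / var_total)


def kr21(k, mean_score, variance):
    """KR-21 from the number of items, mean total score, and score variance."""
    if variance == 0:
        raise ValueError("KR-21 is undefined when total scores do not vary")
    return (k / (k - 1)) * (1 - mean_score * (k - mean_score) / (k * variance))


# Hypothetical results: 5 students, 6 items (illustrative data only).
scores = [
    [1, 1, 1, 1, 1, 1],
    [1, 1, 1, 1, 0, 0],
    [1, 0, 1, 0, 0, 0],
    [1, 1, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
]
totals = [sum(row) for row in scores]

kr20_value = kr20(scores)
kr21_value = kr21(len(scores[0]), mean(totals), pvariance(totals))
print(round(kr20_value, 3), round(kr21_value, 3))
```

KR-21 treats every item as equally difficult, which is why it needs only summary statistics and why it never comes out higher than KR-20 on the same data.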