1. A valid test measures what it is primarily supposed to measure.

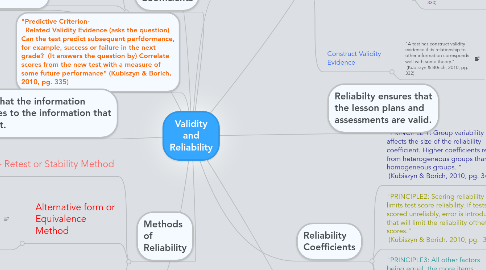

2. Methods of Reliability

2.1. Test-Retest or Stability Method

2.1.1. "The test is given twice and the correlation between the first set of scores and the second set of scores is determined." (Kubiszyn & Borich, 2010, pg. 341)
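The correlation this method calls for is usually the Pearson product-moment correlation. Below is a minimal Python sketch of it. The function name `pearson_r` and the score lists are hypothetical illustrations, not code or data from Kubiszyn & Borich. The same calculation applies when the two lists come from two equivalent forms rather than two testings of one form.

```python
import math
from typing import Sequence


def pearson_r(first: Sequence[float], second: Sequence[float]) -> float:
    """Pearson correlation between paired scores from two administrations."""
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two paired lists of at least two scores")
    n = len(first)
    mean_a = sum(first) / n
    mean_b = sum(second) / n
    # Sum of cross-products of each student's deviations from the two means
    cov = sum((a - mean_a) * (b - mean_b) for a, b in zip(first, second))
    # Sums of squared deviations for each administration
    ss_a = sum((a - mean_a) ** 2 for a in first)
    ss_b = sum((b - mean_b) ** 2 for b in second)
    return cov / math.sqrt(ss_a * ss_b)


# Hypothetical scores for the same five students on two testings
first_testing = [85, 72, 90, 64, 78]
second_testing = [88, 70, 91, 66, 75]
print(f"Test-retest reliability: {pearson_r(first_testing, second_testing):.2f}")
```

A coefficient near 1 means students kept roughly the same standing from the first testing to the second.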

2.2. Alternate-Forms or Equivalence Method

2.2.1. "To use this method of estimating reliability, two equivalent forms of the test must be available, and they must be administered under conditions as nearly equivalent as possible." (Kubiszyn & Borich, 2010, pg. 343)

2.3. Internal Consistency Method

2.3.1. "If the test in question is designed to measure a single basic concept, it is reasonable to assume that people who get one item right will be more likely to get other, similar items right. In other words, items ought to be correlated with each other, and the test ought to be internally consistent." (Kubiszyn & Borich, 2010, pg. 343)

3. Validity Coefficients

3.1. Content Validity

3.2. Concurrent Criterion Validity

3.3. Predictive Criterion Validity

4. "Content Validity Evidence (asks the question:) Do test items match and measure objectives? (and answers the question by:) Match the items with objectives." (Kubiszyn & Borich, 2010, pg. 335)
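Deciding whether an item matches an objective is a human judgment, but the bookkeeping around that judgment can be sketched in Python. Everything below is hypothetical: the objective labels, the item-to-objective mapping a reviewer might produce, and the helper `check_content_coverage`. The sketch flags objectives that no item measures and items that match no stated objective.

```python
# Hypothetical test blueprint: the objectives the test is meant to measure
objectives = {
    "O1": "Define reliability",
    "O2": "Distinguish test-retest from alternate-forms reliability",
    "O3": "Interpret a validity coefficient",
}

# Hypothetical reviewer judgments: item number -> objective it measures
# (None means the reviewer found no matching objective)
item_to_objective = {
    1: "O1",
    2: "O1",
    3: "O2",
    4: None,
}


def check_content_coverage(objectives, item_to_objective):
    """Return (objectives with no items, items matching no objective)."""
    covered = {obj for obj in item_to_objective.values() if obj is not None}
    uncovered = sorted(set(objectives) - covered)
    unmatched = sorted(
        item for item, obj in item_to_objective.items() if obj not in objectives
    )
    return uncovered, unmatched


uncovered, unmatched = check_content_coverage(objectives, item_to_objective)
print("Objectives with no items:", uncovered)
print("Items matching no objective:", unmatched)
```

Gaps in either list weaken the content validity evidence: untested objectives or items that test material outside the objectives.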

5. "Concurrent Criterion-Related Validity Evidence (asks the question) How well does performance on the new test match performance on an established test? (It answers the question by) Correlate new test with an accepted criterion, for example, a well-established test measuring the same behavior."

6. "Predictive Criterion-Related Validity Evidence (asks the question) Can the test predict subsequent performance, for example, success or failure in the next grade? (It answers the question by) Correlate scores from the new test with a measure of some future performance." (Kubiszyn & Borich, 2010, pg. 335)
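Both kinds of criterion-related evidence come down to correlating the new test with a criterion, and the result is reported as a validity coefficient. The Python sketch below assumes Pearson's r as that correlation and uses invented scores. `validity_coefficient`, `established_test`, and `next_year_grades` are hypothetical names. The only difference between the two cases is when the criterion measure is collected.

```python
import math
from typing import Sequence


def validity_coefficient(test_scores: Sequence[float],
                         criterion_scores: Sequence[float]) -> float:
    """Pearson r between a new test and an external criterion measure."""
    if len(test_scores) != len(criterion_scores) or len(test_scores) < 2:
        raise ValueError("need paired scores for at least two students")
    n = len(test_scores)
    mx = sum(test_scores) / n
    my = sum(criterion_scores) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(test_scores, criterion_scores))
    sxx = sum((x - mx) ** 2 for x in test_scores)
    syy = sum((y - my) ** 2 for y in criterion_scores)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical data for the same five students
new_test = [42, 35, 48, 30, 39]
established_test = [80, 70, 88, 61, 77]       # criterion given at about the same time
next_year_grades = [3.1, 2.8, 3.6, 2.2, 2.9]  # criterion collected later

concurrent = validity_coefficient(new_test, established_test)
predictive = validity_coefficient(new_test, next_year_grades)
print(f"Concurrent validity coefficient: {concurrent:.2f}")
print(f"Predictive validity coefficient: {predictive:.2f}")
```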

7. The reliability of a test refers to the consistency with which it yields the same rank for individuals who take the test more than once.

8. Two Internal Consistency Methods

8.1. Split-Half Method

8.2. Kuder-Richardson Methods
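A minimal Python sketch of both methods, assuming items scored 1 (right) or 0 (wrong). The split-half function assumes an odd-even split, one common way to form the halves, and steps the half-test correlation up to full length with the Spearman-Brown formula, r = 2 * r_half / (1 + r_half). The Kuder-Richardson function implements formula 20 (KR-20), using the population variance of total scores. The response matrix and all function names are hypothetical.

```python
import math
from typing import Sequence

# Rows are students, columns are items scored 0/1
Responses = Sequence[Sequence[int]]


def _pearson(x: Sequence[float], y: Sequence[float]) -> float:
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def split_half_reliability(responses: Responses) -> float:
    """Odd-even split-half correlation, stepped up with Spearman-Brown."""
    odd = [sum(row[0::2]) for row in responses]   # items 1, 3, 5, ...
    even = [sum(row[1::2]) for row in responses]  # items 2, 4, 6, ...
    r_half = _pearson(odd, even)
    # Estimate the reliability of the full-length test from the half-test r
    return 2 * r_half / (1 + r_half)


def kr20(responses: Responses) -> float:
    """Kuder-Richardson formula 20 for dichotomously scored items."""
    n_students = len(responses)
    k = len(responses[0])
    totals = [sum(row) for row in responses]
    mean_total = sum(totals) / n_students
    # Population variance of students' total scores
    var_total = sum((t - mean_total) ** 2 for t in totals) / n_students
    # Sum over items of p * q, where p is the proportion answering correctly
    sum_pq = 0.0
    for j in range(k):
        p = sum(row[j] for row in responses) / n_students
        sum_pq += p * (1 - p)
    return (k / (k - 1)) * (1 - sum_pq / var_total)


# Hypothetical 0/1 item scores: 6 students x 6 items
scores = [
    [1, 1, 1, 1, 1, 1],
    [1, 1, 1, 1, 0, 1],
    [1, 1, 0, 1, 0, 0],
    [1, 0, 1, 0, 0, 0],
    [0, 1, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
]
print(f"Split-half (Spearman-Brown): {split_half_reliability(scores):.2f}")
print(f"KR-20: {kr20(scores):.2f}")
```

Both estimates come from a single administration. A different way of splitting the items can give a somewhat different split-half value, whereas KR-20 does not depend on how the halves are chosen.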

9. Validity ensures that the information being tested relates to the information that was actually taught.

10. Types of Validity

10.1. Content Validity Evidence

10.1.1. "The content validity evidence for a test is established by inspecting test questions to see whether they correspond to what the user decides should be covered by the test." (Kubiszyn & Borich, 2010, pg. 330)

10.2. Criterion Related Validity Evidence

10.2.1. "In establishing criterion-related validity evidence, scores from a test are correlated with an external criterion." (Kubiszyn & Borich, 2010, pg. 330)

10.2.1.1. Types of Criterion-Related Validity Evidence

10.2.1.1.1. Predictive

10.2.1.1.2. Concurrent

10.3. Construct Validity Evidence

10.3.1. "A test has construct validity evidence if its relationship to other information corresponds well with some theory." (Kubiszyn & Borich, 2010, pg. 332)