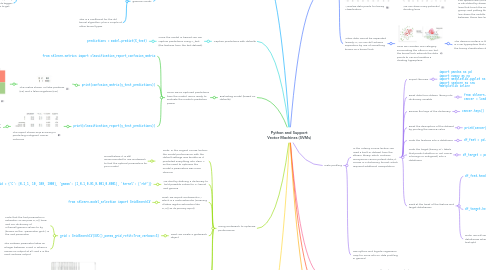

1. Train with default C, kernel and gamma values

1.1. After defining the X and y data and splitting it into the train/test subsets, we're ready to create the model

1.1.1. from sklearn.svm import SVC

1.1.2. model = SVC()

1.1.3. model.fit(X_train,y_train)

1.2. Looking at the parameters for SVC(), we can note the following options and their defaults

1.2.1. C=1.0

1.2.1.1. This is known as the regularization parameter

1.2.1.2. The main thing to note is that a larger C penalizes misclassified training points more heavily (the regularization strength is inversely proportional to C), which is useful when our model's predictions show an obvious bias

1.2.1.2.1. It serves as a tool to adjust the bias-variance trade-off in the model

1.2.2. kernel='rbf'

1.2.2.1. Specifies the kernel type to be used in the algorithm; in addition to the default 'rbf', the 4 other built-in options are 'linear', 'poly', 'sigmoid' and 'precomputed'

1.2.3. gamma='scale'

1.2.3.1. This can either be the string value 'scale' or 'auto', or it can be a float

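1.2.3.1.1. When setting a float, it may help to think of gamma as the inverse of the radius of influence of a single training example: a small gamma gives each point far-reaching influence (a smoother boundary), while a large gamma means each point only affects its close neighbors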

1.2.3.2. This is the kernel coefficient for the 'rbf', 'poly' and 'sigmoid' kernels
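As a hedged illustration of the defaults listed above, the sketch below reads them back with get_params() and reproduces the value that gamma='scale' resolves to, which the scikit-learn documentation gives as 1 / (n_features * X.var()). The names X_demo, y_demo, manual_gamma, scale_model and float_model are made up for this example, and the full built-in dataset is used only to keep the snippet self-contained.

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.svm import SVC

# Read the defaults back from an untrained model
defaults = SVC().get_params()
print(defaults['C'], defaults['kernel'], defaults['gamma'])

# Demo data (assumption: the full built-in dataset, no train/test split)
X_demo, y_demo = load_breast_cancer(return_X_y=True)

# Documented formula behind gamma='scale'
manual_gamma = 1.0 / (X_demo.shape[1] * X_demo.var())

# A model given that float explicitly should behave like one using 'scale'
scale_model = SVC(gamma='scale').fit(X_demo, y_demo)
float_model = SVC(gamma=manual_gamma).fit(X_demo, y_demo)
same_predictions = np.array_equal(scale_model.predict(X_demo),
                                  float_model.predict(X_demo))
print(manual_gamma, same_predictions)
```

Because 'scale' depends on the variance of the features, the effective gamma changes whenever the features are rescaled.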

2. Capture predictions with defaults

2.1. Once the model is trained, we can capture predictions using X_test (the features from the test dataset)

2.1.1. predictions = model.predict(X_test)

3. Evaluating model (based on defaults)

3.1. Once we've captured predictions from the model, we're ready to evaluate the model's predictive power

3.1.1. from sklearn.metrics import classification_report,confusion_matrix

3.1.2. print(confusion_matrix(y_test,predictions))

3.1.2.1. This matrix shows 10 false positives (FP) and 3 false negatives (FN)

3.1.2.1.1. Remember the layout of a confusion matrix
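As a reminder of that layout, here is a small sketch using toy labels invented for this example (they are not the course data). In scikit-learn's confusion_matrix, rows are the true labels and columns are the predicted labels, so a binary matrix reads [[TN, FP], [FN, TP]].

```python
from sklearn.metrics import confusion_matrix

# Toy labels, invented for this example (1 is treated as the positive class)
y_true_demo = [0, 0, 1, 1, 1, 0]
y_pred_demo = [0, 1, 1, 1, 0, 0]

cm = confusion_matrix(y_true_demo, y_pred_demo)
print(cm)

# Rows are true labels, columns are predicted labels:
# [[TN, FP],
#  [FN, TP]]
tn, fp, fn, tp = cm.ravel()
print('TN:', tn, 'FP:', fp, 'FN:', fn, 'TP:', tp)
```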

3.1.3. print(classification_report(y_test,predictions))

3.1.3.1. The report shows 92% overall accuracy in classifying tumors as malignant or benign

3.1.3.1.1. Note: this performance is much better than the one shown in the course lecture, because the default value for gamma has since changed from 'auto' to 'scale' (as of scikit-learn 0.22)
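To make the accuracy figure in the report concrete, the sketch below (toy labels again, invented for this example) checks that it is simply the share of correct predictions, (TP + TN) / total, and shows how to pull individual numbers out of the report with output_dict=True.

```python
from sklearn.metrics import accuracy_score, classification_report, confusion_matrix

# Toy labels, invented for this example
y_true_demo = [0, 0, 1, 1, 1, 0, 1, 1]
y_pred_demo = [0, 1, 1, 1, 0, 0, 1, 1]

# Accuracy computed by hand from the confusion matrix cells
tn, fp, fn, tp = confusion_matrix(y_true_demo, y_pred_demo).ravel()
manual_accuracy = (tp + tn) / (tp + tn + fp + fn)

# The same figure as reported by scikit-learn
report = classification_report(y_true_demo, y_pred_demo, output_dict=True)
report_accuracy = report['accuracy']
library_accuracy = accuracy_score(y_true_demo, y_pred_demo)

# Per-class metrics are keyed by the label as a string
recall_class_1 = report['1']['recall']
print(manual_accuracy, report_accuracy, library_accuracy, recall_class_1)
```

Accuracy alone can hide an imbalance between false positives and false negatives, which is why the notes look at the confusion matrix as well.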

4. Using Gridsearch to optimize performance

4.1. Note: in the original course lecture, the model performance with the default settings was terrible as it predicted everything into class 1, so the need to optimize the model's parameters was more obvious

4.1.1. Nonetheless, it is still recommended to use Gridsearch to find the optimal parameters for your model

4.2. We start by defining a dictionary to hold possible values for C, kernel and gamma

4.2.1. param_grid = {'C': [0.1,1, 10, 100, 1000], 'gamma': [1,0.1,0.01,0.001,0.0001], 'kernel': ['rbf']}

4.3. Next, we import GridSearchCV, which is a meta-estimator (meaning it takes regular estimators like SVC() as its primary input)

4.3.1. from sklearn.model_selection import GridSearchCV

4.4. Next, we create a gridsearch object

4.4.1. grid = GridSearchCV(SVC(),param_grid,refit=True,verbose=3)

4.4.1.1. Note that the first parameter is estimator, so we pass SVC() here, and our dictionary of C/kernel/gamma values to try (known as the "parameter grid") is the 2nd parameter

4.4.1.2. The verbose parameter takes an integer that controls how much is printed during fitting: 0 means no output at all, and higher values print more detail (at 3, the score of each fold is included)

4.5. Next, we train our gridsearch using the same training data we used when training the SVC

4.5.1. grid.fit(X_train,y_train)

4.5.1.1. Here's what the verbose output from the gridsearch training looks like

4.6. With the gridsearch trained, we can review the best estimator and best params found

4.6.1. grid.best_params_

4.6.1.1. returned the following:

4.6.1.1.1. {'C': 1, 'gamma': 0.0001, 'kernel': 'rbf'}

4.6.2. grid.best_estimator_

4.6.2.1. returned the following:

4.6.2.1.1. SVC(C=1, gamma=0.0001)
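Beyond best_params_ and best_estimator_, a fitted search also keeps the score of every combination it tried. The sketch below is one way to look at them; it assumes a reduced grid (6 combinations instead of 25) and the full built-in dataset purely so that it runs quickly, and the names small_grid, demo_search and results are made up for this example.

```python
import pandas as pd
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC

X_demo, y_demo = load_breast_cancer(return_X_y=True)

# Reduced grid (assumption: chosen only to keep the example fast)
small_grid = {'C': [0.1, 1, 10], 'gamma': [0.001, 0.0001], 'kernel': ['rbf']}

demo_search = GridSearchCV(SVC(), small_grid, refit=True, verbose=0)
demo_search.fit(X_demo, y_demo)

# One row per parameter combination, with its mean cross-validated score
results = pd.DataFrame(demo_search.cv_results_)
summary = results[['param_C', 'param_gamma', 'mean_test_score', 'rank_test_score']]
print(summary.sort_values('rank_test_score'))

# The best combination and its mean cross-validated score
print(demo_search.best_params_, demo_search.best_score_)
```

Note that best_score_ is a cross-validated score on the training data, so it will generally differ from the accuracy measured on the held-out test set.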

5. Compare the grid (i.e. optimized SVC model) to the original

5.1. grid_predictions = grid.predict(X_test)

5.1.1. Note how we can run predictions on the grid object, just like a normal model; because refit=True, it uses the best estimator retrained on the full training set

5.2. print(confusion_matrix(y_test,grid_predictions))

5.2.1. Confusion matrix shows 7 false positives and 4 false negatives

5.3. print(classification_report(y_test,grid_predictions))

5.3.1. Classification report shows 94% accuracy
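The comparison above can be reproduced end to end with a sketch like the following. The test_size, random_state and reduced grid are assumptions made for this example, so the numbers it prints will not necessarily match the 92% and 94% quoted in these notes.

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.metrics import accuracy_score
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.svm import SVC

X_demo, y_demo = load_breast_cancer(return_X_y=True)

# Assumption: a 70/30 split with a fixed seed, chosen for this sketch
X_tr, X_te, y_tr, y_te = train_test_split(
    X_demo, y_demo, test_size=0.3, random_state=101)

# Baseline: SVC with default C, kernel and gamma
baseline = SVC().fit(X_tr, y_tr)

# Tuned: grid search over a reduced grid (assumption, to keep it fast)
small_grid = {'C': [0.1, 1, 10], 'gamma': [0.001, 0.0001], 'kernel': ['rbf']}
tuned = GridSearchCV(SVC(), small_grid, refit=True).fit(X_tr, y_tr)

baseline_acc = accuracy_score(y_te, baseline.predict(X_te))
tuned_acc = accuracy_score(y_te, tuned.predict(X_te))
print('default:', baseline_acc, 'tuned:', tuned_acc)

# With refit=True, predict() on the search object delegates to best_estimator_
delegates = np.array_equal(tuned.predict(X_te),
                           tuned.best_estimator_.predict(X_te))
print(delegates)
```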

6. Theory

6.1. SVMs are supervised learning models that can be used for both classification and regression analysis

6.1.1. Note: this mind map is based around using SVM for classification analysis

6.2. SVMs seek to divide data points into binary classification areas that can be separated by a clear gap

6.2.1. The idea is to maximize the gap between the two classes and classify new data points based on which side of the gap they fall on

6.3. Visualize data points for binary classification

6.3.1. We can draw many potential dividing lines

6.3.1.1. The optimal line (a.k.a. hyperplane) is found by drawing "support" lines that touch the edges of each group, and placing the dividing line down the middle of the gap between those two lines

6.3.1.1.1. The data points (or vectors) where the support lines touch are known as support vectors, and this is where the SVM name comes from

6.3.1.1.2. Here, the dotted lines are the support lines and the grey line is the optimal dividing line
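The geometry described above can be checked on a tiny example. This is a sketch on six made-up 2D points, using a linear kernel and a very large C to approximate a hard margin; for a decision function of the form w·x + b, the support lines sit at ±1 and the gap between them is 2 / ||w||.

```python
import numpy as np
from sklearn.svm import SVC

# Six made-up points forming two clearly separated groups
pts = np.array([[0, 0], [1, 0], [0, 1],
                [3, 3], [4, 3], [3, 4]], dtype=float)
labels = np.array([0, 0, 0, 1, 1, 1])

# A very large C approximates a hard-margin SVM on separable data
linear_model = SVC(kernel='linear', C=1e6).fit(pts, labels)

# The points touched by the support lines
print(linear_model.support_vectors_)

# Width of the gap between the two support lines
w = linear_model.coef_[0]
margin_width = 2.0 / np.linalg.norm(w)
print(margin_width)

# New points are classified by the side of the dividing line they fall on
new_labels = linear_model.predict([[0.5, 0.5], [3.5, 3.5]])
print(new_labels)
```

Only the points on the edges of each group end up in support_vectors_; moving any of the other points slightly would not change the dividing line.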

6.4. When data cannot be separated linearly, SVMs can still achieve separation by use of something known as a kernel trick

6.4.1. Here we visualize one category surrounding the other in 2D, but the kernel trick extends the data points to 3D and enables a dividing hyperplane

6.4.1.1. The decision surface in the image is a 2D hyperplane that separates the binary classification data points
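The idea of lifting the points into 3D can be sketched by hand. The example below uses synthetic concentric rings from make_circles (not the course data) and adds the squared distance from the origin as a third feature; this explicit feature is an illustration chosen for this sketch, whereas the 'rbf' kernel achieves a comparable effect implicitly without building the extra dimension.

```python
import numpy as np
from sklearn.datasets import make_circles
from sklearn.svm import SVC

# Synthetic data: one class forms a ring around the other
X_2d, y_rings = make_circles(n_samples=200, factor=0.3, noise=0.05,
                             random_state=0)

# A straight line in 2D cannot separate the rings
flat_acc = SVC(kernel='linear').fit(X_2d, y_rings).score(X_2d, y_rings)

# Lift to 3D: third feature is the squared distance from the origin
z = (X_2d ** 2).sum(axis=1)
X_3d = np.column_stack([X_2d, z])
lifted_acc = SVC(kernel='linear').fit(X_3d, y_rings).score(X_3d, y_rings)

# The rbf kernel handles the original 2D data directly
rbf_acc = SVC(kernel='rbf').fit(X_2d, y_rings).score(X_2d, y_rings)

print('linear, 2D:', flat_acc)
print('linear, 3D:', lifted_acc)
print('rbf, 2D:', rbf_acc)
```

The scores here are measured on the training points themselves, which is enough to show separability but is not an estimate of predictive performance.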

7. Data profiling

7.1. In the Udemy course lecture, we used a built-in dataset from the sklearn library, which contains anonymized cancer patient data; it comes in a dictionary-like format (a Bunch object), which required additional manipulation

7.1.1. Import libraries

7.1.1.1. import pandas as pd

7.1.1.2. import numpy as np

7.1.1.3. import matplotlib.pyplot as plt

7.1.1.4. import seaborn as sns

7.1.1.5. %matplotlib inline

7.1.2. Read data from sklearn library into dictionary variable

7.1.2.1. from sklearn.datasets import load_breast_cancer

7.1.2.2. cancer = load_breast_cancer()

7.1.3. Review the keys of the dictionary

7.1.3.1. cancer.keys()

7.1.3.1.1. dict_keys(['data', 'target', 'frame', 'target_names', 'DESCR', 'feature_names', 'filename'])

7.1.4. Read the description of the dataset by printing the DESCR value

7.1.4.1. print(cancer['DESCR'])

7.1.4.1.1. This returns scrollable text output that describes the dataset's features (30 numeric attributes)

7.1.5. Grab the features into a dataframe

7.1.5.1. df_feat = pd.DataFrame(cancer['data'],columns=cancer['feature_names'])

7.1.6. Grab the target (binary 0/1 labels indicating whether the tumor is malignant or benign) into a dataframe

7.1.6.1. df_target = pd.DataFrame(cancer['target'],columns=['Cancer'])

7.1.7. Peek at the head of the feature and target dataframes

7.1.7.1. df_feat.head()

7.1.7.1.1. There are 30 features (all numeric)

7.1.7.2. df_target.head()

7.1.7.2.1. The target is a binary 0 or 1, where 0 means the patient's tumor is malignant and 1 means it is benign (see cancer['target_names'])

7.1.7.3. Note: we will use these two dataframes when doing the train test split
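The profiling steps above can be run together as a single sketch. It also prints the label names and class counts, which is a quick way to confirm which class each of 0 and 1 stands for; the names label_names and class_counts are made up for this example.

```python
import pandas as pd
from sklearn.datasets import load_breast_cancer

cancer = load_breast_cancer()

# Features and target as dataframes, as in the steps above
df_feat = pd.DataFrame(cancer['data'], columns=cancer['feature_names'])
df_target = pd.DataFrame(cancer['target'], columns=['Cancer'])
print(df_feat.shape, df_target.shape)

# The position in target_names is the numeric label (index 0, index 1)
label_names = list(cancer['target_names'])
print(label_names)

# Number of rows per class
class_counts = df_target['Cancer'].value_counts().sort_index()
print(class_counts)
```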

7.2. See Python and logistic regression map for more info on data profiling in general

8. Exploratory Data Analysis (EDA)

8.1. In the Udemy course lecture, we didn't do any EDA on this dataset as it requires some domain knowledge to make sense of it

8.1.1. See Python and logistic regression map for more info on EDA in general

9. Data cleaning

9.1. In the Udemy course lecture, we didn't do any data cleaning for SVM

9.1.1. See Python and logistic regression map for more info on data cleaning in general

10. Define X and y arrays, and split the data

10.1. from sklearn.model_selection import train_test_split

10.2. X = df_feat

10.3. y = np.ravel(df_target)

10.3.1. Note: the numpy ravel() function flattens the single-column dataframe into a 1-dimensional array, which is the shape scikit-learn estimators expect for y when fitting (train_test_split() itself accepts either shape)
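Section 1.1 assumes that X_train, X_test, y_train and y_test already exist. A minimal sketch of the split that would produce them is shown below; the test_size and random_state values are assumptions made for this example, not values taken from these notes.

```python
import numpy as np
import pandas as pd
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split

# Rebuild the two dataframes from the data profiling section
cancer = load_breast_cancer()
df_feat = pd.DataFrame(cancer['data'], columns=cancer['feature_names'])
df_target = pd.DataFrame(cancer['target'], columns=['Cancer'])

X = df_feat
y = np.ravel(df_target)  # 1-dimensional array of labels

# Assumption: 30% of rows held out for testing, fixed seed for repeatability
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=101)

print(X_train.shape, X_test.shape, y_train.shape, y_test.shape)
```

Fixing random_state makes the split repeatable, so the confusion matrix and classification report can be compared between runs.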