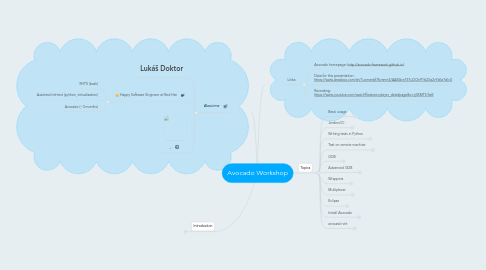

1. About me

1.1. Lukáš Doktor

1.2. Happy Software Engineer at Red Hat

1.2.1. RHTS (bash)

1.2.2. Autotest/virt-test (python, virtualization)

1.2.3. Avocado (~3 months)

2. Introduction

2.1. Avocado is a next generation testing framework inspired by Autotest and modern development tools such as git. Whether you want to automate the test plan made by your development team, do continuous integration, or develop quick tests for the feature you're working on, avocado delivers the tools to help you out.

2.2. What is Avocado for me

2.2.1. A program that stores the results of anything I want

2.2.2. A binary that helps me execute anything in various environments

2.2.3. A set of Python* libraries that simplify writing tests

2.2.4. Something easily integrated with Jenkins (or another CI system)

2.2.5. A tool that lets me share the results

2.2.6. Most importantly, a tool that lets me do all of the above in all possible variants

2.3. Avocado structure

3. Topics

3.1. Basic usage

3.1.1. Execute binary

3.1.1.1. ./scripts/avocado run /bin/true

3.1.1.1.1. When installed, use just "avocado run /bin/true"

3.1.1.2. ./scripts/avocado run /bin/true /bin/false

3.1.1.3. ./scripts/avocado run passtest failtest warntest skiptest errortest sleeptest

3.1.2. Help and docs

3.1.2.1. ./scripts/avocado -h

3.1.2.2. ./scripts/avocado run -h

3.1.2.3. ...

3.1.2.4. cd docs && make html

3.1.3. Execute binary and compare outputs

3.1.3.1. Used for tests that should always produce the same stdout/stderr

3.1.3.2. Can be combined with other plugins (run inside GDB, ...)

3.1.3.3. ./scripts/avocado run ./DEVCONF/Basic_usage/output_compare.sh

3.1.3.4. ./scripts/avocado run ./DEVCONF/Basic_usage/output_compare.sh --output-check-record all

3.1.3.5. ./scripts/avocado run ./DEVCONF/Basic_usage/output_compare.sh

3.1.3.6. # modify the file

3.1.3.7. ./scripts/avocado run ./DEVCONF/Basic_usage/output_compare.sh

3.1.3.8. ./scripts/avocado run ./DEVCONF/Basic_usage/output_compare.sh --disable-output-check

3.1.3.9. # exit -1

3.1.3.10. ./scripts/avocado run ./DEVCONF/Basic_usage/output_compare.sh

3.1.3.11. ./scripts/avocado run ./DEVCONF/Basic_usage/output_compare.sh --disable-output-check
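A rough model of what the output check does, written as a standalone Python sketch rather than Avocado's actual implementation: record stdout/stderr once, then compare later runs against the recorded copies. The `run_and_check` helper and the `stdout.expected`/`stderr.expected` file names are assumptions made for illustration. As in the demo above, a non-zero exit code still fails the run when the output check is disabled.

```python
import subprocess
from pathlib import Path


def run_and_check(cmd, data_dir, record=False, check_output=True):
    """Run cmd and compare its stdout/stderr with recorded *.expected files.

    Returns "PASS" or "FAIL". The file names are an assumption modelled
    on Avocado's output check, not its real code.
    """
    proc = subprocess.run(cmd, capture_output=True, text=True)
    data_dir = Path(data_dir)
    outputs = {"stdout": proc.stdout, "stderr": proc.stderr}
    if record:
        # Similar in spirit to --output-check-record all.
        data_dir.mkdir(parents=True, exist_ok=True)
        for name, text in outputs.items():
            (data_dir / f"{name}.expected").write_text(text)
    if proc.returncode != 0:
        # A non-zero exit fails the test even with the output check disabled.
        return "FAIL"
    if check_output:
        for name, text in outputs.items():
            expected = data_dir / f"{name}.expected"
            if expected.exists() and expected.read_text() != text:
                return "FAIL"
    return "PASS"
```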

3.1.4. Execute binary and parse results using wrapper

3.1.4.1. Used to run the same steps with various wrappers

3.1.4.2. For example, run a complex test suite under perf/strace/... (see the Wrappers section)

3.1.4.3. Alternatively, use it to "translate" the results of existing binaries for Avocado (shown here)

3.1.4.4. DEVCONF/Basic_usage/testsuite.sh

3.1.4.4.1. a nasty test suite that doesn't report its results through the exit code

3.1.4.5. ./scripts/avocado run DEVCONF/Basic_usage/testsuite.sh

3.1.4.6. ./scripts/avocado run DEVCONF/Basic_usage/testsuite.sh --wrapper DEVCONF/Basic_usage/testsuite-wrapper.sh:*testsuite.sh

3.1.4.7. # remove FAIL test

3.1.4.8. ./scripts/avocado run DEVCONF/Basic_usage/testsuite.sh --wrapper DEVCONF/Basic_usage/testsuite-wrapper.sh:*testsuite.sh

3.1.4.9. # remove WARN test

3.1.4.10. ./scripts/avocado run DEVCONF/Basic_usage/testsuite.sh --wrapper DEVCONF/Basic_usage/testsuite-wrapper.sh:*testsuite.sh

3.1.4.11. More details in the Wrappers section
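The translating wrapper can be sketched in Python. Assumptions: Avocado invokes the wrapper with the wrapped command line as its arguments, and the legacy suite prints one result per line starting with `PASS`, `FAIL` or `WARN`. The prefixes and the `exit_code_from_output` helper are hypothetical; the sketch only turns those lines into an exit code Avocado can judge and does not model a separate WARN status.

```python
import subprocess
import sys

# Hypothetical result keywords printed by the legacy test suite.
BAD_PREFIXES = ("FAIL", "WARN")


def exit_code_from_output(output):
    """Return 1 if any line reports FAIL or WARN, otherwise 0."""
    for line in output.splitlines():
        if line.strip().startswith(BAD_PREFIXES):
            return 1
    return 0


def main(argv):
    """Run the wrapped command, echo its output, derive an exit code."""
    proc = subprocess.run(argv, capture_output=True, text=True)
    sys.stdout.write(proc.stdout)
    sys.stderr.write(proc.stderr)
    # Keep a real non-zero exit; otherwise judge by the printed results.
    return proc.returncode or exit_code_from_output(proc.stdout)


if __name__ == "__main__" and len(sys.argv) > 1:
    # Invoked as: wrapper.py <wrapped binary> [args...]
    sys.exit(main(sys.argv[1:]))
```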

3.1.5. Multiplex using ENV

3.1.5.1. ./scripts/avocado run examples/tests/env_variables.sh

3.1.5.2. ./scripts/avocado run examples/tests/env_variables.sh -m examples/tests/env_variables.sh.data/env_variables.yaml

3.1.5.3. ./scripts/avocado multiplex

3.1.5.3.1. -c

3.1.5.3.2. -d

3.1.5.3.3. -t

3.1.5.3.4. -h ;-)

3.1.5.4. This is pretty close to QA, right?

3.1.5.5. More details in the Multiplexer section
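Conceptually, each multiplexed variant is one more run of the same script with a different environment. A minimal sketch of that loop, assuming each variant is a flat dict of params exported under their own names; `run_variants` and the variable name `CUSTOM_VARIABLE` are hypothetical, and Avocado's real export rules may differ.

```python
import os
import subprocess


def run_variants(cmd, variants):
    """Run cmd once per variant, exporting the variant's params as env vars.

    Returns a list of (params, exit_code, stdout) tuples.
    """
    results = []
    for params in variants:
        env = dict(os.environ)
        # Environment values must be strings.
        env.update({key: str(value) for key, value in params.items()})
        proc = subprocess.run(cmd, env=env, capture_output=True, text=True)
        results.append((params, proc.returncode, proc.stdout))
    return results
```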

3.1.6. Grab sysinfo

3.1.6.1. ./scripts/avocado run passtest --open-browser

3.2. Jenkins/CI

3.2.1. Jenkins?

3.2.1.1. Jenkins

3.2.2. Quick&dirty setup

3.2.3. avocado simple

3.2.4. avocado parametrized

3.2.5. avocado lots of tests

3.2.6. avocado multi

3.2.6.1. multi scenarios

3.2.6.2. multi archs

3.2.6.3. multi workers (OSs)

3.2.7. avocado git poll
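Jenkins usually consumes results as xUnit/JUnit XML, and Avocado ships an xUnit result plugin (`--xunit`). To show what such a report contains, here is a sketch that builds a minimal file from (name, status, seconds) tuples; `to_xunit` is a hypothetical helper, the output is not Avocado's exact format, and counting WARN as a pass is an assumption of this sketch.

```python
import xml.etree.ElementTree as ET


def to_xunit(suite_name, results):
    """Build a minimal JUnit-style XML report.

    results: iterable of (test_name, status, seconds); statuses other
    than FAIL, ERROR and SKIP are treated as passes.
    """
    results = list(results)
    failures = sum(1 for _, s, _ in results if s == "FAIL")
    errors = sum(1 for _, s, _ in results if s == "ERROR")
    skipped = sum(1 for _, s, _ in results if s == "SKIP")
    suite = ET.Element("testsuite", name=suite_name, tests=str(len(results)),
                       failures=str(failures), errors=str(errors),
                       skipped=str(skipped))
    for name, status, seconds in results:
        case = ET.SubElement(suite, "testcase", name=name, time=f"{seconds:.3f}")
        if status == "FAIL":
            ET.SubElement(case, "failure", message=status)
        elif status == "ERROR":
            ET.SubElement(case, "error", message=status)
        elif status == "SKIP":
            ET.SubElement(case, "skipped")
    return ET.tostring(suite, encoding="unicode")
```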

3.3. Writing tests in Python

3.3.1. Can be executed as

3.3.1.1. Inside Avocado

3.3.1.1.1. ./scripts/avocado run examples/tests/passtest.py

3.3.1.2. As python scripts

3.3.1.2.1. examples/tests/passtest.py

3.3.2. Logging

3.3.2.1. Possibility to set test as WARN

3.3.2.1.1. self.log.warning("In the state of Denmark there was an odor of decay")

3.3.2.1.2. See warntest test

3.3.2.2. Log more important steps

3.3.2.2.1. self.log.info("I successfully executed this important step with %s args", args)

3.3.2.2.2. self.log.debug("Executing iteration %s", iteration)

3.3.2.2.3. self.log.warning("You already know me")

3.3.2.2.4. self.log.error("Everyone should check these messages on failure")

3.3.2.2.5. stdout

3.3.2.2.6. stderr
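The calls above use the standard `logging` API; `self.log` in a test is assumed here to be an ordinary `logging.Logger`. The standalone sketch below replays the same steps against an in-memory handler and passes `%s` arguments separately, so the message is only formatted when a record is actually emitted. `ListHandler` and `run_steps` are hypothetical names.

```python
import logging


class ListHandler(logging.Handler):
    """Collect log records in memory so they can be inspected later."""

    def __init__(self):
        super().__init__()
        self.records = []

    def emit(self, record):
        self.records.append(record)


def run_steps(log, iterations, args):
    """Log the same kinds of messages as the outline items above."""
    log.info("I successfully executed this important step with %s args", args)
    for iteration in range(iterations):
        log.debug("Executing iteration %s", iteration)
    log.warning("You already know me")
```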

3.3.3. Whiteboard

3.3.3.1. ./scripts/avocado run examples/tests/whiteboard.py

3.3.3.2. cat ~/avocado/job-results/latest/test-results/examples/tests/whiteboard.py/whiteboard
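The whiteboard is a free-form string that a test fills in and that ends up next to the test results, as the `cat` above shows. A sketch of the idea with a hypothetical stand-in class (no Avocado import), storing JSON so that other tools can parse it back; `WhiteboardDemo` and `save_whiteboard` are illustrative names only.

```python
import json
from pathlib import Path


class WhiteboardDemo:
    """Hypothetical stand-in for a test; only .whiteboard mimics Avocado."""

    def __init__(self):
        self.whiteboard = ""

    def run(self, samples):
        # Keep machine-readable data for later tools, here JSON-encoded.
        self.whiteboard = json.dumps({"samples": samples, "max": max(samples)})


def save_whiteboard(test, results_dir):
    """Write the whiteboard string into a file named "whiteboard"."""
    path = Path(results_dir) / "whiteboard"
    path.write_text(test.whiteboard)
    return path
```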

3.3.4. Advanced usage of params

3.3.4.1. See Multiplexer section

3.3.5. Advanced GDB

3.3.5.1. See GDB section

3.3.6. Avocado-virt

3.3.6.1. See Avocado-virt section

3.4. Test on remote machine

3.4.1. remote

3.4.1.1. ./scripts/avocado run passtest --remote-hostname 192.168.122.235 --remote-username root --remote-password 123456

3.4.1.1.1. Connects

3.4.1.1.2. Copies the test directories

3.4.1.1.3. Runs Avocado inside the machine with --json --archive

3.4.1.1.4. Grabs and stores the results

3.4.1.1.5. Reports the results locally

3.4.1.2. --remote-no-copy

3.4.2. vm

3.4.2.1. Run in a libvirt machine

3.4.2.2. ./scripts/avocado run examples/tests/passtest.py importerror.py corrupted.py pass.py passtest --vm-domain vm1 --vm-hostname 192.168.122.235 --vm-username root --vm-password 123456 --vm-cleanup

3.4.2.2.1. Checks if the domain exists

3.4.2.2.2. Starts it if it is not already running

3.4.2.2.3. (--vm-cleanup) Creates a snapshot

3.4.2.2.4. Calls the remote test

3.4.2.2.5. (--vm-cleanup) Restores the snapshot

3.4.2.3. --vm-cleanup

3.4.2.4. --vm-no-copy
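The `--vm-*` steps map naturally onto the libvirt Python bindings. A sketch, assuming `dom` is a `libvirt.virDomain` obtained with `conn.lookupByName(name)` (which raises if the domain does not exist) and that `run_remote_tests` is a hypothetical callable standing in for the remote execution; the snapshot name is made up. Only duck-typed method calls are used, so the logic can be exercised without libvirt.

```python
# Hypothetical snapshot name used only for this sketch.
SNAPSHOT_XML = "<domainsnapshot><name>avocado-cleanup</name></domainsnapshot>"


def run_in_vm(dom, run_remote_tests, cleanup=True):
    """Run tests in a libvirt domain, optionally restoring it afterwards.

    dom is expected to behave like libvirt.virDomain; run_remote_tests is
    a hypothetical callable that performs the remote run and returns its
    result.
    """
    if not dom.isActive():
        dom.create()  # boot the domain if it is not running yet
    snapshot = None
    if cleanup:
        # Similar in spirit to --vm-cleanup: remember the current state.
        snapshot = dom.snapshotCreateXML(SNAPSHOT_XML, 0)
    try:
        return run_remote_tests()
    finally:
        if snapshot is not None:
            dom.revertToSnapshot(snapshot, 0)  # discard changes made by the tests
            snapshot.delete(0)  # remove the temporary snapshot
```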

3.4.3. docker plugin

3.4.3.1. First, think about what we require

3.4.3.1.1. docker run -v $LOCAL_PATH:$CONT_PATH $IMAGE avocado run $tests

3.4.3.1.2. grab the results

3.4.3.1.3. we don't require any remote interaction, only simple execution

3.4.3.2. plugin initialization

3.4.3.2.1. 1) name, status

3.4.3.2.2. 2) configuration hook

3.4.3.2.3. 3) activation hook

3.4.3.2.4. class RunDocker

3.4.3.3. Prerequisites

3.4.3.3.1. prepare docker cmd

3.4.3.3.2. copy tests to shared location

3.4.3.3.3. class DockerTestResult

3.4.3.4. Actual execution

3.4.3.4.1. 1) Execute the tests

3.4.3.4.2. 2) Grab the results

3.4.3.4.3. class DockerTestRunner
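The prerequisites and the execution command can be sketched as plain Python, independent of the plugin classes named above. `prepare_docker_run`, the image name and the container path are hypothetical; the command line mirrors the `docker run -v $LOCAL_PATH:$CONT_PATH $IMAGE avocado run $tests` template. Grabbing the results afterwards is not shown.

```python
import shutil
from pathlib import Path, PurePosixPath


def prepare_docker_run(image, tests, local_path, cont_path):
    """Copy tests into the shared directory and build the docker command."""
    local = Path(local_path)
    local.mkdir(parents=True, exist_ok=True)
    container_tests = []
    for test in tests:
        src = Path(test)
        shutil.copy2(src, local / src.name)
        # The same file as seen from inside the container.
        container_tests.append(str(PurePosixPath(cont_path) / src.name))
    return ["docker", "run", "-v", f"{local}:{cont_path}", image,
            "avocado", "run", *container_tests]
```

The returned list can be passed straight to `subprocess.run`, which avoids any shell quoting issues with test paths.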

3.5. GDB

3.5.1. ./scripts/avocado run DEVCONF/GDB/doublefree*.py

3.5.2. ./scripts/avocado run --gdb-run-bin=doublefree: DEVCONF/GDB/doublefree.py

3.5.2.1. backtrace

3.5.2.2. ...

3.5.3. ./scripts/avocado run --gdb-run-bin=doublefree DEVCONF/GDB/doublefree2.py

3.5.3.1. without ':' it stops at the beginning

3.5.3.2. we can use `ddd` or other GDB compatible programs

3.5.3.3. don't forget to resume the test (FIFO)

3.5.4. ./scripts/avocado run --gdb-run-bin=doublefree: DEVCONF/GDB/doublefree3.py --gdb-prerun-commands DEVCONF/GDB/doublefree3.py.data/gdb_pre --multiplex DEVCONF/GDB/doublefree3.py.data/iterations.yaml

3.5.4.1. OK, we reached the "handle_exception" function we want to investigate

3.5.4.2. n

3.5.4.3. n

3.5.4.4. jump +1

3.5.4.5. Works fine, hurray

3.6. Advanced GDB

3.6.1. vim DEVCONF/Advanced_GDB/modify_variable.py.data/doublefree.c

3.6.2. ./scripts/avocado run DEVCONF/Advanced_GDB/modify_variable.py --show-job-log

3.7. Wrappers

3.7.1. Valgrind

3.7.1.1. ./scripts/avocado run DEVCONF/Wrappers/doublefree.py --wrapper examples/wrappers/valgrind.sh:*doublefree --open-browser

3.7.1.2. cat ~/avocado/job-results/latest/test-results/doublefree.py/valgrind.log.*

3.7.2. Strace

3.7.2.1. Works the same way as valgrind

3.7.2.2. Imagine, for example, a QEMU machine running Windows that crashes under a very complex workload defined in the test

3.7.3. Other

3.7.3.1. ltrace

3.7.3.2. perf

3.7.3.3. strace

3.7.3.4. time

3.7.3.5. valgrind

3.7.4. Custom wrappers

3.7.4.1. qemu on PPC

3.7.4.2. wrap executed programs

3.7.4.3. grab results
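A custom wrapper does not have to be a shell script. A minimal Python sketch that runs the wrapped program, logs its exit code and duration, and passes the exit code through so the test status is unaffected. The `wrap` helper, the `WRAPPER_LOG` variable and the log format are assumptions, not something Avocado provides.

```python
import os
import subprocess
import sys
import time


def wrap(argv, log_path):
    """Run argv, append its exit code and duration to log_path, return the code."""
    start = time.monotonic()
    returncode = subprocess.call(argv)
    elapsed = time.monotonic() - start
    with open(log_path, "a") as log:
        log.write(f"{' '.join(argv)} rc={returncode} elapsed={elapsed:.3f}s\n")
    return returncode


if __name__ == "__main__" and len(sys.argv) > 1:
    # WRAPPER_LOG is a hypothetical variable chosen for this sketch.
    sys.exit(wrap(sys.argv[1:], os.environ.get("WRAPPER_LOG", "wrapper.log")))
```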

3.8. Multiplexer

3.8.1. Motivation

3.8.1.1. QA Engineer walks into a bar. Orders a beer. Orders 0 beers. Orders 999999999 beers. Orders a lizard. Orders -1 beers. Orders a sfdeljknesv

3.8.1.1.1. As a multiplex file:

    by_order:
        a_beer:
            msg: a beer
        0_beers:
            msg: 0 beers
        999999999:
            msg: 999999999 beers
        lizard:
            msg: lizard
        negative:
            msg: -1 beers
        noise:
            msg: sfdeljknesv

3.8.2. How would you solve our problem?

3.8.2.1. !multiplex vs. !join

3.8.2.1.1. Example tree:

    virt:
        hw:
            cpu:
                intel:
                amd:
                arm:
            fmt: !join
                qcow:
                    qcow2:
                    qcow2v3:
                raw:
        os: !join
            linux: !join
                Fedora:
                    19:
                Gentoo:
            windows:
                3.11:

3.8.2.2. map tests to test->files
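The core of multiplexing is a Cartesian product over groups of alternatives. The toy model below uses its own `alt` (each child is an alternative) and `combine` (take one variant from every child) node kinds; it illustrates the expansion only and is not a definition of Avocado's `!multiplex`/`!join` semantics. `variants` is a hypothetical helper.

```python
from itertools import chain, product


def variants(node):
    """Expand a toy tree into variants (lists of leaf names).

    node is a leaf name (str) or a (kind, children) tuple where kind is
    "alt" (each child is an alternative) or "combine" (pick one variant
    from every child and merge them).
    """
    if isinstance(node, str):
        return [[node]]
    kind, children = node
    expanded = [variants(child) for child in children]
    if kind == "alt":
        # Alternatives simply add up.
        return [variant for child in expanded for variant in child]
    if kind == "combine":
        # Cartesian product: one variant from each child, concatenated.
        return [list(chain.from_iterable(combo)) for combo in product(*expanded)]
    raise ValueError(f"unknown node kind: {kind!r}")
```

For instance, combining `("alt", ["intel", "amd", "arm"])` with `("alt", ["qcow2", "qcow2v3", "raw"])` yields 9 variants, starting with `["intel", "qcow2"]`, while the beer example is a single group of 6 alternatives.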

3.8.3. Usage

3.8.3.1. ./scripts/avocado multiplex -h

3.8.3.2. ./scripts/avocado multiplex -t

3.8.3.2.1. ./scripts/avocado multiplex -t DEVCONF/Multiplexer/nomux.yaml

3.8.3.2.2. ./scripts/avocado multiplex -t DEVCONF/Multiplexer/simple.yaml

3.8.3.2.3. ./scripts/avocado multiplex -t DEVCONF/Multiplexer/advanced.yaml

3.8.3.3. ./scripts/avocado multiplex -c

3.8.3.3.1. ./scripts/avocado multiplex -c DEVCONF/Multiplexer/simple.yaml

3.8.3.3.2. ./scripts/avocado multiplex -c DEVCONF/Multiplexer/advanced.yaml

3.8.3.4. ./scripts/avocado multiplex -cd examples/mux-selftest-advanced.yaml

3.8.3.4.1. e.g. variant: 21

3.8.3.5. ./scripts/avocado run examples/tests/sleeptenmin.py -m DEVCONF/Multiplexer/simple.yaml

3.8.3.6. ./scripts/avocado run examples/tests/sleeptenmin.py -m DEVCONF/Multiplexer/advanced.yaml

3.8.3.7. ./scripts/avocado multiplex --filter-only/--filter-out

3.8.3.7.1. ./scripts/avocado multiplex -c DEVCONF/Multiplexer/advanced.yaml --filter-only /by_method/shell

3.8.3.7.2. 2nd level filters
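A toy model of the two filters operating on flattened leaf paths. The semantics are an assumption for illustration: `filter_only` drops the siblings of the kept node, `filter_out` drops the node and everything below it, and all other branches stay intact. Both helpers are hypothetical.

```python
def filter_only(leaf_paths, keep):
    """Drop the siblings of `keep`; leaves outside its parent are untouched."""
    parent = keep.rsplit("/", 1)[0] + "/"
    return [p for p in leaf_paths
            if not p.startswith(parent) or p == keep or p.startswith(keep + "/")]


def filter_out(leaf_paths, drop):
    """Drop `drop` and every leaf below it."""
    return [p for p in leaf_paths if p != drop and not p.startswith(drop + "/")]
```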

3.8.3.8. Additional tags

3.8.3.8.1. !using

3.8.3.8.2. !include

3.8.3.8.3. !remove_node

3.8.3.8.4. !remove_value

3.8.3.8.5. !join

3.8.4. Let's go crazy

3.8.4.1. ./scripts/avocado multiplex DEVCONF/Multiplexer/crazy.yaml

3.8.5. Future

3.8.5.1. Currently, the environment is updated only from the leaves that are used

3.8.5.1.1. For example:

    by_something:
        first:
            foo: bar
    by_whatever:
        something:
            foo: baz

3.8.5.2. Soon we want to provide the whole layout

3.8.5.2.1. by_something: first: hello: world foo: bar by_whatever: something: foo: baz

3.8.5.3. Automatic multiplexing

3.8.5.3.1. 1) without multiplexing

3.8.5.3.2. 2) multiplexing with the test's own file

3.8.5.3.3. 3) multiplexing with a global file
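The difference between the current and the planned behaviour can be modelled on a nested dict in which dict values are child nodes and everything else is a param. This sketch reflects one reading of the layout example above, in which a value such as `hello: world` sits on an inner node rather than on a leaf; the helper names are hypothetical.

```python
def split(node):
    """Separate a node's own params from its child nodes."""
    params = {k: v for k, v in node.items() if not isinstance(v, dict)}
    children = {k: v for k, v in node.items() if isinstance(v, dict)}
    return params, children


def leaf_params(tree, path):
    """Current behaviour: only the params stored on the leaf itself."""
    node = tree
    for name in path:
        node = node[name]
    return split(node)[0]


def layout_params(tree, path):
    """Planned behaviour: params collected from the root down to the leaf.

    Deeper nodes override values set higher up.
    """
    node = tree
    merged = dict(split(node)[0])
    for name in path:
        node = node[name]
        merged.update(split(node)[0])
    return merged
```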

3.9. Eclipse

3.9.1. Debugging

3.9.2. Remote debugging

3.9.2.1. download pydev

3.9.2.2. copy it to $PYTHONPATH

3.9.2.2.1. or add the path in code:

    import sys
    sys.path.append('$PATH_TO_YOUR_PYDEV')

3.9.2.3. Attach to Eclipse:

    import pydevd
    pydevd.settrace("$ECLIPSE_IP_ADDR", True, True)

3.9.2.3.1. 1st True = stdoutToServer: forward stdout (optional)

3.9.2.3.2. 2nd True = stderrToServer: forward stderr (optional)

3.10. Install Avocado

3.10.1. Using from sources

3.10.1.1. # Uninstall previously installed versions

3.10.1.2. git clone https://github.com/avocado-framework/avocado.git

3.10.1.3. cd avocado

3.10.1.4. ./scripts/avocado ...

3.10.2. git

3.10.2.1. git clone https://github.com/avocado-framework/avocado.git

3.10.2.2. # pip install -r requirements.txt

3.10.2.3. python setup.py install

3.10.2.4. avocado ...

3.10.3. rpm

3.10.3.1. sudo curl http://copr.fedoraproject.org/coprs/lmr/Autotest/repo/fedora-20/lmr-Autotest-fedora-20.repo -o /etc/yum.repos.d/autotest.repo

3.10.3.2. sudo yum update

3.10.3.3. sudo yum install avocado

3.10.3.4. avocado ...

3.10.4. deb

3.10.4.1. echo "deb http://ppa.launchpad.net/lmr/avocado/ubuntu trusty main" | sudo tee -a /etc/apt/sources.list

3.10.4.2. sudo apt-get update

3.10.4.3. sudo apt-get install avocado

3.10.4.4. avocado ...

3.11. avocado-virt

3.11.1. Currently only a demonstration of Avocado's flexibility

3.11.2. using git version

3.11.2.1. 1) git clone $avocado

3.11.2.2. 2) git clone $avocado-virt

3.11.2.3. 3) git clone $avocado-virt-tests

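3.11.2.4. Link the virt library into the avocado tree:

    cd avocado/avocado
    ln -s ../../avocado-virt/avocado/virt virt
    cd -

3.11.2.5. Link the plugins (three levels up from avocado/avocado/plugins):

    cd avocado/avocado/plugins
    ln -s ../../../avocado-virt/avocado/plugins/virt.py virt.py
    ln -s ../../../avocado-virt/avocado/plugins/virt_bootstrap.py virt_bootstrap.py
    cd -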

3.11.3. using installed version

3.11.3.1. python setup.py install

3.11.3.2. yum install avocado-virt

3.11.4. ./scripts/avocado virt-bootstrap

3.11.4.1. Downloads JeOS

3.11.4.2. Checks permissions

3.11.5. ./scripts/avocado run ../avocado-virt-tests/qemu/boot.py

3.11.6. ./scripts/avocado run ../avocado-virt-tests/qemu/migration/migration.py

3.11.7. ./scripts/avocado run ../avocado-virt-tests/qemu/usb_boot.py

3.11.8. Qemu templates

3.11.8.1. ./scripts/avocado run DEVCONF/avocado-virt/boot_lspci.py --show-job-log

3.11.8.2. ./scripts/avocado run DEVCONF/avocado-virt/boot_lspci.py --show-job-log --qemu-template DEVCONF/avocado-virt/basic.tpl