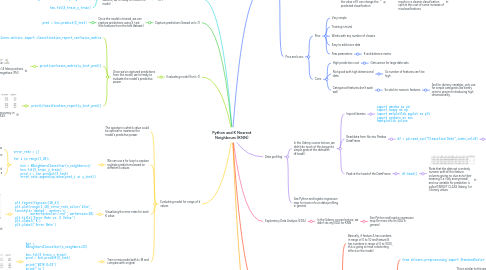

1. Create and train the model using k=1

1.1. After defining the X and y data and splitting it into the train/test subsets, we're ready to create the model

1.1.1. from sklearn.neighbors import KNeighborsClassifier

1.1.2. knn = KNeighborsClassifier(n_neighbors=1)

1.1.3. knn.fit(X_train,y_train)

2. Capture predictions (based on k=1)

2.1. Once the model is trained, we can capture predictions using X_test (the features from the test dataset)

2.1.1. pred = knn.predict(X_test)
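Steps 1 and 2 can be sketched end to end, including the "define X and y, then split" step that 1.1 mentions but doesn't show. The "Classified Data" file isn't reproduced here, so this minimal sketch assumes a synthetic stand-in built with sklearn's `make_classification`. The `FEAT_*` column names, sample sizes, `test_size=0.30` and `random_state` values are illustrative assumptions, not taken from the lecture.

```python
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Hypothetical stand-in for the anonymized data: 10 numeric features plus a binary target
features, target = make_classification(n_samples=1000, n_features=10, random_state=101)
df = pd.DataFrame(features, columns=[f"FEAT_{i}" for i in range(10)])
df["TARGET CLASS"] = target

# Define X (features) and y (target), then split into train/test subsets
X = df.drop("TARGET CLASS", axis=1)
y = df["TARGET CLASS"]
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.30, random_state=101)

# Create and train the model with k=1
knn = KNeighborsClassifier(n_neighbors=1)
knn.fit(X_train, y_train)

# Capture one predicted label per row of the test features
pred = knn.predict(X_test)
print(pred[:10])
```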

3. Evaluating model (for k=1)

3.1. Once we've captured predictions from the model, we're ready to evaluate the model's predictive power

3.1.1. from sklearn.metrics import classification_report,confusion_matrix

3.1.2. print(confusion_matrix(y_test,pred))

3.1.2.1. This matrix shows 14 false positives (FP) and 10 false negatives (FN)

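3.1.2.1.1. Remember the layout of a confusion matrix: in sklearn, rows are the actual classes and columns are the predicted classes, so for 0/1 labels it reads [[TN, FP], [FN, TP]]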
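A small hand-checkable example makes the layout concrete. The labels below are hypothetical toy values, not the lecture's data; `ravel()` flattens the 2x2 matrix row by row, which is why the counts unpack in the order TN, FP, FN, TP.

```python
from sklearn.metrics import confusion_matrix

# Hypothetical toy labels: 1 = positive class, 0 = negative class
y_true = [0, 0, 0, 1, 1, 1, 1]
y_pred = [0, 1, 0, 1, 0, 1, 1]

cm = confusion_matrix(y_true, y_pred)
print(cm)
# [[2 1]
#  [1 3]]
# Rows = actual (0, then 1), columns = predicted (0, then 1)

# Flatten row by row to get the four counts
tn, fp, fn, tp = cm.ravel()
print(tn, fp, fn, tp)  # 2 1 1 3
```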

3.1.3. print(classification_report(y_test,pred))

3.1.3.1. The report shows 92% accuracy in predicting TARGET CLASS
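The "accuracy" line in the classification report is simply the share of correct predictions, and the per-class precision and recall come from the same confusion-matrix counts. A minimal sketch on hypothetical toy labels, checking the hand formulas against `classification_report(..., output_dict=True)` (whose keys are the labels as strings):

```python
from sklearn.metrics import classification_report, confusion_matrix

# Hypothetical toy labels
y_true = [0, 0, 0, 1, 1, 1, 1]
y_pred = [0, 1, 0, 1, 0, 1, 1]

tn, fp, fn, tp = confusion_matrix(y_true, y_pred).ravel()

accuracy = (tp + tn) / (tp + tn + fp + fn)  # share of all predictions that are correct
precision = tp / (tp + fp)                  # of points predicted as 1, share that really are 1
recall = tp / (tp + fn)                     # of points that really are 1, share predicted as 1

print(classification_report(y_true, y_pred))

# The same numbers, read from the report as a dict
report = classification_report(y_true, y_pred, output_dict=True)
print(report["accuracy"], report["1"]["precision"], report["1"]["recall"])
```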

4. Evaluating model for range of k values

4.1. The question is which k value maximises the model's predictive power

4.2. We can use a for loop to capture multiple predictions based on different k values

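4.2.1. Train and score one model per k value from 1 to 39:

```python
import numpy as np
from sklearn.neighbors import KNeighborsClassifier

error_rate = []
# Train one model per k value and record the fraction of misclassified test points
for i in range(1, 40):
    knn = KNeighborsClassifier(n_neighbors=i)
    knn.fit(X_train, y_train)
    pred_i = knn.predict(X_test)
    error_rate.append(np.mean(pred_i != y_test))
```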

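4.2.1.1. Note that np.mean(pred_i != y_test) gives the fraction of misclassified test points (i.e. 1 minus accuracy); this works for multi-class labels as well as binary ones, since any prediction that doesn't match its true label counts as one error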

4.2.1.2. Note that every false positive and false negative comes through as a boolean True in the series produced by pred_i != y_test

4.2.1.2.1. As Python (and NumPy) treat True as 1 and False as 0, pred_i != y_test effectively gives us a series of 0 and 1 values, where every 1 represents an error; hence the mean of that series is the overall error rate
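The boolean-mean trick is easy to check by hand. This sketch uses hypothetical three-class labels to show it isn't limited to binary targets: two of the five predictions are wrong, so the error rate is 2/5.

```python
import numpy as np
import pandas as pd

# Hypothetical 3-class labels (0, 1, 2)
y_test = pd.Series([0, 1, 2, 1, 0])
pred_i = np.array([0, 2, 2, 1, 1])

mismatches = pred_i != y_test      # boolean Series: True where the prediction is wrong
print(mismatches.tolist())         # [False, True, False, False, True]

error_rate = np.mean(mismatches)   # True counts as 1, False as 0
print(error_rate)                  # 0.4
```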

4.3. Visualising the error rates for each K value

4.3.1.

```python
plt.figure(figsize=(10, 6))
plt.plot(range(1, 40), error_rate, color='blue', linestyle='dashed', marker='o',
         markerfacecolor='red', markersize=10)
plt.title('Error Rate vs. K Value')
plt.xlabel('K')
plt.ylabel('Error Rate')
```

4.3.1.1. The resulting plot suggests we could get a better model by choosing a k value of something like 18

4.3.1.1.1. This is known as the "elbow method"
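Reading the elbow off the plot is a judgment call. A simple complement is to pick the k with the lowest recorded error rate. This sketch assumes synthetic data from `make_classification` (the real file isn't included here). Note that `np.argmin` returns the first minimum, so ties resolve to the smallest k.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Synthetic stand-in data (assumption, not the course file)
X, y = make_classification(n_samples=600, n_features=8, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)

k_values = range(1, 40)
error_rate = []
for k in k_values:
    knn = KNeighborsClassifier(n_neighbors=k).fit(X_train, y_train)
    error_rate.append(np.mean(knn.predict(X_test) != y_test))

# Index of the lowest error rate, mapped back to its k value
best_k = k_values[int(np.argmin(error_rate))]
print(best_k, min(error_rate))
```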

4.4. Train a new model with k=18 and compare it with the original

4.4.1. Retrain with k=18 and print both evaluation outputs:

```python
knn = KNeighborsClassifier(n_neighbors=18)
knn.fit(X_train, y_train)
pred = knn.predict(X_test)

print('WITH K=18')
print('\n')
print(confusion_matrix(y_test, pred))
print('\n')
print(classification_report(y_test, pred))
```

4.4.1.1. The resulting confusion matrix and classification report show an improvement in our model

5. Theory

5.1. KNN is a classification algorithm based on a simple principle, best explained by example

5.2. Imagine data of the heights and weights of dogs and horses

5.2.1. Based on the training data in red and blue, where red = horse and blue = dog, predict whether new data in green is likely to be a horse or a dog

5.3. The KNN prediction algorithm is based on a distance metric and a K value

5.3.1. The distance metric calculates the distance from each test datapoint to every labelled training datapoint

5.3.2. K is the number of nearest training datapoints (as measured by the distance metric) that get a vote; the test datapoint is assigned the majority class among those K neighbours
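The distance-plus-vote idea can be sketched from scratch in a few lines. The heights and weights below are hypothetical numbers for the dogs/horses example, and Euclidean distance is assumed as the metric. This is an illustration of the principle, not how sklearn implements it internally.

```python
import math
from collections import Counter

# Hypothetical training data: (height_cm, weight_kg) -> label
training = [
    ((60, 30), "dog"),
    ((55, 25), "dog"),
    ((70, 35), "dog"),
    ((160, 500), "horse"),
    ((150, 450), "horse"),
    ((165, 550), "horse"),
]

def knn_predict(point, data, k):
    """Classify `point` by majority vote among its k nearest training points."""
    # Distance metric: Euclidean distance from the test point to every training point
    distances = sorted((math.dist(point, features), label) for features, label in data)
    # Keep the labels of the k closest points and take a majority vote
    nearest_labels = [label for _, label in distances[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]

print(knn_predict((65, 40), training, k=3))    # dog
print(knn_predict((155, 480), training, k=3))  # horse
```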

5.3.3. We can visualise how increasing the value of K can change the predicted classification

5.3.3.1. When we take a step back, we can also visualise how increasing K results in a cleaner classification split at the cost of some additional misclassifications

5.3.3.1.1. The main takeaway is that choosing the K value is a balance; values that are too low or too high both reduce the model's predictive power
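A tiny one-feature example shows the same test point flipping class as K grows. The training values are hypothetical and chosen so that the single nearest neighbour disagrees with the next two.

```python
from sklearn.neighbors import KNeighborsClassifier

# Hypothetical 1-D training data; distances from the test point x=0.0 are
# 0.1 (B), 0.3 (A), 0.35 (A), 2.0 (B), 2.2 (B), 2.5 (B)
X_train = [[0.1], [0.3], [-0.35], [2.0], [2.2], [2.5]]
y_train = ["B", "A", "A", "B", "B", "B"]

predictions = {}
for k in (1, 3, 5):
    knn = KNeighborsClassifier(n_neighbors=k).fit(X_train, y_train)
    predictions[k] = str(knn.predict([[0.0]])[0])

print(predictions)  # {1: 'B', 3: 'A', 5: 'B'}
```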

5.4. Pros and cons

5.4.1. Pros

5.4.1.1. Very simple

5.4.1.2. Training is trivial

5.4.1.3. Works with any number of classes

5.4.1.4. Easy to add more data

5.4.1.5. Few parameters

5.4.1.5.1. K and distance metric
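In sklearn both parameters are constructor arguments: `n_neighbors` for K and `metric` for the distance (the default is `"minkowski"` with `p=2`, i.e. Euclidean distance). The two hypothetical training points below are placed so that Euclidean and Manhattan distance disagree about which one is nearest to the origin.

```python
from sklearn.neighbors import KNeighborsClassifier

# Hypothetical points; distances from the origin:
# [3, 3] -> Euclidean 4.24, Manhattan 6
# [5, 0] -> Euclidean 5.0,  Manhattan 5
X_train = [[3, 3], [5, 0]]
y_train = ["A", "B"]

results = {}
for metric in ("euclidean", "manhattan"):
    knn = KNeighborsClassifier(n_neighbors=1, metric=metric).fit(X_train, y_train)
    results[metric] = str(knn.predict([[0, 0]])[0])

print(results)  # {'euclidean': 'A', 'manhattan': 'B'}
```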

5.4.2. Cons

5.4.2.1. High prediction cost

5.4.2.1.1. Every prediction means searching the training data for nearest neighbours, so the cost grows with the size of the training set

5.4.2.2. Not good with high-dimensional data

5.4.2.2.1. So keep the number of features low

5.4.2.3. Categorical features don't work well

5.4.2.3.1. So stick to numeric features

6. Data profiling

6.1. In the Udemy course lecture, we didn't do much of this beyond a simple peek at the data with df.head()

6.1.1. Import libraries

6.1.1.1.

```python
import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
import seaborn as sns
%matplotlib inline
```

6.1.2. Read data from file into Pandas DataFrame

6.1.2.1. df = pd.read_csv("Classified Data",index_col=0)

6.1.2.1.1. Note: this particular file is anonymized data consisting of numeric feature columns with arbitrary column names and a TARGET CLASS binary (1 or 0) column
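Passing `index_col=0` tells pandas to use the file's first (unnamed) column as the row index rather than as a feature. A sketch using a hypothetical three-row miniature of the file, read from an in-memory string; the `WTT` and `PTI` column names are illustrative.

```python
import io
import pandas as pd

# Hypothetical miniature of the file: the first column has no header and holds a row index
csv_text = """,WTT,PTI,TARGET CLASS
0,0.91,1.16,1
1,0.64,1.00,0
2,0.72,1.20,0
"""

df = pd.read_csv(io.StringIO(csv_text), index_col=0)   # first column becomes the index
df_no_index = pd.read_csv(io.StringIO(csv_text))        # first column kept as data

print(df.columns.tolist())           # ['WTT', 'PTI', 'TARGET CLASS']
print(df_no_index.columns.tolist())  # ['Unnamed: 0', 'WTT', 'PTI', 'TARGET CLASS']
```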

6.1.3. Peek at the head of the DataFrame

6.1.3.1. df.head()

6.1.3.1.1. Note that the data set is entirely numeric, with feature column names that give no clue to their meaning (i.e. fully anonymized), and our variable for prediction is called TARGET CLASS (taking binary values 1 or 0)
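Beyond `df.head()`, a few standard pandas calls give a quick profile: dtypes, summary statistics, class balance and missing values. This sketch uses a hypothetical four-row DataFrame in place of the real file.

```python
import pandas as pd

# Hypothetical miniature DataFrame standing in for the classified data
df = pd.DataFrame({
    "WTT": [0.91, 0.64, 0.72, 1.23],
    "PTI": [1.16, 1.00, 1.20, 1.39],
    "TARGET CLASS": [1, 0, 0, 1],
})

df.info()                                  # dtypes and non-null counts per column
print(df.describe())                       # count, mean, std, min, quartiles, max per column
print(df["TARGET CLASS"].value_counts())   # class balance of the target
print(df.isnull().sum().sum())             # total number of missing values
```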

6.2. See Python and logistic regression map for more info on data profiling in general

7. Exploratory Data Analysis (EDA)

7.1. In the Udemy course lecture, we didn't do any EDA for KNN

7.1.1. See Python and logistic regression map for more info on EDA in general

8. Data cleaning

8.1. An important consideration when looking at numeric features to include in your KNN model is that scale matters

8.1.1. Basically, if feature A has values in the range 0 to 10 and feature B has values in the range 0 to 1000, feature B will dominate the distance calculation and distort which points count as nearest neighbours
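A quick numeric check of the distortion, using three hypothetical points. Dividing each feature by its assumed range is a simple stand-in for rescaling here, not what `StandardScaler` does, but it is enough to show the nearest neighbour changing once both features are on a comparable scale.

```python
import numpy as np

# Hypothetical points: feature A spans roughly 0-10, feature B roughly 0-1000
p = np.array([1.0, 500.0])
q = np.array([9.0, 510.0])   # far from p on A (80% of its range), close on B
r = np.array([1.0, 560.0])   # same as p on A, 6% of B's range away on B

raw_pq = np.linalg.norm(p - q)   # sqrt(8^2 + 10^2), about 12.8
raw_pr = np.linalg.norm(p - r)   # 60.0
print(raw_pq < raw_pr)           # True: B's 60-unit gap outweighs A's 8-unit gap

# Divide each feature by its (assumed) range so both are on a comparable scale
ranges = np.array([10.0, 1000.0])
scaled_pq = np.linalg.norm((p - q) / ranges)   # about 0.80
scaled_pr = np.linalg.norm((p - r) / ranges)   # 0.06
print(scaled_pq > scaled_pr)     # True: r is now the nearer point
```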

8.1.2. To counteract scaling differences across numeric features, we need to standardize them (rescale each feature to mean 0 and standard deviation 1)

8.1.2.1. from sklearn.preprocessing import StandardScaler

8.1.2.2. scaler = StandardScaler()

8.1.2.2.1. This is similar to the way we create models using sklearn but in this case the object is going to help us transform our numeric features into standardized numbers

8.1.2.3. scaler.fit(df.drop('TARGET CLASS',axis=1))

8.1.2.3.1. Note that we don't want to standardize the variable we want to predict (the binary target column, TARGET CLASS, in this case), so we pass in the whole dataframe minus that column

8.1.2.4. scaled_features = scaler.transform(df.drop('TARGET CLASS',axis=1))

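8.1.2.4.1. Note how we need to pass the same dataframe into the scaler object's transform() method, which I think is rather unintuitive; the reason is that fit() only learns each column's mean and standard deviation, while transform() applies them, and scaler.fit_transform() does both in one call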
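A minimal sketch of the fit/transform split on a hypothetical two-feature array. It checks that fit() followed by transform(), fit_transform(), and the standardization formula applied by hand (population standard deviation, `ddof=0`) all give the same result.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Hypothetical two-feature data on very different scales
features = np.array([[1.0, 200.0],
                     [2.0, 400.0],
                     [3.0, 600.0],
                     [4.0, 800.0]])

scaler = StandardScaler()
scaler.fit(features)                 # learns each column's mean_ and scale_ (std dev)
scaled = scaler.transform(features)  # applies (x - mean_) / scale_

# fit_transform() does both steps in one call
scaled_again = StandardScaler().fit_transform(features)

# Same result as the formula applied by hand (NumPy's std defaults to ddof=0)
manual = (features - features.mean(axis=0)) / features.std(axis=0)

print(scaler.mean_)  # column means learned by fit()
print(np.allclose(scaled, scaled_again), np.allclose(scaled, manual))  # True True
```

Keeping fit() separate is what lets a scaler learned on one dataset be reused to transform new data with the same means and standard deviations.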

8.1.2.5.

```python
# Reuse the original column names, minus the final TARGET CLASS column
df_feat = pd.DataFrame(scaled_features, columns=df.columns[:-1])
df_feat.head()
```

8.1.2.5.1. Note that the features in our new dataframe are now standardized and ready for the KNN model
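For reference, this is the transformation StandardScaler applies to each feature column $j$ over $n$ rows. It uses the population standard deviation (dividing by $n$, not $n-1$):

```latex
z_{ij} = \frac{x_{ij} - \mu_j}{\sigma_j},
\qquad
\mu_j = \frac{1}{n}\sum_{i=1}^{n} x_{ij},
\qquad
\sigma_j = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(x_{ij} - \mu_j\right)^2}
```

After this transformation every feature column has mean 0 and standard deviation 1, so no single feature dominates the distance metric purely because of its units.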