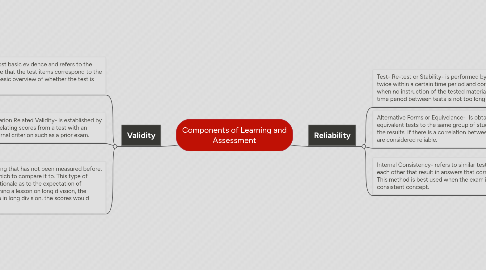

1. Validity

1.1. Content Validity- is the most basic form of validity evidence and refers to reviewing the test to ensure that its items correspond to the test objectives. It gives a basic indication of whether the test is valid.
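Part of that review can be sketched as a simple alignment check. This is a minimal Python illustration, assuming the objectives and the item-to-objective mapping come from a hypothetical test blueprint (the objective IDs, descriptions, and the `review_alignment` helper are made up for this example). It only checks coverage; judging whether an item really measures its objective still requires a human reviewer.

```python
# Hypothetical blueprint: objective IDs and descriptions are illustrative only.
objectives = {
    "O1": "add fractions",
    "O2": "subtract fractions",
    "O3": "multiply fractions",
}

# Hypothetical mapping from item number to the objective it is meant to assess.
item_to_objective = {1: "O1", 2: "O1", 3: "O2", 4: "O2", 5: "O4"}


def review_alignment(objectives, item_to_objective):
    """Flag items tied to no listed objective and objectives with no items."""
    unmatched_items = sorted(
        item for item, obj in item_to_objective.items() if obj not in objectives
    )
    # Count how many items target each listed objective.
    counts = {obj: 0 for obj in objectives}
    for obj in item_to_objective.values():
        if obj in counts:
            counts[obj] += 1
    uncovered = sorted(obj for obj, n in counts.items() if n == 0)
    return unmatched_items, uncovered, counts


unmatched, uncovered, counts = review_alignment(objectives, item_to_objective)
print("items not matching any objective:", unmatched)
print("objectives with no items:", uncovered)
print("items per objective:", counts)
```

Here item 5 points at an objective that is not in the blueprint, and objective O3 has no items, so both would be flagged for revision.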

1.2. Criterion-Related Validity- is established by correlating scores on a test with scores on an external criterion, such as a prior exam.

1.2.1. Concurrent Criterion-Related Validity- is determined by administering both a new test and an established assessment to the same group at about the same time and then finding the correlation between the two sets of scores. If the new test's scores correlate strongly with a criterion that people already have confidence in, the new test can be considered a good alternative to it.
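The correlation step can be sketched in Python as follows. This is a minimal illustration, assuming the Pearson correlation coefficient is used as the measure of agreement; the `pearson_r` helper and the scores are hypothetical.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


# Hypothetical scores for the same six students, collected at about the same time.
new_test = [55, 62, 70, 74, 81, 90]
established_test = [58, 60, 72, 75, 79, 93]

r = pearson_r(new_test, established_test)
print(f"concurrent validity coefficient r = {r:.2f}")
```

A value of r close to 1 means students rank similarly on both tests; how high r must be to count as "strong" is a judgment the test user has to make.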

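1.2.2. Predictive Validity- refers to how well the test predicts the future performance of the test takers, as shown by correlating test scores with a criterion measured later, such as future grades. The OAA, SAT, and ACT are just some examples of tests for which this type of validity evidence is gathered.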
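A minimal sketch of how predictive evidence might be summarized, assuming hypothetical admission-test scores and grade point averages collected a year later. The correlation between the two serves as the predictive validity coefficient, and a least-squares line turns a new score into a predicted outcome. The `fit_line` helper and all data are illustrative.

```python
from math import sqrt


def fit_line(x, y):
    """Least-squares slope and intercept for predicting y from x, plus Pearson r."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r = sxy / sqrt(sxx * syy)
    return slope, intercept, r


# Hypothetical data: test scores at admission and GPA one year later.
test_scores = [1050, 1120, 1200, 1280, 1350, 1430]
later_gpa = [2.6, 2.9, 3.0, 3.3, 3.4, 3.7]

slope, intercept, r = fit_line(test_scores, later_gpa)
print(f"predictive validity coefficient r = {r:.2f}")

# Predicted outcome for a new (hypothetical) applicant scoring 1300.
print(f"predicted GPA for 1300: {intercept + slope * 1300:.2f}")
```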

1.3. Construct Validity- is used when measuring something that has not been measured before, when existing measures have produced invalid results, or when no criterion exists with which to compare the test. This type of validity evidence is based on a theory that provides a rationale for how people are expected to perform on the test. For example, if a test measures skill in long division, theory predicts that scores should improve after intensive instruction in long division; observing that improvement is evidence that the test measures the intended construct.
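The long-division example can be sketched as a pre/post comparison. This is a minimal illustration, assuming scores for the same students before and after instruction and using a paired t statistic to summarize the gain; the `paired_gain` helper and the scores are hypothetical. A clear gain is consistent with the theory and supports construct validity, but it does not establish it on its own, and judging statistical significance would still require comparing t against a t distribution with n - 1 degrees of freedom.

```python
from math import sqrt
from statistics import mean, stdev


def paired_gain(pre, post):
    """Mean gain and paired t statistic for the same students before and after instruction."""
    diffs = [after - before for before, after in zip(pre, post)]
    gain = mean(diffs)
    # Paired t: mean difference divided by its standard error.
    t = gain / (stdev(diffs) / sqrt(len(diffs)))
    return gain, t


# Hypothetical long-division test scores for the same eight students.
pre = [4, 6, 5, 7, 3, 8, 5, 6]
post = [8, 9, 7, 10, 6, 9, 8, 9]

gain, t = paired_gain(pre, post)
print(f"mean gain = {gain:.2f} points, paired t = {t:.2f} (df = {len(pre) - 1})")
```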

2. Reliability

2.1. Test-Retest or Stability- is estimated by giving the same test to the same students twice within a certain time period and correlating the two sets of results. The estimate is meaningful only when no instruction on the tested material occurs between the two administrations and the interval is not so long that genuine changes in the students invalidate the comparison.
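A minimal sketch of the test-retest calculation, assuming the Pearson correlation is used as the stability coefficient; the `pearson_r` helper and the scores are hypothetical. The mean change between sittings is included as a rough check that no learning or forgetting took place in between.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


# Hypothetical scores for the same students on the same test, taken twice
# with no instruction on the tested material in between.
first = [12, 15, 18, 20, 22, 25]
second = [13, 14, 19, 19, 23, 24]

stability = pearson_r(first, second)
# Average change between administrations; a large shift would suggest
# learning or forgetting occurred between the two sittings.
mean_change = sum(b - a for a, b in zip(first, second)) / len(first)
print(f"test-retest r = {stability:.2f}, mean change = {mean_change:+.2f}")
```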

2.2. Alternative Forms or Equivalence- is obtained by giving two equivalent forms of a test to the same group of students and then correlating the results. If the two sets of scores correlate strongly, the forms are considered reliable.
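A minimal sketch of an alternative-forms check, assuming hypothetical scores on two forms and the Pearson correlation as the equivalence estimate. Comparing the means and standard deviations of the two forms is an extra sanity check that the forms are comparable in difficulty and spread; `pearson_r` and the data are illustrative.

```python
from math import sqrt
from statistics import mean, pstdev


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))


# Hypothetical scores for the same students on Form A and Form B.
form_a = [30, 34, 38, 41, 45, 48]
form_b = [31, 33, 39, 40, 46, 47]

# Equivalent forms should have similar means and spreads...
print(f"Form A: mean {mean(form_a):.1f}, SD {pstdev(form_a):.1f}")
print(f"Form B: mean {mean(form_b):.1f}, SD {pstdev(form_b):.1f}")

# ...and the correlation between them is the equivalence reliability estimate.
equivalence = pearson_r(form_a, form_b)
print(f"alternate-forms r = {equivalence:.2f}")
```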

2.3. Internal Consistency- refers to the degree to which items that measure the same thing correlate with one another, so that students who do well on one item tend to do well on similar items. This method is best used when the exam measures a single concept.

2.3.1. Split-half Method- analyzes the correlation between scores on two equivalent halves of a single test, for example the odd-numbered items versus the even-numbered items.
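A minimal sketch of the split-half method, assuming right/wrong (1/0) item scores and an odd/even split; the response matrix and helper names are hypothetical. Because each half is only half as long as the full test, the half-test correlation tends to underestimate full-test reliability, so the sketch applies the standard Spearman-Brown correction, 2r / (1 + r).

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))


def spearman_brown(r_half):
    """Step a half-test correlation up to an estimate for the full-length test."""
    return 2 * r_half / (1 + r_half)


# Hypothetical right/wrong (1/0) item scores: one row per student, one column per item.
responses = [
    [1, 1, 1, 1, 1, 1],
    [1, 1, 1, 1, 1, 0],
    [1, 0, 1, 1, 0, 1],
    [1, 1, 0, 0, 1, 0],
    [0, 1, 0, 0, 1, 0],
    [0, 0, 0, 0, 0, 0],
]

# row[0::2] holds items 1, 3, 5 (odd-numbered); row[1::2] holds items 2, 4, 6.
odd_half = [sum(row[0::2]) for row in responses]
even_half = [sum(row[1::2]) for row in responses]

r_half = pearson_r(odd_half, even_half)
r_full = spearman_brown(r_half)
print(f"half-test r = {r_half:.2f}, Spearman-Brown full-test estimate = {r_full:.2f}")
```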

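2.3.2. Kuder-Richardson Methods- estimate internal consistency from a single administration of a test whose items are scored right or wrong, by examining how consistently students respond across all of the items. Reliability estimates are higher when the entire test measures a single concept.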
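A minimal sketch of the two best-known Kuder-Richardson formulas, assuming a hypothetical students-by-items matrix of 1/0 scores. KR-20 is k/(k - 1) times (1 - sum of p*q over the variance of total scores), where k is the number of items, p is the proportion answering an item correctly, and q = 1 - p. KR-21 replaces the item-level p*q terms with a value computed from the mean total score, which amounts to assuming all items are equally difficult. This sketch uses the population variance of total scores; some texts use the sample variance instead. The `kr20` and `kr21` helper names are illustrative.

```python
from statistics import mean, pvariance


def kr20(responses):
    """KR-20 for a students-by-items matrix of 0/1 scores."""
    n = len(responses)
    k = len(responses[0])
    totals = [sum(row) for row in responses]
    var_total = pvariance(totals)
    if var_total == 0:
        raise ValueError("total scores have no variance")
    # p = proportion correct on each item, q = 1 - p
    sum_pq = 0.0
    for j in range(k):
        p = sum(row[j] for row in responses) / n
        sum_pq += p * (1 - p)
    return (k / (k - 1)) * (1 - sum_pq / var_total)


def kr21(responses):
    """KR-21: simpler variant that assumes all items are equally difficult."""
    k = len(responses[0])
    totals = [sum(row) for row in responses]
    m = mean(totals)
    var_total = pvariance(totals)
    return (k / (k - 1)) * (1 - m * (k - m) / (k * var_total))


# Hypothetical right/wrong (1/0) item scores: one row per student, one column per item.
responses = [
    [1, 1, 1, 1, 1, 1],
    [1, 1, 1, 1, 1, 0],
    [1, 0, 1, 1, 0, 1],
    [1, 1, 0, 0, 1, 0],
    [0, 1, 0, 0, 1, 0],
    [0, 0, 0, 0, 0, 0],
]

print(f"KR-20 = {kr20(responses):.3f}, KR-21 = {kr21(responses):.3f}")
```

When item difficulties differ, as they do here, KR-21 comes out lower than KR-20, so KR-21 is usually treated as a quick, conservative approximation.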