1. Observability (15%)

1.1. Health Check

1.1.1. Probe

1.1.1.1. Types

1.1.1.1.1. Startup

1.1.1.1.2. Readiness

1.1.1.1.3. Liveness

1.1.1.2. Method

1.1.1.2.1. httpGet

1.1.1.2.2. TCP socket

1.1.1.2.3. exec (run a command)

1.1.1.2.4. gRPC

1.1.1.3. Params

1.1.1.3.1. initialDelaySeconds

1.1.1.3.2. periodSeconds

1.1.1.3.3. timeoutSeconds

1.1.1.3.4. successThreshold

1.1.1.3.5. failureThreshold
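
A minimal sketch tying the probe types, methods and params together. The Pod name, the nginx image, port 80 and the checked file path are assumptions; note that the "cmd" method is written exec in the manifest, and gRPC (not shown) additionally requires the app to implement the gRPC health-checking protocol.

```yaml
# Sketch: one container using all three probe types (names/ports are assumptions)
apiVersion: v1
kind: Pod
metadata:
  name: probe-demo              # hypothetical name
spec:
  containers:
  - name: web
    image: nginx                # assumed: serves HTTP on port 80
    ports:
    - containerPort: 80
    startupProbe:               # liveness/readiness checks wait until this succeeds
      httpGet:
        path: /
        port: 80
      periodSeconds: 5
      failureThreshold: 30      # allows up to 30 x 5s = 150s to start
    readinessProbe:             # failing -> Pod removed from Service endpoints
      tcpSocket:
        port: 80
      initialDelaySeconds: 5
      periodSeconds: 10
      timeoutSeconds: 1
      successThreshold: 1
    livenessProbe:              # failing -> kubelet restarts the container
      exec:
        command: ["sh", "-c", "test -f /usr/share/nginx/html/index.html"]
      periodSeconds: 10
      failureThreshold: 3
```

successThreshold may be greater than 1 only for readiness probes; liveness and startup probes require 1.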

1.2. Monitor

1.2.1. Metrics Server (one per cluster; keeps metrics in memory only)

1.2.1.1. kubectl top pod/node

1.2.2. Prometheus

1.2.2.1. install

1.2.2.1.1. helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

1.2.2.1.2. Install or upgrade the release (gp2 = AWS EBS storage class):

    helm upgrade -i prometheus prometheus-community/prometheus \
      --namespace prometheus \
      --set alertmanager.persistentVolume.storageClass="gp2",server.persistentVolume.storageClass="gp2"

1.3. DaemonSet

1.3.1. Ensures that all (or some) nodes run a copy of a Pod

1.3.1.1. Typical uses: run a cluster daemon, a log-collection daemon, or a monitoring daemon on every node

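1.3.2. Run only on Linux nodes and tolerate the control-plane taint:

    nodeSelector:
      kubernetes.io/os: linux                      # beta.kubernetes.io/os is deprecated
    tolerations:
    - key: node-role.kubernetes.io/control-plane   # replaces the older node-role.kubernetes.io/master key
      operator: Exists
      effect: NoSchedule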

1.3.3. Example DaemonSet:

    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: fluentd-elasticsearch
      namespace: kube-system
      labels:
        k8s-app: fluentd-logging
    spec:
      selector:
        matchLabels:
          name: fluentd-elasticsearch
      template:
        metadata:
          labels:
            name: fluentd-elasticsearch
        spec:
          tolerations:
          # these tolerations are to have the daemonset runnable on control plane nodes
          - key: node-role.kubernetes.io/control-plane
            operator: Exists
            effect: NoSchedule
          # containers: omitted in these notes (a DaemonSet needs at least one container)

1.4. Container logging

1.4.1. kubectl logs podname -c container_name

1.4.2. k logs -f podname (follow/stream the log)

1.4.3. Write app logs to stdout/stderr (that is what kubectl logs reads)

1.5. Debugging

1.5.1. k get pods --all-namespaces (-A)

1.5.2. k get pod xxx -o yaml / k describe pod xxx

1.5.3. k get events --sort-by=.metadata.creationTimestamp

1.5.4. Node level: container log files under /var/log/containers/

1.5.5. sudo journalctl -u kubelet

2. Environment, Config and Security (25%)

2.1. YAML

2.1.1. apiVersion

2.1.1.1. Version

2.1.1.1.1. v1 - GA, stable

2.1.1.1.2. v1beta1 - beta

2.1.1.1.3. v1alpha1 - alpha, may change or be removed without notice

2.1.1.2. k api-resources

2.1.2. API deprecation

2.1.2.1. convert

2.1.2.1.1. kubectl-convert -f ingress-old.yaml --output-version networking.k8s.io/v1 | kubectl apply -f -

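2.1.2.2. After deprecation, a GA API version stays supported for at least 12 months or 3 releases (beta: 9 months or 3 releases), whichever is longer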

2.1.3. kind

2.1.3.1. k explain

2.1.4. metadata (dict)

2.1.4.1. name

2.1.4.2. labels

2.1.4.2.1. app

2.1.4.2.2. type

2.1.4.2.3. anything

2.1.5. spec

2.1.5.1. containers (list/array)

2.1.5.1.1. Container fields:

    - name: <container-name>
      image: <image>
      command: ["..."]   # overrides the image ENTRYPOINT
      args: ["..."]      # overrides the image CMD

2.1.5.2. configmap

2.1.5.2.1. All keys as env vars (envFrom):

    spec:
      containers:
      - name: test-container
        image: registry.k8s.io/busybox
        command: [ "/bin/sh", "-c", "env" ]
        envFrom:
        - configMapRef:
            name: special-config

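2.1.5.2.2. Single keys as env vars, referenced in the command as $(VAR):

    spec:
      containers:
      - name: test-container
        image: registry.k8s.io/busybox
        command: [ "/bin/echo", "$(SPECIAL_LEVEL_KEY) $(SPECIAL_TYPE_KEY)" ]
        env:
        - name: SPECIAL_LEVEL_KEY
          valueFrom:
            configMapKeyRef:
              name: special-config
              key: SPECIAL_LEVEL
        - name: SPECIAL_TYPE_KEY      # needed because the command references it
          valueFrom:
            configMapKeyRef:
              name: special-config
              key: SPECIAL_TYPE       # assumes special-config also has this key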

2.1.5.2.3. Selected keys mounted as files:

    spec:
      containers:
      - name: demo
        image: alpine
        command: ["sleep", "3600"]
        volumeMounts:
        - name: config
          mountPath: "/config"
          readOnly: true
      volumes:
      - name: config
        configMap:
          name: game-demo
          items:                     # only these keys become files under /config
          - key: "game.properties"
            path: "game.properties"
          - key: "user-interface.properties"
            path: "user-interface.properties"

2.1.5.2.4. kubectl create configmap webapp-config-map --from-literal=APP_COLOR=darkblue
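
The declarative equivalent of the command above; adding --dry-run=client -o yaml to that command prints essentially this manifest:

```yaml
# Same ConfigMap as: kubectl create configmap webapp-config-map --from-literal=APP_COLOR=darkblue
apiVersion: v1
kind: ConfigMap
metadata:
  name: webapp-config-map
data:
  APP_COLOR: darkblue   # each --from-literal becomes one key under data
```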

2.1.5.3. secret

2.1.5.3.1. Note

2.1.5.3.2. as file/volume

2.1.5.3.3. as pod ENV

2.1.5.3.4. kubectl create secret generic db-secret --from-literal=DB_Host=sql01 --from-literal=DB_User=root --from-literal=DB_Password=password123
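
A sketch of consuming the db-secret created above both as environment variables and as files; the Pod name, image and mount path are assumptions. Keep in mind that Secret data is only base64-encoded in the API object, not encrypted, unless encryption at rest is configured.

```yaml
# Sketch: db-secret as env vars and as a volume (Pod name, image, path are assumptions)
apiVersion: v1
kind: Pod
metadata:
  name: secret-demo              # hypothetical name
spec:
  containers:
  - name: app
    image: busybox:stable
    command: ["sh", "-c", "env; ls /etc/db; sleep 3600"]
    envFrom:                     # every key becomes an env var (DB_Host, DB_User, DB_Password)
    - secretRef:
        name: db-secret
    env:                         # or pick a single key under a new name
    - name: PASSWORD
      valueFrom:
        secretKeyRef:
          name: db-secret
          key: DB_Password
    volumeMounts:                # each key becomes a file under /etc/db
    - name: db-creds
      mountPath: /etc/db
      readOnly: true
  volumes:
  - name: db-creds
    secret:
      secretName: db-secret
```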

2.1.5.4. securityContext

2.1.5.4.1. Pod-level securityContext:

    apiVersion: v1
    kind: Pod
    metadata:
      labels:
        run: secbusybox
      name: secbusybox
    spec:
      securityContext: # add security context
        runAsUser: 1000
        runAsGroup: 2000
      # containers: omitted in these notes

2.1.5.5. Resource requests and limits

2.1.5.5.1. Requests are used for scheduling; limits are enforced at runtime:

    spec:
      containers:
      - image: nginx
        name: nginx
        resources:
          requests:
            memory: "100Mi"
            cpu: "0.5"      # half a CPU core (= 500m)
          limits:
            cpu: "1"

2.2. Custom Resource

2.2.1. definition

2.2.1.1. CustomResourceDefinition:

    apiVersion: apiextensions.k8s.io/v1
    kind: CustomResourceDefinition
    metadata:
      name: internals.datasets.kodekloud.com   # must be <plural>.<group>
    spec:
      group: datasets.kodekloud.com
      versions:
      - name: v1
        served: true
        storage: true
        schema:
          openAPIV3Schema:
            type: object
            properties:
              spec:
                type: object
                properties:
                  internalLoad:
                    type: string
                  range:
                    type: integer
                  percentage:
                    type: string
      scope: Namespaced
      names:
        plural: internals
        singular: internal
        kind: Internal
        shortNames:
        - int
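
A hypothetical object of the Internal kind defined above; the name and field values are made up, but the field types follow the schema (two strings and an integer):

```yaml
# Hypothetical custom resource; apiVersion is <group>/<version> from the CRD
apiVersion: datasets.kodekloud.com/v1
kind: Internal
metadata:
  name: internal-space      # hypothetical name
  namespace: default        # the CRD is Namespaced
spec:
  internalLoad: "high"      # string
  range: 80                 # integer
  percentage: "50"          # string
```

After k apply, k get int lists it through the shortName.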

2.2.2. controller

2.3. Security

2.3.1. USER 1000 in the Dockerfile

2.3.2. Pod level

2.3.2.1. Applies to all containers in the Pod:

    spec:
      securityContext:
        runAsUser: 1000
        runAsGroup: 3000
        fsGroup: 2000     # group owner of mounted volumes

2.3.3. Container level (override pod)

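2.3.3.1. Container settings override the Pod's:

    spec:
      securityContext:
        runAsUser: 1000
      containers:
      - name: sec-ctx-demo-2
        image: gcr.io/google-samples/node-hello:1.0
        securityContext:
          runAsUser: 2000          # overrides the Pod-level 1000
          allowPrivilegeEscalation: false
          capabilities:            # capabilities can only be set per container
            add: ["SYS_TIME"]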

2.3.3.1.1. k exec -it ubuntu-sleeper -- cat /proc/1/status | grep 'Cap'

2.3.3.1.2. Log in to the container and run date -s to test SYS_TIME

2.3.3.2. Adding several capabilities:

    spec:
      containers:
      - image: nginx
        name: nginx        # name is required
        securityContext:
          capabilities:
            add: ["SYS_TIME", "NET_ADMIN"]

2.4. Access Control

2.4.1. Authentication

2.4.1.1. Apiserver

2.4.1.1.1. User/Password

2.4.1.1.2. User/Token

2.4.2. Authorization

2.4.2.1. RBAC

2.4.2.1.1. Role

2.4.2.1.2. RoleBinding

2.4.2.1.3. ClusterRole / ClusterRoleBinding (cluster-wide)
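
A minimal Role plus RoleBinding sketch granting read-only access to Pods; the namespace dev and the user jane are hypothetical:

```yaml
# Sketch: namespaced read-only access to Pods (namespace and user are hypothetical)
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: pod-reader
  namespace: dev
rules:
- apiGroups: [""]                  # "" = core API group, where Pods live
  resources: ["pods"]
  verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: read-pods
  namespace: dev
subjects:
- kind: User
  name: jane                       # hypothetical user
  apiGroup: rbac.authorization.k8s.io
roleRef:                           # which Role the subjects receive
  kind: Role
  name: pod-reader
  apiGroup: rbac.authorization.k8s.io
```

Check it with k auth can-i list pods -n dev --as jane. A ClusterRole/ClusterRoleBinding pair has the same shape without metadata.namespace.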

2.5. Service Account

2.5.1. k create serviceaccount xxxx

2.5.1.1. Use it in a Pod:

    apiVersion: v1
    kind: Pod
    metadata:
      labels:
        run: busybox
      name: busybox
    spec:
      serviceAccountName: admin
      # containers: omitted in these notes

2.5.2. k create token xxxx

2.6. admission controller

2.6.1. NamespaceLifecycle

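2.6.2. Enable/disable plugins in /etc/kubernetes/manifests/kube-apiserver.yaml (a static Pod manifest; kubelet recreates the API server when the file changes)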

2.6.2.1. Excerpt:

    apiVersion: v1
    kind: Pod
    metadata:
      annotations:
        kubeadm.kubernetes.io/kube-apiserver.advertise-address.endpoint: 10.4.12.6:6443
      creationTimestamp: null
      labels:
        component: kube-apiserver
        tier: control-plane
      name: kube-apiserver
      namespace: kube-system
    spec:
      containers:
      - command:
        - kube-apiserver
        - --advertise-address=10.4.12.6
        - --allow-privileged=true
        - --authorization-mode=Node,RBAC
        - --client-ca-file=/etc/kubernetes/pki/ca.crt
        - --enable-admission-plugins=NodeRestriction,NamespaceAutoProvision
        - --disable-admission-plugins=DefaultStorageClass
        # ... remaining flags omitted

2.6.3. webhook

2.6.3.1. Webhook server Deployment:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: webhook-server
      namespace: webhook-demo
      labels:
        app: webhook-server
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: webhook-server
      template:
        metadata:
          labels:
            app: webhook-server
        spec:
          securityContext:
            runAsNonRoot: true
            runAsUser: 1234
          containers:
          - name: server
            image: stackrox/admission-controller-webhook-demo:latest
            imagePullPolicy: Always
            ports:
            - containerPort: 8443
              name: webhook-api
            volumeMounts:
            - name: webhook-tls-certs
              mountPath: /run/secrets/tls
              readOnly: true
          volumes:
          - name: webhook-tls-certs
            secret:
              secretName: webhook-server-tls

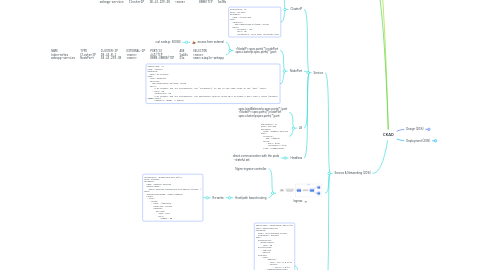

3. Service & Networking (20%)

3.1. Service

3.1.1. ClusterIP

3.1.1.1. spec.clusterIP:spec.ports[*].port

3.1.1.1.1. access within the cluster

3.1.1.1.2. Example output:

    NAME             TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)    AGE
    kubernetes       ClusterIP   10.43.0.1      <none>        443/TCP    10m
    webapp-service   ClusterIP   10.43.239.38   <none>        8080/TCP   5m30s

3.1.1.2. ClusterIP Service:

    apiVersion: v1
    kind: Service
    metadata:
      name: my-service
    spec:
      selector:
        app.kubernetes.io/name: MyApp
      ports:
      - protocol: TCP
        port: 80
        targetPort: 9376 # pod listening port

3.1.2. NodePort

3.1.2.1. <NodeIP>:spec.ports[*].nodePort and spec.clusterIP:spec.ports[*].port

3.1.2.1.1. access from outside the cluster

3.1.2.1.2. Example output:

    NAME             TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)          AGE     SELECTOR
    kubernetes       ClusterIP   10.43.0.1      <none>        443/TCP          5m48s   <none>
    webapp-service   NodePort    10.43.239.38   <none>        8080:30080/TCP   25s     name=simple-webapp

3.1.2.2. NodePort Service:

    apiVersion: v1
    kind: Service
    metadata:
      name: my-service
    spec:
      type: NodePort
      selector:
        app.kubernetes.io/name: MyApp
      ports:
        # By default and for convenience, the `targetPort` is set to the same value as the `port` field.
      - port: 80
        targetPort: 80
        # By default and for convenience, the Kubernetes control plane will allocate a port from a range (default: 30000-32767)
        nodePort: 30007 # expose

3.1.3. LoadBalancer

3.1.3.1. status.loadBalancer.ingress[*].ip:spec.ports[*].port, <NodeIP>:spec.ports[*].nodePort and spec.clusterIP:spec.ports[*].port

3.1.3.2. LoadBalancer Service:

    apiVersion: v1
    kind: Service
    metadata:
      name: example-service
    spec:
      selector:
        app: example
      ports:
      - port: 8765
        targetPort: 9376
      type: LoadBalancer

3.1.4. Headless

3.1.4.1. clusterIP: None; DNS returns the Pod IPs, so clients talk to the Pods directly (used with StatefulSets)
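
A minimal headless Service sketch; the name, label and port are hypothetical:

```yaml
# Sketch: headless Service (name, selector and port are hypothetical)
apiVersion: v1
kind: Service
metadata:
  name: db-headless
spec:
  clusterIP: None      # headless: no virtual IP, DNS returns the Pod IPs
  selector:
    app: db
  ports:
  - port: 5432
```

With a StatefulSet whose serviceName is db-headless, each Pod also gets its own DNS name, such as db-0.db-headless.<namespace>.svc.cluster.local.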

3.2. Ingress

3.2.1. Nginx-ingress-controller

3.2.2. Host/path based routing

3.2.2.1. Rewrite

3.2.2.1.1. Path-based rule with rewrite:

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: minimal-ingress
      annotations:
        nginx.ingress.kubernetes.io/rewrite-target: /   # /testpath is forwarded to the backend as /
    spec:
      ingressClassName: nginx-example
      rules:
      - http:
          paths:
          - path: /testpath
            pathType: Prefix
            backend:
              service:
                name: test
                port:
                  number: 80
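
The example above routes by path only. For host-based routing, a sketch along these lines should work; the hostnames, Service names and the nginx IngressClass name are all hypothetical:

```yaml
# Sketch: host-based routing (hosts, Services and class name are hypothetical)
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: host-based-ingress
spec:
  ingressClassName: nginx        # assumed IngressClass name
  rules:
  - host: shop.example.com       # requests with this Host header ...
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: shop-svc       # ... go to this Service
            port:
              number: 80
  - host: blog.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: blog-svc
            port:
              number: 80
```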

3.3. NetworkPolicy

3.3.1. Ingress and egress rules:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: test-network-policy
      namespace: default
    spec:
      podSelector:
        matchLabels:
          role: db
      policyTypes:
      - Ingress
      - Egress
      ingress:
      - from:
        - ipBlock:
            cidr: 172.17.0.0/16
            except:
            - 172.17.1.0/24
        - namespaceSelector:
            matchLabels:
              project: myproject
        - podSelector:
            matchLabels:
              role: frontend
        ports:
        - protocol: TCP
          port: 6379
      egress:
      - to:
        - ipBlock:
            cidr: 10.0.0.0/24
        ports:
        - protocol: TCP
          port: 5978

3.3.2. Egress-only policy:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: np1
      namespace: venus
    spec:
      podSelector:
        matchLabels:
          id: frontend          # label of the pods this policy should be applied on
      policyTypes:
      - Egress                  # we only want to control egress
      egress:
      - to:                     # 1st egress rule
        - podSelector:          # allow egress only to pods with api label
            matchLabels:
              id: api
      - ports:                  # 2nd egress rule
        - port: 53              # allow DNS UDP
          protocol: UDP
        - port: 53              # allow DNS TCP
          protocol: TCP

3.3.2.1. Restricts frontend Pods to egress only to api Pods and DNS (port 53)

4. Design (20%)

4.1. Arch design

4.1.1. Control Plane

4.1.1.1. API server

4.1.1.1.1. Front end of the Kubernetes control plane: every request from users or from internal Kubernetes components to the control plane goes through it

4.1.1.2. etcd - key value store (DB)

4.1.1.2.1. Stores all cluster state. etcd achieves high availability by replicating data across multiple members with automatic failover.

4.1.1.3. scheduler

4.1.1.3.1. Assigns Pods to nodes, deciding based on factors such as each Pod's resource requests and the nodes' available capacity

4.1.1.4. controller manager

4.1.1.4.1. watches the shared state of the cluster through the apiserver and makes changes attempting to move the current state towards the desired state

4.1.2. Node

4.1.2.1. kubelet - agent

4.1.2.1.1. register the node with the apiserver

4.1.2.1.2. runs the containers described in the PodSpecs assigned to its node and reports their status

4.1.2.2. kube-proxy

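4.1.2.2.1. Service discovery: kube-proxy watches Services (and their endpoints) being created and deleted, and from the Service spec generates iptables or IPVS rules that route Service traffic to the right Pods.

4.1.2.2.2. Load balancing: kube-proxy spreads a Service's traffic across its Pods (random selection in iptables mode; configurable algorithms such as round-robin in IPVS mode).

4.1.2.2.3. Traffic forwarding: in iptables/IPVS mode kube-proxy is not in the data path itself; the kernel rules it programs rewrite (DNAT) the destination from the Service IP to a selected Pod IP. This is address translation, not a security mechanism.

4.1.2.2.4. Availability: kube-proxy runs on every node (typically as a DaemonSet) and each instance only programs its own node, so there is no central proxy to fail over and no leader election.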

4.1.2.3. container runtime

4.1.2.3.1. such as containerd, CRI-O

4.2. Container

4.2.1. initContainers

4.2.1.1. usage: install and run tools, wait for other components

4.2.1.2. Delay startup:

    spec:
      containers:
      - ...
      initContainers:
      - name: startup-delay
        image: busybox:stable
        command: ['sh', '-c', 'sleep 10']

4.2.1.3. Wait until the myservice Service resolves in DNS:

    - name: init-myservice
      image: busybox:1.28
      command: ['sh', '-c', "until nslookup myservice.$(cat /var/run/secrets/kubernetes.io/serviceaccount/namespace).svc.cluster.local; do echo waiting for myservice; sleep 2; done"]

4.2.2. multi-container

4.2.2.1. Ambassador

4.2.2.1.1. The app only talks to localhost; the haproxy ambassador (configured by the haproxy-config ConfigMap) forwards the traffic:

    containers:
    - command:
      - sh
      - -c
      - while true; do curl localhost:9090; sleep 5; done
      image: radial/busyboxplus:curl
      name: busybox
    - name: ambassador
      image: haproxy:2.4
      volumeMounts:
      - name: haproxy-config
        mountPath: /usr/local/etc/haproxy/
    volumes:
    - name: haproxy-config
      configMap:
        name: haproxy-config

4.2.2.1.2. Usage: proxy, DB routing

4.2.3. Two containers in one Pod:

    spec:
      containers:
      - args:
        - /bin/sh
        - -c
        - ls; sleep 3600
        image: busybox
        name: busybox1
      - args:
        - /bin/sh
        - -c
        - echo Hello world; sleep 3600
        image: busybox
        name: busybox2

4.2.4. Jobs

4.2.4.1. Job:

    apiVersion: batch/v1
    kind: Job
    metadata:
      name: pi
    spec:
      template:
        spec:
          containers:
          - name: pi
            image: perl:5.34.0
            command: ["perl", "-Mbignum=bpi", "-wle", "print bpi(2000)"]
          restartPolicy: Never
      backoffLimit: 4       # retries before the Job is marked failed

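4.2.4.1.1. Other Job spec fields:

    activeDeadlineSeconds: 30   # terminate the Job if it runs longer than 30 s
    completions: 5              # 5 Pods must finish successfully
    parallelism: 2              # at most 2 Pods run at the same time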

4.2.4.2. CronJob:

    apiVersion: batch/v1
    kind: CronJob
    metadata:
      name: hello
    spec:
      schedule: "*/1 * * * *"   # every minute
      jobTemplate:
        spec:
          template:
            spec:
              containers:
              - name: hello
                image: busybox:1.28
                imagePullPolicy: IfNotPresent
                command:
                - /bin/sh
                - -c
                - date; echo Hello from the Kubernetes cluster
              restartPolicy: OnFailure

4.2.4.2.1. startingDeadlineSeconds: 10 (if a run cannot be started within 10 s of its scheduled time, that run is skipped and counted as missed)

4.3. Pod

4.3.1. Labels

4.3.1.1. tag - app/function/tier/release/env

4.3.1.2. Labels on a Pod:

    apiVersion: v1
    kind: Pod
    metadata:
      name: label-demo
      labels:
        environment: production
        app: nginx
    # spec omitted

4.3.1.3. kubectl get pods -l env=dev --no-headers | wc -l

4.3.2. ReplicaSet

4.3.2.1. selector

4.3.2.1.1. matchLabels

4.3.2.1.2. ReplicaSet (selector must match the template labels):

    apiVersion: apps/v1
    kind: ReplicaSet
    metadata:
      name: replicaset-1
    spec:
      replicas: 2
      selector:
        matchLabels:
          tier: front-end
      template:
        metadata:
          labels:
            tier: front-end
        spec:
          containers:
          - name: nginx
            image: nginx

4.3.2.2. Keeps the desired number of Pod replicas running (HA); Pods are grouped and selected by label

4.3.3. Toleration

4.4. Deploy

4.4.1. Deployment:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: "2048-deployment"
      namespace: "2048-game"
    spec:
      replicas: 5
      selector:
        matchLabels:
          app: "2048"
      template:
        metadata:
          labels:
            app: "2048"
        spec:
          containers:
          - image: alexwhen/docker-2048
            imagePullPolicy: Always
            name: "2048"
            ports:
            - containerPort: 30080

4.5. Node

4.5.1. nodeSelector

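4.5.1.1. nodeSelector matches node labels:

    spec:
      nodeSelector:
        nodeName: nginxnode   # the node must carry the label nodeName=nginxnode
    # to pin a Pod to a node by its actual name, use spec.nodeName instead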

4.5.2. Affinity - specifies which nodes a Pod should run on

4.5.2.1. Required and preferred node affinity:

    apiVersion: v1
    kind: Pod
    metadata:
      name: with-node-affinity
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: topology.kubernetes.io/zone
                operator: In
                values:
                - antarctica-east1
                - antarctica-west1
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 1
            preference:
              matchExpressions:
              - key: another-node-label-key
                operator: In
                values:
                - another-node-label-value
      containers:
      - name: with-node-affinity
        image: registry.k8s.io/pause:2.0

4.5.2.2. Selecting an AWS AZ

4.5.2.2.1. Zone affinity:

    affinity:
      nodeAffinity:
        requiredDuringSchedulingIgnoredDuringExecution:
          nodeSelectorTerms:
          - matchExpressions:
            - key: topology.kubernetes.io/zone   # replaces deprecated failure-domain.beta.kubernetes.io/zone
              operator: In
              values:
              - us-west-2a
              - us-west-2b

4.5.3. Taint and Toleration - keep Pods off a node unless they tolerate its taint

4.5.3.1. taint on node

4.5.3.1.1. k taint nodes node01 app=green:NoSchedule

4.5.3.2. toleration on pod

4.5.3.2.1. Tolerations:

    spec:
      containers:
      - name: nginx
        image: nginx
        imagePullPolicy: IfNotPresent
      tolerations:
      - key: "example-key"
        operator: "Exists"
        effect: "NoSchedule"
      - key: "key1"
        operator: "Equal"
        value: "value1"
        effect: "NoExecute"

4.6. Volume

4.6.1. hostPath volume:

    apiVersion: v1
    kind: Pod
    metadata:
      name: hostpath-volume-test
    spec:
      containers:
      - name: busybox
        image: busybox:stable
        command: ['sh', '-c', 'cat /data/data.txt']
        volumeMounts:
        - name: host-data
          mountPath: /data
      volumes:
      - name: host-data
        hostPath:
          path: /etc/hostPath
          type: Directory

4.6.1.1. hostPath volume types: Directory (mounts an existing directory on the host); DirectoryOrCreate (mounts a directory on the host, creating it if it doesn't exist); File (mounts an existing single file on the host); FileOrCreate (mounts a file on the host, creating it if it doesn't exist).

4.6.2. emptyDir volume (lives as long as the Pod):

    apiVersion: v1
    kind: Pod
    metadata:
      name: emptydir-volume-test
    spec:
      containers:
      - name: busybox
        image: busybox:stable
        command: ['sh', '-c', 'echo "Writing to the empty dir..." > /data/data.txt; cat /data/data.txt']
        volumeMounts:
        - name: emptydir-vol
          mountPath: /data
      volumes:
      - name: emptydir-vol
        emptyDir: {}

4.6.3. PersistentVolume

4.6.3.1. PersistentVolume:

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: hostpath-pv
    spec:
      capacity:
        storage: 1Gi
      accessModes:
      - ReadWriteOnce
      storageClassName: slow
      hostPath:
        path: /etc/hostPath
        type: Directory

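4.6.3.1.1. Reclaim Policy (persistentVolumeReclaimPolicy): Retain keeps the volume and its data after the PVC is deleted; Delete removes the underlying storage; Recycle is deprecated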
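
Setting the reclaim policy explicitly on the hostpath-pv above, as a sketch. Retain is already the default for manually created PVs; dynamically provisioned PVs inherit the StorageClass reclaimPolicy, which defaults to Delete.

```yaml
# Sketch: hostpath-pv with an explicit reclaim policy
apiVersion: v1
kind: PersistentVolume
metadata:
  name: hostpath-pv
spec:
  persistentVolumeReclaimPolicy: Retain   # keep data after the bound PVC is deleted
  capacity:
    storage: 1Gi
  accessModes:
  - ReadWriteOnce
  storageClassName: slow
  hostPath:
    path: /etc/hostPath
    type: Directory
```

A Retained PV goes to the Released phase and is not bound again automatically; an admin has to clean it up or recreate it.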

4.6.3.2. PersistentVolumeClaim (binds to a PV of the same class with enough capacity):

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: hostpath-pvc
    spec:
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 200Mi
      storageClassName: slow

4.6.3.3. Pod using the claim:

    apiVersion: v1
    kind: Pod
    metadata:
      name: pv-pod-test
    spec:
      containers:
      - name: busybox
        image: busybox:stable
        command: ['sh', '-c', 'cat /data/data.txt']
        volumeMounts:
        - name: pv-host-data
          mountPath: /data
      volumes:
      - name: pv-host-data
        persistentVolumeClaim:
          claimName: hostpath-pvc

4.6.3.4. Cloud

4.6.3.4.1. In-tree volume plugins (deprecated; being migrated to CSI drivers)

4.6.3.4.2. Container Storage Interface (CSI)

4.7. StatefulSet (optional)

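4.7.1. Ordered deployment: a StatefulSet creates Pods in ordinal order, ensuring orderly startup and shutdown.

4.7.2. Stable network identity: each Pod has a unique, stable network identifier that can be used for service discovery and communication inside the cluster.

4.7.3. Stable persistent storage: each Pod has its own persistent volume, and the data survives Pod restarts.

4.7.4. Upgrades and scaling: StatefulSets support rolling updates and scaling, changing the number and version of Pods while keeping the application available.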
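
A minimal StatefulSet sketch illustrating ordered Pods, stable names and per-Pod storage; the names, image and storage size are assumptions, and a headless Service called web-headless is assumed to exist:

```yaml
# Sketch: StatefulSet with per-Pod storage (names and sizes are assumptions)
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  serviceName: web-headless     # assumed headless Service (clusterIP: None)
  replicas: 3                   # Pods web-0, web-1, web-2, created in order
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
      - name: nginx
        image: nginx
        volumeMounts:
        - name: data
          mountPath: /usr/share/nginx/html
  volumeClaimTemplates:         # one PVC per Pod: data-web-0, data-web-1, ...
  - metadata:
      name: data
    spec:
      accessModes: ["ReadWriteOnce"]
      resources:
        requests:
          storage: 1Gi
```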

5. Deployment (20%)

5.1. Rolling update

5.1.1. Deployment to update:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: rolling-deployment
    spec:
      replicas: 5
      selector:
        matchLabels:
          app: rolling
      template:
        metadata:
          labels:
            app: rolling
        spec:
          containers:
          - name: nginx
            image: nginx:1.14.2
            ports:
            - containerPort: 80

5.1.1.1. kubectl set image deployment/rolling-deployment nginx=nginx:1.16.1

5.1.1.2. kubectl rollout status deployment/rolling-deployment

5.1.2. or

5.1.3. kubectl edit deployment rolling-deployment, then change the image:

    containers:
    - name: nginx
      image: nginx:1.20.1

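5.1.4. k apply -f deploy.yaml --record=true (saves the command as the change cause shown in rollout history; --record is deprecated, the alternative is setting the kubernetes.io/change-cause annotation)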

5.1.5. kubectl rollout undo deployment/rolling-deployment

5.1.6. k rollout history deployment/myapp-deployment
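
The rolling-deployment above relies on the default RollingUpdate strategy (maxSurge and maxUnavailable both default to 25%). A sketch of tuning it explicitly; the values are assumptions chosen for a rollout that never drops below full capacity:

```yaml
# Fragment for the rolling-deployment spec (values are illustrative)
spec:
  replicas: 5
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1          # at most 5 + 1 = 6 Pods exist during the rollout
      maxUnavailable: 0    # never fewer than 5 available Pods
```

To go back further than one step, kubectl rollout undo deployment/rolling-deployment --to-revision=<n> uses a revision number from rollout history.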

5.2. Blue/Green

5.2.1. Labels

5.2.1.1. Blue Deployment:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: blue-deployment
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: bluegreen-test
          color: blue
      template:
        metadata:
          labels:
            app: bluegreen-test
            color: blue
        spec:
          containers:
          - name: nginx
            image: nginx:blue
            ports:
            - containerPort: 80

5.2.1.1.1. Green Deployment:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: green-deployment
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: bluegreen-test
          color: green
      template:
        metadata:
          labels:
            app: bluegreen-test
            color: green
        spec:
          containers:
          - name: nginx
            image: nginx:green
            ports:
            - containerPort: 80

5.2.2. Service - Selector - label

5.2.2.1. Service pointing at blue:

    apiVersion: v1
    kind: Service
    metadata:
      name: bluegreen-test-svc
    spec:
      selector:
        app: bluegreen-test
        color: blue        # the key label: all traffic goes to the blue Deployment
      ports:
      - protocol: TCP
        port: 80
        targetPort: 80

5.2.2.1.1. Cut over by switching the selector to green:

    spec:
      selector:
        app: bluegreen-test
        color: green

5.3. Canary (small %)

5.3.1. Main Deployment:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: main-deployment
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: canary-test
          environment: main
      template:
        metadata:
          labels:
            app: canary-test
            environment: main
        spec:
          containers:
          - name: nginx
            image: linuxacademycontent/ckad-nginx:1.0.0
            ports:
            - containerPort: 80

5.3.1.1. Traffic share controlled by the replica ratio (3 main : 1 canary, so roughly 1/4 = 25% of requests reach the canary)

5.3.1.1.1. Canary Deployment:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: canary-deployment
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: canary-test
          environment: canary   # the key difference from main
      template:
        metadata:
          labels:
            app: canary-test
            environment: canary
        spec:
          containers:
          - name: nginx
            image: linuxacademycontent/ckad-nginx:canary
            ports:
            - containerPort: 80

5.3.2. Service selecting both Deployments:

    apiVersion: v1
    kind: Service
    metadata:
      name: canary-test-svc
    spec:
      selector:
        app: canary-test   # matches both main and canary Pods
      ports:
      - protocol: TCP
        port: 80
        targetPort: 80

5.3.2.1. k scale deployment main-deployment --replicas=<x> (adjusts the ratio)

5.3.3. Istio - control the traffic split by percentage

5.3.3.1. virtual service

5.3.3.2. destination rule
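
A sketch of the Istio approach, assuming Istio is installed with sidecar injection enabled for the namespace and reusing the canary-test-svc Service and environment labels from the canary example; the 90/10 split is an arbitrary example:

```yaml
# Sketch: weighted canary with Istio (assumes Istio + sidecar injection)
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: canary-test
spec:
  host: canary-test-svc
  subsets:                  # named groups of Pods, selected by label
  - name: main
    labels:
      environment: main
  - name: canary
    labels:
      environment: canary
---
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: canary-test
spec:
  hosts:
  - canary-test-svc
  http:
  - route:
    - destination:
        host: canary-test-svc
        subset: main
      weight: 90            # percent of requests
    - destination:
        host: canary-test-svc
        subset: canary
      weight: 10
```

Unlike the replica-ratio approach, the weights are independent of how many replicas each Deployment runs.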

5.4. Helm

5.4.1. Chart - package contains K8S resources for app

5.4.2. Repo

5.4.2.1. helm repo add bitnami https://charts.bitnami.com/bitnami

5.4.2.2. helm repo update

5.4.2.3. helm search repo wordpress

5.4.2.4. helm search hub wordpress

5.4.3. Install

5.4.3.1. kubectl create namespace dokuwiki

5.4.3.2. helm show values bitnami/apache | yq e | grep repli # pretty-print the values YAML (with color) and find the replica settings

5.4.3.2.1. helm -n mercury install rel-int bitnami/apache --set replicaCount=2

5.4.3.2.2. helm install my-release ./my-chart --set service.port=8080

5.4.3.3. helm install --set persistence.enabled=false -n dokuwiki dokuwiki bitnami/dokuwiki

5.4.3.4. helm install release-1 bitnami/wordpress

5.4.3.4.1. helm install release-1 .

5.4.3.5. helm uninstall release-1

5.4.3.6. helm pull --untar bitnami/apache

5.5. Docker

5.5.1. docker build -t name:tag .

5.5.2. docker push name:tag (the name must include the registry/repository, e.g. <user>/name:tag)

5.5.3. docker run --rm python:3.6 sh -c 'cat /etc/*release*' (quote the glob so it expands inside the container, not on the host)