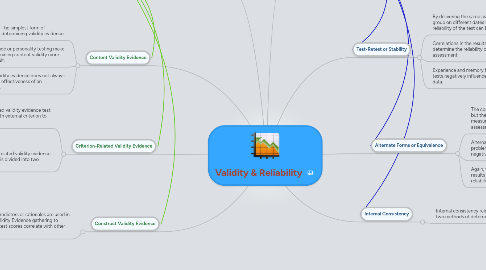

1. Content Validity Evidence

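1.1. Content validity evidence is the simplest form of validity evidence to establish: test items are inspected to check that they match the content the test is meant to cover.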

1.2. Content validity is more difficult to determine for aptitude or personality tests.

1.3. Content validity evidence alone does not show that an assessment is effective; a test can cover the right content and still be poorly constructed.

2. Criterion-Related Validity Evidence

2.1. To obtain criterion-related validity evidence, test scores are correlated with an external criterion.

2.2. Criterion-related validity evidence gathering is divided into two subsets.

2.2.1. Predictive Validity Evidence

2.2.1.1. "Determined by correlating test scores with criterion collected after a period of time has passed" (Kubiszyn & Borich, 2012).

2.2.1.1.1. In other words, student test scores are compared with a criterion measured later, such as correlating students' 2012 test scores with the same students' 2014 test scores.

2.2.2. Concurrent Criterion-Related Validity Evidence

2.2.2.1. "Determined by correlating test scores with a criterion measure at the same time" (Kubiszyn & Borich, 2012, p. 339).

2.2.2.1.1. In other words, student test scores are compared with a criterion measured at about the same time, such as the same students' scores on a national test given in 2012.
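
Both kinds of criterion-related evidence come down to a correlation between paired scores. The following is a minimal sketch, assuming a Pearson correlation is the coefficient of interest; the function name `pearson_r` and all score values are hypothetical and chosen only for illustration.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of paired scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least two pairs")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when all scores are identical")
    return cov / sqrt(var_x * var_y)


# Hypothetical data: each position in the lists is the same student.
test_scores = [52, 61, 70, 75, 88]      # scores on the test being validated
criterion_later = [55, 60, 72, 80, 85]  # criterion collected after time has passed
criterion_now = [50, 65, 68, 79, 90]    # criterion collected at the same time

predictive_r = pearson_r(test_scores, criterion_later)  # predictive evidence
concurrent_r = pearson_r(test_scores, criterion_now)    # concurrent evidence
```

The only difference between the two coefficients is when the criterion was collected; the calculation itself is identical.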

3. Construct Validity Evidence

3.1. Construct validity evidence is gathered by checking whether test scores relate to other variables in the way a theory or rationale predicts.

4. "Validity: Does the test measure what it is supposed to measure?" (Kubiszyn & Borich, 2012, p. 329)

4.1. If a test has an intended purpose, validity evidence indicates whether the test fulfills that purpose.

5. Test-Retest or Stability

5.1. The same assessment is given to the same group twice, on different dates, and the two sets of scores are compared to estimate the reliability of the test.

5.2. The correlation between the two sets of scores serves as the estimate of the assessment's reliability.

5.3. Experience and memory from the first administration can distort test-retest data.

6. Alternate Forms or Equivalence

6.1. The concept of alternate forms is similar to test-retest, but the tests are not identical. Instead, the tests measure the same basic concepts using different assessment items.

6.2. Alternate forms can solve the test-retest problem in which experience and memory negatively impact the results.

6.3. As with test-retest, the correlation between scores on the two forms is used to estimate reliability.

7. Internal Consistency

7.1. Internal consistency is estimated with two methods.

7.1.1. The Kuder-Richardson method estimates how consistently the items on a single test measure one concept.

7.1.2. The split-half method estimates reliability from a single administration: the test is divided into two halves (for example, odd-numbered and even-numbered items), each student receives a score on each half, and the two sets of half-test scores are correlated.

7.1.2.1. Because each half is only half as long as the full test, the split-half correlation underestimates the reliability of the whole test, so the result is only a rough estimate.

7.1.2.2. The Spearman-Brown prophecy formula is applied to adjust this correlation so that it estimates the reliability of the full-length test.
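
The internal-consistency estimates can be sketched in a few lines. This is an illustrative sketch only, assuming items scored 0 or 1, an odd/even split of the items, the KR-20 form of the Kuder-Richardson method, and population variance of total scores; the function names and the response data are hypothetical.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of paired scores."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def spearman_brown(r_half):
    """Step a half-test correlation up to a full-length reliability estimate."""
    return 2 * r_half / (1 + r_half)


def split_half(responses):
    """Correlate odd-item and even-item half scores for every student.

    responses: one row per student, one 0/1 entry per item.
    Returns (half-test correlation, Spearman-Brown adjusted estimate).
    """
    odd_scores = [sum(row[0::2]) for row in responses]   # items 1, 3, 5, ...
    even_scores = [sum(row[1::2]) for row in responses]  # items 2, 4, 6, ...
    r_half = pearson_r(odd_scores, even_scores)
    return r_half, spearman_brown(r_half)


def kr20(responses):
    """KR-20 for 0/1 items, using the population variance of total scores."""
    n = len(responses)
    k = len(responses[0])
    totals = [sum(row) for row in responses]
    mean_total = sum(totals) / n
    var_total = sum((t - mean_total) ** 2 for t in totals) / n
    sum_pq = 0.0
    for j in range(k):
        p = sum(row[j] for row in responses) / n  # proportion answering item j correctly
        sum_pq += p * (1 - p)
    return (k / (k - 1)) * (1 - sum_pq / var_total)


# Hypothetical responses: 6 students, 6 items (1 = correct, 0 = incorrect).
responses = [
    [1, 1, 1, 1, 1, 1],
    [1, 1, 1, 1, 1, 0],
    [1, 1, 1, 0, 1, 0],
    [1, 0, 1, 0, 0, 0],
    [0, 1, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
]

r_half, r_full = split_half(responses)
kr20_estimate = kr20(responses)
```

Every student answers every item; only the scoring is split. For any half-test correlation between 0 and 1, the adjusted value is larger than the unadjusted one, which reflects that a longer test tends to be more reliable than either of its halves.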