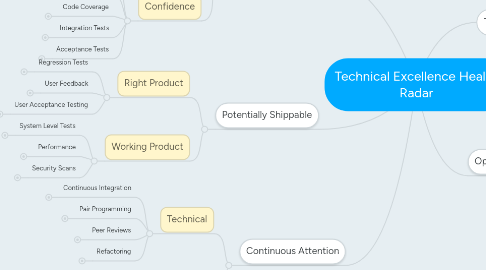

1. Continuous Attention

1.1. Technical

1.1.1. Continuous Integration

1.1.1.1. What percentage of the team commits code on the same day they change it

1.1.1.2. What percentage of team members commit to the baseline

1.1.1.3. How easy are your merges (1-5)

1.1.1.4. How effective are your mitigation processes when a feature needs to be disabled/removed from an implementation (1-5)

1.1.2. Pair Programming

1.1.2.1. What percentage of the sprint does your team pair

1.1.2.2. What percentage of the team participates in pairing

1.1.2.3. How frequently do you rotate individuals to pair with (1-5)

1.1.2.4. How frequently do you rotate driver & navigator (1-5)

1.1.2.5. How effective is your team at handling conflict within pairs (1-5)

1.1.2.6. How focused is the navigator on average (1-5)

1.1.3. Peer Reviews

1.1.3.1. How helpful are peer reviews (1-5)

1.1.3.2. How lean are your peer reviews (1-5)

1.1.3.3. What percentage of code committed gets peer reviewed

1.1.3.4. How confident do you feel that the peer reviewers actually understood and followed the code they reviewed (1-5)

1.1.4. Refactoring

1.1.4.1. How effective is the frequency of refactoring (1-5)

1.1.4.2. How effective is the average size of refactoring (1-5)

1.1.4.3. How effective are the team's refactoring efforts (1-5)

1.2. Iteration

1.2.1. Sustainable Pace

1.2.1.1. What percentage of the team does not work overtime

1.2.1.2. On average, what percentage of each sprint gets done

1.2.1.3. How sustainable do you believe your development process is (1-5)

1.2.1.4. How well is the team taking on the right amount of work (1-5)

1.2.2. Focus

1.2.2.1. How well known is the sprint goal (1-5)

1.2.2.2. What percentage of a sprint revolves around the sprint goal

1.2.2.3. What percentage of time during the sprint does the team work on sprint work

1.2.2.4. How well does the team handle distractions and interruptions during the sprint (1-5)

1.2.3. Technical Debt

1.2.3.1. How well known is the project's technical debt (1-5)

1.2.3.2. How effective is the team's process in paying off technical debt (1-5)

1.2.3.3. How effective is the current rate of technical debt payment (1-5)

1.2.3.4. What percentage of the baseline is not dead code

2. Development

2.1. Habits

2.1.1. Simple/Emergent Design

2.1.1.1. What percentage of work is done to accomplish the stories at hand and not potential future stories

2.1.1.2. What percentage of time does the team do the simplest thing that could possibly work next instead of designing fully up front

2.1.1.3. How testable is the code/design (1-5)

2.1.1.4. How understandable is the code/design (1-5)

2.1.1.5. How browsable is the code/design (1-5)

2.1.1.6. How explainable is the code/design (1-5)

2.1.2. Maintainability

2.1.2.1. How easy is it to maintain the baseline (1-5)

2.1.2.2. How easy is it to maintain new features in the baseline (1-5)

2.1.2.3. What percentage of builds are static analysis tools run against

2.1.2.4. What percentage of code is not repeated lines of code

2.1.2.5. How effective is your use of static analysis tool output (1-5)

2.1.2.6. How well known is the code complexity to the team (1-5)

2.1.3. Clean Code

2.1.3.1. How well are newcomers able to read the code that has been written (1-5)

2.1.3.2. What percentage of comments answer the "why" not the "how"

2.1.3.3. What percentage of methods have fewer than 3 arguments

2.1.3.4. What percentage of methods are less than X (7?) lines of code

2.1.3.5. What percentage of classes are less than 1000 lines of code

2.1.3.6. What percentage of your tests are written to the same level as production code

2.1.3.7. What percentage of the code follows an agreed upon coding standard

2.1.4. Test Driven Development

2.1.4.1. How often do you write a test before the code (1-5)

2.1.4.2. How often do you write the right tests (1-5)

2.1.4.3. How often do you have to fix a failing test because it was written wrong (1-5)

2.1.4.4. How often do you run all the class tests after changing code (1-5)

2.1.4.5. What percentage of the team participates in TDD

2.1.5. Security

2.1.5.1. How secure is the baseline (1-5)

2.1.5.2. How mindful is the team of vulnerabilities in the system (1-5)

2.1.5.3. How confident are you that your code will maintain its level of security with the current DoD and team practices (1-5)

2.2. Confidence

2.2.1. Automated Builds

2.2.1.1. From a fresh clone how easy is it to build the project (1-5)

2.2.1.2. How efficient are your builds (1-5)

2.2.1.3. A question that suggests builds aren't slowing down the team and aren't a big impediment to improving velocity or quality

2.2.1.3.1. How long does the build take? Is it less than 10 minutes?

2.2.2. Unit Tests

2.2.2.1. How empowered and willing is the team in writing unit tests (1-5)

2.2.2.2. When a test fails how easy is it to identify why (1-5)

2.2.2.3. How much closer did your last sprint get you to your team's unit test goals (1-5)

2.2.2.4. How efficient are your unit tests (1-5)

2.2.2.5. How fast are your unit tests (1-5)

2.2.2.6. What percentage of unit tests are automated

2.2.3. Code Coverage

2.2.3.1. What percentage of test code coverage is your project at

2.2.3.2. What percentage of branch coverage is your project at

2.2.3.3. How much closer did your last sprint get you to your team's code coverage goals (1-5)

2.2.4. Integration Tests

2.2.4.1. How empowered and willing is the team in writing integration tests (1-5)

2.2.4.2. How often are integration tests run (1-5)

2.2.4.3. How much of your system is covered by integration tests (1-5)

2.2.4.4. What percentage of your integration tests are automated

2.2.4.5. How fragile are your integration tests (5-1)

2.2.4.6. How often do you have false positives (5-1)

2.2.5. Acceptance Tests

2.2.5.1. How empowered and willing is the team in writing acceptance tests (1-5)

2.2.5.2. How confident are you that a sprint is complete and bug-free (1-5)

2.2.5.3. How easy is it to validate and verify a story is complete when a change is made (1-5)

2.2.5.4. How confident are you that at the end of the sprint your build will run on prod the same as it will on test (1-5)

2.2.5.5. What percentage of acceptance tests are automated

3. Potentially Shippable

3.1. Right Product

3.1.1. Regression Tests

3.1.1.1. How confident are you that this sprint has not broken any previous stories (1-5)

3.1.1.2. What percentage of regression bugs are found during the sprint

3.1.1.3. What percentage of regression tests are automated

3.1.2. User Feedback

3.1.2.1. At what level do you believe you have solved the real problem a user had (1-5)

3.1.2.2. How effective was the interaction with the PO, customer, stakeholders and users last sprint (1-5)

3.1.2.3. What percentage of stories in the sprint involved user feedback

3.1.3. User Acceptance Testing

3.1.3.1. When a user first sees the outcome of the latest sprint, how do you think they will respond? (wtf, that's not working right, hmm what is that supposed to do, wow that's nice)

3.1.3.2. When a user first sees the outcome of the latest sprint how well understood will the work be (1-5)

3.1.3.3. How effective do you believe the UAT testing processes are (1-5)

3.1.3.4. How efficient do you believe the UAT testing processes are (1-5)

3.1.3.5. How happy was the Product Owner with the last sprint (1-5)

3.1.3.6. What was the PO's minimum happiness level for all the stories last sprint (1-5)

3.2. Working Product

3.2.1. System Level Tests

3.2.1.1. How quickly can you validate and verify the baseline is potentially shippable when a change is made (1-5)

3.2.1.2. If the last sprint's work was used to run a LASIK machine, how comfortable would you feel going under the laser tomorrow (1-5)

3.2.1.3. How sturdy (non-fragile) are the team's system level tests (1-5)

3.2.1.4. How easy is it to add new system level tests (1-5)

3.2.2. Performance

3.2.2.1. How well known is it if the last sprint improved or hurt performance (1-5)

3.2.2.2. What percentage of stories passed the team's minimum performance requirements

3.2.2.3. How confident are you that the product will be able to support all the users in production (1-5)

3.2.3. Security Scans

3.2.3.1. What percentage of builds are security scanned

3.2.3.2. How timely is your security scan feedback (1-5)

3.2.3.3. What percentage of security scans are automated

4. Health Radar draft

4.1. Continuous Delivery

4.1.1. Environment

4.1.1.1. Control of Test

4.1.1.1.1. rename?

4.1.1.2. Hardware to Support

4.1.1.2.1. rename?

4.1.1.3. Tools to Support

4.1.2. Development

4.1.2.1. Commit Often

4.1.2.2. Continuous Integration

4.1.2.3. Automated Unit Tests

4.1.2.3.1. do we need word "automated"?

4.1.2.4. Code Coverage

4.1.2.5. Tests Run Quickly

4.1.2.6. Tests Quickly Identify What is a Problem

4.1.2.6.1. rename?

4.1.2.7. Automated Builds

4.1.3. Testing

4.1.3.1. Automated Deployments to Test

4.1.3.1.1. do we need word "automated"?

4.1.3.2. Automated Security Scanning

4.1.3.3. Integration Tests

4.1.3.4. System Level Tests

4.1.3.5. User Acceptance Testing

4.1.4. Production Deployment

4.1.4.1. Potentially Shippable

4.1.4.2. User Feedback

4.1.4.2.1. rename?

4.2. Good Design

4.2.1. Simple Design

4.2.1.1. Test Driven Development

4.2.1.2. Focus

4.2.1.2.1. rename?

4.2.1.3. Clean Code

4.2.1.3.1. rename?

4.2.2. Quality

4.2.2.1. Pair Programming

4.2.2.2. Refactoring

4.2.2.3. Tools and Resources

4.2.2.4. Peer Reviews

4.2.2.4.1. rename?

4.2.2.5. Security

4.3. Confidence

4.3.1. Stability

4.3.1.1. rename?

4.3.1.1.1. regression.. mm don't like this

4.3.1.2. Automated Regression Tests

4.3.1.3. Technical Debt

4.3.1.3.1. metrics

4.3.1.4. Number of Critical Defects

4.3.1.4.1. rename?

4.3.2. Proof of Done

4.3.2.1. Automated Acceptance Tests

4.3.2.2. Definition of Done

4.3.2.3. Verification

4.3.3. Production

4.3.3.1. Stability

4.3.3.2. Root Cause Analysis

4.3.3.3. Performance

4.3.3.4. Real User Monitoring

4.3.3.5. Validation

4.3.3.5.1. rename?

4.4. People

4.4.1. Skills

4.4.1.1. Cross Functional

4.4.1.2. Empowered

4.4.1.2.1. rename?

4.4.1.3. Learning/Applying New Skills

4.4.1.4. Community of Practice

4.4.1.5. Innovation

4.4.2. Whole Team

4.4.2.1. Everyone Commits

4.4.2.1.1. hg commit

4.4.2.2. Collective Ownership

4.4.2.3. Bus Value

4.4.2.3.1. rename?

4.4.2.4. Team Improvement

4.4.2.4.1. rename?

4.4.2.5. Sustainable Pace

4.5. Where Do These Go?

4.5.1. Lean Project Feedback

4.5.1.1. when do we cut features?

4.5.1.2. maybe a measurement of something else

4.5.1.3. maybe delete?

5. Technical Excellence

5.1. Definitions

5.1.1. Excellence

5.1.1.1. the state, quality, or condition of excelling; superiority. "Excel" is defined as: to be better than; to surpass or do better than others

5.1.1.1.1. Webster

5.1.2. Agile Principle #9

5.1.2.1. "Continuous attention to technical excellence and good design enhances agility."

5.1.2.1.1. Continuous Attention

5.1.2.1.2. Technical Excellence

5.1.2.1.3. Good Design

5.1.2.1.4. Enhances Agility

5.1.3. technical excellence means building quality in

5.1.4. Agile Principle #9 - Cloud Space

5.1.4.1. Not Business Value

5.1.4.1.1. Working features

5.1.4.1.2. ability to add new features quickly

5.1.4.1.3. daily updates on progress

5.1.4.1.4. regular planning meetings

5.1.4.2. Technical Value

5.1.4.2.1. clean & easy to understand code

5.1.4.2.2. automated tests

5.1.4.2.3. clear direction

5.1.4.2.4. good tools

5.1.4.2.5. snacks

5.1.4.3. Examples

5.1.4.3.1. Clean code

5.1.4.3.2. Technical Debt

5.2. Metrics to Gather for each Category

5.2.1. hmmm should this just be bubbles off the health diagram so they don't have to be synced up???

6. Take the Quiz

7. Some Hard Work

8. Operations

8.1. Performance

8.1.1. Stability

8.1.1.1. # of critical defects - need better questions somehow

8.1.1.1.1. How much feedback on critical defects do you get (1-5)

8.1.1.1.2. How frequently do you address critical outages (1-5)

8.1.1.1.3. How long does the average critical defect live before being fixed

8.1.1.2. What is the up time percentage of your system

8.1.1.3. How effective is the team at fixing critical production bugs (1-5)

8.1.1.4. How efficient is the team at fixing critical production bugs (1-5)

8.1.2. Real User Monitoring (RUM)

8.1.2.1. How quickly would you know when a system is degraded (1-5)

8.1.2.2. How easy is it to gather new application and user statistics (1-5)

8.1.2.3. How well does the team utilize RUM stats in making decisions (1-5)

8.1.2.4. How well known are the most used and least used features of your system (1-5)

8.2. Verification

8.2.1. Root Cause Analysis

8.2.1.1. How empowered and willing is the team in performing root cause analysis when a problem is identified in production (1-5)

8.2.1.2. How easy is it to identify what a problem is in production (1-5)

8.2.1.3. How effective are the tools and processes in automatically identifying the root cause of a production problem (1-5)

8.2.2. Implementation

8.2.2.1. Is the software running in production the same software built by the build tool after the initial commit?

8.2.2.2. What percentage of your production configurations are managed by source control

8.2.2.3. How quick is it to verify the system is functioning correctly after an implementation (1-5)

8.2.2.4. How successful was the last implementation (1-5)

8.2.2.5. How successful is the average implementation (1-5)

8.2.2.6. How repeatable is your deployment process (1-5)

8.2.2.7. How similar are your test and prod deployment processes (1-5)

9. Team

9.1. Core

9.1.1. Empowered

9.1.1.1. To what level do you feel you are empowered to do what needs to be done to ensure quality (1-5)

9.1.1.2. What percentage of the Definition of Done and team processes were defined by the team

9.1.1.3. How lean do you feel your team is (1-5)

9.1.1.4. How do you feel management is supporting your team (1-5)

9.1.2. Collective Ownership

9.1.2.1. How empowered and willing is the team to make changes on any project within the team (1-5)

9.1.2.2. How empowered and willing is the team to make design decisions on any project within the team (1-5)

9.1.2.3. To what level are individual team members' mistakes seen as the team's problems (1-5)

9.1.2.4. When work is held up (by non-external dependencies), how empowered and willing is the team to continue with that work (1-5)

9.1.3. Cross Functional

9.1.3.1. For any given feature what percentage can your team accomplish without external help/dependencies?

9.1.3.2. What percentage of all the roles needed to accomplish any given story are team members

9.1.3.3. What percentage of roles on the team can all the team members effectively participate in and accomplish

9.1.3.4. What percentage of roles on the team are all the team members empowered to participate in and accomplish

9.1.4. Bus Factor

9.1.4.1. What percentage of the team would you have to lose to really stop progress on any story/feature/baseline/project

9.1.4.2. What percentage of the team would have to miss work for any single story to stop progress

9.1.4.3. What percentage of team members would have to be out of the country for your On Call Return to Service Time to double

9.1.4.4. What percentage of roadblocks were not due to vacation/sick/missing/absent team members

9.1.4.4.1. How many roadblocks/impediments arose last sprint due to vacation/sickness/missing/absent team members?

9.1.4.5. When a new feature is added to the project how many sprints will it take for the rest of the team to be able to work on it without help from the original implementers?

9.1.5. Definition of Done

9.1.5.1. How well does the team know the definition of done (1-5)

9.1.5.2. How well does the team commit to the definition of done (1-5)

9.1.5.3. How useful is the definition of done (1-5)

9.1.5.4. On average what percentage of the definition of done are stories being accepted at

9.1.5.5. On average how many stories a sprint are being accepted that meet the definition of done

9.2. Adapting

9.2.1. New Skills

9.2.1.1. How adaptive is your team in learning/applying the required skills to accomplish a sprint (1-5)

9.2.1.2. How adaptive is your team in learning/applying new skills to better meet the definition of done (1-5)

9.2.1.3. How effective are Communities of Practice to your team (1-5)

9.2.1.4. How helpful is your team in presenting at Communities of Practice (1-5)

9.2.1.5. How effectively is conference attendance being utilized (1-5)

9.2.2. Team Improvement

9.2.2.1. How well is your team reflecting on how to become more effective and adjusting to those reflections (1-5)

9.2.2.2. How effective are your team retrospectives in producing positive changes to the team processes (1-5)

9.2.2.3. What percentage of the team dedicates themselves to honestly attempting retrospective items throughout the sprint

9.2.2.4. What percentage of the team helps each other out in any circumstance when it's needed or could offer substantial help

9.2.3. Innovation

9.2.3.1. How empowered and willing is the team to innovate (1-5)

9.2.3.2. How innovative is your team (1-5)

9.2.3.3. How innovative is your surrounding environment (1-5)

9.3. Support/Environment

9.3.1. Resources

9.3.1.1. To what extent do you feel you have enough hardware to be technically excellent (1-5)

9.3.1.2. How effective are the resources available to you in accomplishing the sprint (1-5)

9.3.1.3. How easy is it to get new resources to support the success of the sprint (1-5)

9.3.1.4. What percentage of the sprint couldn't have been solved sooner or more effectively with more resources

9.3.2. Tools

9.3.2.1. How effective are the tools available to you in accomplishing the sprint (1-5)

9.3.2.2. How easy is it to get new tools to support the success of the sprint (1-5)

9.3.2.3. How well utilized are the current tools (1-5)

9.3.2.4. What percentage of the sprint couldn't have been solved sooner or more effectively with more tools