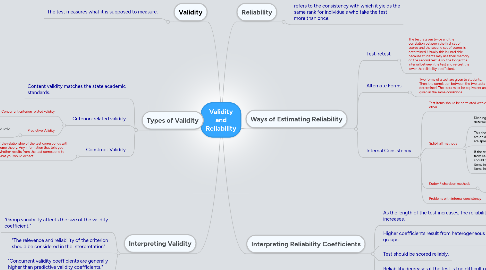

1. Validity

1.1. The test measures what it is supposed to measure.

2. Interpreting Validity

2.1. "Group variability affects the size of the validity coefficient."

2.2. "The relevance and reliability of the criterion should be considered in the interpretation."

2.3. "Concurrent validity coefficients are generally higher than predictive validity coefficients."

3. Types of Validity

3.1. Content validity: the test content matches the state academic standards.

3.2. Criterion-related validity

3.2.1. Concurrent criterion-related validity

3.2.1.1. A new test and an established test are given to the same students, and the correlation between the two sets of scores is computed.
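As a minimal sketch of the correlation step described above, the Pearson correlation between the two sets of scores can serve as the validity coefficient. The scores and the names `pearson_r`, `new_test`, and `established_test` are hypothetical and chosen only for illustration.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of cross-products of deviations from each mean.
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    # Sums of squared deviations for each set of scores.
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(ss_x * ss_y)


# Hypothetical scores for the same five students on each test.
new_test = [72, 85, 90, 65, 78]
established_test = [70, 88, 92, 60, 80]

validity_coefficient = pearson_r(new_test, established_test)
print(round(validity_coefficient, 2))
```

A coefficient near 1 would suggest that the new test ranks students much as the established test does. The sketch assumes both score lists vary (a constant list would cause a division by zero).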

3.2.2. Predictive Validity

3.2.2.1. How well the test predicts the future behavior of the test takers.

3.3. Construct Validity

3.3.1. A test has construct validity if its relationships with other information correspond to some theory. Any information that shows whether the test results match what you would expect counts as evidence.

4. Reliability

4.1. Reliability refers to the consistency with which a test yields the same rank for individuals who take it more than once.

5. Ways of Estimating Reliability

5.1. Test-retest

5.1.1. The test is given twice, and the correlation between the first set of scores and the second set of scores is determined. This estimate is often problematic because students may remember items from the first administration when they take the test again. Also, the longer the interval between the test and the retest, the lower the reliability coefficient.

5.2. Alternate Forms

5.2.1. Two forms of a test are given to the same students, and the correlation between the two sets of scores is determined. The forms must be equivalent and administered under the same conditions.

5.3. Internal Consistency

5.3.1. If a test measures a single concept, its items should be correlated with each other.

5.3.2. Split-half methods

5.3.2.1. The test is divided into two halves, and the correlation between the scores on the two halves is determined.

5.3.2.2. This method is appropriate when the items differ in difficulty and are spread throughout the test.

5.3.2.3. If the test consists of questions ranging from easiest to most difficult, then the test should be divided by placing all odd-numbered items into one half and all even-numbered items into the other half.
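The odd-even split described above can be sketched as follows. The response matrix is hypothetical (rows are students, columns are items ordered from easiest to hardest, 1 = correct, 0 = incorrect), and the helper names `odd_even_half_scores` and `pearson_r` are illustrative only.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(ss_x * ss_y)


def odd_even_half_scores(item_scores):
    """Return (odd_total, even_total) for one student's item scores.

    Items are numbered from 1, so list index 0 holds item 1 (an odd item).
    """
    odd_total = sum(item_scores[0::2])   # items 1, 3, 5, ...
    even_total = sum(item_scores[1::2])  # items 2, 4, 6, ...
    return odd_total, even_total


# Hypothetical responses: 5 students, 6 items ordered easiest to hardest.
responses = [
    [1, 1, 1, 1, 1, 0],
    [1, 1, 1, 0, 0, 0],
    [1, 1, 1, 1, 1, 1],
    [1, 0, 0, 0, 0, 0],
    [1, 1, 1, 1, 0, 0],
]

halves = [odd_even_half_scores(row) for row in responses]
odd_totals = [odd for odd, _ in halves]
even_totals = [even for _, even in halves]

# Correlation between the two half-test scores.
split_half_r = pearson_r(odd_totals, even_totals)
print(round(split_half_r, 2))
```

Splitting by odd and even item numbers keeps easy and hard items in both halves, which a first-half/second-half split would not do when items are ordered by difficulty.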

5.3.3. Kuder-Richardson methods

5.3.3.1. Used to determine the extent to which the entire test represents a single, consistent measure of a concept.
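As an illustration, one commonly used member of this family is the KR-20 formula for items scored right or wrong: KR-20 = (k / (k - 1)) * (1 - sum(p * q) / variance of total scores), where k is the number of items, p is the proportion of students answering an item correctly, and q = 1 - p. The notes above do not say which Kuder-Richardson formula is meant, so the choice of KR-20, the use of the population variance, the function name `kr20`, and the data below are all assumptions made for this sketch.

```python
def kr20(responses):
    """KR-20 estimate for a matrix of 0/1 item scores.

    responses: list of rows, one per student; each row holds one
    0/1 score per item. Assumes at least two items and that total
    scores are not all identical (otherwise the variance is zero).
    """
    n = len(responses)     # number of students
    k = len(responses[0])  # number of items

    # Proportion of students answering each item correctly.
    p = [sum(row[i] for row in responses) / n for i in range(k)]
    sum_pq = sum(p_i * (1 - p_i) for p_i in p)

    # Population variance of the students' total scores.
    totals = [sum(row) for row in responses]
    mean_total = sum(totals) / n
    var_total = sum((t - mean_total) ** 2 for t in totals) / n

    return (k / (k - 1)) * (1 - sum_pq / var_total)


# Hypothetical responses: 5 students, 6 items (1 = correct, 0 = incorrect).
responses = [
    [1, 1, 1, 1, 1, 0],
    [1, 1, 1, 0, 0, 0],
    [1, 1, 1, 1, 1, 1],
    [1, 0, 0, 0, 0, 0],
    [1, 1, 1, 1, 0, 0],
]

kr20_estimate = kr20(responses)
print(round(kr20_estimate, 2))
```

Unlike a split-half estimate, this calculation uses every item at once, so the result does not depend on how the test happens to be divided.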

5.3.4. Problems with internal consistency

5.3.4.1. Internal consistency methods sometimes yield inflated reliability estimates.