1. Probability

1.1. Chance Behavior

1.1.1. Unpredictable in the short run

1.1.2. Predictable pattern in the long run

1.2. Probability Model

1.2.1. Sample Space S: All possible outcomes

1.2.2. Event E: A set of one or more outcomes (a subset of S)

1.2.3. Assigns a probability P(E) to each event E in S

1.3. Important Probability Results

1.3.1. 0 ≤ P(E) ≤ 1

1.3.2. P(E) = 1 → E will happen

1.3.3. P(E) = 0 → E will never happen

1.3.4. S is the sample space → P(S) = 1

1.3.5. Eᶜ is the complement of E → P(Eᶜ) = 1 - P(E)

1.3.6. Union: A∪B → A occurs, or B occurs, or both occur

1.3.7. Intersection: A∩B → Both A and B occur

1.3.8. Mutually Exclusive

1.3.8.1. P(A∩B) = 0

1.3.8.2. P(A∪B) = P(A) + P(B)

1.3.9. Independent Events

1.3.9.1. One event's result does not affect chances of other events

1.3.9.2. P(A∩B) = P(A) × P(B)
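
The results in 1.3 can be checked by brute-force enumeration. The sketch below assumes a hypothetical experiment (two fair dice) and hypothetical events A, B and C chosen only for illustration; none of them come from the notes.

```python
from fractions import Fraction
from itertools import product

# Sample space S: the 36 equally likely outcomes of two fair dice (assumed example).
S = list(product(range(1, 7), repeat=2))

def prob(event):
    """P(E) = (outcomes in E) / (outcomes in S), with E given as a predicate."""
    return Fraction(sum(1 for o in S if event(o)), len(S))

A = lambda o: o[0] == 6   # first die shows 6
B = lambda o: o[1] == 6   # second die shows 6 (independent of A)
C = lambda o: o[0] == 1   # first die shows 1 (mutually exclusive with A)

p_a, p_b, p_c = prob(A), prob(B), prob(C)
p_not_a = prob(lambda o: not A(o))               # complement rule
p_a_and_b = prob(lambda o: A(o) and B(o))        # intersection
p_a_or_b = prob(lambda o: A(o) or B(o))          # union
p_a_and_c = prob(lambda o: A(o) and C(o))        # 0 for mutually exclusive events
p_a_or_c = prob(lambda o: A(o) or C(o))          # P(A) + P(C)
```

Exact fractions are used so that the identities hold without rounding error.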

1.4. Probability Tree

1.4.1. Useful tool for probability problems

1.4.2. Link each outcome to the next outcome

1.5. Conditional Probability

1.5.1. If B has occurred, what is the probability of A?

1.5.2. If mutually exclusive, P(A | B) = 0

1.5.3. If independent, P(A | B) = P(A)

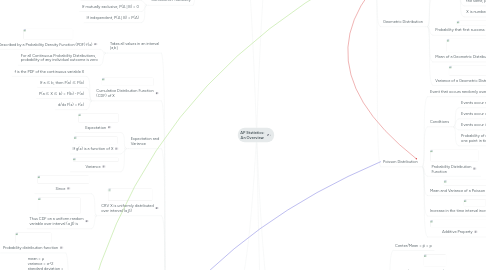
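
As a rough illustration of 1.5, the sketch below computes P(A | B) as P(A∩B) / P(B) on an assumed two-dice sample space. The events are hypothetical and were picked to show the mutually exclusive and independent special cases.

```python
from fractions import Fraction
from itertools import product

# Assumed example: two fair dice, 36 equally likely outcomes.
S = list(product(range(1, 7), repeat=2))

def prob(event):
    return Fraction(sum(1 for o in S if event(o)), len(S))

def conditional(a, b):
    """P(A | B) = P(A and B) / P(B); requires P(B) > 0."""
    return prob(lambda o: a(o) and b(o)) / prob(b)

first_is_6 = lambda o: o[0] == 6
first_is_1 = lambda o: o[0] == 1
second_is_6 = lambda o: o[1] == 6
sum_is_8 = lambda o: o[0] + o[1] == 8

p_exclusive = conditional(first_is_1, first_is_6)     # mutually exclusive events
p_independent = conditional(second_is_6, first_is_6)  # independent events
p_dependent = conditional(sum_is_8, first_is_6)       # B changes the chance of A
```

Here `p_dependent` differs from the unconditional P(sum is 8) = 5/36, which is what makes the two events dependent.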

2. Discrete Random Variable

2.1. Random Variable

2.1.1. Numerical outcome of a random phenomenon

2.1.2. Capital letter to denote the random variable (e.g. X)

2.1.3. Small letter for one of its values (e.g. x)

2.2. Discrete Random Variable

2.2.1. Countable number of possible values

2.2.2. Distribution lists values and probabilities

2.2.3. Probabilities must be between 0 and 1

2.2.4. Sum of all probabilities = 1

2.2.5. Expected Value or Mean μ of DRV: μ = E(X) = Σ x·P(X = x)

2.2.6. Variance of DRV: Var(X) = Σ (x - μ)²·P(X = x) = E(X²) - μ²

2.3. Function of a Random Variable

2.3.1. Function of RV is also a RV

2.3.2. Function of DRV is also a DRV

2.3.3. Expected Value of f(X): E(f(X)) = Σ f(x)·P(X = x)

2.3.4. General Results

2.3.4.1. E(a) = a

2.3.4.2. E(aX) = aE(X)

2.3.4.3. E(aX + b) = aE(X) + b

2.3.4.4. E(aX + bY) = aE(X) + bE(Y)
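
The expectation results above can be tried on a small table. This is a minimal sketch with a made-up distribution (`dist`) and made-up constants `a` and `b`; it uses the standard definitions E(X) = Σ x·P(X = x) and Var(X) = E(X²) - μ².

```python
from fractions import Fraction

# Hypothetical distribution of X: value -> probability (probabilities sum to 1).
dist = {0: Fraction(1, 4), 1: Fraction(1, 2), 2: Fraction(1, 4)}

def expect(dist, f=lambda x: x):
    """E(f(X)) = sum over all values x of f(x) * P(X = x)."""
    return sum(f(x) * p for x, p in dist.items())

mean = expect(dist)                                  # E(X)
variance = expect(dist, lambda x: x ** 2) - mean ** 2  # E(X^2) - mu^2

a, b = 3, 5
lhs = expect(dist, lambda x: a * x + b)   # E(aX + b) computed directly
rhs = a * mean + b                        # aE(X) + b from the general result
```

Because a function of a DRV is itself a DRV, `expect` only needs the original table and the function f.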

2.4. Distributions

2.4.1. Bernoulli Distribution

2.4.1.1. Single experiment with two outcomes of interest

2.4.1.2. X = 1 → Success

2.4.1.3. X = 0 → Failure

2.4.1.4. Probability Distribution Function: P(X = x) = p^x · q^(1-x) for x = 0, 1, where p = P(success), q = P(failure) = 1 - p, and p + q = 1

2.4.2. Binomial Distribution

2.4.2.1. Bernoulli Experiment repeated a specified number of times

2.4.2.2. Conditions

2.4.2.2.1. Two outcomes in each trial

2.4.2.2.2. Fixed number of trials n

2.4.2.2.3. Trials are independent

2.4.2.2.4. Probability of success for each trial is the same, p

2.4.2.3. Probability Distribution Function: P(X = r) = (n choose r) · p^r · q^(n-r), where r is the number of successes after n trials

2.4.2.4. Mean of a Binomial Distribution: np

2.4.2.5. Variance of a Binomial Distribution: npq = np(1 - p)

2.4.2.6. Approximating a Binomial Distribution with a Poisson Distribution

2.4.2.6.1. Conditions: n > 50, p < 0.1, np < 5

2.4.2.6.2. Can be approximated by a Poisson distribution with λ = np

2.4.2.6.3. As n → ∞ and p → 0 (with np held fixed), the approximation becomes more accurate
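
A short numerical check of the binomial results may help here. The sketch assumes the standard formulas P(X = r) = (n choose r)·p^r·(1-p)^(n-r) and P(X = r) = e^(-λ)·λ^r / r!; the values n = 100 and p = 0.02 are hypothetical and chosen to satisfy the approximation conditions.

```python
from math import comb, exp, factorial

def binomial_pmf(r, n, p):
    """P(X = r) for X ~ B(n, p)."""
    return comb(n, r) * p ** r * (1 - p) ** (n - r)

def poisson_pmf(r, lam):
    """P(X = r) for X ~ Po(lam)."""
    return exp(-lam) * lam ** r / factorial(r)

n, p = 100, 0.02   # hypothetical: n > 50, p < 0.1, np = 2 < 5

# Mean and variance computed from the distribution itself.
mean = sum(r * binomial_pmf(r, n, p) for r in range(n + 1))
variance = sum((r - mean) ** 2 * binomial_pmf(r, n, p) for r in range(n + 1))

# Exact binomial probability versus the Poisson approximation with lam = np.
exact = binomial_pmf(3, n, p)
approx = poisson_pmf(3, n * p)
```

With these values the two probabilities differ by only a few thousandths; the gap should shrink further as n grows and p shrinks with np fixed.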

2.4.3. Geometric Distribution

2.4.3.1. Bernoulli Experiment repeated until the first success

2.4.3.2. Conditions

2.4.3.2.1. Two outcomes in each trial

2.4.3.2.2. Trials are independent

2.4.3.2.3. Probability of success for each trial is the same, p

2.4.3.2.4. X is the number of trials needed to obtain the first success

2.4.3.3. Probability that first success occurs after n trials

2.4.3.4. Mean of a Geometric Distribution

2.4.3.5. Variance of a Geometric Distribution
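
The sketch below is one way to explore the geometric distribution numerically. It assumes the convention that X counts trials up to and including the first success, so P(X = n) = (1-p)^(n-1)·p and P(X > n) = (1-p)^n; if the notes intend X to count only the failures, the formulas shift by one. The value p = 0.2 is hypothetical.

```python
def geometric_pmf(n, p):
    """P(X = n): first success on trial n (assumed convention, n = 1, 2, ...)."""
    return (1 - p) ** (n - 1) * p

def geometric_tail(n, p):
    """P(X > n): the first n trials are all failures."""
    return (1 - p) ** n

p = 0.2                   # hypothetical success probability
terms = range(1, 2001)    # truncated sum; the remaining tail is negligible

mean = sum(n * geometric_pmf(n, p) for n in terms)
variance = sum((n - mean) ** 2 * geometric_pmf(n, p) for n in terms)
```

Under this convention the numerical mean and variance agree with 1/p and (1-p)/p².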

2.4.4. Poisson Distribution

2.4.4.1. Event that occurs randomly over time

2.4.4.2. Conditions

2.4.4.2.1. Events occur singly and randomly

2.4.4.2.2. Events occur uniformly (at a constant average rate)

2.4.4.2.3. Events occur independently

2.4.4.2.4. Probability of occurrence at any one point in time is negligible

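2.4.4.3. Probability Distribution Function: P(X = x) = e^(-λ) · λ^x / x!, for x = 0, 1, 2, ...

2.4.4.4. Mean and Variance of a Poisson Distribution: both equal λ

2.4.4.5. Lengthening the time interval increases the parameter λ in proportion

2.4.4.6. Additive Property: if X ~ Po(λ₁) and Y ~ Po(λ₂) are independent, then X + Y ~ Po(λ₁ + λ₂)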
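
The Poisson properties above can be sanity-checked numerically. This sketch assumes the standard formula P(X = k) = e^(-λ)·λ^k / k!; the rates used (3 per hour, 1.5 and 2.5) are made-up values.

```python
from math import exp, factorial

def poisson_pmf(k, lam):
    """P(X = k) for X ~ Po(lam)."""
    return exp(-lam) * lam ** k / factorial(k)

# The parameter scales with the length of the interval.
rate_per_hour = 3.0                 # hypothetical rate
lam_two_hours = rate_per_hour * 2   # parameter for a 2-hour interval

# Mean and variance computed from the distribution (sum truncated at 60 terms).
lam = rate_per_hour
mean = sum(k * poisson_pmf(k, lam) for k in range(60))
variance = sum((k - mean) ** 2 * poisson_pmf(k, lam) for k in range(60))

# Additive property: P(X + Y = k) by summing over the ways to split k,
# compared with a single Poisson with parameter lam_x + lam_y.
lam_x, lam_y, k = 1.5, 2.5, 4
by_convolution = sum(
    poisson_pmf(i, lam_x) * poisson_pmf(k - i, lam_y) for i in range(k + 1)
)
direct = poisson_pmf(k, lam_x + lam_y)
```

The convolution step relies on X and Y being independent, which matches the independence condition listed for the distribution.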

3. Population Proportion and Two Populations

3.1. Population Proportion

3.1.1. Center/Mean: the mean of the sampling distribution of p̂ equals p

3.1.2. Spread/Standard Deviation/Standard Error: √(p(1-p)/n)

3.1.3. Shape: Approximately Normal when np ≥ 10 and n(1-p) ≥ 10

3.1.4. Conditions

3.1.4.1. SRS: Data is an SRS from population of interest

3.1.4.2. Normality: np ≥ 10, n(1-p) ≥ 10

3.1.4.3. Independence: Individual observations are independent, i.e. population at least 10 times as large as sample

3.1.5. Confidence Interval

3.1.5.1. Point Estimate ± Margin of Error: p̂ ± z*·√(p̂(1-p̂)/n)

3.1.5.2. z* is the upper (1-C)/2 critical value

3.1.6. Choosing a sample size that will allow us to estimate the parameter within a given margin of error

3.1.7. One Proportion z Test
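
The one-proportion procedures in 3.1 can be sketched with Python's standard library. The function names, the default guess p = 0.5 for the sample-size calculation, the two-sided alternative, and the data (60 successes in 100 trials) are all assumptions made for illustration.

```python
from math import ceil, sqrt
from statistics import NormalDist

def z_star(confidence):
    """Upper (1 - C)/2 critical value of the standard normal distribution."""
    return NormalDist().inv_cdf(1 - (1 - confidence) / 2)

def proportion_ci(successes, n, confidence=0.95):
    """Point estimate +/- margin of error for a population proportion."""
    p_hat = successes / n
    margin = z_star(confidence) * sqrt(p_hat * (1 - p_hat) / n)
    return p_hat - margin, p_hat + margin

def sample_size(margin, confidence=0.95, p_guess=0.5):
    """Smallest n whose margin of error is at most `margin` (p_guess is assumed)."""
    return ceil((z_star(confidence) / margin) ** 2 * p_guess * (1 - p_guess))

def one_proportion_z(successes, n, p0):
    """z statistic and two-sided P-value for H0: p = p0."""
    p_hat = successes / n
    z = (p_hat - p0) / sqrt(p0 * (1 - p0) / n)
    return z, 2 * (1 - NormalDist().cdf(abs(z)))

low, high = proportion_ci(60, 100)          # hypothetical data
n_needed = sample_size(0.03)                # margin of error of 3 percentage points
z, p_value = one_proportion_z(60, 100, 0.5)
```

Note that the test uses p0 in the standard error while the interval uses p̂, since the interval has no hypothesised value to work from. The SRS, Normality and Independence conditions should be checked before relying on any of these numbers.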

3.2. Two Population Parameters

3.2.1. Two Sample z Statistic

4. Continuous Random Variable

4.1. Takes all values in an interval [a,b]

4.1.1. Described by a Probability Density Function (PDF) f(x)

4.1.2. For all Continuous Probability Distributions, probability of any individual outcome is zero

4.2. Cumulative Distribution Function (CDF) of X

4.2.1. F(x) = P(X ≤ x) = ∫ f(t) dt from -∞ to x, where f is the PDF of the continuous variable X

4.2.2. If a ≤ b, then F(a) ≤ F(b)

4.2.3. P(a ≤ X ≤ b) = F(b) - F(a)

4.2.4. d/dx F(x) = f(x)

4.3. Expectation and Variance

4.3.1. Expectation: E(X) = ∫ x·f(x) dx

4.3.2. If g(X) is a function of X: E(g(X)) = ∫ g(x)·f(x) dx

4.3.3. Variance: Var(X) = E(X²) - [E(X)]²

4.4. CRV X is uniformly distributed over interval (α,β)

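4.4.1. Since the density is constant on the interval, f(x) = 1/(β - α) for α < x < β and f(x) = 0 otherwise

4.4.2. Thus the CDF of a uniform random variable over interval (α,β) is F(x) = (x - α)/(β - α) for α < x < β, with F(x) = 0 for x ≤ α and F(x) = 1 for x ≥ β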
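
To make the PDF and CDF relationship concrete, here is a minimal sketch for a uniform variable, assuming the standard density 1/(β - α) on (α, β). The interval (2, 10) and the function names are hypothetical.

```python
def uniform_pdf(x, alpha, beta):
    """Constant density 1 / (beta - alpha) inside the interval, 0 outside."""
    return 1 / (beta - alpha) if alpha < x < beta else 0.0

def uniform_cdf(x, alpha, beta):
    """F(x) = P(X <= x), found by integrating the density up to x."""
    if x <= alpha:
        return 0.0
    if x >= beta:
        return 1.0
    return (x - alpha) / (beta - alpha)

alpha, beta = 2.0, 10.0   # hypothetical interval

# P(a <= X <= b) = F(b) - F(a)
p_3_to_5 = uniform_cdf(5, alpha, beta) - uniform_cdf(3, alpha, beta)

# d/dx F(x) = f(x), checked with a small central difference.
h = 1e-6
slope = (uniform_cdf(4 + h, alpha, beta) - uniform_cdf(4 - h, alpha, beta)) / (2 * h)
```

The probability of any single value, such as P(X = 4), is F(4) - F(4) = 0, which matches the general result for continuous distributions.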

4.5. Normal/Gaussian Distribution: A class of continuous probability distributions

4.5.1. Probability density function: f(x) = (1 / (σ√(2π))) · e^(-(x - μ)² / (2σ²))

4.5.2. mean = μ, variance = σ^2, standard deviation = σ

4.5.3. Features

4.5.3.1. Curve is bell-shaped, symmetrical about the mean μ

4.5.3.2. Curve is single-peaked at mean = mode = median = μ

4.5.3.3. Points of inflexion occur at μ – σ and μ + σ

4.5.3.4. Approximations: 68% of all observations fall within σ of the mean μ; 95% fall within 2σ of the mean μ; 99.7% fall within 3σ of the mean μ

4.5.4. Standard Normal Distribution

4.5.4.1. Take variable x with mean μ and standard deviation σ

4.5.4.2. Standardized Variable Z = (x - μ)/σ has mean = 0 and standard deviation = 1

4.5.4.3. Use INVNORM to find z for a given area, then "unstandardize" it with x = μ + zσ to find the x value
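
The standardizing and "unstandardizing" steps can be sketched with `statistics.NormalDist` from the Python standard library, whose `inv_cdf` method plays the role of a calculator's inverse-normal function. The values μ = 100 and σ = 15 are hypothetical.

```python
from statistics import NormalDist

mu, sigma = 100.0, 15.0          # hypothetical mean and standard deviation
X = NormalDist(mu, sigma)
Z = NormalDist()                 # standard normal: mean 0, standard deviation 1

# Standardize: z = (x - mu) / sigma, then look up the area below z.
x = 130.0
z = (x - mu) / sigma
p_below = Z.cdf(z)

# Unstandardize: find z for a given area, then convert back with x = mu + z * sigma.
z_90 = Z.inv_cdf(0.90)
x_90 = mu + z_90 * sigma

# The 68-95-99.7 approximations, computed from the CDF.
within = [X.cdf(mu + k * sigma) - X.cdf(mu - k * sigma) for k in (1, 2, 3)]
```

Working through Z and working directly with X give the same probabilities, which is the point of standardizing.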

4.5.5. Combining Normal Random Variables

4.5.5.1. Expected Value: E(aX + bY) = aE(X) + bE(Y)

4.5.5.2. Variance: Var(aX ± bY) = a²Var(X) + b²Var(Y), for independent X and Y

4.5.6. Approximating a Binomial Distribution with a Normal Distribution

4.5.6.1. Conditions

4.5.6.1.1. np ≥ 5

4.5.6.1.2. n(1 – p) ≥ 5

4.5.6.2. Mean = np

4.5.6.3. Standard deviation = √(np(1-p))

4.5.6.4. Continuity Correction

4.5.6.4.1. Required to convert from Discrete to Continuous Variable

4.5.6.4.2. Example: Discrete: x = 6; Continuous: 5.5 < x < 6.5
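
The effect of the continuity correction can be seen by comparing exact binomial probabilities with their normal approximations. The values n = 50 and p = 0.4 below are hypothetical (np = 20 and n(1 - p) = 30, so both conditions hold).

```python
from math import comb, sqrt
from statistics import NormalDist

n, p = 50, 0.4                               # hypothetical binomial parameters
mu, sigma = n * p, sqrt(n * p * (1 - p))     # mean np, standard deviation sqrt(np(1-p))
approx_dist = NormalDist(mu, sigma)

def binomial_pmf(r):
    return comb(n, r) * p ** r * (1 - p) ** (n - r)

# Discrete X = 20 becomes continuous 19.5 < X < 20.5.
exact_eq_20 = binomial_pmf(20)
approx_eq_20 = approx_dist.cdf(20.5) - approx_dist.cdf(19.5)

# Discrete X <= 15 becomes continuous X < 15.5.
exact_le_15 = sum(binomial_pmf(r) for r in range(16))
approx_le_15 = approx_dist.cdf(15.5)
```

Without the half-unit adjustment, P(X = 20) would be approximated by the area over a single point, which is zero.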

4.5.7. Approximating a Poisson Distribution with a Normal Distribution

4.5.7.1. Condition: λ > 10

4.5.7.2. Mean = λ

4.5.7.3. Variance = λ

4.5.7.4. Continuity Correction

5. Sampling Distribution

5.1. Population and Sampling

5.1.1. Population: Group we want information on

5.1.2. Sample: Part of Population

5.2. Sampling and Methods

5.2.1. Not a Census

5.2.2. Non-probability sampling

5.2.2.1. Convenience Sampling

5.2.2.2. Quota Sampling: Stratified + Convenience

5.2.3. Probability Sampling

5.2.3.1. Simple Random Sampling

5.2.3.1.1. Random Digit Tables

5.2.3.1.2. Generally a required condition for distributions

5.2.3.2. Stratified Sampling

5.2.3.3. Systematic Sampling

5.2.3.4. Cluster Sampling
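
The four probability sampling methods can be sketched with Python's `random` module. The population of 100 numbered units, the two strata, the clusters of 10 and the fixed seed are all hypothetical choices made so the example is small and repeatable.

```python
import random

rng = random.Random(42)              # fixed seed so the sketch is repeatable
population = list(range(1, 101))     # hypothetical population of 100 labelled units

# Simple random sample: every group of 10 units is equally likely to be chosen.
srs = rng.sample(population, 10)

# Systematic sample: random starting point, then every k-th unit.
k = len(population) // 10
start = rng.randrange(k)
systematic = population[start::k]

# Stratified sample: a separate SRS inside each stratum.
strata = {"low": population[:50], "high": population[50:]}
stratified = {name: rng.sample(units, 5) for name, units in strata.items()}

# Cluster sample: choose whole clusters at random and take every unit in them.
clusters = [population[i:i + 10] for i in range(0, len(population), 10)]
cluster_sample = [unit for cluster in rng.sample(clusters, 2) for unit in cluster]
```

Multi-stage designs combine these steps, for example choosing clusters first and then taking an SRS inside each chosen cluster.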

5.2.4. Multi-stage Sampling to avoid individual pitfalls

5.2.5. Cautions

5.2.5.1. Undercoverage

5.2.5.2. Non-response

5.2.5.3. (Voluntary) Response Bias

5.2.5.4. Wording of the question

5.2.6. Sample mean to estimate Population Mean

5.2.7. Sample variance to estimate Population variance: Use a Histogram

5.2.8. Sample Mean from a non-normal population

5.2.8.1. Central Limit Theorem

5.2.8.2. For large n, can assume the sampling distribution of the sample mean is approximately normal
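
A small simulation can illustrate the Central Limit Theorem. The sketch assumes a strongly skewed population (exponential with mean 1 and standard deviation 1), a sample size of 40 and 5000 repetitions; all of these are arbitrary choices, and the seed is fixed only for repeatability.

```python
import random
from math import sqrt
from statistics import mean, stdev

rng = random.Random(0)         # fixed seed so the sketch is repeatable
n, repetitions = 40, 5000      # hypothetical sample size and number of samples

def sample_mean():
    """Mean of one sample of size n from an exponential population (mean 1, sd 1)."""
    return sum(rng.expovariate(1.0) for _ in range(n)) / n

sample_means = [sample_mean() for _ in range(repetitions)]

centre = mean(sample_means)    # should be close to the population mean, 1
spread = stdev(sample_means)   # should be close to sigma / sqrt(n)
theory_spread = 1 / sqrt(n)

# Share of sample means within one standard deviation of the centre (about 68% if normal).
share_within_1sd = sum(abs(m - centre) <= spread for m in sample_means) / repetitions
```

The centre landing near the population mean reflects an unbiased estimator, and the spread shrinking like 1/√n reflects lower variability for larger samples.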

5.2.9. Bias and Variability

5.2.9.1. If the mean of a statistic's sampling distribution equals the true parameter, the statistic is an unbiased estimator

5.2.9.2. Variability is the spread of the sampling distribution; it is smaller when n is larger

5.2.9.3. Estimator and estimate