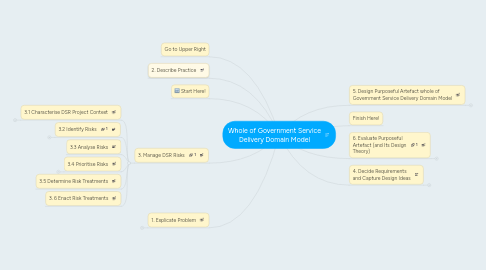

1. Go to Upper Right

2. 2. Describe Practice

3. Start Here!

4. 3. Manage DSR Risks

4.1. 3.1 Characterise DSR Project Context

4.1.1. 3.1.1 Identify and analyse research constraints

4.1.1.1. 3.1.1.1 What are the time constraints?

4.1.1.1.1. How long do you have?

4.1.1.1.2. Is that enough?

4.1.1.2. 3.1.1.2 What are the funding constraints?

4.1.1.2.1. What sources of funding do you have?

4.1.1.2.2. How much funding do you have?

4.1.1.2.3. What do you need to spend it on?

4.1.1.2.4. How much will that cost?

4.1.1.2.5. Is that enough?

4.1.1.3. 3.1.1.3 Do you have access to needed hardware and/or software?

4.1.1.3.1. What hardware do you need?

4.1.1.3.2. What software do you need?

4.1.1.3.3. Do you have access?

4.1.1.4. 3.1.1.4 Do you need and can you get access to organisations for evaluation?

4.1.1.4.1. Do you need access to one or more organisations for problem analysis and/or evaluation?

4.1.1.4.2. It would be an advantage to have the DTA support the model

4.1.1.4.3. What or how many organisations would you need to have access to?

4.1.1.4.4. Do you already have access?

4.1.1.4.5. Can you get access?

4.1.1.5. 3.1.1.5 Do you have the skills needed to conduct the research?

4.1.1.5.1. What skills are needed?

4.1.1.5.2. For each needed skill, do you have sufficient skills?

4.1.1.5.3. For each insufficient skill, can you learn and obtain sufficient skills?

4.1.1.6. 3.1.1.6 Are there any ethical constraints that limit what you can or should do in your research?

4.1.1.6.1. Animal research constraints? (List them)

4.1.1.6.2. Privacy constraints? (List them)

4.1.1.6.3. Human research subject constraints? (List them)

4.1.1.6.4. Organisational risk constraints? (List them)

4.1.1.6.5. Societal risk constraints? (List them)

4.1.2. 3.1.2 Identify development and feasibility uncertainties

4.1.2.1. Technical feasibility

4.1.2.1.1. Of what and in what way?

4.1.2.2. Human usability

4.1.2.3. Organisational feasibility

4.2. 3.2 Identify Risks

4.2.1. A. Business Needs: Risks arising from identifying, selecting, and developing understanding of the business needs (problems and requirements) to address in the research.

4.2.1.1. A-1. Selection of a problem that lacks significance for any stakeholder.

4.2.1.2. A-2. Difficulty getting other departments to evaluate the whole of government service delivery domain model.

4.2.1.2.1. Rephrase risk for your organization/domain/artefact

4.2.1.2.2. If other departments do not evaluate the model then I will not be able to determine its broad applicability

4.2.1.3. A-3. Different and even conflicting stakeholder interests (some of which may not be surfaced) may require some customization of the model.

4.2.1.3.1. Rephrase risk for your organization/domain/artefact

4.2.1.3.2. If other departments have different circular representations then there may be more than one circular representation attaching to the horizontal plane

4.2.1.4. A-4. Poor understanding of the problem to be solved.

4.2.1.5. A-5. Solving the wrong problem, i.e., a problem that isn’t a main contributor to undesirable outcomes that motivate the problem solving.

4.2.1.6. A-6. Poor/vague definition/statement of problem to be solved, with potential misunderstanding by others.

4.2.1.7. A-7. Inappropriate choice or definition of a problem according to a solution at hand.

4.2.1.8. A-8. Inappropriate formulation of the problem.

4.2.2. B. Grounding: Risks arising from searching for, identifying, and comprehending applicable knowledge in the literature.

4.2.2.1. B-1. Ignorance or lack of knowledge of existing research relevant to the problem understanding and over-reliance on personal experience with or imagination of the problem.

4.2.2.2. B-2. Ignorance or lack of knowledge of existing design science research into solution technologies for solving the problem, i.e., lack of knowledge of the state of the art.

4.2.2.3. B-3. Ignorance or lack of knowledge of existing relevant natural and behavioural science research forming kernel theories for understanding or solving the problem.

4.2.3. C. Build: Risks arising from designing artefacts, including instantiations, and developing design theories.

4.2.3.1. C-1. Development of a conjectural (un-instantiated) solution which cannot be instantiated (built or made real).

4.2.3.2. C-2. Development of a hypothetical (untried) solution which is ineffective in solving the problem, i.e., the artefact doesn’t work or doesn’t work well in real situations with various socio-technical complications.

4.2.3.3. C-3. Development of a hypothetical (untried) solution which is inefficient in solving the problem, i.e., requiring overly high resource costs.

4.2.3.4. C-4. Development of a hypothetical (untried) solution which is inefficacious in solving the problem, i.e., the artefact isn’t really the cause of an improvement observed during evaluation.

4.2.3.5. C-5. Development of a hypothetical (untried) solution which cannot be taught to or understood by those who are intended to use it, e.g., overly complex or inelegant.

4.2.3.6. C-6. Development of a hypothetical (untried) solution which is difficult or impossible to get adopted by those who are intended to use it, whether for personal or political reasons.

4.2.3.6.1. Rephrase risk for your organization/domain/artefact

4.2.3.6.2. The solution was impossible to get adopted because it used open source software and there was not enough buy-in to purchase an untried solution and load it on the departmental ICT infrastructure.

4.2.3.7. C-7. Development of a hypothetical (untried) solution which causes new problems that make the outcomes of the solution more trouble than the original problem, i.e., there are significant side effects.

4.2.4. D. Evaluation: Risks arising from evaluating purposeful artefacts and justifying design theories or knowledge.

4.2.4.1. D-1a. Tacit requirements (which by definition cannot be surfaced) are not dealt with when evaluating the solution technology, leading to failure of the solution technology to meet those requirements.

4.2.4.2. D-1b. Failure to surface some or all of the relevant requirements leads to those requirements not being dealt with when evaluating the solution technology, leading to failure of the solution technology to meet those requirements.

4.2.4.3. D-2. Incorrectly matching the articulated requirements to the meta-requirements of the ISDT leads to the testing of the ISDT and evaluation of an instantiation of the meta-design in a situation for which neither should be applied.

4.2.4.4. D-3. Incorrectly matching the meta-design or the design method to the meta-requirements (not following the ISDT correctly) leads to evaluation of something other than the correct solution technology or the ISDT as stated.

4.2.4.5. D-4. Improper application of the meta-design or the design method (not in accordance with the ISDT) in designing an instantiation leads to evaluation of something other than the correct solution technology or the ISDT as stated.

4.2.4.6. D-5. Improperly building an instantiation of the solution technology (such that it does not properly embody the meta-design) leads to evaluation of something other than the correct solution technology or the ISDT as stated.

4.2.4.7. D-6. Difficulties in implementing the solution technology during naturalistic evaluation, due to such things as unforeseen complications within the business/organization, prevent the instantiation of the solution technology from successfully meeting its objectives.

4.2.4.8. D-7. Success of the solution technology to meet its objectives is not obtained due to dynamic or changing requirements beyond the scope of the solution technology.

4.2.4.9. D-8. Success of the solution technology to meet its objectives is not achieved due to poor change management practices.

4.2.4.10. D-9. Determination of success or failure in reaching the objectives of the solution technology is error-prone or impossible due to disagreement about objectives or inability to measure.

4.2.4.11. D-10. Existing organizational culture, local organizational culture differences (sub-cultures), political conflicts, etc. complicate the evaluation process or weaken the ability to make meaningful measurement of the achievement of the objectives of the solution technology.

4.2.4.12. D-11. Existing organizational priorities, structures, practices, procedures, etc. complicate the evaluation process or ability to make/measure the achievement of the objectives.

4.2.4.13. D-12. Emergence of new organizational or individual practices, structures, priorities, norms, culture, or other aspects that complicate the acceptability, workability, or efficiency of the application of the solution technology in a naturalistic setting.

4.2.5. E. Artefact dissemination and use: Risks arising from disseminating new purposeful artefacts and design theories or knowledge to people and organisations for use in practice to address business need(s).

4.2.5.1. E-1. Implementation in practice of a solution does not work effectively, efficiently, and/or efficaciously.

4.2.5.2. E-2. Misunderstanding the appropriate context for and limitations of the solution.

4.2.5.3. E-3. Misunderstanding how to apply the solution.

4.2.5.4. E-4. Inappropriate handling of adoption, diffusion, and organizational implementation.

4.2.6. F. Knowledge additions: Risks arising from publishing new design artefacts and design theories or knowledge and adding them to the body of knowledge.

4.2.6.1. F-1. Inability to publish or present research results

4.2.6.2. F-2. Publication of low significance research

4.2.6.3. F-3. Publication of incorrect research

4.2.6.4. F-4. Design artefacts prove too unique to disseminate

4.3. 3.3 Analyse Risks

4.4. 3.4 Prioritise Risks

4.4.1. High

4.4.2. Medium

4.4.3. Low
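
The High/Medium/Low buckets above could be filled from a simple risk register. The following is a minimal sketch only: the outline does not say how priorities are derived, so the 1 to 3 likelihood and impact scales, the multiplication rule, the thresholds, and the example scores are all assumptions made for illustration. Only the risk codes (A-2, A-3, C-6) come from the checklist in 3.2.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    code: str        # risk code from the 3.2 checklist, e.g. "A-2"
    likelihood: int  # 1 (unlikely) .. 3 (likely); hypothetical scale
    impact: int      # 1 (minor) .. 3 (severe); hypothetical scale


def priority(risk: Risk) -> str:
    """Map likelihood x impact (1..9) onto High/Medium/Low.

    The thresholds below are assumptions, not part of the source outline.
    """
    score = risk.likelihood * risk.impact
    if score >= 6:
        return "High"
    if score >= 3:
        return "Medium"
    return "Low"


# Illustrative scores only; they are not an assessment of the actual project.
risks = [Risk("A-2", 3, 3), Risk("A-3", 2, 2), Risk("C-6", 1, 2)]

buckets = {"High": [], "Medium": [], "Low": []}
for r in risks:
    buckets[priority(r)].append(r.code)

print(buckets)
```

Keeping the scoring rule in one small function makes it easy to swap in whatever scale the research team actually agrees on.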

4.5. 3.5 Determine Risk Treatments

4.6. 3.6 Enact Risk Treatments

5. 1. Explicate Problem

5.1. 1.1 Set Problem Statement

5.1.1. Model the Whole of Government Service Delivery Landscape to show the relationship between entities and things

5.2. 1.2 Analyse Stakeholders

5.2.1. 1.2.1 Identify Stakeholders

5.2.1.1. Add Client(s)

5.2.1.1.1. Political parties

5.2.1.1.2. Member of the Australian Government Ministry

5.2.1.1.3. Department Ministers

5.2.1.1.4. Government Departments

5.2.1.1.5. Programme Administrators

5.2.1.1.6. Solution Developers

5.2.1.1.7. Service Consumers

5.2.1.1.8. Policy makers

5.2.1.2. Add Decision Maker(s)

5.2.1.2.1. The Department of Human Services

5.2.1.2.2. Other Government Departments

5.2.1.2.3. The Digital Transformation Agency (DTA)

5.2.1.3. Add Professional(s)

5.2.1.3.1. Rosetta Romano

5.2.1.4. Add Witness(es)

5.2.1.4.1. Data61

5.2.1.4.2. AGLDWG

5.2.1.4.3. DTA

5.2.1.4.4. Department of Finance

5.2.2. 1.2.2 The Department of Human Services

5.2.2.1. Assess Stakeholder Power

5.2.2.1.1. Assess Level of Coercive Power

5.2.2.1.2. Assess Level of Normative Power

5.2.2.1.3. Assess Level of Utilitarian Power

5.2.2.2. Assess Stakeholder Legitimacy

5.2.2.2.1. Assess Level of Legal Legitimacy

5.2.2.2.2. Assess Level of Contractual Legitimacy

5.2.2.2.3. Assess Level of Customary Legitimacy

5.2.2.2.4. Assess Level of Moral Legitimacy

5.2.2.3. Assess Stakeholder Urgency

5.2.2.3.1. Assess the Time Sensitivity

5.2.2.3.2. Assess the Criticality

5.2.3. 1.2.3 Other Government Departments

5.2.3.1. Assess Stakeholder Power

5.2.3.1.1. Assess Level of Coercive Power

5.2.3.1.2. Assess Level of Normative Power

5.2.3.1.3. Assess Level of Utilitarian Power

5.2.3.2. Assess Stakeholder Legitimacy

5.2.3.2.1. Assess Level of Legal Legitimacy

5.2.3.2.2. Assess Level of Contractual Legitimacy

5.2.3.2.3. Assess Level of Customary Legitimacy

5.2.3.2.4. Assess Level of Moral Legitimacy

5.2.3.3. Assess Stakeholder Urgency

5.2.3.3.1. Assess the Time Sensitivity

5.2.3.3.2. Assess the Criticality

5.2.4. 1.2.4 Member of the Australian Government Ministry

5.2.4.1. Assess Stakeholder Power

5.2.4.1.1. Assess Level of Coercive Power

5.2.4.1.2. Assess Level of Normative Power

5.2.4.1.3. Assess Level of Utilitarian Power

5.2.4.2. Assess Stakeholder Legitimacy

5.2.4.2.1. Assess Level of Legal Legitimacy

5.2.4.2.2. Assess Level of Contractual Legitimacy

5.2.4.2.3. Assess Level of Customary Legitimacy

5.2.4.2.4. Assess Level of Moral Legitimacy

5.2.4.3. Assess Stakeholder Urgency

5.2.4.3.1. Assess the Time Sensitivity

5.2.4.3.2. Assess the Criticality

5.2.5. 1.2.5 Department Ministers

5.2.5.1. Assess Stakeholder Power

5.2.5.1.1. Assess Level of Coercive Power

5.2.5.1.2. Assess Level of Normative Power

5.2.5.1.3. Assess Level of Utilitarian Power

5.2.5.2. Assess Stakeholder Legitimacy

5.2.5.2.1. Assess Level of Legal Legitimacy

5.2.5.2.2. Assess Level of Contractual Legitimacy

5.2.5.2.3. Assess Level of Customary Legitimacy

5.2.5.2.4. Assess Level of Moral Legitimacy

5.2.5.3. Assess Stakeholder Urgency

5.2.5.3.1. Assess the Time Sensitivity

5.2.5.3.2. Assess the Criticality

5.2.6. 1.2.6 Government Departments

5.2.6.1. Assess Stakeholder Power

5.2.6.1.1. Assess Level of Coercive Power

5.2.6.1.2. Assess Level of Normative Power

5.2.6.1.3. Assess Level of Utilitarian Power

5.2.6.2. Assess Stakeholder Legitimacy

5.2.6.2.1. Assess Level of Legal Legitimacy

5.2.6.2.2. Assess Level of Contractual Legitimacy

5.2.6.2.3. Assess Level of Customary Legitimacy

5.2.6.2.4. Assess Level of Moral Legitimacy

5.2.6.3. Assess Stakeholder Urgency

5.2.6.3.1. Assess the Time Sensitivity

5.2.6.3.2. Assess the Criticality

5.2.7. 1.2.7 Programme Administrators

5.2.7.1. Assess Stakeholder Power

5.2.7.1.1. Assess Level of Coercive Power

5.2.7.1.2. Assess Level of Normative Power

5.2.7.1.3. Assess Level of Utilitarian Power

5.2.7.2. Assess Stakeholder Legitimacy

5.2.7.2.1. Assess Level of Legal Legitimacy

5.2.7.2.2. Assess Level of Contractual Legitimacy

5.2.7.2.3. Assess Level of Customary Legitimacy

5.2.7.2.4. Assess Level of Moral Legitimacy

5.2.7.3. Assess Stakeholder Urgency

5.2.7.3.1. Assess the Time Sensitivity

5.2.7.3.2. Assess the Criticality

5.2.8. 1.2.8 Solution Developers

5.2.8.1. Assess Stakeholder Power

5.2.8.1.1. Assess Level of Coercive Power

5.2.8.1.2. Assess Level of Normative Power

5.2.8.1.3. Assess Level of Utilitarian Power

5.2.8.2. Assess Stakeholder Legitimacy

5.2.8.2.1. Assess Level of Legal Legitimacy

5.2.8.2.2. Assess Level of Contractual Legitimacy

5.2.8.2.3. Assess Level of Customary Legitimacy

5.2.8.2.4. Assess Level of Moral Legitimacy

5.2.8.3. Assess Stakeholder Urgency

5.2.8.3.1. Assess the Time Sensitivity

5.2.8.3.2. Assess the Criticality

5.2.9. 1.2.9 Service Consumers

5.2.9.1. Assess Stakeholder Power

5.2.9.1.1. Assess Level of Coercive Power

5.2.9.1.2. Assess Level of Normative Power

5.2.9.1.3. Assess Level of Utilitarian Power

5.2.9.2. Assess Stakeholder Legitimacy

5.2.9.2.1. Assess Level of Legal Legitimacy

5.2.9.2.2. Assess Level of Contractual Legitimacy

5.2.9.2.3. Assess Level of Customary Legitimacy

5.2.9.2.4. Assess Level of Moral Legitimacy

5.2.9.3. Assess Stakeholder Urgency

5.2.9.3.1. Assess the Time Sensitivity

5.2.9.3.2. Assess the Criticality

5.2.10. 1.2.10 Simon Thompson

5.2.10.1. Assess Stakeholder Power

5.2.10.1.1. Assess Level of Coercive Power

5.2.10.1.2. Assess Level of Normative Power

5.2.10.1.3. Assess Level of Utilitarian Power

5.2.10.2. Assess Stakeholder Legitimacy

5.2.10.2.1. Assess Level of Legal Legitimacy

5.2.10.2.2. Assess Level of Contractual Legitimacy

5.2.10.2.3. Assess Level of Customary Legitimacy

5.2.10.2.4. Assess Level of Moral Legitimacy

5.2.10.3. Assess Stakeholder Urgency

5.2.10.3.1. Assess the Time Sensitivity

5.2.10.3.2. Assess the Criticality

5.2.11. 1.2.11 Michael Sutherland

5.2.11.1. Assess Stakeholder Power

5.2.11.1.1. Assess Level of Coercive Power

5.2.11.1.2. Assess Level of Normative Power

5.2.11.1.3. Assess Level of Utilitarian Power

5.2.11.2. Assess Stakeholder Legitimacy

5.2.11.2.1. Assess Level of Legal Legitimacy

5.2.11.2.2. Assess Level of Contractual Legitimacy

5.2.11.2.3. Assess Level of Customary Legitimacy

5.2.11.2.4. Assess Level of Moral Legitimacy

5.2.11.3. Assess Stakeholder Urgency

5.2.11.3.1. Assess the Time Sensitivity

5.2.11.3.2. Assess the Criticality

5.2.12. 1.2.12 Data61

5.2.12.1. Assess Stakeholder Power

5.2.12.1.1. Assess Level of Coercive Power

5.2.12.1.2. Assess Level of Normative Power

5.2.12.1.3. Assess Level of Utilitarian Power

5.2.12.2. Assess Stakeholder Legitimacy

5.2.12.2.1. Assess Level of Legal Legitimacy

5.2.12.2.2. Assess Level of Contractual Legitimacy

5.2.12.2.3. Assess Level of Customary Legitimacy

5.2.12.2.4. Assess Level of Moral Legitimacy

5.2.12.3. Assess Stakeholder Urgency

5.2.12.3.1. Assess the Time Sensitivity

5.2.12.3.2. Assess the Criticality

5.2.13. 1.2.13 Policy makers

5.2.13.1. Assess Stakeholder Power

5.2.13.1.1. Assess Level of Coercive Power

5.2.13.1.2. Assess Level of Normative Power

5.2.13.1.3. Assess Level of Utilitarian Power

5.2.13.2. Assess Stakeholder Legitimacy

5.2.13.2.1. Assess Level of Legal Legitimacy

5.2.13.2.2. Assess Level of Contractual Legitimacy

5.2.13.2.3. Assess Level of Customary Legitimacy

5.2.13.2.4. Assess Level of Moral Legitimacy

5.2.13.3. Assess Stakeholder Urgency

5.2.13.3.1. Assess the Time Sensitivity

5.2.13.3.2. Assess the Criticality

5.2.14. 1.2.14 Rosetta Romano

5.2.14.1. Assess Stakeholder Power

5.2.14.1.1. Assess Level of Coercive Power

5.2.14.1.2. Assess Level of Normative Power

5.2.14.1.3. Assess Level of Utilitarian Power

5.2.14.2. Assess Stakeholder Legitimacy

5.2.14.2.1. Assess Level of Legal Legitimacy

5.2.14.2.2. Assess Level of Contractual Legitimacy

5.2.14.2.3. Assess Level of Customary Legitimacy

5.2.14.2.4. Assess Level of Moral Legitimacy

5.2.14.3. Assess Stakeholder Urgency

5.2.14.3.1. Assess the Time Sensitivity

5.2.14.3.2. Assess the Criticality

5.2.15. 1.2.15 The Digital Transformation Agency (DTA)

5.2.15.1. Assess Stakeholder Power

5.2.15.1.1. Assess Level of Coercive Power

5.2.15.1.2. Assess Level of Normative Power

5.2.15.1.3. Assess Level of Utilitarian Power

5.2.15.2. Assess Stakeholder Legitimacy

5.2.15.2.1. Assess Level of Legal Legitimacy

5.2.15.2.2. Assess Level of Contractual Legitimacy

5.2.15.2.3. Assess Level of Customary Legitimacy

5.2.15.2.4. Assess Level of Moral Legitimacy

5.2.15.3. Assess Stakeholder Urgency

5.2.15.3.1. Assess the Time Sensitivity

5.2.15.3.2. Assess the Criticality
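
The per-stakeholder assessments above (power, legitimacy, urgency, each with its sub-criteria) could be recorded and compared as follows. This is a sketch under stated assumptions: the sub-criteria names are taken from the outline, but the 0 to 3 scoring scale, the threshold, and the rule of counting how many attributes a stakeholder holds are hypothetical choices for illustration, and the example scores do not describe any real stakeholder.

```python
from statistics import mean

# Sub-criteria copied from the outline; the 0-3 scale is an assumption.
CRITERIA = {
    "power": ["coercive", "normative", "utilitarian"],
    "legitimacy": ["legal", "contractual", "customary", "moral"],
    "urgency": ["time_sensitivity", "criticality"],
}
THRESHOLD = 2.0  # hypothetical cut-off for "attribute is present"


def salience(scores: dict) -> int:
    """Count how many of the three attributes average at or above THRESHOLD."""
    return sum(
        mean(scores[attr][c] for c in subs) >= THRESHOLD
        for attr, subs in CRITERIA.items()
    )


# Illustrative scores only, not an assessment of any real stakeholder.
example = {
    "power": {"coercive": 1, "normative": 3, "utilitarian": 2},
    "legitimacy": {"legal": 3, "contractual": 2, "customary": 2, "moral": 3},
    "urgency": {"time_sensitivity": 1, "criticality": 2},
}

print(salience(example))
```

A result of 0 to 3 gives a coarse ranking; stakeholders holding more attributes would be analysed first.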

5.3. 1.3 Assess Problem as Difficulties

5.3.1. 1.3.1 Ascertain Consequences

5.3.1.1. Difficult to understand the impact of change across the whole of Government

5.3.1.1.1. What follows from ...?

5.3.2. 1.3.2 Ascertain Causes

5.3.2.1. A single department was responsible for service delivery

5.3.2.1.1. ...leads to...

5.4. 1.4 Assess Problem as Solutions

5.4.1. 1.4.1 Alleviate Consequences

5.4.1.1. No longer difficult to understand the impact of change across the whole of Government

5.4.2. 1.4.2 Lessen Causes

5.4.2.1. No longer a single department being solely responsible for service delivery

5.4.2.1.1. ...leads to...

5.5. There is no single whole of Government model that describes the relationship between entities and things in the domain of Government service delivery

6. 5. Design Purposeful Artefact whole of Government Service Delivery Domain Model

6.1. 5.1 Description

6.1.1. A model depicting the entities and things involved in Australian Government service delivery, a responsibility assigned to the Minister of the Department of Human Services in the Administrative Arrangements Order

6.2. 5.2 Artefact inputs

6.2.1. The artefact provides a conceptual understanding of a domain, and as such requires no specific inputs.

6.3. 5.3 Artefact outputs

6.3.1. The artefact provides a model of the Australian Whole of Government Service Delivery domain

6.4. 5.4 Intended user(s)

6.4.1. 5.4.1 Political Party Policy Makers

6.4.1.1. To track the suggestion and the implementation of government policies over time

6.4.2. 5.4.2 Member of the Australian Government Ministry

6.4.2.1. Department Ministers

6.4.2.1.1. To identify the minister of a department over time

6.4.2.2. To identify the make up of different government ministries over time

6.4.3. 5.4.3 Government Departments

6.4.3.1. To monitor the name changes of a department over time

6.4.3.2. The Department of Human Services

6.4.3.2.1. To understand how data is being used to deliver services to Australian welfare recipients

6.4.3.2.2. Solution Developers

6.4.3.2.3. Department of Human Services Legal Area

6.4.3.2.4. Programme Administrators

6.4.4. 5.4.4 Service Consumers

6.4.4.1. To identify the service consumer groups that may be impacted by a program or service over time

6.4.5. 5.4.5 Other Australian Government Organisations

6.4.5.1. To assess whether the model satisfies their contribution to government service delivery

6.4.5.2. Data61

6.4.5.2.1. To contribute an ontology for service delivery that connects different parts of government in a logical and a systematic way

6.4.5.3. The Digital Transformation Agency

6.4.5.3.1. To understand whether this model is representative of a whole of government service delivery model, or has limited use for the Department of Human Services

6.4.6. 5.4.6 Researcher

6.4.6.1. Contribution to practice

6.4.6.2. Contribution to literature

6.5. Drag&Drop the "hows" from the requirements and design ideas above to the components you add below that will have responsibility or carry out that "how".

6.5.1. By proposing things and entities at the highest level and allowing for further compartments later

6.5.2. Testing the narrative with different audiences

6.5.3. By using Resource Description Framework Triples

6.5.4. Skype and in person

6.5.5. Delivering the model

6.5.6. Develop a supporting document that describes the narrative

6.5.7. Ensuring that the narrative is simple

6.5.8. Develop a standard PowerPoint presentation using the narrative

6.5.9. By proposing things and entities at the highest level

6.5.10. Artefact is to be baselined and version controlled

6.5.11. The artefact is only being used in the Department of Human Services, but modularising parts of it for other Departments is being considered at the moment

6.5.12. At present the only owner is Rosetta Romano but once it is published it will be baselined

6.5.13. Ensuring that the narrative is simple

6.5.14. If it is found to require customisation for different users then this model could be renamed to the DHS Service Delivery Model

6.5.15. Ensuring that the narrative is simple

6.5.16. Will be widely available after it has been published

6.5.17. Will be widely available after it has been published

6.5.18. Graphic designer will be used

6.5.19. e.g. Political party policy is sourced from Liberal, or other website

6.5.20. Entities are allocated a broad name

6.5.21. Connected relationships are modelled

6.5.22. e.g. Political party policy is sourced from Liberal, or other website
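
The design idea "By using Resource Description Framework Triples" can be pictured with a small sketch. Each relationship in the model is a (subject, predicate, object) statement. The entity and property names below are hypothetical placeholders loosely based on the entities mentioned in this outline, not the artefact's actual vocabulary, and plain Python tuples stand in for a real RDF library.

```python
# Triples as (subject, predicate, object) tuples.
# All identifiers are hypothetical; they are not the model's real terms.
triples = {
    ("PoliticalPartyPolicy", "isSourcedFrom", "PartyWebsite"),
    ("Minister", "isResponsibleFor", "Department"),
    ("Department", "delivers", "Service"),
    ("ServiceConsumer", "receives", "Service"),
}


def match(s=None, p=None, o=None):
    """Return the triples matching a pattern; None acts as a wildcard."""
    return sorted(
        t for t in triples
        if (s is None or t[0] == s)
        and (p is None or t[1] == p)
        and (o is None or t[2] == o)
    )


# Which entities are connected to "Service"?
print(match(o="Service"))
```

Because every statement has the same three-part shape, "things", "entities", and the relationships that connect them can all be queried with the same pattern-matching function.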

6.6. Enumerate top level components

7. Finish Here!

8. 6. Evaluate Purposeful Artefact (and Its Design Theory)

8.1. 6.1 Identify Evaluation Purpose & Goal Priorities

8.1.1. Determine and characterise the artefact(s) to be evaluated

8.1.2. Determine evaluation purpose(s)

8.1.2.1. Develop evidence supporting my artefact's utility and design theory

8.1.2.2. Develop evidence my artefact has better utility for its purpose than other artefacts do

8.1.2.3. Identify side effects of the use of my artefact

8.1.2.4. Identify weaknesses and ways of improving my artefact

8.1.3. Determine evaluation goal(s)

8.1.3.1. Develop rigorous evidence of the efficacy of my artefact for achieving its purpose(s)

8.1.3.2. Develop rigorous evidence of the effectiveness of my artefact for achieving its purpose(s)

8.1.3.3. Evaluate my artefact efficiently and within resource constraints

8.1.3.4. Conduct my evaluations ethically

8.1.4. Prioritise evaluation purposes, goals, constraints, and uncertainties, so you can address higher priorities as early as possible

8.1.4.1. Evaluation Goals

8.1.4.2. Evaluation Purposes

8.1.4.3. Research Constraints

8.1.4.4. Feasibility Uncertainties

8.1.4.4.1. Ensure 'Technical feasibility'

8.2. 6.2 Choose Evaluation Strategy/Trajectory

8.2.1. DSR Evaluation Strategy Selection Framework

8.2.2. One or more strategies are suggested (ticked) below. Revise as appropriate.

8.2.3. Human Risk and Effectiveness Strategy

8.2.4. Technological Risk and Efficacy Strategy

8.2.5. Purely Technical Strategy

8.2.6. Quick and Simple Strategy

8.3. 6.3 Define theoretical constructs and measures of requirements to be evaluated

8.3.1. A clear model that includes all things and entities involved in Australian Government service delivery

8.3.1.1. What theoretical construct represents this?

8.3.2. Broadly applicable

8.3.2.1. The finding that the candidate ontology is suitable for re-use (Cross & Pal, 2008).

8.3.2.2. The extent to which architectural principles or criteria involve the organization of the system and its suitability for further development (Gomez-Perez A., 1994) or for knowledge sharing among different user groups (Kwan, 2003) (Burton-Jones, Storey, Sugumaran, & Ahluwalia, 2004).

8.3.2.3. What theoretical construct represents this?

8.3.3. Coherence

8.3.3.1. The extent to which the function of the sub-system nodes contributes to the understanding of the sub-system, i.e. strong cohesion (Wand & Weber, 1997), or the degree to which module components belong together (Kang & Bieman, 1996).

8.3.3.2. What theoretical construct represents this?

8.3.3.3. Logical or natural connection or consistency (Wilkes & Krebs, 1982)

8.3.4. Consistency

8.3.4.1. What theoretical construct represents this?

8.3.5. Modularity

8.3.5.1. The extent to which the modules represent the knowledge being represented (Pinto & Martins, 2001)

8.3.5.2. What theoretical construct represents this?

8.3.6. Conciseness

8.3.6.1. The extent to which the domain is compactly represented (Pipino, Lee, & Wang, 2002).

8.3.6.2. What theoretical construct represents this?

8.3.7. Usability

8.3.7.1. The extent to which the reliability and speed could be increased by using an existing ontology when developing a knowledge-based information system (Abu-Hanna & Jansweijer, 1994).

8.3.7.2. (Uschold M., 1996), (Burton-Jones, Storey, Sugumaran, & Ahluwalia, 2004)

8.3.7.3. It has different generality levels for different levels of reusability (Studer, Benjamins, & Fensel, 1998).

8.3.7.4. What theoretical construct represents this?

8.3.8. Comprehensibility

8.3.8.1. (Burton-Jones, Storey, Sugumaran, & Ahluwalia, 2004), (Jarke, Jeusfeld, Quix, & Vassiliadis, 1999), (Redman, 1996), (Cappiello, 2005), (Soares, da Silva, & Simoes, 2010)

8.3.8.2. The extent to which domain experts and others agree about the comprehensiveness of the domain (Soares, da Silva, & Simoes, 2010).

8.3.8.3. What theoretical construct represents this?

8.3.9. Maintainability

8.3.9.1. The extent to which precision, accuracy and recall are maintained for multiple users (Gangemi, Catenacci, Ciaramita, & Lehmann, 2006).

8.3.9.2. What theoretical construct represents this?

8.3.10. Flexibility

8.3.10.1. The extent to which the system viewer is flexible and can be easily adapted to suit multiple views (Gangemi, Catenacci, Ciaramita, & Lehmann, 2006).

8.3.10.2. What theoretical construct represents this?

8.3.11. Accountability

8.3.11.1. What theoretical construct represents this?

8.3.12. Customisability

8.3.12.1. Understanding the chosen design development method (individual specific, general, partial or multiple perspective) (Holsapple C. W., 2001).

8.3.12.2. The extent to which the conceptualization of the ontology is specified at the knowledge level and is independent of symbol-level encoding, i.e. adopts minimal encoding bias to allow others to easily adopt or re-use it (Gruber T., 1993).

8.3.12.3. What theoretical construct represents this?

8.3.13. Learnability

8.3.13.1. The extent to which the data / information including terms / signs in the ontology can be read (Burton-Jones, Storey, Sugumaran, & Ahluwalia, 2004) or comments / labels / captions appearing in the ontology are human-readable (Tatir, Arpinar, Moore, Aleman-Meza, & Sheth, 2005)

8.3.13.2. The extent to which designers' requirements are recorded and can be seen, reviewed, traced and understood (Burton-Jones, Storey, Sugumaran, & Ahluwalia, 2004).

8.3.13.3. The extent to which the concepts represented in the modules are understood (Soares, da Silva, & Simoes, 2010).

8.3.13.4. What theoretical construct represents this?

8.3.14. Accessibility

8.3.14.1. The extent to which the ontology is available, or easily or quickly retrievable (Pipino, Lee, & Wang, 2002), (Soares, da Silva, & Simoes, 2010), (Cappiello, 2005), (Redman, 1996)

8.3.14.2. The extent to which the ontology can be found using a Google search (Studer, Benjamins, & Fensel, 1998).

8.3.14.3. (Wang & Strong, 1996), (Cappiello, 2005), (Jarke, Jeusfeld, Quix, & Vassiliadis, 1999), (Naumann & Rolker, 2000), (Pipino, Lee, & Wang, 2002), (Soares, da Silva, & Simoes, 2010)

8.3.14.4. What theoretical construct represents this?

8.4. 6.4 Choose and Design Evaluation Episodes

8.4.1. Copy&Paste the requirements to be evaluated above into an evaluation episode in one of the four quadrants

8.4.1.1. A clear model that includes all things and entities involved in Australian Government service delivery

8.4.1.2. Broadly applicable

8.4.1.3. Coherence

8.4.1.4. Consistency

8.4.1.5. Modularity

8.4.1.6. Conciseness

8.4.1.7. Usability

8.4.1.8. Comprehensibility

8.4.1.9. Maintainability

8.4.1.10. Flexibility

8.4.1.11. Accountability

8.4.1.12. Expressiveness

8.4.1.13. Customisability

8.4.1.14. Learnability

8.4.1.15. Accessibility

8.4.1.16. Entities that are people can be identified

8.4.1.17. Entities that are 'things' can be identified

8.4.1.18. Relationships or 'properties' can be clearly explained

8.4.1.19. Relationships that are 'connected' can be identified

8.4.2. Identify requirements to be evaluated early (formatively) and artificially (lower left quadrant)

8.4.2.1. Formative Artificial Evaluation Episode 1

8.4.2.1.1. Paste property(ies) to be evaluated in this episode here

8.4.2.1.2. Choose evaluation method

8.4.2.1.3. Research Method Literature for Chosen Evaluation Method(s)

8.4.2.1.4. What do you want to learn from the evaluation?

8.4.2.1.5. Record details of evaluation design here (add nodes or include a link to a file)

8.4.2.1.6. Enact this Evaluation Episode

8.4.2.1.7. Record Learning from the Evaluation here (e.g. link to files)

8.4.2.1.8. Reconsider and Revise Evaluation Plan as DSR Progresses

8.4.3. Identify requirements to be evaluated early (formatively) and naturalistically (upper left quadrant)

8.4.3.1. Formative Naturalistic Evaluation Episode 1

8.4.3.1.1. Paste property(ies) to be evaluated in this episode here

8.4.3.1.2. Choose evaluation method

8.4.3.1.3. Research Method Literature for Chosen Evaluation Method(s)

8.4.3.1.4. What do you want to learn from the evaluation?

8.4.3.1.5. Record details of evaluation design here (add nodes or include a link to a file)

8.4.3.1.6. Enact this Evaluation Episode

8.4.3.1.7. Record Learning from the Evaluation here (e.g. link to files)

8.4.3.1.8. Reconsider and Revise Evaluation Plan as DSR Progresses

8.4.4. Identify requirements to be evaluated late (summatively) and artificially (lower right quadrant)

8.4.4.1. Summative Artificial Evaluation Episode 1

8.4.4.1.1. Paste property(ies) to be evaluated in this episode here

8.4.4.1.2. Choose evaluation method

8.4.4.1.3. Research Method Literature for Chosen Evaluation Method(s)

8.4.4.1.4. What do you want to learn from the evaluation?

8.4.4.1.5. Record details of evaluation design here (add nodes or include a link to a file)

8.4.4.1.6. Enact this Evaluation Episode

8.4.4.1.7. Record Learning from the Evaluation here (e.g. link to files)

8.4.4.1.8. Reconsider and Revise Evaluation Plan as DSR Progresses

8.4.5. Identify requirements to be evaluated late (summatively) and naturalistically (upper right quadrant)

8.4.5.1. Summative Naturalistic Evaluation Episode 1

8.4.5.1.1. Paste property(ies) to be evaluated in this episode here

8.4.5.1.2. Choose evaluation method

8.4.5.1.3. Research Method Literature for Chosen Evaluation Method(s)

8.4.5.1.4. What do you want to learn from the evaluation?

8.4.5.1.5. Record details of evaluation design here (add nodes or include a link to a file)

8.4.5.1.6. Enact this Evaluation Episode

8.4.5.1.7. Record Learning from the Evaluation here (e.g. link to files)

8.4.5.1.8. Reconsider and Revise Evaluation Plan as DSR Progresses

9. 4. Decide Requirements and Capture Design Ideas

9.1. 4.1 Functional Requirements

9.1.1. 4.1.1 Requirements for Achieving purpose and benefits

9.1.1.1. A clear model that includes all things and entities involved in Australian Government service delivery

9.1.1.1.1. How?

9.1.1.2. Entities that are 'individuals or groups' can be identified

9.1.1.2.1. How?

9.1.1.3. Entities that are 'things' can be identified

9.1.1.3.1. How?

9.1.1.4. Relationships or 'properties' can be identified

9.1.1.4.1. How?

9.1.1.5. Relationships that are 'connected' can be identified

9.1.1.5.1. How?

9.1.2. 4.1.2 Requirements for Reducing causes of the problem

9.1.2.1. Engaging other government departments

9.2. 4.2 Non-functional Requirements

9.2.1. In the checklists below, tick any non-functional requirements that are relevant to your artefact. Where alleviating causes from your problem as opportunities above can help, Copy&Paste the causes to be alleviated onto the how nodes for the relevant non-functional requirement below.

9.2.2. 4.2.1 Structural

9.2.2.1. Coherence

9.2.2.1.1. How?

9.2.2.2. Consistency

9.2.2.2.1. How?

9.2.2.3. Modularity

9.2.2.3.1. How?

9.2.2.4. Conciseness

9.2.2.4.1. How?

9.2.2.5. Add your own

9.2.2.5.1. provide a name

9.2.2.5.2. provide a description

9.2.3. Usage

9.2.3.1. Usability

9.2.3.1.1. How?

9.2.3.2. Comprehensibility

9.2.3.2.1. How?

9.2.3.3. Learnability

9.2.3.3.1. How?

9.2.3.4. Customisability

9.2.3.4.1. How?

9.2.3.5. Suitability

9.2.3.5.1. Graphic designer will be used

9.2.3.6. Accessibility

9.2.3.6.1. How?

9.2.3.7. Elegance

9.2.3.7.1. How?

9.2.3.8. Fun

9.2.3.9. Traceability

9.2.3.10. Add your own

9.2.3.10.1. provide a name

9.2.3.10.2. provide a description

9.2.4. Management

9.2.4.1. Maintainability

9.2.4.1.1. How?

9.2.4.2. Flexibility

9.2.4.2.1. How?

9.2.4.3. Accountability

9.2.4.3.1. How?

9.2.4.4. Add your own

9.2.4.4.1. provide a name

9.2.4.4.2. provide a description

9.2.5. Environmental

9.2.5.1. Expressiveness

9.2.5.2. Correctness

9.2.5.3. Generality

9.2.5.4. Interoperability

9.2.5.5. Autonomy

9.2.5.6. Proximity

9.2.5.7. Completeness

9.2.5.8. Effectiveness

9.2.5.9. Efficiency

9.2.5.10. Robustness

9.2.5.11. Resilience

9.2.5.12. Add your own

9.2.5.12.1. provide a name

9.2.5.12.2. provide a description