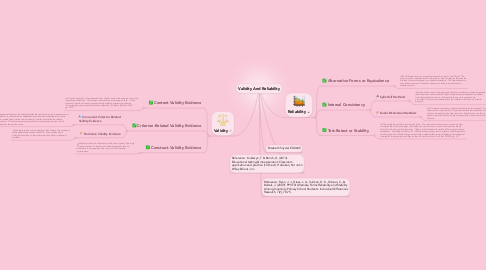

1. Validity

1.1. Content Validity Evidence

1.1.1. A visual inspection of test questions to check whether they cover the instructional objectives. This helps ensure that educators stay on task. "In the context of classroom testing, content validity evidence answers the question 'does the test measure the instructional objectives?'" (Kubiszyn & Borich, 2013, p. 327).

1.2. Criterion-Related Validity Evidence

1.2.1. Concurrent Criterion-Related Validity Evidence

1.2.1.1. The measurement to be validated is administered at the same time as an established measurement. In other words, a new test and an established test are given to the same students at about the same time, and the relationship between the two sets of scores is examined. This helps teachers check that the new assessment is at least as valid as the established one.
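The relationship between the two sets of scores is usually summarized as a correlation coefficient. The following is a minimal sketch, assuming a Pearson correlation is used; the score lists and the names `pearson_r`, `new_test`, and `established_test` are hypothetical and do not come from the source.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

# Hypothetical scores for the same five students on both tests.
new_test = [70, 82, 65, 90, 75]
established_test = [68, 85, 60, 92, 78]

# A value close to 1 suggests the new test ranks students much like the established one.
validity_coefficient = pearson_r(new_test, established_test)
print(round(validity_coefficient, 2))
```

The same calculation could be applied to alternate-forms or test-retest data by passing the two sets of scores from those administrations instead.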

1.2.2. Predictive Validity Evidence

1.2.2.1. The test predicts some future behavior of the test takers. This works well with aptitude tests such as the SAT. It can indicate which students are likely to do well, so that instructors can adjust to better aid students.

1.3. Construct Validity Evidence

1.3.1. Checks whether the test's relationship to other information matches what a theory or rationale about the topic would predict. For a teacher building an assessment, this means the questions should be central to the topic, so that scores improve as students learn it.

2. Reference: Kubiszyn, T. & Borich, G. (2013). Educational testing & measurement: Classroom application and practice (10th ed.). Hoboken, NJ: John Wiley & Sons, Inc.

3. Reliability

3.1. Alternate Forms or Equivalence

3.1.1. Two different forms of a test, such as Test A and Test B, are given to the same students. The two sets of scores are then compared, typically by correlating them; if students score far lower on one form than on the other, the lower-scoring form is considered unreliable. This helps educators ensure that the versions they have created are reliable and relevant to the material covered.

3.2. Internal Consistency

3.2.1. Split-Half Methods

3.2.1.1. A single test is split into two halves (for example, odd-numbered and even-numbered questions, also known as an odd-even split), and the scores on the two halves are compared. Questions that are answered consistently can be kept for a shorter, better version of the test, while poor questions are eliminated. This helps assure educators that the questions on the test are reliable.
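An odd-even split can be sketched in a few lines. This example assumes items are scored 1 (correct) or 0 (incorrect), correlates the two half scores, and then applies the Spearman-Brown formula, a standard correction that is not mentioned in the text above, to estimate reliability for the full-length test. The response data and function names are hypothetical.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

def spearman_brown(half_r):
    """Estimate full-test reliability from the correlation between two halves."""
    return 2 * half_r / (1 + half_r)

# Hypothetical data: one row per student, one column per question (1 = correct).
responses = [
    [1, 1, 1, 1, 1, 1],
    [1, 1, 1, 0, 1, 1],
    [1, 0, 1, 1, 0, 0],
    [0, 1, 0, 0, 1, 0],
    [1, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
]

# Questions 1, 3, 5 form the "odd" half; questions 2, 4, 6 form the "even" half.
odd_scores = [sum(row[0::2]) for row in responses]
even_scores = [sum(row[1::2]) for row in responses]

half_r = pearson_r(odd_scores, even_scores)
full_r = spearman_brown(half_r)
print(round(half_r, 2), round(full_r, 2))
```

The correction is applied because each half is only half as long as the real test, and shorter tests tend to produce lower reliability estimates.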

3.2.2. Kuder-Richardson Methods

3.2.2.1. This internal consistency method estimates reliability by measuring how much the questions within one form of the assessment have in common with one another, compared with how much they would have in common with the questions on an equivalent form. Teachers can use this to measure the reliability of a test on a single concept within a lesson, such as dividing fractions.
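One widely used Kuder-Richardson estimate is the KR-20 formula, $KR_{20} = \frac{k}{k-1}\left(1 - \frac{\sum p_i q_i}{\sigma^2}\right)$, where $k$ is the number of questions, $p_i$ is the proportion of students answering question $i$ correctly, $q_i = 1 - p_i$, and $\sigma^2$ is the variance of total scores. The sketch below assumes right/wrong (1/0) scoring and uses the population variance; some texts use the sample variance instead. The response data and the name `kr20` are hypothetical.

```python
def kr20(responses):
    """KR-20 reliability for a list of students' 1/0 item responses."""
    n_students = len(responses)
    k = len(responses[0])  # number of questions

    # Sum of p * q across questions, where p is the proportion answering correctly.
    sum_pq = 0.0
    for item in range(k):
        p = sum(row[item] for row in responses) / n_students
        sum_pq += p * (1 - p)

    # Population variance of the students' total scores (an assumption of this sketch).
    totals = [sum(row) for row in responses]
    mean_total = sum(totals) / n_students
    variance = sum((t - mean_total) ** 2 for t in totals) / n_students

    return (k / (k - 1)) * (1 - sum_pq / variance)

# Hypothetical data: one row per student, one column per question (1 = correct).
responses = [
    [1, 1, 1, 1, 1, 1],
    [1, 1, 1, 0, 1, 1],
    [1, 0, 1, 1, 0, 0],
    [0, 1, 0, 0, 1, 0],
    [1, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
]

reliability = kr20(responses)
print(round(reliability, 2))
```

Unlike the split-half approach, this estimate does not depend on how the questions happen to be divided into halves.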

3.3. Test-Retest or Stability

3.3.1. This simply means that the same test is given twice to the same students. The second administration can take place anywhere from a few hours to a week or more after the first. Educators can use this tool to check that test scores are stable over time and therefore reliable for classroom use. Education researchers have also used this approach. According to Ryan et al. (2009), "Although changes from test to retest on the average were small and positive, reliable change methodology indicated that some participants demonstrated meaningful improvement or decline as they moved from Form A to Form B" (p. 74).