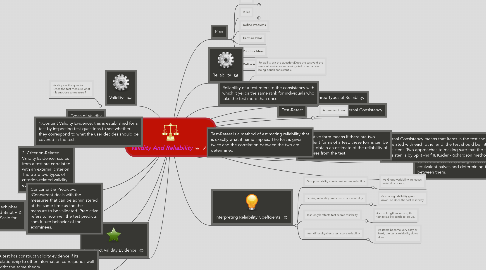

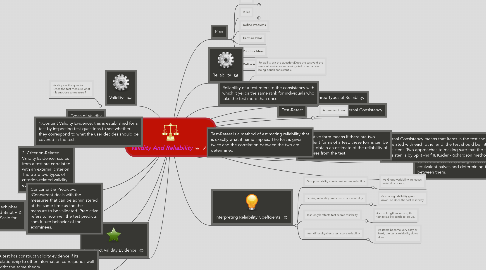

Validity And Reliability

by Chantrice Culbreath

1. Validity:

1.1. Validity asks the question: Does the test measure what it is supposed to measure?

2. Construct Validity Evidence

3. Types of Validity

4. 1. Content Validity Evidence: this is established for a test by inspecting test questions to see whether they correspond to what the user decides should be covered in the test.

5. 2. Criterion-Related Validity Evidence: scores from a test are correlated with an external criterion. There are two types of criterion-related validity evidence.

6. Concurrent & Predictive: Concurrent validity evidence deals with measures that can be administered at the same time as the measure to be validated. Predictive validity evidence refers to how well the test predicts some future behavior of the examinees.

7. Some tests used when establishing concurrent validity are the Stanford-Binet V and the Wechsler Intelligence Scale for Children-IV (WISC-IV).

8. A test has construct validity evidence if its relationship to other information corresponds well with some theory.

9. Split-half reliability involves splitting the test into two equivalent halves and determining the correlation between them.

9.1. Kuder-Richardson involves measuring the extent to which items within one form of the test have as much in common with one another as do the items in that one form with corresponding items in an equivalent form.
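One member of the Kuder-Richardson family is KR-20, which applies to items scored right/wrong. A minimal Python sketch, assuming 0/1 scoring and using population variances throughout so that the item variances `p * (1 - p)` and the total-score variance are on the same footing. The function name and response data are hypothetical.

```python
from statistics import pvariance


def kr20(item_matrix):
    """KR-20 reliability for items scored 0 (wrong) or 1 (right).
    item_matrix[i][j] is examinee i's score on item j."""
    n_examinees = len(item_matrix)
    k = len(item_matrix[0])  # number of items
    if k < 2:
        raise ValueError("KR-20 needs at least two items")
    totals = [sum(row) for row in item_matrix]
    total_variance = pvariance(totals)  # population variance of total scores
    sum_pq = 0.0
    for j in range(k):
        p = sum(row[j] for row in item_matrix) / n_examinees  # proportion correct on item j
        sum_pq += p * (1 - p)  # variance of a 0/1 item
    return (k / (k - 1)) * (1 - sum_pq / total_variance)


# Hypothetical responses: 6 examinees x 6 items (1 = correct, 0 = wrong)
responses = [
    [1, 1, 1, 1, 1, 1],
    [1, 1, 1, 1, 1, 0],
    [1, 0, 1, 1, 0, 0],
    [0, 1, 1, 0, 0, 0],
    [1, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
]

print(f"KR-20 = {kr20(responses):.2f}")
```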

10. Internal Consistency means that the items in the test should be correlated with one another, so that the test is internally consistent. Two approaches to estimating internal consistency are the split-half and Kuder-Richardson methods.

11. Alternative form: If there are two equivalent forms of a test, these forms can be used to obtain an estimate of the reliability of the scores from the test. Both forms are given to the same group, and the two sets of scores are correlated.

12. Test-Retest is a method of estimating reliability that is exactly what its name implies: the test is given twice, and the correlation between the two sets of scores is determined.

13. Internal Consistency

14. Test-Retest

14.1. Alternative Form

15. Three Types of Reliability:

17. Reliability

17.1. Reliability asks the question: Does the test yield the same or similar score rankings (all other factors being equal) consistently?

18. Interpreting Reliability Coefficients

18.1. Group variability affects test score reliability.

18.1.1. As group variability increases, reliability goes up.

18.2. Scoring reliability limits test score reliability.

18.2.1. As scoring reliability goes down, so does the test reliability.

18.3. Test length affects test score reliability.

18.3.1. As test length increases, the test reliability tends to go up.
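The Spearman-Brown prophecy formula is a standard way to quantify this trend. A minimal Python sketch, assuming the added items are comparable in quality to the existing ones (the formula's key assumption, and the reason the outline says reliability only "tends to" go up). The starting reliability of 0.60 is hypothetical.

```python
def spearman_brown(reliability, length_factor):
    """Predicted reliability when a test is lengthened by `length_factor`
    (e.g. 2 = twice as many comparable items)."""
    if not 0 <= reliability <= 1:
        raise ValueError("reliability must be between 0 and 1")
    if length_factor <= 0:
        raise ValueError("length_factor must be positive")
    return (length_factor * reliability) / (1 + (length_factor - 1) * reliability)


for factor in (1, 2, 3):
    print(factor, round(spearman_brown(0.60, factor), 3))
# → 1 0.6
# → 2 0.75
# → 3 0.818
```

Each added block of items raises the predicted reliability by a smaller amount than the last.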

18.4. Item difficulty affects test score reliability.

18.4.1. As items become very easy or very hard, the test's reliability goes down.
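One way to see why, sketched in Python under the assumption of 0/1 scoring: an item's difficulty index p is the proportion of examinees answering it correctly, and its variance is p * (1 - p), which peaks at p = 0.5. A very easy or very hard item barely separates examinees, so it adds little to total-score variance. The function names and responses are hypothetical.

```python
def item_difficulty(responses):
    """Item difficulty index p: proportion of examinees answering correctly (1 = correct)."""
    return sum(responses) / len(responses)


def item_variance(p):
    """Variance of a 0/1-scored item whose difficulty index is p."""
    return p * (1 - p)


# Hypothetical 0/1 responses from 20 examinees to three items
very_easy = [1] * 19 + [0]      # p = 0.95
moderate = [1] * 10 + [0] * 10  # p = 0.50
very_hard = [1] + [0] * 19      # p = 0.05

for name, item in [("very easy", very_easy), ("moderate", moderate), ("very hard", very_hard)]:
    p = item_difficulty(item)
    print(f"{name:9s}  p = {p:.2f}  item variance = {item_variance(p):.4f}")
```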