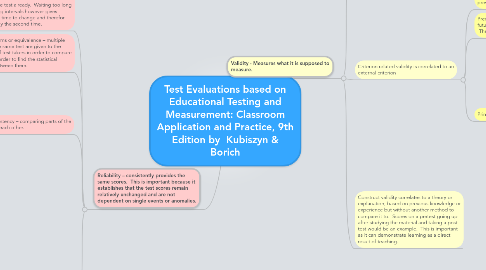

1. Reliability – consistently provides the same scores. This is important because it establishes that the test scores remain relatively unchanged and are not dependent on single events or anomalies.

1.1. Test-retest or stability – giving test takers the same test twice and checking whether the two sets of results are statistically the same. Problems can occur if students remember material from the first administration and score higher on the second simply because they have already seen the test. Waiting too long between administrations, however, gives students time to change and therefore score differently the second time.

1.2. Alternative forms or equivalence – multiple versions of the same test are given to the same group of test takers, and the results are compared to find the statistical correlation between them.
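
Both test-retest and alternative-forms reliability come down to correlating two sets of scores from the same group. The sketch below is a minimal illustration, assuming the Pearson correlation is the statistic used; the score lists are hypothetical and not taken from any real test.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length score lists with at least 2 entries")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when all scores are identical")
    return cov / sqrt(var_x * var_y)

# Hypothetical scores for five students on two administrations
# (or on two alternative forms) of the same test.
first_scores = [70, 82, 65, 90, 75]
second_scores = [72, 85, 63, 92, 74]

reliability = pearson_r(first_scores, second_scores)
print(f"Estimated reliability coefficient: {reliability:.2f}")
```

A coefficient close to 1 means students kept roughly the same rank order across the two sets of scores.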

1.3. Internal consistency – comparing parts of the same test to each other.

1.3.1. Split-half method – dividing the test questions into two equal groups and comparing scores on the two halves. Odd-even reliability is better than dividing a test into first and second halves because it overcomes the issue of easier questions being at the beginning of a test and more difficult questions towards the end.

1.3.2. Kuder-Richardson methods – estimate how much the items within one form of a test have in common with one another, compared with how much they would have in common with items on an alternative version of that test. This is a complicated process that teachers are unlikely to perform, but they may read about it when researching standardized test programs.
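
The two internal-consistency methods above can be sketched as follows. This is an illustrative sketch, not a procedure from the source: it assumes items are scored 1 (correct) or 0 (incorrect), applies the standard Spearman-Brown correction to the odd-even correlation, and uses the KR-20 formula with the population variance of total scores (conventions on the variance differ). The response matrix is hypothetical.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

def odd_even_split_half(item_scores):
    """Odd-even split-half reliability, stepped up to full test length."""
    odd_totals = [sum(row[0::2]) for row in item_scores]   # items 1, 3, 5, ...
    even_totals = [sum(row[1::2]) for row in item_scores]  # items 2, 4, 6, ...
    r_half = pearson_r(odd_totals, even_totals)
    # Spearman-Brown correction: each half is only half as long as the test.
    return 2 * r_half / (1 + r_half)

def kr20(item_scores):
    """Kuder-Richardson formula 20 for items scored 0 or 1."""
    n_students = len(item_scores)
    n_items = len(item_scores[0])
    totals = [sum(row) for row in item_scores]
    mean_total = sum(totals) / n_students
    # Population variance of total scores (an assumption; some texts use n - 1).
    var_total = sum((t - mean_total) ** 2 for t in totals) / n_students
    pq_sum = 0.0
    for j in range(n_items):
        p = sum(row[j] for row in item_scores) / n_students  # proportion correct
        pq_sum += p * (1 - p)
    return (n_items / (n_items - 1)) * (1 - pq_sum / var_total)

# Hypothetical responses: one row per student, one column per item.
responses = [
    [1, 1, 1, 1, 1, 1],
    [1, 1, 1, 1, 1, 0],
    [1, 1, 1, 0, 1, 0],
    [1, 1, 0, 1, 0, 0],
    [1, 0, 1, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
]

split_half = odd_even_split_half(responses)
kr20_value = kr20(responses)
print(f"Odd-even split-half (corrected): {split_half:.2f}")
print(f"KR-20: {kr20_value:.2f}")
```

The two estimates will usually differ somewhat, since the split-half value depends on one particular way of dividing the items while KR-20 draws on every item.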

1.4. Principles for interpreting reliability coefficients

1.4.1. 1. Heterogeneous groups produce higher coefficients than homogeneous groups. This is important because a mixed-ability group is more representative of the typical classroom.

1.4.2. 2. Scoring of the test questions affects the reliability of the test. If mistakes are made when marking the questions, they will affect the reliability of the overall comparisons. This is important because introducing error into the calculation will not provide an accurate numerical value. A mistake early in the calculation will be magnified as the numbers are further manipulated.

1.4.3. 3. The greater the number of items included in the analysis, the more reliable the outcome will be, if all other factors are equal. With fewer items, an outlying score or anomaly has a greater effect on the computations. This is important because larger samples will be more accurate than smaller ones; this is why large testing companies are assumed to have better reliability than smaller-scale organizations.

1.4.4. 4. Reliability of scores decreases if tests are outside the limits of the test takers. When a test is too easy or too difficult, the score distribution becomes homogenized, yielding lower correlation coefficients. This is important because if the test is too easy, the results will be skewed. If the test is too difficult, many students will guess, which is in and of itself an unreliable way to yield consistent answers.
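
The effect of test length described in principle 3 can be illustrated with the Spearman-Brown prophecy formula, which predicts the reliability of a test lengthened or shortened with comparable items. The formula is a standard result but is not named in these notes, so treat this as a supplementary sketch; the starting reliability of 0.60 is a hypothetical value.

```python
def spearman_brown(reliability, length_factor):
    """Predicted reliability when test length is multiplied by length_factor.

    Assumes the added (or removed) items are comparable to the existing ones.
    """
    return (length_factor * reliability) / (1 + (length_factor - 1) * reliability)

current = 0.60  # hypothetical reliability of the existing test

doubled = spearman_brown(current, 2)    # twice as many items
halved = spearman_brown(current, 0.5)   # half as many items

print(f"Current: {current:.2f}, doubled: {doubled:.2f}, halved: {halved:.2f}")
```

Doubling the test raises the predicted coefficient and halving it lowers the coefficient, which matches the "all other factors equal" wording of the principle.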

2. Validity – measures what it is supposed to measure.

2.1. Content validity – checks for alignment with teaching objectives or standards. It cannot assess the appropriateness of a test in other respects, such as reading level. It works best with achievement tests. It is judged subjectively; there are no correlation computations. This is important because we want to be sure that we are testing that which we set out to test, and not another set of skills or abilities.

2.2. Criterion-related validity – test scores are correlated with an external criterion.

2.2.1. Concurrent criterion-related validity – compares the results of an already established test with those of a new test to determine whether the new test is also valid. This is important because the research done with existing tests can then be used to develop new tests that, for one reason or another, are easier to use or better than the previous test.

2.2.2. Predictive validity – predicts the likelihood of a future event based on the scores from the test. The SAT is an example of this kind of test.

2.2.3. Principles of concurrent and predictive validity

2.2.3.1. 1 – Concurrent validity coefficients are generally higher than predictive validity coefficients (Kubiszyn 335). This is important to remember because a lower predictive coefficient will not necessarily mean the test is unusable.

2.2.3.2. 2 – Homogeneous groups of test takers will result in lower validity coefficients. Heterogeneous, or mixed-ability, groups will result in higher validity coefficients. This is important because if the scores used to calculate the coefficient are too similar to each other, the result will reflect that homogeneity.

2.2.3.3. 3 – Interpretation is situational for validity coefficients. The relevance and reliability of the criterion need to be taken into consideration. This is important because a coefficient that is acceptable for one purpose or situation may not be acceptable for another.
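
Principle 2 (homogeneous groups lower the coefficient) can be seen numerically by computing the same correlation on a full group and on a narrow slice of it. The sketch below uses made-up test and criterion scores built from a fixed pattern; it only demonstrates the effect and does not reflect data from any real test.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

# Hypothetical heterogeneous group: test scores from 40 to 95.
test_scores = list(range(40, 100, 5))
# Hypothetical criterion: the test score plus a fixed +8 / -8 disturbance.
criterion = [s + (8 if i % 2 == 0 else -8) for i, s in enumerate(test_scores)]

r_full = pearson_r(test_scores, criterion)

# Homogeneous subgroup: only the four highest-scoring test takers.
r_restricted = pearson_r(test_scores[-4:], criterion[-4:])

print(f"Full group: {r_full:.2f}, top scorers only: {r_restricted:.2f}")
```

The relationship between test and criterion is the same in both cases; only the spread of the group differs, and the narrower group yields the lower coefficient.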