1. Fundamentals of Testing

1.1. What is testing

1.1.1. Finding defects

1.1.2. Gaining confidence about the level of quality and providing information.

1.1.3. Preventing defects

1.2. Fundamental test process

1.2.1. Planning and control

1.2.2. Analysis and design

1.2.3. Implementation and execution

1.2.4. Evaluating exit criteria and reporting

1.2.5. Test closure activities

1.3. Viewpoints in testing

1.3.1. Development testing

1.3.1.1. To cause as many failures as possible so that defects in the software are identified and can be fixed.

1.3.2. Acceptance testing

1.3.2.1. To confirm that the system works as expected and to gain confidence that it meets the requirements.

1.3.3. Testing for quality assessment

1.3.3.1. To assess the quality of the software (with no intention of fixing defects) and to inform stakeholders of the risk of releasing the system at a given time.

1.3.4. Maintenance testing

1.3.4.1. To check that no new defects have been introduced during development of the changes.

1.3.5. Operational testing

1.3.5.1. To assess system characteristics such as reliability or availability.

1.4. Debugging vs. Testing

1.4.1. Dynamic testing

1.4.1.1. Shows failures that are caused by defects.

1.4.2. Debugging

1.4.2.1. The development activity that finds, analyzes and removes the cause of the failure.

1.4.3. Subsequent re-testing

1.4.3.1. Ensures that the fix does indeed resolve the failure.
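The division of labour above (a dynamic test exposes a failure, debugging removes its cause, re-testing confirms the fix) can be sketched in Python. The functions `average_buggy` and `average_fixed` and their defect are hypothetical, invented only for illustration.

```python
def average_buggy(values):
    # Hypothetical defect: divides by a hard-coded 2 instead of the count.
    return sum(values) / 2


def average_fixed(values):
    # After debugging located the cause: divide by the actual number of values.
    return sum(values) / len(values)


def run_test(func):
    """Dynamic test: execute the code and compare actual with expected result."""
    expected = 2.0
    actual = func([1, 2, 3])
    return actual == expected


# Testing shows the failure caused by the defect ...
print("before fix:", "pass" if run_test(average_buggy) else "FAIL")
# ... and re-testing after the fix confirms that the failure is resolved.
print("after fix:", "pass" if run_test(average_fixed) else "FAIL")
```

Note that the test only reports *that* the result is wrong; finding the hard-coded divisor is the debugging step.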

1.5. Seven Testing Principles

1.5.1. Testing shows presence of defects

1.5.1.1. Testing can show that defects are present but cannot guarantee the correctness of the system.

1.5.2. Exhaustive testing is impossible

1.5.2.1. Testing everything (all combinations of inputs and preconditions) is not feasible except for trivial cases. Instead, risk analysis and priorities should be used to focus testing efforts.
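A quick calculation shows why exhaustive testing is impossible even for a tiny interface. The sketch assumes a hypothetical function with two independent 32-bit integer inputs and an optimistic rate of one test per nanosecond; both figures are illustrative assumptions.

```python
# Illustrative assumptions: two independent 32-bit integer inputs,
# and one test executed every nanosecond.
INPUT_BITS = 32
TESTS_PER_SECOND = 10**9
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60

combinations = (2 ** INPUT_BITS) ** 2  # every pair of 32-bit values
years = combinations / TESTS_PER_SECOND / SECONDS_PER_YEAR

print(f"{combinations:,} input combinations")
print(f"about {years:,.0f} years to run them all")
```

Under these assumptions the run would take roughly 585 years, which is why test effort is allocated by risk and priority instead.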

1.5.3. Early testing

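1.5.3.1. Testing activities should start as early as possible in the software development life cycle and should be focused on defined objectives.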

1.5.4. Defect clustering

1.5.4.1. A small number of modules usually contains most of the defects discovered during pre-release testing, or is responsible for most of the operational failures; i.e. defects are not distributed evenly across the application but are concentrated in a few sections of it.
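Defect clustering can be checked by ranking modules by defect count and measuring how much of the total the top few account for. The module names, counts and the 20% cut-off below are hypothetical, chosen only to illustrate the calculation.

```python
# Hypothetical defect counts per module, for illustration only.
defects_per_module = {
    "payments": 48, "auth": 27, "search": 6, "profile": 5, "reports": 4,
    "settings": 3, "help": 2, "export": 2, "notifications": 2, "about": 1,
}


def share_in_top_modules(counts, fraction=0.2):
    """Return the share of all defects found in the top `fraction` of modules."""
    ranked = sorted(counts.values(), reverse=True)
    top_n = max(1, round(len(ranked) * fraction))
    return sum(ranked[:top_n]) / sum(ranked)


share = share_in_top_modules(defects_per_module)
print(f"{share:.0%} of defects are in 20% of the modules")
```

With this made-up data, two of the ten modules hold 75% of the defects; such modules are natural candidates for extra test effort.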

1.5.5. Pesticide paradox

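1.5.5.1. If the same tests are repeated over and over, they eventually stop finding new defects. To overcome this, test cases need to be regularly reviewed and revised, and new and different tests need to be written to exercise different parts of the software.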

1.5.6. Testing is context dependent

1.5.6.1. Testing is done differently in different contexts; for example, safety-critical software is tested differently from an e-commerce site.

1.5.7. Absence-of-errors fallacy

1.5.7.1. Finding and fixing defects does not help if the system built is unusable and does not fulfill the users' needs and expectations.

1.6. Test Process

1.6.1. Test Planning and Control

1.6.1.1. Test planning: defining the objectives of testing and the specification of test activities needed to meet the objectives and mission.

1.6.1.2. Test control: the ongoing activity of comparing actual progress against the plan and reporting the status, including deviations from the plan. Testing activities should be monitored throughout the project.
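Test control boils down to comparing actual progress with the plan and flagging deviations. In this minimal sketch the daily figures and the 10% tolerance are hypothetical; a real project would take them from its test plan and test management tool.

```python
# Hypothetical plan and actuals: cumulative test cases executed per day.
planned = {"day 1": 20, "day 2": 40, "day 3": 60}
actual = {"day 1": 19, "day 2": 31, "day 3": 50}


def deviations(plan, done, tolerance=0.1):
    """Return (day, executed, planned) for days trailing the plan by more than `tolerance`."""
    report = []
    for day, target in plan.items():
        executed = done.get(day, 0)
        shortfall = (target - executed) / target
        if shortfall > tolerance:
            report.append((day, executed, target))
    return report


for day, executed, target in deviations(planned, actual):
    print(f"{day}: {executed}/{target} executed, behind plan")
```

Each reported deviation is a trigger for a control action, such as re-prioritizing tests or adjusting the schedule.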

1.6.2. Test Analysis and design

1.6.2.1. Reviewing the test basis

1.6.2.2. Evaluating testability of the test basis and test objects

1.6.2.3. Identifying and prioritizing test conditions based on analysis of test items, the specification, behavior and structure of the software

1.6.2.4. Designing and prioritizing high level test cases

1.6.2.5. Identifying necessary test data to support the test conditions and test cases

1.6.2.6. Designing the test environment setup and identifying any required infrastructure and tools

1.6.2.7. Creating bi-directional traceability between test basis and test cases
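Bi-directional traceability means being able to go from a test case to the parts of the test basis it covers, and back. A minimal sketch, assuming hypothetical requirement and test-case IDs, builds the reverse map and uses it to spot gaps in both directions.

```python
# Hypothetical requirement and test-case IDs, for illustration only.
test_to_reqs = {
    "TC-01": {"REQ-1"},
    "TC-02": {"REQ-1", "REQ-2"},
    "TC-03": {"REQ-4"},  # traces to a requirement missing from the test basis
}
requirements = {"REQ-1", "REQ-2", "REQ-3"}


def build_reverse(forward):
    """Invert test -> requirements into requirement -> tests."""
    reverse = {}
    for test, reqs in forward.items():
        for req in reqs:
            reverse.setdefault(req, set()).add(test)
    return reverse


req_to_tests = build_reverse(test_to_reqs)
uncovered = sorted(requirements - set(req_to_tests))  # requirements with no test
unknown = sorted(set(req_to_tests) - requirements)    # references outside the basis

print("requirements without tests:", uncovered)
print("test references to unknown requirements:", unknown)
```

The forward map answers "what does this test cover?", the reverse map answers "which tests cover this requirement?", which is what impact analysis needs when a requirement changes.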

1.6.3. Test implementation and execution

1.6.3.1. Finalizing, implementing and prioritizing test cases

1.6.3.2. Developing and prioritizing test procedures, creating test data and writing automated test scripts.

1.6.4. Evaluating exit criteria and reporting
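Evaluating exit criteria means checking test results against the criteria agreed during planning and reporting the outcome. The sketch below assumes two hypothetical criteria (a minimum pass rate of 95% and no open critical defects); the thresholds and the `TestSummary` type are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class TestSummary:
    executed: int
    passed: int
    open_critical_defects: int


# Hypothetical exit criteria, as they might be defined during test planning.
MIN_PASS_RATE = 0.95
MAX_OPEN_CRITICAL = 0


def exit_criteria_met(summary):
    """Return (met, reasons) so the outcome can be reported to stakeholders."""
    reasons = []
    pass_rate = summary.passed / summary.executed if summary.executed else 0.0
    if pass_rate < MIN_PASS_RATE:
        reasons.append(f"pass rate {pass_rate:.0%} below {MIN_PASS_RATE:.0%}")
    if summary.open_critical_defects > MAX_OPEN_CRITICAL:
        reasons.append(f"{summary.open_critical_defects} critical defect(s) still open")
    return not reasons, reasons


met, reasons = exit_criteria_met(TestSummary(executed=200, passed=186, open_critical_defects=1))
print("exit criteria met" if met else "not met: " + "; ".join(reasons))
```

Returning the reasons, not just a yes/no verdict, gives the test summary report something concrete to say about why more testing may be needed.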

1.6.5. Test closure activities

2. Testing Throughout the Software Life Cycle

2.1. Software Development Life Cycle

2.1.1. Requirement

2.1.2. Design

2.1.3. Develop

2.1.4. Test (Validate)

2.1.5. Deploy (Implement)

2.1.6. Support (Maintain)

2.2. Software Development Models

2.2.1. V-model (Sequential Development Model)

2.2.1.1. Component (unit) testing

2.2.1.1.1. Test basis

2.2.1.1.2. Test objects

2.2.1.1.3. tested in isolation

2.2.1.1.4. Most thorough look at detail: error handling and interfaces
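Component testing in isolation usually replaces the component's dependencies with stubs so that only the component itself is exercised, including its error handling. A minimal sketch with Python's standard `unittest` module; `PriceService` and its exchange-rate dependency are hypothetical.

```python
import unittest


class PriceService:
    """Hypothetical component under test; its rate source is injected so it can be isolated."""

    def __init__(self, rate_source):
        self.rate_source = rate_source

    def convert(self, amount, currency):
        if amount < 0:
            raise ValueError("amount must be non-negative")
        return round(amount * self.rate_source.rate(currency), 2)


class StubRates:
    """Stub replacing the real (hypothetical) exchange-rate component."""

    def rate(self, currency):
        return {"EUR": 0.5}[currency]


class PriceServiceTest(unittest.TestCase):
    def setUp(self):
        self.service = PriceService(StubRates())

    def test_converts_with_stubbed_rate(self):
        self.assertEqual(self.service.convert(10, "EUR"), 5.0)

    def test_rejects_negative_amount(self):
        # Error handling is examined in detail at the component level.
        with self.assertRaises(ValueError):
            self.service.convert(-1, "EUR")
```

Saved as a module, the tests can be run with `python -m unittest <module>`. Because the stub returns a fixed rate, a failure points at `PriceService` itself and not at the rate source.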

2.2.1.2. Integration testing

2.2.1.2.1. Test basis

2.2.1.2.2. Test objects

2.2.1.2.3. Component integration testing

2.2.1.2.4. System integration testing

2.2.1.2.5. more than one tested component

2.2.1.2.6. communication between components

2.2.1.2.7. what the set can perform that is not possible individually

2.2.1.2.8. non-functional aspects if possible

2.2.1.2.9. Big-Bang integration

2.2.1.2.10. Incremental Integration

2.2.1.2.11. Top-Down Integration

2.2.1.2.12. Bottom-up Integration

2.2.1.2.13. Thread integration
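The incremental strategies differ in which scaffolding they need: top-down integration replaces lower-level components that are not yet integrated with stubs, while bottom-up integration uses drivers to call lower-level components in place of the higher layers. The three-layer system below (UI, orders, storage) is hypothetical.

```python
# Hypothetical three-layer system: ui -> orders -> storage.


def storage_save(order):
    """Real low-level component."""
    return {"id": 1, **order}


def storage_stub(order):
    """Stub: stands in for storage while it is not yet integrated (top-down)."""
    return {"id": 0, **order}


def place_order(item, save=storage_save):
    """Mid-level component whose dependency can be replaced by a stub."""
    if not item:
        raise ValueError("item required")
    return save({"item": item})


# Top-down: the higher component is real, the lower one is stubbed.
assert place_order("book", save=storage_stub)["id"] == 0


def orders_driver():
    """Driver: plays the role of the not-yet-integrated UI layer (bottom-up)."""
    return place_order("book")


# Bottom-up: orders and the real storage are integrated and driven from below the UI.
assert orders_driver() == {"id": 1, "item": "book"}
print("top-down with a stub and bottom-up with a driver both ran")
```

Big-bang integration would skip this scaffolding and assemble everything at once, which makes failures harder to localize.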

2.2.1.3. System testing

2.2.1.3.1. last integration step

2.2.1.3.2. functional

2.2.1.3.3. non-functional

2.2.1.3.4. Integration testing in the large

2.2.1.4. Acceptance testing

2.2.1.4.1. User acceptance testing

2.2.1.4.2. Operational (acceptance) testing

2.2.1.4.3. Contract and regulation acceptance testing

2.2.1.4.4. Alpha and beta testing

2.2.2. Iterative-incremental Development Models

2.2.2.1. The process of establishing requirements, designing, building and testing a system in a series of short development cycles.

2.2.3. Waterfall model

2.2.4. Testing within a Life Cycle Model

2.3. Test Types

2.3.1. Functional Testing

2.3.1.1. Black Box Testing

2.3.2. Non-functional Testing

2.3.2.1. Black Box Testing

2.3.3. Structural Testing

2.3.3.1. White Box Testing
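Structural (white-box) testing derives tests from the code's internal structure; a simple goal is to exercise every outcome of every decision. In this sketch the function `shipping_cost` and its 20 kg threshold are hypothetical; the two inputs are chosen so that both outcomes of the single decision are taken.

```python
def shipping_cost(weight_kg):
    """Hypothetical function under test with one decision (two branches)."""
    if weight_kg > 20:
        return 15.0  # heavy parcels
    return 5.0  # light parcels


# Inputs chosen from the code structure: one makes `weight_kg > 20` true,
# the other makes it false, giving 100% decision (branch) coverage here.
branch_tests = {25: 15.0, 10: 5.0}

for weight, expected in branch_tests.items():
    assert shipping_cost(weight) == expected
print("both branches exercised")
```

A black-box tester working from the specification alone would more likely add a boundary value such as 20; the two views complement each other.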

2.3.4. Re-testing and Regression Testing

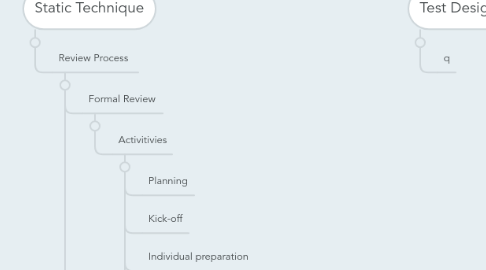
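The distinction between re-testing and regression testing in 2.3.4 can be sketched as two selections from the same suite: re-testing (confirmation testing) reruns the tests that failed before a fix, while regression testing reruns the others to catch unintended side effects. The `discount` function, its earlier boundary defect and the test names are hypothetical.

```python
def discount(total):
    """Function after a hypothetical fix: 10% off orders of 100 or more."""
    return total * 9 // 10 if total >= 100 else total


def test_discount_boundary():  # failed before the fix (defect at total == 100)
    assert discount(100) == 90


def test_no_discount_below():  # area not touched by the fix
    assert discount(50) == 50


def test_discount_above():
    assert discount(200) == 180


# name -> (test function, failed before the fix?)
suite = {
    "test_discount_boundary": (test_discount_boundary, True),
    "test_no_discount_below": (test_no_discount_below, False),
    "test_discount_above": (test_discount_above, False),
}


def run(tests):
    """Run the given tests and return the names of those that fail."""
    failures = []
    for name, (test, _) in tests.items():
        try:
            test()
        except AssertionError:
            failures.append(name)
    return failures


retest = {n: t for n, t in suite.items() if t[1]}  # re-testing
regression = {n: t for n, t in suite.items() if not t[1]}  # regression testing

print("re-test failures:", run(retest))
print("regression failures:", run(regression))
```

In practice regression suites are often automated precisely because they are rerun after every change.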

3. Static Techniques

3.1. Review Process

3.1.1. Formal Review

3.1.1.1. Activities

3.1.1.1.1. Planning

3.1.1.1.2. Kick-off

3.1.1.1.3. Individual preparation

3.1.1.1.4. Examination/evaluation/recording of results

3.1.1.1.5. Rework

3.1.1.1.6. Follow-up

3.1.2. Informal Review

3.1.2.1. No formal process

3.1.2.2. May take the form of pair programming or a technical lead reviewing designs and code

3.1.2.3. Results may be documented

3.1.2.4. Varies in usefulness depending on the reviewers

3.1.2.5. Main purpose: inexpensive way to get some benefit

3.1.3. Walkthrough

3.1.3.1. meeting led by author

3.1.3.2. may take the form of scenarios, dry runs, peer group participation

3.1.3.3. Open-ended sessions

3.1.3.3.1. optional pre-meeting preparation of reviewers

3.1.3.3.2. Optional preparation of a review report including list of findings.

3.1.3.4. Optional scribe

3.1.3.5. May vary in practice from quite informal to very formal

3.1.3.6. Main purposes: learning, gaining understanding, finding defects.

3.1.4. Technical Review

3.1.4.1. Documented, defined defect-detection process that includes peers and technical experts, with optional management participation

3.1.4.2. May be performed as a peer review without management participation

3.1.4.3. Ideally led by trained moderator

3.1.4.4. Pre-meeting preparation by reviewers

3.1.4.5. Optional use of checklists

3.1.4.6. Preparation of a review report that includes the list of findings, the verdict on whether the software product meets its requirements and, where appropriate, recommendations related to the findings

3.1.4.7. may vary in practice from quite informal to very formal

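3.1.4.8. Main purposes: discussing, making decisions, evaluating alternatives, finding defects, solving technical problems and checking conformance to specifications, plans, regulations and standards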

3.1.5. Inspection

3.1.6. Roles and Responsibilities

3.1.6.1. Manager

3.1.6.1.1. decides on the execution of reviews

3.1.6.1.2. allocates time in project schedules

3.1.6.1.3. determines if the review objectives have been met.

3.1.6.2. Moderator

3.1.6.2.1. Leads the review of the document

3.1.6.2.2. Plans the review

3.1.6.2.3. Runs the meeting

3.1.6.2.4. Follows up after the meeting

3.1.6.3. Author

3.1.6.3.1. The writer or person with chief responsibility for the document(s) to be reviewed.

3.1.6.4. Reviewers

3.1.6.4.1. identify and describe defects

3.1.6.5. Scribe

3.1.6.5.1. Documents all the issues, problems and open points that were identified during the meeting.