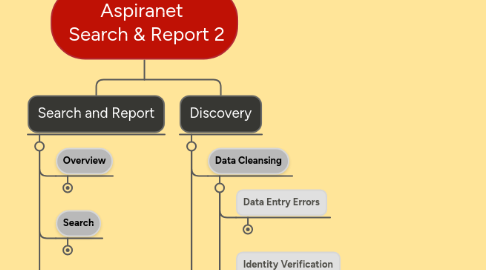

1. Search and Report

1.1. Overview

1.1.1. Our efforts to meet the short-term goals for Phase 1 of this project can be divided into two sections: "Search", the back-end engine responsible for searching all the data and retrieving all matches relevant to the user's requests, and "Views & Reports", the front-end interface, which can also generate reports and perform basic analytics on the search results.

1.2. Search

1.2.1. Introduction

1.2.1.1. This component represents the back-end functionality of the application, namely "Search". Below, we compare the search requirements of the Internet with those of the Enterprise and explain why GSA (Google Search Appliance) was chosen as the search platform.

1.2.1.2. When we refer to "unstructured vs. structured" data below, we are making a distinction between "free-form, written, language documents and files vs. precisely and rigidly defined data containers such as spreadsheets, grids, database tables, etc."

1.2.2. Two Different Search Environments

1.2.2.1. Internet Search (unstructured)

1.2.2.1.1. Google = Internet Search

1.2.2.1.2. Crawls and Indexes Trillions of Web Pages

1.2.2.1.3. Experts at Free-form, Natural Language-based Documents

1.2.2.2. Enterprise Search (structured and/or unstructured)

1.2.2.2.1. Introduction

1.2.2.2.2. Both Structured & Unstructured Data

1.2.2.2.3. High-level Security

1.2.2.2.4. Privacy & Confidentiality

1.2.2.2.5. Dozens of File and Database Formats

1.2.2.2.6. Extract Data from Images/Graphics

1.2.2.2.7. Multi-lingual

1.2.3. The Ultimate Search Solution: GSA (Google Search Appliance)

1.2.3.1. Introduction

1.2.3.1.1. To serve the Enterprise market, Google needed to address all the challenges and issues described above. The result is a hardware/software solution called GSA, often referred to as "Google in a box". Below is a brief discussion of how Google chose to answer the various challenges:

1.2.3.2. Interfaces to almost all current-day databases as well as popular applications such as SharePoint

1.2.3.2.1. Recognizing the need to interface with a multitude of databases, protocols, and applications, Google designed GSA to use different software "connectors" to communicate with the existing systems on the market.

1.2.3.3. Can be deployed inside or outside the client's network, depending upon security constraints.

1.2.3.3.1. Because of the high security requirements of many organizations and agencies, GSA was created as a hardware and software solution that can be installed on either side of the client's firewall, depending on their situation and requirements. This allows great flexibility in such a security-conscious marketplace.

1.2.3.4. Controls the exposure and visibility of data at all levels, according to the user's roles and permissions (from individual table cells to entire databases)

1.2.3.4.1. To accommodate the idiosyncrasies and nuances involved in determining who can see what data and under what circumstances, GSA provides an extremely flexible system in which roles and permissions can be configured at every level of the data, from an individual cell (a particular row and column of a table) to the entire database.

1.2.3.5. Indexes and searches over 220 file formats, including text within images

1.2.3.5.1. To be effective and comprehensive as a general-purpose search engine for an organization's data, GSA has to be able to open, read, index, and ultimately search over 220 different file formats.

1.2.3.6. Operates in over 40 languages

1.2.3.6.1. When it comes to language translation, Google is second to none, so it comes as no surprise that GSA offers search in over 40 languages.

1.2.3.7. Has no inherent limitation on its scope, so that a single search can span across the multitude of data "silos" that have become all too common in large organizations

1.2.3.7.1. Using traditional SQL queries to search an organization's data can be daunting when it means integrating different data sources from different locations, each with its own restrictions and requirements. More often than not, the organization settles for searching only within the domain it controls. Because GSA's paradigm is so different, it becomes much easier to work across distributed, heterogeneous data networks, which sometimes makes all the difference.

1.2.3.8. Relies strictly on Google Partners like Stewards of Change to create and deploy a software interface to GSA so that users can input search words and get back formatted, easy-to-understand results.

1.2.3.8.1. GSA, however, is not a complete turnkey solution for an organization since it purposefully does not include a customized front-end interface directly, but rather works through its partners, like Stewards of Change, who can configure and customize any type of interface that the client needs.

1.2.4. How can we use this technology at Aspiranet?

1.2.4.1. GSA's ability to instantly and accurately search and retrieve both documents/files and database records makes it a great match for the "Options Database" as well as for the enormous repository of files on the "P Drive", which is not currently being used for anything. Lastly, it can be connected to the Aspiranet.org website to offer an even higher level of search functionality. In fact, a demo GSA unit is currently doing just that, and you are free to explore it.

1.3. Views & Reports

1.3.1. Introduction

1.3.1.1. This component represents the front-end functionality of this program, namely "Views and Reports". In addition to being the interface to the program, it also generates reports and layouts as well as basic data analytics on the "Search" results.

1.3.2. Step 1: Unify the Data Silos

1.3.2.1. Use SQL to join and union the related yet separate data sets and tables into as extensive and comprehensive a record as possible, which GSA can then "crawl and index". That way, when GSA finds a matching data record, it automatically returns all the relevant and related information for that record as well.

1.3.2.1.1. "Crawling" is the process by which Google and other search engines follow all the links/URLs they can find in order to build an extensive "Index", like the one at the back of a textbook, that records the exact locations of all the important terms.
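To make the textbook-index analogy concrete, here is a minimal sketch of an inverted index in Python. It is an illustration of the general idea only, not a description of how GSA is implemented; the document IDs, sample text, and function names are hypothetical.

```python
import re
from collections import defaultdict


def build_index(documents):
    """Map each lowercase term to the set of document IDs that contain it."""
    index = defaultdict(set)
    for doc_id, text in documents.items():
        for term in re.findall(r"[a-z0-9]+", text.lower()):
            index[term].add(doc_id)
    return index


def search(index, query):
    """Return the IDs of documents that contain every term in the query."""
    terms = re.findall(r"[a-z0-9]+", query.lower())
    if not terms:
        return set()
    results = set(index.get(terms[0], set()))
    for term in terms[1:]:
        results &= index.get(term, set())
    return results


# Hypothetical documents standing in for crawled pages, files, or records.
documents = {
    "doc1": "Foster care referral form",
    "doc2": "Training schedule for foster parents",
    "doc3": "Referral activity report",
}
index = build_index(documents)
print(sorted(search(index, "foster")))
```

Because the index is built once, ahead of time, each search only has to look up the query terms rather than re-read every document.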

1.3.2.2. If necessary, also incorporate a "fuzzy" matching or neural-net-like capability at the table-cell and/or record-comparison level, for cases where key identifying data is missing or inconsistent across data systems and records that refer to the same "identity" cannot otherwise be matched up.
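As one possible illustration of fuzzy record matching, the sketch below scores string similarity with Python's standard `difflib.SequenceMatcher`. The field names, the sample records, and the 0.85 threshold are assumptions chosen for the example; a production approach would need tuning against real data and might use a different technique entirely.

```python
from difflib import SequenceMatcher


def normalize(value):
    """Lowercase and collapse whitespace so formatting differences are ignored."""
    return " ".join(value.lower().split())


def similarity(a, b):
    """Return a similarity ratio between 0.0 and 1.0 for two strings."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()


def is_probable_match(record_a, record_b, fields=("name", "address"), threshold=0.85):
    """Compare only the fields present in both records and average their scores."""
    scores = [
        similarity(record_a[f], record_b[f])
        for f in fields
        if record_a.get(f) and record_b.get(f)
    ]
    if not scores:
        # Nothing comparable: do not claim a match on missing data.
        return False
    return sum(scores) / len(scores) >= threshold


# Hypothetical records from two different systems.
a = {"name": "Jonathan Smith", "address": "12 Main Street"}
b = {"name": "Jonathon Smith", "address": "12 Main Street"}
c = {"name": "Maria Lopez", "address": "98 Oak Avenue"}
print(is_probable_match(a, b), is_probable_match(a, c))
```

Skipping fields that are missing from either record keeps absent data from counting for or against a match, which is the situation this step is meant to handle.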

1.3.3. Step 2: Slice and Dice the Data

1.3.3.1. After unifying the data as best we can, we can use software tools such as our own "DataLab" to filter any of the SQL views discussed above down to just those records that interest us at the time. Alternatively, we can use GSA to search and retrieve all the relevant records, and then run our filters on those results instead.

1.3.3.2. DataLab can then instantly organize and sort the filtered data into expandable and collapsible groups, with all their corresponding subtotals, totals, and statistics automatically tabulated and available.
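The filter-then-group workflow in this step can be sketched in a few lines of Python. This is not DataLab's implementation, only an illustration of the idea; the records, field names, and helper functions are hypothetical.

```python
from collections import defaultdict


def filter_records(records, **criteria):
    """Keep only the records whose fields equal every given criterion."""
    return [r for r in records if all(r.get(k) == v for k, v in criteria.items())]


def group_with_subtotals(records, group_field, value_field):
    """Group records by one field and tabulate count, subtotal, and average."""
    groups = defaultdict(list)
    for record in records:
        groups[record[group_field]].append(record[value_field])
    summary = {
        key: {
            "count": len(values),
            "subtotal": sum(values),
            "average": sum(values) / len(values),
        }
        for key, values in sorted(groups.items())
    }
    grand_total = sum(group["subtotal"] for group in summary.values())
    return summary, grand_total


# Hypothetical activity records.
records = [
    {"region": "North", "program": "Training", "hours": 4},
    {"region": "North", "program": "Training", "hours": 6},
    {"region": "South", "program": "Training", "hours": 5},
    {"region": "South", "program": "Referral", "hours": 2},
]
training = filter_records(records, program="Training")
summary, grand_total = group_with_subtotals(training, "region", "hours")
print(summary, grand_total)
```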

1.3.4. Step 3: Perform Basic Analytics

1.3.4.1. Using a simple drag-and-drop interface, we can then take any subset of properties (name, address, ethnicity, etc.) and compare it with any other subset in order to identify trends, patterns, or suspicious data.
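One simple form of this comparison is a cross-tabulation: counting how often each combination of two properties occurs and flagging the combinations that are unusually rare. The sketch below assumes hypothetical `city` and `status` fields and an arbitrary rarity cutoff; it is meant only to show the kind of analysis involved.

```python
from collections import Counter


def cross_tabulate(records, row_field, column_field):
    """Count how often each (row value, column value) pair occurs."""
    return Counter((r[row_field], r[column_field]) for r in records)


def flag_rare_combinations(table, max_count=1):
    """Return the pairs seen at most max_count times, as candidates for review."""
    return sorted(pair for pair, count in table.items() if count <= max_count)


# Hypothetical records.
records = [
    {"city": "Fresno", "status": "Active"},
    {"city": "Fresno", "status": "Active"},
    {"city": "Fresno", "status": "Closed"},
    {"city": "Oakland", "status": "Active"},
    {"city": "Oakland", "status": "Active"},
]
table = cross_tabulate(records, "city", "status")
print(flag_rare_combinations(table))
```

A rare combination is not necessarily an error; it is simply a place worth a closer look.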

1.3.5. Step 4: Visualize Data Using 2D and 3D Charts

1.3.5.1. A variety of charts and graphs have been very tightly integrated with the spreadsheet-like, tabular data and analytics so that while we're selecting the data we are interested in, the chart representation of that data is simultaneously generated and displayed dynamically, all in real time

1.3.6. Step 5: Export the Data / Analytics into Reports

1.3.6.1. A simple right-click on any of the tables, grids, or charts allows us to save them as independent files in various formats, which we can then combine into one or more reports

2. Discovery

2.1. Data Cleansing

2.1.1. Data Entry Errors

2.1.1.1. Typos, misspellings, misinformation, and a lack of coordination and integration, all of which lead to duplication and inconsistencies ...

2.1.2. Identity Verification

2.1.2.1. Huge, $16 billion industry centered around undoing the damage of centralized databases disintegrating into independent silos

2.1.3. Data Validation Tools and Solutions

2.1.3.1. Address Check

2.1.3.2. Email Validation

2.1.3.3. First and Last Name Lookups
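As a rough illustration of what record-level validation can look like, the sketch below checks that name fields are present and that an email address is syntactically plausible. The field names and the regular expression are assumptions for the example. A syntax check cannot confirm that an address or mailbox actually exists; real address checks and name lookups depend on external reference data that is not shown here.

```python
import re

# Deliberately simple pattern: something@label.label (assumption, not a full RFC check).
EMAIL_PATTERN = re.compile(r"^[A-Za-z0-9._%+-]+@[A-Za-z0-9-]+(\.[A-Za-z0-9-]+)+$")


def is_plausible_email(value):
    """Return True if the value looks like an email address."""
    return bool(EMAIL_PATTERN.match(value.strip()))


def validate_record(record):
    """Return a list of human-readable problems found in one record."""
    problems = []
    if not record.get("first_name", "").strip():
        problems.append("missing first name")
    if not record.get("last_name", "").strip():
        problems.append("missing last name")
    if not is_plausible_email(record.get("email", "")):
        problems.append("invalid email")
    return problems


# Hypothetical record with two problems.
record = {"first_name": "Jane", "last_name": "", "email": "jane@@example.org"}
print(validate_record(record))
```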

2.1.4. Data Standardization

2.1.4.1. Procedures and policies need to be put in place to ensure sustainability; otherwise, data quality will deteriorate over time until the data again fragments into independent silos.

2.1.4.2. If standardization is not implemented, efforts in identity matching and verification would very likely be jeopardized.

2.1.5. Where do we stand?

2.1.5.1. We have started to look into the range of errors and their corresponding proposed solutions, as outlined above.

2.2. Search www.aspiranet.org using GSA

2.2.1. We have configured and deployed a demo GSA unit to point to the company website and to continuously crawl and index it. We are running different tests on it to see how successful it is and then adjusting its configuration accordingly, if needed. Feel free to experiment with it and compare the results to the site's current search box.

2.3. Create New Views of the Data

2.3.1. Combine data from various tables into one consolidated view, including outside data (from different organizations, Open Data, etc.)

2.3.2. Where do we stand?

2.3.2.1. Joe has created four major views of the Options database that span the different facets of the data's meaning and the stories the data is trying to convey. While there are still other views that can be generated, this is an excellent start and represents a broad, well-balanced perspective of the most common and important activities for the organization: Clients, Referrals, Activities, and Training.
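As an illustration of what a consolidated view can look like, the sketch below uses Python's built-in `sqlite3` module to join and union three small tables, one of them standing in for outside data, into a single view. The table names, columns, and sample rows are hypothetical and do not reflect the actual Options database schema or the views already created.

```python
import sqlite3

# In-memory database with hypothetical tables and sample rows.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE clients (client_id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE referrals (referral_id INTEGER PRIMARY KEY, client_id INTEGER, agency TEXT);
    CREATE TABLE external_referrals (client_id INTEGER, agency TEXT);

    INSERT INTO clients VALUES (1, 'Alice Example'), (2, 'Bob Example');
    INSERT INTO referrals VALUES (10, 1, 'County Office');
    INSERT INTO external_referrals VALUES (2, 'Partner Agency');

    -- Join each referral source to its client, then union the two sources.
    CREATE VIEW client_referrals AS
        SELECT c.client_id, c.name, r.agency, 'internal' AS source
        FROM clients AS c
        JOIN referrals AS r ON r.client_id = c.client_id
        UNION ALL
        SELECT c.client_id, c.name, e.agency, 'external' AS source
        FROM clients AS c
        JOIN external_referrals AS e ON e.client_id = c.client_id;
""")

rows = conn.execute(
    "SELECT client_id, name, agency, source FROM client_referrals ORDER BY client_id"
).fetchall()
for row in rows:
    print(row)
```

The `source` column records where each row came from, which helps when internal and outside data disagree.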

2.4. Slice and Dice the Views

2.4.1. Utilize various data manipulation, visualization, and statistical tools to explore the data, looking for patterns, trends, and anomalies

2.4.2. Where do we stand?

2.4.2.1. We have been leveraging various data processing tools and modules that we have written over the past 2 years to quickly and easily learn about the state and nature of the data as well as the information and knowledge that it offers us about the organization and its industry.

2.4.2.2. Which tool(s) Aspiranet ends up using will depend on many factors. What is less in doubt is that an intuitive, simple-to-use search and ad hoc reporting and analysis tool will empower many of Aspiranet's employees to be substantially more self-sufficient, give them access to a potentially game-changing level of data analysis, and improve the organization's efficiency, and therefore its labor savings.

2.4.2.3. This exploration has proven very helpful in organizing our thoughts around the present-day architecture and how to transition to a next-generation platform that we expect to be transformative. The lightbulb was not invented by improving the candle: such a transition requires a paradigm shift in thinking that starts with a comprehensive understanding and appreciation of the current schema within the context of where we want to be (sooner rather than later).