1. Answers

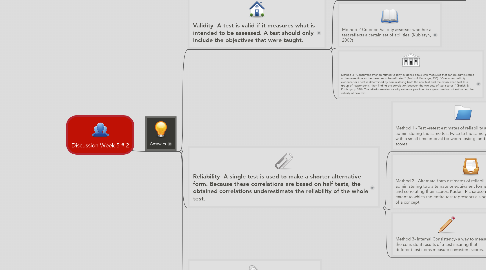

1.1. Validity - A test is valid if it measures what it is intended to assess. A test should include only the objectives that were taught.

1.1.1. Method 1 - Internal validity is a measure that ensures the experimental design closely follows the principle of cause and effect. Test validity determines what meaning can be placed on the test results.

1.1.2. Method 2 - Criterion validity assesses whether a test reflects a certain set of abilities (Kubiszyn, 2009).

1.1.3. Method 3 - “Concurrent criterion-related validity evidence deals with measures that can be administered at the same time as the measure to be validated” (Borich & Kubiszyn, p. 330). “Concurrent validity evidence for a test is determined by administering both the new test and the established test to a group of respondents, then finding the correlation between the two sets of test scores” (Borich & Kubiszyn, p. 330). Criterion-related validity evidence provides a good method of estimating the validity of a new test.
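The correlation step described in the quotation can be sketched in a few lines. This is only an illustration: it assumes the Pearson correlation is the coefficient used, and the function name `pearson_r` and the score lists are hypothetical, made up for the example.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length score lists with at least 2 entries")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products and sums of squared deviations from the means.
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("scores must vary for a correlation to be defined")
    return cov / sqrt(var_x * var_y)

# Hypothetical scores for the same five respondents (invented for illustration).
new_test = [12, 15, 9, 20, 17]
established_test = [14, 16, 10, 19, 18]

validity_coefficient = pearson_r(new_test, established_test)
print(round(validity_coefficient, 2))
```

A coefficient close to 1 would suggest that the new test ranks respondents much as the established test does.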

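1.2. Reliability - Reliability refers to the consistency of test scores. In the split-half approach, a single test is divided into two shorter halves that are treated as alternate forms, and scores on the two halves are correlated. Because these correlations are based on half tests, they underestimate the reliability of the whole test.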
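A common way to adjust for this underestimate is the Spearman-Brown formula, which projects a half-test correlation to the full test length. The notes do not name this formula, so treat the sketch below as one standard option rather than the method the source intends; the function name `spearman_brown` is hypothetical.

```python
def spearman_brown(r_half):
    """Estimate whole-test reliability from the correlation between two half tests.

    Uses the Spearman-Brown formula for a test of doubled length:
    r_full = 2 * r_half / (1 + r_half)
    """
    if not -1.0 < r_half <= 1.0:
        raise ValueError("r_half must be greater than -1 and at most 1")
    return 2 * r_half / (1 + r_half)

# Hypothetical half-test correlation, chosen only for illustration.
r_half = 0.6
r_full = spearman_brown(r_half)
print(r_full)
```

With a half-test correlation of 0.6, the projected whole-test reliability is 0.75, which shows how much the uncorrected value understates it.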

1.2.1. Method 1 - Test-retest estimates of reliability are obtained by administering the same test twice to the same group of individuals, with a small time interval between testings, and correlating the two sets of scores.

1.2.2. Method 2 - Alternate-form estimates of reliability are obtained by administering two alternate or equivalent forms of a test to the same group and correlating their scores. Kuder-Richardson methods determine the extent to which the entire test represents a single, fairly consistent measure of a concept.
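As a rough illustration of a Kuder-Richardson estimate, the sketch below computes KR-20 for items scored right (1) or wrong (0). It assumes the population variance of total scores (dividing by the number of respondents); some textbooks use the sample variance instead, which changes the result slightly. The function name `kr20` and the response data are hypothetical.

```python
def kr20(responses):
    """KR-20 reliability for dichotomous (0/1) item scores.

    responses: list of rows, one per respondent, each a list of 0/1 item scores.
    Assumes population variance (divide by n) for the total scores.
    """
    n = len(responses)
    if n < 2:
        raise ValueError("need at least 2 respondents")
    k = len(responses[0])
    if k < 2 or any(len(row) != k for row in responses):
        raise ValueError("every respondent needs the same number (>= 2) of items")

    # Variance of respondents' total scores.
    totals = [sum(row) for row in responses]
    mean_total = sum(totals) / n
    var_total = sum((t - mean_total) ** 2 for t in totals) / n
    if var_total == 0:
        raise ValueError("total scores must vary")

    # Sum over items of p * q, where p is the proportion answering correctly.
    sum_pq = 0.0
    for j in range(k):
        p = sum(row[j] for row in responses) / n
        sum_pq += p * (1 - p)

    return (k / (k - 1)) * (1 - sum_pq / var_total)

# Hypothetical responses: five respondents, four items (invented for illustration).
data = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]
print(round(kr20(data), 2))
```

Higher values indicate that the items behave more like a single, consistent measure.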

1.2.3. Method 3 - Internal consistency is a way to measure how consistently the items within a single test perform, ensuring that different test items yield consistent scores.