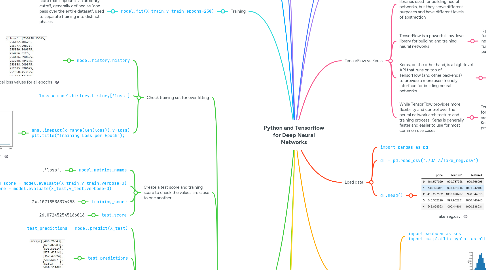

1. Test/Train split

1.1. Convert Pandas to Numpy for Keras

1.1.1. Features

1.1.1.1. X = df[['feature1','feature2']].values

1.1.2. Label

1.1.2.1. y = df['price'].values

1.1.3. Split

1.1.3.1. X_train, X_test, y_train, y_test = train_test_split(X,y,test_size=0.3,random_state=42)

1.2. from sklearn.model_selection import train_test_split (run this import before calling train_test_split in 1.1.3)

1.3. Check the shapes of the features and labels split into training and test sets

1.3.1. X_train.shape

1.3.2. X_test.shape

1.3.3. y_train.shape

1.3.4. y_test.shape
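To make the expected shapes concrete, here is a minimal NumPy sketch of what a shuffled 70/30 split does. It is not scikit-learn's implementation; the data are synthetic, and the 1000-row size is an assumption inferred from the 300 test rows used later in these notes.

```python
import numpy as np

# Synthetic stand-in for the real data: 1000 rows, 2 features (values are made up).
rng = np.random.default_rng(42)
X = rng.uniform(995, 1005, size=(1000, 2))
y = rng.uniform(200, 800, size=1000)

# Shuffle the row indices once, then cut them into 30% test and 70% train.
indices = rng.permutation(len(X))
n_test = int(round(0.3 * len(X)))
test_idx, train_idx = indices[:n_test], indices[n_test:]

# Index features and labels with the same indices so rows stay aligned.
X_train, X_test = X[train_idx], X[test_idx]
y_train, y_test = y[train_idx], y[test_idx]

print(X_train.shape, X_test.shape, y_train.shape, y_test.shape)
```

The features keep their two columns, the labels stay 1-D, and no row appears in both sets.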

2. Normalize/Scale data

2.1. We only need to scale the feature data (i.e. make all the numeric values fall between 0 and 1)

2.2. from sklearn.preprocessing import MinMaxScaler

2.2.1. You can get help on MinMaxScaler

2.2.1.1. help(MinMaxScaler)

2.3. Create the scaler and fit it to the training features only, so that no information from the test set leaks into training; the transformed training values then all lie between 0 and 1

2.3.1. scaler = MinMaxScaler()

2.3.2. scaler.fit(X_train)

2.3.3. X_train = scaler.transform(X_train); X_test = scaler.transform(X_test)

2.4. Verify that feature data is now normalized for both training and test sets

2.4.1. X_train

2.4.1.1. Normalized values

2.4.2. X_test

2.4.2.1. Normalized values
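The scaling step can be reproduced by hand to see what fit and transform each do. The sketch below applies the min-max formula (x - min) / (max - min) with NumPy; the feature values and the helper name `min_max_transform` are made up for illustration.

```python
import numpy as np

# Made-up training and test features (2 columns), for illustration only.
X_train = np.array([[995.0, 998.0], [1000.0, 1002.0], [1005.0, 1000.0]])
X_test = np.array([[997.0, 1003.0], [1006.0, 999.0]])

# "fit": learn the per-column minimum and maximum from the training set only.
col_min = X_train.min(axis=0)
col_max = X_train.max(axis=0)

def min_max_transform(X):
    """Apply (x - min) / (max - min) using the training-set statistics."""
    return (X - col_min) / (col_max - col_min)

# "transform": the same statistics are applied to both sets.
X_train_scaled = min_max_transform(X_train)
X_test_scaled = min_max_transform(X_test)

print(X_train_scaled.min(axis=0), X_train_scaled.max(axis=0))
print(X_test_scaled)
```

Because the minimum and maximum come from the training set, a test value outside the training range lands slightly below 0 or above 1. That is expected and is not a sign of a mistake.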

3. Create model

3.1. from tensorflow.keras.models import Sequential; from tensorflow.keras.layers import Dense, Activation

3.2. model = Sequential(); model.add(Dense(4,activation='relu')); model.add(Dense(4,activation='relu')); model.add(Dense(4,activation='relu'))

3.2.1. This creates a feedforward, fully connected neural network with 3 hidden layers of 4 neurons each, using the rectified linear unit (ReLU) as the activation function

3.3. model.add(Dense(1))

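3.3.1. This adds the final output layer of a single neuron (no activation function is needed because this is a regression problem, so the output should be an unbounded continuous value; a classification output layer would use sigmoid or softmax)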
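To show what this architecture computes, here is a rough NumPy sketch of one forward pass through three ReLU hidden layers of 4 neurons and a linear single-neuron output. It assumes 2 input features (as in the feature selection above) and uses random weights in place of trained ones; it is not how Keras implements the layers internally.

```python
import numpy as np

rng = np.random.default_rng(0)

def relu(z):
    """Rectified linear unit: keep positive values, zero out the rest."""
    return np.maximum(z, 0.0)

# Layer sizes: 2 inputs -> 4 -> 4 -> 4 -> 1 output.
layer_sizes = [2, 4, 4, 4, 1]

# Random weights and zero biases stand in for trained parameters.
weights = [rng.normal(size=(n_in, n_out))
           for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])]
biases = [np.zeros(n_out) for n_out in layer_sizes[1:]]

def forward(X):
    """Hidden layers apply ReLU; the output layer is left linear."""
    a = X
    for W, b in zip(weights[:-1], biases[:-1]):
        a = relu(a @ W + b)
    return a @ weights[-1] + biases[-1]

X_batch = rng.uniform(0, 1, size=(5, 2))   # 5 made-up, already scaled samples
predictions = forward(X_batch)
n_params = sum(W.size + b.size for W, b in zip(weights, biases))

print(predictions.shape, n_params)
```

With 2 inputs the network has 12 + 20 + 20 + 5 = 57 trainable parameters, and a batch of 5 samples yields a (5, 1) array of predictions.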

3.4. model.compile(optimizer='rmsprop',loss='mse')

3.4.1. When choosing an optimizer and a loss, keep in mind what kind of problem you are trying to solve

3.4.1.1. For a multi-class classification problem: model.compile(optimizer='rmsprop', loss='categorical_crossentropy', metrics=['accuracy'])

3.4.1.2. For a binary classification problem: model.compile(optimizer='rmsprop', loss='binary_crossentropy', metrics=['accuracy'])

3.4.1.3. For a mean squared error regression problem: model.compile(optimizer='rmsprop', loss='mse')
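The three loss names above correspond to simple formulas. The following NumPy sketch writes them out on tiny made-up inputs; the function names are chosen for illustration, and library implementations typically add numerical safeguards (such as clipping probabilities away from 0 and 1) that are omitted here.

```python
import numpy as np

def mse(y_true, y_pred):
    """Mean squared error: average of squared differences (regression)."""
    return np.mean((y_true - y_pred) ** 2)

def binary_crossentropy(y_true, p):
    """Average negative log-likelihood for 0/1 labels and predicted P(class 1)."""
    return np.mean(-(y_true * np.log(p) + (1 - y_true) * np.log(1 - p)))

def categorical_crossentropy(y_onehot, probs):
    """Average negative log of the probability assigned to the true class."""
    return np.mean(-np.sum(y_onehot * np.log(probs), axis=1))

# Made-up examples for each problem type.
reg_loss = mse(np.array([1.0, 2.0, 3.0]), np.array([1.0, 2.0, 5.0]))
bin_loss = binary_crossentropy(np.array([1.0, 0.0]), np.array([0.9, 0.1]))
cat_loss = categorical_crossentropy(np.array([[0.0, 1.0, 0.0]]),
                                    np.array([[0.1, 0.8, 0.1]]))

print(reg_loss, bin_loss, cat_loss)
```

The regression loss depends on how far off the number is, while the two cross-entropy losses depend on how much probability the model put on the correct class.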

4. Training

4.1. model.fit(X_train,y_train,epochs=250)

4.1.1. Note that 1 epoch is an arbitrary cutoff, generally defined as "one pass over the entire dataset", used to separate training into distinct phases
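To illustrate what "one pass over the entire dataset" means, here is a small mini-batch gradient descent loop for a linear model in NumPy. It is a sketch under simplifying assumptions (made-up noise-free data, plain gradient descent rather than RMSprop, a linear model instead of the network above), not what `model.fit` does internally.

```python
import numpy as np

rng = np.random.default_rng(0)

# Made-up regression data: 200 samples, 2 features already scaled to [0, 1].
X = rng.uniform(0, 1, size=(200, 2))
y = 3.0 * X[:, 0] - 2.0 * X[:, 1] + 1.0

w = np.zeros(2)
b = 0.0
learning_rate = 0.1
batch_size = 32
epochs = 50

loss_history = []
for epoch in range(epochs):
    order = rng.permutation(len(X))               # reshuffle the rows each epoch
    for start in range(0, len(X), batch_size):    # one epoch = every row seen once
        batch = order[start:start + batch_size]
        error = X[batch] @ w + b - y[batch]
        # Gradient of the batch MSE with respect to w and b.
        w -= learning_rate * 2 * X[batch].T @ error / len(batch)
        b -= learning_rate * 2 * error.mean()
    # Record the loss on the full dataset once per epoch.
    loss_history.append(float(np.mean((X @ w + b - y) ** 2)))

print(loss_history[0], loss_history[-1])
```

Each epoch performs several weight updates (one per batch), and the per-epoch loss list plays the same role as the loss history recorded during training.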

5. Evaluation

5.1. Check the training loss for convergence (spotting overfitting also requires a validation or test loss to compare against)

5.1.1. model.history.history

5.1.1.1. Model loss values for all epochs

5.1.2. loss = model.history.history['loss']

5.1.3. sns.lineplot(x=range(len(loss)),y=loss); plt.title("Training Loss per Epoch")

5.1.3.1. Training losses per epoch

5.1.3.1.1. We can see that the loss stops improving noticeably somewhere between 60 and 70 epochs
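Reading the plateau off a plot can also be done programmatically. The sketch below is one possible heuristic, not a standard API: the function name `plateau_epoch`, its thresholds, and the synthetic loss curve are all assumptions made for illustration.

```python
import math

def plateau_epoch(losses, rel_tol=0.01, patience=5):
    """Return the first epoch from which the loss improves by less than
    rel_tol (relative to the previous epoch) for `patience` consecutive
    epochs, or None if that never happens. Assumes positive loss values."""
    run = 0
    for epoch in range(1, len(losses)):
        improvement = (losses[epoch - 1] - losses[epoch]) / losses[epoch - 1]
        run = run + 1 if improvement < rel_tol else 0
        if run == patience:
            return epoch - patience + 1
    return None

# Made-up loss curve: exponential decay towards a floor of 20.
losses = [20.0 + 1000.0 * math.exp(-epoch / 10) for epoch in range(250)]

print(plateau_epoch(losses))
```

With a real run, `losses` would be the list taken from the training history. A plateau in the training loss suggests that further epochs add little, but it says nothing about overfitting on its own.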

5.2. Create a test score and training score to check the values are close to one another

5.2.1. model.metrics_names

5.2.1.1. ['loss']

5.2.2. training_score = model.evaluate(X_train,y_train,verbose=0); test_score = model.evaluate(X_test,y_test,verbose=0)

5.2.3. training_score

5.2.3.1. 24.10718536376953

5.2.4. test_score

5.2.4.1. 26.072452545166016
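Because the model was compiled with loss='mse', both scores are mean squared errors, so their unit is squared dollars. Taking the square root gives a root mean squared error in dollars, which is easier to interpret. A short sketch using the two values shown above:

```python
import math

training_score = 24.10718536376953   # MSE on the training set (value shown above)
test_score = 26.072452545166016      # MSE on the test set (value shown above)

# RMSE is in the same unit as the label (dollars).
training_rmse = math.sqrt(training_score)
test_rmse = math.sqrt(test_score)

# Relative gap between test and training loss; a small gap suggests little overfitting.
relative_gap = (test_score - training_score) / training_score

print(f"train RMSE: {training_rmse:.2f}, test RMSE: {test_rmse:.2f}, gap: {relative_gap:.1%}")
```

Both errors come out at roughly 5 dollars, and the test loss is only about 8% higher than the training loss.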

5.3. Feed test features into trained model and compare results to test labels (note: label is a price in dollars in this example)

5.3.1. test_predictions = model.predict(X_test)

5.3.2. test_predictions

5.3.2.1. test prediction array

5.3.3. pred_df = pd.DataFrame(y_test,columns=['Test Y'])

5.3.4. pred_df

5.3.4.1. test label dataframe

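5.3.5. test_predictions = pd.Series(test_predictions.reshape(-1))

5.3.5.1. Flattens the test predictions from shape (300, 1) to a 1-D series so we can concatenate them to the test label dataframe (i.e. to have the labels and predictions side by side in a dataframe); reshape(-1) works for any number of test rows, unlike a hard-coded reshape(300,)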

5.3.6. test_predictions

5.3.6.1. test prediction series

5.3.7. pred_df = pd.concat([pred_df,test_predictions],axis=1)

5.3.7.1. Combines the test labels (actual test prices in dollars) and the test predictions (prices in dollars that the model predicted)

5.3.8. pred_df.columns = ['Test Y','Model Predictions']

5.3.8.1. Sets column headings

5.3.9. pred_df

5.3.9.1. predictions result dataframe

5.3.10. sns.scatterplot(x='Test Y',y='Model Predictions',data=pred_df)

5.3.10.1. Scatterplot of predictions

5.3.10.1.1. The points fall on a near-perfect straight line, which indicates that the predictions track the actual prices very closely
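The reshape step matters for more than building the dataframe. The predictions come back as a column of shape (n, 1) while the labels are 1-D, and subtracting them directly broadcasts into an n-by-n matrix. The sketch below shows the pitfall and computes two error summaries in dollars; the data are synthetic stand-ins for `y_test` and the model output.

```python
import numpy as np

rng = np.random.default_rng(1)

# Synthetic stand-ins: true prices, and predictions shaped (300, 1) like model output.
y_test = rng.uniform(200, 800, size=300)
test_predictions = (y_test + rng.normal(0, 5, size=300)).reshape(-1, 1)

# Pitfall: (300,) minus (300, 1) broadcasts to a (300, 300) matrix.
wrong = y_test - test_predictions

# Flatten first so that each label is compared with its own prediction.
flat_predictions = test_predictions.reshape(-1)
errors = y_test - flat_predictions

mae = np.mean(np.abs(errors))          # mean absolute error, in dollars
rmse = np.sqrt(np.mean(errors ** 2))   # root mean squared error, in dollars

print(wrong.shape, errors.shape, mae, rmse)
```

A single error figure in dollars complements the scatterplot, which shows the overall fit but not its size.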

6. Predicting on brand new data

6.1. new_gem = [[998,1000]]  # [[Feature1, Feature2]]

6.2. scaler.transform(new_gem)  # Don't forget to scale!

6.2.1. array([[0.14117652, 0.53968792]])

6.3. new_gem = scaler.transform(new_gem)

6.4. model.predict(new_gem)

6.4.1. array([[420.40567]], dtype=float32)

6.4.1.1. Given feature 1 = 998 and feature 2 = 1000, the model has predicted a price (i.e. the label) of approximately 420 dollars

7. Saving and loading a model

7.1. from tensorflow.keras.models import load_model

7.2. model.save('my_model.h5')

7.2.1. Creates an HDF5 file 'my_model.h5'

7.3. later_model = load_model('my_model.h5')

7.4. later_model.predict(new_gem)

7.4.1. array([[420.40567]], dtype=float32)
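The saved model file holds the network only. The fitted scaler is a separate object, so a later session also needs the scaling parameters before it can prepare new inputs. One possible approach is sketched below using only the standard library; the file name, the parameter values, and the helper `scale` are hypothetical. Persisting the fitted scaler object itself (for example with joblib) is a common alternative.

```python
import json
import os
import tempfile

# Hypothetical per-feature minimum and maximum learned from the training set.
scaler_params = {"min": [995.0, 998.0], "max": [1005.0, 1002.0]}

# Save the parameters next to the model (hypothetical file name).
path = os.path.join(tempfile.gettempdir(), "scaler_params.json")
with open(path, "w") as f:
    json.dump(scaler_params, f)

# Later, in a new session: load the parameters back.
with open(path) as f:
    loaded = json.load(f)

def scale(row, params):
    """Apply (x - min) / (max - min) to one row of raw feature values."""
    return [(x - lo) / (hi - lo)
            for x, lo, hi in zip(row, params["min"], params["max"])]

scaled_row = scale([998, 1000], loaded)
print(scaled_row)
```

The scaled row would then be wrapped in an outer list, giving the 2-D input shape that `predict` expects.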

8. Theory

8.1. See Neural Networks and Deep Learning Theory notebook

9. Installation

9.1. Run this from your Anaconda PowerShell Prompt:

9.1.1. pip install tensorflow

10. TensorFlow vs Keras

10.1. TensorFlow and Keras are both popular open-source Python libraries used for building neural networks, but they serve different purposes and have different levels of abstraction

10.2. TensorFlow is a powerful, low-level library for building and training neural networks

10.2.1. It provides a wide range of functions for building neural network architectures, defining cost functions, and optimizing model parameters using gradient descent

10.2.1.1. TensorFlow also supports distributed computing and can be used for training large-scale neural networks

10.3. Keras, on the other hand, is a high-level API that runs on top of TensorFlow (and other backends) to provide a more user-friendly interface for building neural networks

10.3.1. Keras abstracts away many of the details of low-level TensorFlow code, making it easier to build and train neural networks without sacrificing flexibility or performance

10.3.1.1. It provides a simple and intuitive syntax for defining neural network architectures and training them on data

10.4. While low-level TensorFlow provides more flexibility and control over the neural network architecture and training process, Keras is generally quicker to develop with and easier to use for most common use cases

10.4.1. TensorFlow can be more suitable for more complex and custom neural network architectures, while Keras is a good choice for rapid prototyping and experimentation

11. Load data

11.1. import pandas as pd

11.2. df = pd.read_csv('./DATA/fake_reg.csv')

11.3. df.head()

11.3.1. fake_reg.csv
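Before converting the dataframe to NumPy arrays, it can help to confirm that the columns are numeric and complete. The sketch below reads a tiny in-memory CSV in place of the real file; the column names follow the ones used in these notes, and the three rows of values are made up.

```python
import io
import pandas as pd

# Made-up rows standing in for the real CSV file.
csv_text = """price,feature1,feature2
461.5,999.8,999.7
548.1,998.9,1001.0
410.3,1000.1,998.9
"""

df = pd.read_csv(io.StringIO(csv_text))

print(df.dtypes)           # all three columns should be numeric
print(df.isnull().sum())   # count of missing values per column

# Same conversion as in the split step: features as a 2-D array, label as 1-D.
X = df[['feature1', 'feature2']].values
y = df['price'].values
print(X.shape, y.shape)
```

Missing values or text columns are cheaper to catch here than after they have caused an error during scaling or training.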

12. Exploratory data analysis

12.1. import seaborn as sns; import matplotlib.pyplot as plt

12.2. sns.pairplot(df)

12.2.1. fake_reg pairplot