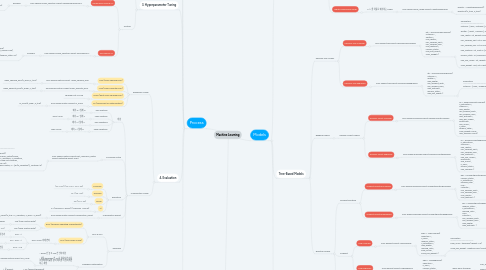

1. Process

1.1. 1. Reading Data

1.1.1. EDA

1.1.1.1. pip install pandas_profiling

1.1.1.1.1. import pandas_profiling

1.1.2. Target 분포 확인

1.1.2.1. Regression Model

1.1.2.1.1. Check Skewness

1.1.2.1.2. Log Transform

1.1.2.2. Classification Model

1.1.2.2.1. Imbalanced Target

1.1.3. Data Wrangling

1.1.3.1. Cleaning Data

1.1.3.2. Gathering Data

1.1.4. Data Preprocessing

1.1.4.1. Data Manipulation

1.1.4.2. Feature Engineering

1.1.5. Preprocessing Methods

1.1.5.1. Remove Outliers

1.1.5.1.1. 0.05% <= values <= 99.5%

1.1.5.2. Encoders

1.1.5.2.1. pip install category_encoders

1.1.5.3. Imputers

1.1.5.3.1. Simple Imputer

1.1.5.3.2. Iterative Imputer

1.1.5.4. Feature Selection

1.1.5.4.1. SelectKBest

1.2. 2. Splitting Data

1.2.1. Hold-Out Validation

1.2.1.1. Train / Validation / Test Data Split

1.2.1.1.1. from sklearn.model_selection import train_test_split

1.2.2. K-Fold Cross Validation

1.2.2.1. Train / Test Data Split

1.2.2.1.1. from sklearn.model_selection import train_test_split

1.2.2.1.2. from sklearn.model_selection import cross_val_score

1.3. 3. Hyperparameter Tuning

1.3.1. Problem

1.3.1.1. https://i.stack.imgur.com/rpqa6.jpg

1.3.2. Solution

1.3.2.1. Randomized Search CV

1.3.2.1.1. from sklearn.model_selection import RandomizedSearchCV

1.3.2.2. Grid Search CV

1.3.2.2.1. from sklearn.model_selection import GridSearchCV

1.4. 4. Evaluation

1.4.1. Regression Model

1.4.1.1. MSE (Mean Squared Error)

1.4.1.1.1. from sklearn.metrics import mean_squared_error

1.4.1.2. MAE (Mean Absolute Error)

1.4.1.2.1. from sklearn.metrics import mean_absolute_error

1.4.1.3. RMSE (Root Mean Squared Error)

1.4.1.3.1. squared root of MSE

1.4.1.4. R2 (Coefficient of Determination)

1.4.1.4.1. from sklearn.metrics import r2_score

1.4.2. Classification Model

1.4.2.1. 개념

1.4.2.1.1. True Positives

1.4.2.1.2. False Positives

1.4.2.1.3. True Negatives

1.4.2.1.4. False Negatives

1.4.2.2. Confusion Matrix

1.4.2.2.1. from sklearn.metrics import plot_confusion_matrix import matplotlib.pyplot as plt

1.4.2.3. Evaluation

1.4.2.3.1. Accuracy

1.4.2.3.2. Precision

1.4.2.3.3. Recall

1.4.2.3.4. F1

1.4.2.4. Classification Report

1.4.2.4.1. from sklearn.metrics import classification_report

1.4.2.5. Threshold

1.4.2.5.1. ROC & AUC

1.4.2.5.2. Threshold Optimization

1.5. 5. Feature & Target Analysis

1.5.1. Bias / Variance Tradeoff

1.5.1.1. Low Bias Train Data (Overfitting)

1.5.1.1.1. High Variance Test Data

1.5.1.2. High Bias Train Data (Underfitting)

1.5.1.2.1. Low Variance Test Data

1.5.2. Check Leakage

1.5.2.1. Train / Test Contamination

1.5.2.1.1. test 에 관한 정보가 train data에 섞여 있는지?

1.5.2.2. Target Leakage

1.5.2.2.1. 해당 feature가 target을 예측하는데 1대1 관계인지?

1.5.3. 전반적인 Importances

1.5.3.1. Feature Importance

1.5.3.1.1. Mean Impurity Decrease

1.5.3.2. Drop Column Importance

1.5.3.2.1. Feature 한개씩 제외하고 나서의 성능 V.S 전체 Feature 에 관한 성능

1.5.3.3. Permutation Importance

1.5.3.3.1. pip install eli5

1.5.4. 개별적인 Importances

1.5.4.1. PDP (Partial Dependence Plots)

1.5.4.1.1. 1 Feature vs Target

1.5.4.1.2. 2 Features vs Target

1.5.4.2. Shapley Values

1.5.4.2.1. pip install shap

2. sklearn.linear_model.Ridge — scikit-learn 0.24.1 documentation

3. Models

3.1. Linear Models

3.1.1. Baseline Model

3.1.1.1. Classification

3.1.1.1.1. Most Frequent Target

3.1.1.2. Regression

3.1.1.2.1. Average Target

3.1.1.3. Time Series

3.1.1.3.1. 당일 예측값 = 전일 Target 값

3.1.2. Predictive Model

3.1.2.1. Simple Regression Model

3.1.2.1.1. 1 Feature vs 1 Target

3.1.2.2. Multiple Regression Model

3.1.2.2.1. Multiple Features vs 1 Target

3.1.2.3. Ridge Regression Model

3.1.2.3.1. Multiple Regression 에 기울기를 조정해서 일반화가 더 잘되게끔 하는 Model

3.1.2.4. Logistic Regression Model

3.1.2.4.1. 0 / 1 을 확률로 예측하는 Model

3.2. Tree-Based Models

3.2.1. Decision Tree Models

3.2.1.1. Decision Tree Classifier

3.2.1.1.1. from sklearn.tree import DecisionTreeClassifier

3.2.1.2. Decision Tree Regressor

3.2.1.2.1. from sklearn.tree import DecisionTreeRegressor

3.2.2. Bagging Models

3.2.2.1. Random Forest Models

3.2.2.1.1. Random Forest Classifier

3.2.2.1.2. Random Forest Regressor

3.2.3. Boosting Models

3.2.3.1. Gradient Boosting

3.2.3.1.1. Gradient Boosting Classifier

3.2.3.1.2. Gradient Boosting Regressor

3.2.3.2. XGBoost

3.2.3.2.1. XGB Classifier

3.2.3.2.2. XGB Regressor

3.2.3.3. Light GBM

3.2.3.3.1. LGBM Classifier

3.2.3.3.2. LGBM Regressor

3.3. Pipeline Modeling

3.3.1. from sklearn.pipeline import make_pipeline

3.3.1.1. pipe = make_pipeline( OneHotEncoder(), SimpleImputer(), LogisticRegression(n_jobs=-1) ) pipe.fit(X_train, y_train)