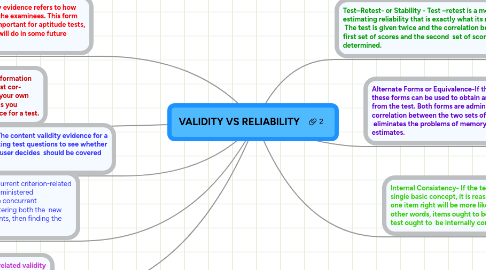

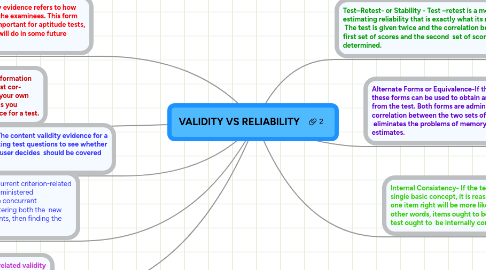

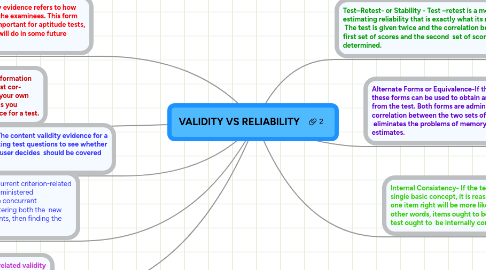

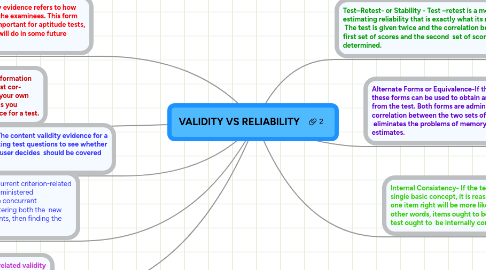

VALIDITY VS RELIABILITY

by Deidra Robinson

1. Concurrent Criterion-Related Validity Evidence - Concurrent criterion-related validity evidence deals with criterion measures that can be administered at the same time as the measure to be validated. It is determined by administering both the new test and an established test to the same group of respondents, then finding the correlation between the two sets of test scores.

2. Criterion-Related Validity Evidence - In establishing criterion-related validity evidence, scores from a test are correlated with an external criterion.

3. Content Validity Evidence - The content validity evidence for a test is established by inspecting test questions to see whether they correspond to what the user decides should be covered by the test.

4. Predictive Validity Evidence - Predictive validity evidence refers to how well the test predicts some future behavior of the examinees. This form of validity evidence is particularly useful and important for aptitude tests, which attempt to predict how well test takers will do in some future setting.

5. Construct Validity Evidence - In general, any information that lets you know whether results from the test correspond to what you would expect (based on your own knowledge about what is being measured) tells you something about the construct validity evidence for a test.

6. Test–Retest or Stability - Test–retest is a method of estimating reliability that is exactly what its name implies: the test is given twice, and the correlation between the first set of scores and the second set of scores is determined.

7. Alternate Forms or Equivalence - If there are two equivalent forms of a test, these forms can be used to obtain an estimate of the reliability of the test's scores. Both forms are administered to the same group of students, and the correlation between the two sets of scores is determined. Because students do not see the same items twice, this estimate eliminates the problems of memory and practice involved in test–retest estimates.

8. Internal Consistency - If the test in question is designed to measure a single basic concept, it is reasonable to assume that people who get one item right will be more likely to get other, similar items right. In other words, items ought to be correlated with each other, and the test ought to be internally consistent.
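Concurrent, predictive, test–retest, and alternate-forms evidence all come down to the same computation: correlating two sets of scores earned by the same group of examinees. The sketch below assumes the Pearson product-moment correlation (the definitions above say only "correlation"). The helper name `pearson_r` and the five students' scores are hypothetical and for illustration only. The second list could equally be an established test given at the same time, a later criterion measure, a second administration of the same test, or an equivalent form.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Hypothetical helper: Pearson correlation between two paired score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two paired score lists of equal length >= 2")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of cross-products of each score's deviation from its own mean
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    # Square roots of the sums of squared deviations for each list
    spread_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined when all scores are identical")
    return cross / (spread_x * spread_y)


# Hypothetical scores for the same five students (illustrative, not real data).
first_administration = [78, 85, 62, 90, 71]
second_administration = [80, 83, 65, 92, 70]

r = pearson_r(first_administration, second_administration)
print(f"test-retest reliability estimate: r = {r:.2f}")
```

Values near 1 mean the two score sets order the examinees almost identically; values near 0 mean they share little. On Python 3.10 and later, `statistics.correlation` computes the same Pearson coefficient.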
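The internal-consistency definition above does not name a specific coefficient. One widely used index, swapped in here as an assumption rather than taken from the source, is Cronbach's alpha: alpha = k / (k - 1) × (1 - sum of item variances / variance of total scores), where k is the number of items. The sketch assumes responses are stored one row per examinee and one column per item; `cronbach_alpha` and the response data are hypothetical.

```python
from statistics import pvariance
from typing import Sequence


def cronbach_alpha(item_scores: Sequence[Sequence[float]]) -> float:
    """Hypothetical helper: Cronbach's alpha; rows are examinees, columns are items."""
    if not item_scores:
        raise ValueError("need at least one examinee")
    k = len(item_scores[0])  # number of items on the test
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Variance of each item's scores across examinees
    item_vars = [pvariance([row[j] for row in item_scores]) for j in range(k)]
    # Variance of the examinees' total scores
    total_var = pvariance([sum(row) for row in item_scores])
    if total_var == 0:
        raise ValueError("alpha is undefined when every total score is the same")
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical right (1) / wrong (0) responses: 5 students x 4 items.
responses = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]
alpha = cronbach_alpha(responses)
print(f"Cronbach's alpha = {alpha:.2f}")
```

When items are strongly correlated with one another, the variance of total scores is large relative to the summed item variances, so alpha moves toward 1; this is the numeric counterpart of "items ought to be correlated with each other."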