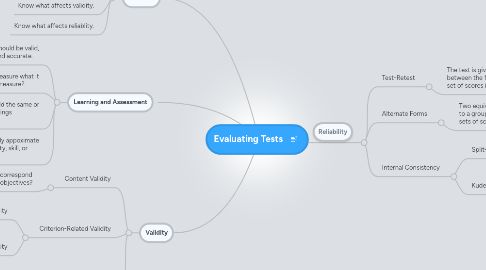

1. Validity

1.1. Content Validity

1.1.1. Do the test items correspond with instructional objectives?

1.2. Criterion-Related Validity

1.2.1. Concurrent criterion-related validity

1.2.1.1. Administer a new test and an established test, then find the correlation between the two sets of scores.

1.2.2. Predictive validity

1.2.2.1. Administer the test to a group, then, after a period of time has elapsed, measure the subjects on whatever the test is supposed to predict.

1.3. Construct Validity

1.3.1. Do the test results correspond with scores on other variables as predicted by some rationale or theory?
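
The criterion-related checks in 1.2 both reduce to correlating two sets of scores from the same group. A minimal sketch, assuming Pearson's r is the correlation used (the outline does not name a specific coefficient); the function name and the score lists are hypothetical and only illustrate the calculation:

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    dx = [a - mean_x for a in x]
    dy = [b - mean_y for b in y]
    ss_x = sum(a * a for a in dx)
    ss_y = sum(b * b for b in dy)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("scores must vary to compute a correlation")
    return sum(a * b for a, b in zip(dx, dy)) / sqrt(ss_x * ss_y)


# Hypothetical data: the same five students on a new and an established test.
new_test = [72, 85, 90, 65, 78]
established_test = [70, 88, 93, 60, 75]

validity_coefficient = pearson_r(new_test, established_test)
print(f"Concurrent validity coefficient: {validity_coefficient:.3f}")
```

For predictive validity, the second list would instead hold the later measurements of whatever the test is supposed to predict.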

2. Learning and Assessment

2.1. All tests should be valid, reliable, and accurate.

2.2. Does the test measure what it is supposed to measure?

2.3. Does the test yield the same or similar score rankings consistently?

2.4. Does the test score fairly closely approximate an individual's true level of ability, skill, or aptitude?

3. Teachers

3.1. Use test results that are valid, reliable, and accurate to make important decisions.

3.2. Know what affects validity.

3.3. Know what affects reliability.

4. Reliability

4.1. Test-Retest

4.1.1. The same test is given twice to the same group, and the correlation between the first and second sets of scores is determined.

4.2. Alternate Forms

4.2.1. Two equivalent forms of a test are administered to a group, and the correlation between the two sets of scores is determined.

4.3. Internal Consistency

4.3.1. Split-half methods

4.3.1.1. Divide a test into halves and correlate the scores on the two halves with one another.

4.3.2. Kuder-Richardson methods

4.3.2.1. Determine the extent to which the entire test represents a single, fairly consistent measure of a concept.
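
The two internal-consistency methods in 4.3 can be sketched on a small matrix of right/wrong item scores. This is an illustration under stated assumptions, not a prescribed procedure: the split is odd versus even items, the half-test correlation is stepped up with the Spearman-Brown formula, and the Kuder-Richardson estimate is KR-20 computed with population variance (some texts use sample variance). The function names and the response data are hypothetical.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    dx = [a - mean_x for a in x]
    dy = [b - mean_y for b in y]
    ss_x = sum(a * a for a in dx)
    ss_y = sum(b * b for b in dy)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("scores must vary to compute a correlation")
    return sum(a * b for a, b in zip(dx, dy)) / sqrt(ss_x * ss_y)


def split_half_reliability(responses):
    """Correlate odd-item and even-item half scores, then apply Spearman-Brown."""
    odd_half = [sum(row[0::2]) for row in responses]   # items 1, 3, 5, ...
    even_half = [sum(row[1::2]) for row in responses]  # items 2, 4, 6, ...
    r_half = pearson_r(odd_half, even_half)
    # Spearman-Brown estimates reliability of the full-length test.
    return 2 * r_half / (1 + r_half)


def kr20(responses):
    """KR-20 for items scored 0 (wrong) or 1 (right), using population variance."""
    n = len(responses)
    k = len(responses[0])
    totals = [sum(row) for row in responses]
    mean_total = sum(totals) / n
    var_total = sum((t - mean_total) ** 2 for t in totals) / n
    if var_total == 0:
        raise ValueError("total scores must vary to compute KR-20")
    pq_sum = 0.0
    for i in range(k):
        p = sum(row[i] for row in responses) / n  # proportion answering item i correctly
        pq_sum += p * (1 - p)
    return (k / (k - 1)) * (1 - pq_sum / var_total)


# Hypothetical data: 5 students (rows) by 4 items (columns), 1 = correct.
responses = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]

print(f"Split-half (Spearman-Brown): {split_half_reliability(responses):.2f}")
print(f"KR-20: {kr20(responses):.2f}")
```

On this toy data the split-half estimate is 0.88 and KR-20 is 0.80. A real analysis would need far more students and items for either figure to be meaningful.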