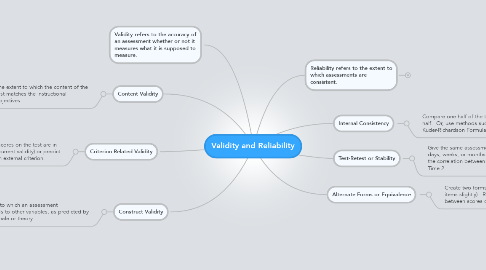

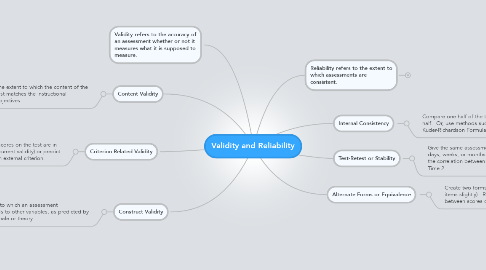

Validity and Reliability

by Meggan Henry

1. Validity refers to the accuracy of an assessment: whether or not it measures what it is supposed to measure.

2. Content Validity

2.1. The extent to which the content of the test matches the instructional objectives.
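Content validity is mostly a matter of expert judgment, but one mechanical part of it can be sketched: checking a test blueprint. A minimal sketch, assuming each item has already been tagged with the one instructional objective it assesses; the objectives, item IDs, and the `content_coverage` helper are hypothetical. It flags objectives that no item assesses and items that fall outside the objectives.

```python
# Hypothetical instructional objectives and item-to-objective tags.
objectives = ["identify main idea", "infer meaning", "summarize"]
item_objectives = {
    "Q1": "identify main idea",
    "Q2": "identify main idea",
    "Q3": "infer meaning",
    "Q4": "compare authors",  # not one of the instructional objectives
}

def content_coverage(objectives, item_objectives):
    """Return (objectives with no items, items that match no objective)."""
    assessed = set(item_objectives.values())
    uncovered = [o for o in objectives if o not in assessed]
    off_target = [item for item, o in item_objectives.items() if o not in objectives]
    return uncovered, off_target

uncovered, off_target = content_coverage(objectives, item_objectives)
print("Objectives not assessed:", uncovered)
print("Items outside the objectives:", off_target)
```

A check like this only shows whether the objectives are represented at all; whether the items actually match each objective still needs a reviewer's judgment.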

3. Criterion Related Validity

3.1. The extent to which scores on the test are in agreement with (concurrent validity) or predict (predictive validity) an external criterion.

3.1.1. Concurrent validity is determined by correlating test scores with a criterion measure collected at the same time.

3.1.2. Predictive validity is determined by correlating test scores with a criterion measure collected after a period of time has passed.
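Both kinds of criterion-related validity come down to correlating test scores with a criterion; only the timing of the criterion differs. A minimal sketch, assuming Pearson's r is the correlation coefficient; the `pearson_r` helper and all scores below are hypothetical illustration data, not results.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

# Hypothetical data for five students, listed in the same order everywhere.
test_scores = [55, 62, 70, 78, 90]
teacher_ratings = [2, 3, 3, 4, 5]   # criterion collected at the same time (concurrent)
later_grades = [60, 65, 72, 75, 88]  # criterion collected months later (predictive)

print(f"concurrent validity r = {pearson_r(test_scores, teacher_ratings):.2f}")
print(f"predictive validity r = {pearson_r(test_scores, later_grades):.2f}")
```

The closer r is to 1, the more closely the test agrees with (or predicts) the criterion.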

4. Construct Validity

4.1. The extent to which an assessment corresponds to other variables, as predicted by some rationale or theory.

5. Reliability refers to the extent to which assessments are consistent.


6. Alternate Forms or Equivalence

6.1. Create two forms of the same test (vary the items slightly). Reliability is stated as the correlation between scores on Test 1 and Test 2.

7. Test-Retest or Stability

7.1. Give the same assessment twice, separated by days, weeks, or months. Reliability is stated as the correlation between scores at Time 1 and Time 2.

8. Internal Consistency

8.1. Compare one half of the test to the other half, or use methods such as Kuder-Richardson Formula 20 (KR20).

8.1.1. Kuder-Richardson methods determine the extent to which the entire test represents a single, fairly consistent measure of a concept.