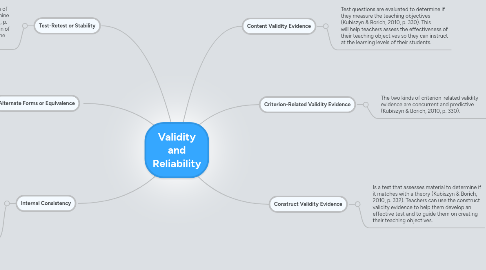

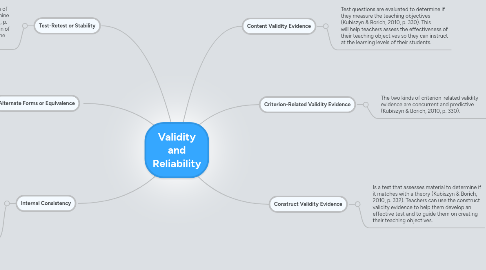

Validity and Reliability

by Saul Tena

1. Test-Retest or Stability

1.1. Students take the same test twice, and the two sets of scores are compared to determine their correlation (Kubiszyn & Borich, 2010, p. 341). Teachers can use this correlation to judge how stable the test's scores are from one administration to the next.
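As a rough illustration of the correlation step, the sketch below computes a Pearson correlation coefficient between two administrations of one test. The score lists and the function name `pearson_r` are hypothetical, chosen only for this example, and the choice of Pearson's coefficient is an assumption about which correlation is meant.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least two scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products and sums of squared deviations from the means.
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when all scores are identical")
    return cov / sqrt(var_x * var_y)

# Hypothetical scores for five students on two administrations of the same test.
first_attempt = [70, 82, 65, 90, 75]
second_attempt = [72, 80, 68, 93, 74]

stability = pearson_r(first_attempt, second_attempt)
print(f"test-retest correlation: {stability:.2f}")
```

A coefficient near 1 suggests that students kept roughly the same rank order across the two administrations. The same calculation applies whenever two sets of scores from the same students are compared.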

2. Alternate Forms or Equivalence

2.1. Two equivalent forms of a test are developed and given to the same class in order to estimate the reliability of the test results. The time between the two administrations should be brief. The two sets of results are then examined to find their correlation (Kubiszyn & Borich, 2010, p. 343). This lets teachers judge the reliability of the test by comparing the results. Knowing how reliable their tests are helps teachers instruct at the level of their students.

3. Internal Consistency

3.1. The test is divided into two similar sections. The questions in the two sections should be comparable in content and level. The correlation between the two test sections is then found (Kubiszyn & Borich, 2010, p. 343). This lets teachers evaluate the strengths and reliability of their test. Teachers can then make the adjustments they need to accurately assess their students and improve their instruction.
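The split described above can be sketched as follows. This is only one way to do it: the odd/even split, the item data, and the function names are assumptions made for the example. The Spearman-Brown step, which estimates full-length reliability from the half-test correlation, is a standard adjustment that the text above does not mention.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

def split_half_reliability(item_scores):
    """Correlate odd-item and even-item half scores, then adjust for length.

    item_scores holds one list per student with one entry per item
    (1 = correct, 0 = incorrect).
    """
    # Assumed split: items 1, 3, 5, ... versus items 2, 4, 6, ...
    odd_totals = [sum(row[0::2]) for row in item_scores]
    even_totals = [sum(row[1::2]) for row in item_scores]
    half_r = pearson_r(odd_totals, even_totals)
    if half_r <= -1:
        raise ValueError("Spearman-Brown adjustment is undefined for r = -1")
    # Spearman-Brown formula for a test twice as long as each half.
    full_r = 2 * half_r / (1 + half_r)
    return half_r, full_r

# Hypothetical results: five students, six items each.
responses = [
    [1, 1, 1, 1, 1, 0],
    [1, 0, 1, 1, 0, 0],
    [0, 0, 1, 0, 0, 0],
    [1, 1, 1, 1, 1, 1],
    [0, 1, 0, 0, 1, 0],
]

half_r, full_r = split_half_reliability(responses)
print(f"half-test correlation: {half_r:.2f}")
print(f"Spearman-Brown estimate: {full_r:.2f}")
```

An odd/even split is used here because it tends to balance item content and difficulty across the two halves when items are ordered by topic or difficulty. A first-half/second-half split would work with the same code by changing the two slices.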

4. Construct Validity Evidence

4.1. Test results are examined to determine whether they correspond with what a theory would predict (Kubiszyn & Borich, 2010, p. 332). Teachers can use construct validity evidence to help them develop an effective test and to guide them in creating their teaching objectives.

5. Content Validity Evidence

5.1. Test questions are evaluated to determine whether they measure the teaching objectives (Kubiszyn & Borich, 2010, p. 330). This helps teachers check that the test reflects their teaching objectives so they can instruct at the learning levels of their students.
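Deciding whether a question really measures an objective is a judgment the teacher makes, but the bookkeeping can be sketched in code. The example below assumes the teacher has already labeled each question with the objective it is meant to measure. It only tallies those labels to show which objectives have no questions and which questions match no listed objective. The objective names, question labels, and the function `objective_coverage` are all hypothetical.

```python
def objective_coverage(objectives, item_objectives):
    """Tally how many test questions were written for each teaching objective.

    objectives: list of objective names.
    item_objectives: dict mapping a question label to the objective the
    teacher judged it to measure.
    """
    counts = {objective: 0 for objective in objectives}
    unmatched_items = []
    for item, objective in item_objectives.items():
        if objective in counts:
            counts[objective] += 1
        else:
            # The question was labeled with something outside the objective list.
            unmatched_items.append(item)
    uncovered = [objective for objective, n in counts.items() if n == 0]
    return counts, uncovered, unmatched_items

# Hypothetical unit objectives and a teacher's labels for five questions.
unit_objectives = ["add fractions", "subtract fractions", "compare fractions"]
question_labels = {
    "Q1": "add fractions",
    "Q2": "add fractions",
    "Q3": "subtract fractions",
    "Q4": "multiply fractions",
    "Q5": "add fractions",
}

counts, uncovered, unmatched = objective_coverage(unit_objectives, question_labels)
print("questions per objective:", counts)
print("objectives with no questions:", uncovered)
print("questions matching no objective:", unmatched)
```

In this made-up case the tally would flag that "compare fractions" is never tested and that Q4 tests something outside the stated objectives.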

6. Criterion-Related Validity Evidence

6.1. The two kinds of criterion-related validity evidence are concurrent and predictive (Kubiszyn & Borich, 2010, p. 330).

6.1.1. Concurrent criterion-related validity evidence

6.1.1.1. The results of a “new” test and an “established” test are assessed to determine their correlation (Kubiszyn & Borich, 2010, p. 330). This is significant because teachers can refer to the established test to judge the strength of the new test.

6.1.2. Predictive validity evidence

6.1.2.1. The accuracy of the test's predictions is measured by testing the students at a later time on the particular area being predicted (Kubiszyn & Borich, 2010, p. 331). If accurate, the predictions can help teachers prepare learning objectives that help their students reach their academic potential.
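One way to picture the check described above is to turn earlier test scores into predictions and then compare them with what students actually score later. The sketch below does this with a least-squares line fitted on an earlier group of students. That modeling choice, the data, and the function names are assumptions made for illustration and do not come from Kubiszyn and Borich.

```python
def fit_line(x, y):
    """Least-squares slope and intercept for predicting y from x."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    var_x = sum((a - mean_x) ** 2 for a in x)
    if var_x == 0:
        raise ValueError("predictor scores are all identical")
    slope = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept

def mean_absolute_error(predicted, actual):
    """Average size of the gap between predicted and actual scores."""
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)

# Hypothetical earlier group: predictor test scores and later outcome scores.
past_test = [50, 60, 70, 80, 90]
past_outcome = [55, 62, 71, 78, 89]
slope, intercept = fit_line(past_test, past_outcome)

# Hypothetical current group: predict now, then compare with later results.
current_test = [55, 75, 85]
later_outcome = [60, 73, 84]
predicted = [slope * score + intercept for score in current_test]

error = mean_absolute_error(predicted, later_outcome)
print(f"average prediction error: {error:.1f} points")
```

A small average error relative to the score scale would suggest the test predicts the later performance reasonably well. A correlation between the earlier and later scores is another common way to summarize the same evidence.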