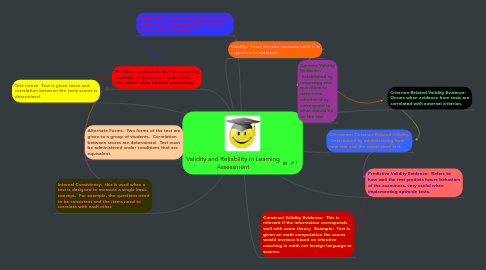

Validity and Reliability in Learning Assessment

by Connie Rehkamp

1. Validity: Does the test measure what it is supposed to measure?

2. Reliability: Does the test yield the same or similar score rankings (all other factors being equal) consistently?

3. The three basic methods for estimating the reliability of scores are test-retest, alternate forms, and internal consistency.

4. Internal Consistency: Used when a test is designed to measure a single basic concept. Items that measure the same concept should correlate with one another.

5. Test-retest: The test is given twice to the same group, and the correlation between the two sets of scores is determined.

6. Alternate Forms: Two equivalent forms of the test are given to the same group of students, and the correlation between the two sets of scores is determined. Both forms must be administered under equivalent conditions.

7. Content Validity Evidence: Established by inspecting the test questions to determine whether they correspond to what should be on the test.

8. Criterion-Related Validity Evidence: Established by correlating test scores with an external criterion.

9. Concurrent Criterion-Related Validity: Determined by administering both the new test and an established test to the same group and correlating the two sets of scores.

10. Predictive Validity Evidence: Refers to how well the test predicts the future behavior of examinees. It is especially useful for aptitude tests.

11. Construct Validity Evidence: Relevant when test results correspond to what a theory predicts. Example: scores on a math computation test should increase after intensive coaching in math, but not after coaching in foreign language or science.
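
The reliability estimates in the notes above all come down to correlating scores. The notes do not name a specific statistic, so the following is only a minimal sketch under assumptions: it uses the Pearson correlation for the test-retest and alternate-forms cases, and Cronbach's alpha as one common index of internal consistency. The function names and the sample scores are hypothetical and purely illustrative.

```python
from statistics import mean, variance


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores.

    Assumed here as the 'correlation' used for test-retest and
    alternate-forms reliability; the notes do not name a statistic.
    """
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a table of item scores.

    item_scores has one row per student and one column per test item.
    Assumed here as one possible internal-consistency index.
    """
    k = len(item_scores[0])                # number of items
    columns = list(zip(*item_scores))      # scores grouped by item
    item_var_sum = sum(variance(col) for col in columns)
    total_var = variance([sum(row) for row in item_scores])
    return (k / (k - 1)) * (1 - item_var_sum / total_var)


# Hypothetical data: the same five students tested twice.
first_sitting = [70, 82, 90, 65, 88]
second_sitting = [72, 80, 93, 63, 85]
retest_r = pearson_r(first_sitting, second_sitting)

# Hypothetical data: five students, three items on a single concept.
items = [
    [4, 5, 4],
    [2, 3, 2],
    [5, 5, 4],
    [1, 2, 2],
    [3, 3, 3],
]
alpha = cronbach_alpha(items)

print(f"test-retest r = {retest_r:.2f}")
print(f"Cronbach's alpha = {alpha:.2f}")
```

Values of either statistic close to 1 suggest consistent scores. The same `pearson_r` call could be applied to scores from two alternate forms, or to a new test and an established test when gathering concurrent criterion-related evidence.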