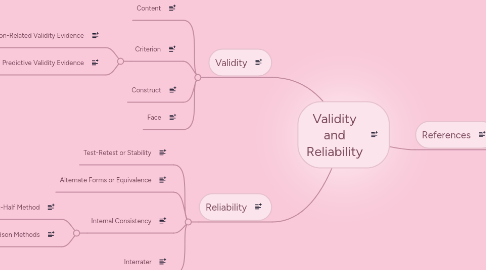

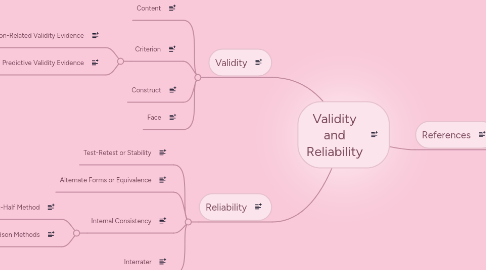

Validity and Reliability

by Crystal English

1. Reliability

1.1. Test-Retest or Stability

1.2. Alternate Forms or Equivalence

1.3. Internal Consistency

1.3.1. Split-Half Method

1.3.2. Kuder-Richardson Methods

1.4. Interrater

2. Validity

2.1. Content

2.2. Criterion

2.2.1. Concurrent Criterion-Related Validity Evidence

2.2.2. Predictive Validity Evidence