Assessment Analysis

by Nancy Schumacher

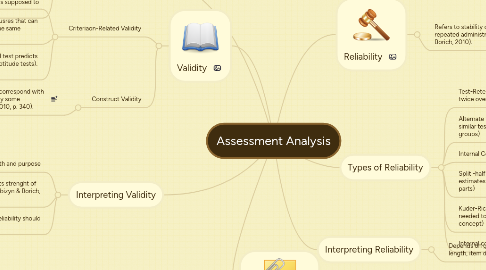

1. Content Validity

1.1. Assessed by comparing test items with instructional objectives

1.2. Does not produce a numerical estimate

1.3. Used to figure out if a test measures what it is supposed to measure.

2. Validity

2.1. Criterion-Related Validity

2.1.1. Correlates test scores with an external standard or criterion (Kubiszyn & Borich, 2010, p. 340).

2.1.2. Concurrent: the test and the criterion measure are administered at about the same time

2.1.3. Predictive: refers to how well the test predicts some future behavior (useful for aptitude tests) (Kubiszyn & Borich, 2010, p. 340).

2.2. Construct Validity

2.2.1. Determined by checking whether test results correspond with scores on other variables as predicted by some rationale or theory (Kubiszyn & Borich, 2010, p. 340).
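Criterion-related validity is reported as a validity coefficient, which is a correlation between test scores and criterion scores. The sketch below computes a Pearson correlation by hand; the scores, the choice of course grades as the criterion, and the helper name `pearson_r` are hypothetical and only illustrate the calculation.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length lists of scores."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length lists with at least two scores each")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of cross-products and sums of squares around each mean
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cross / sqrt(ss_x * ss_y)


# Hypothetical data: aptitude test scores and the same students' later course grades
test_scores = [52, 61, 70, 45, 88, 76, 64, 59]
course_grades = [2.1, 2.8, 3.0, 1.9, 3.7, 3.4, 2.6, 2.5]

# Predictive validity coefficient: how well the test forecasts the later criterion
validity_coefficient = pearson_r(test_scores, course_grades)
print(f"validity coefficient r = {validity_coefficient:.2f}")
```

For concurrent validity the arithmetic is the same; only the timing differs, since the criterion is collected at about the same time as the test.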

3. References:

3.1. Kubiszyn, T., & Borich, G. (2010). Educational testing and measurement: Classroom application and practice (9th ed.). Hoboken, NJ: John Wiley & Sons.

4. Interpreting Validity

4.1. Adequacy of a validity coefficient depends on its strength and on the purpose of the test

4.2. Group variability affects the strength of the validity coefficient (Kubiszyn & Borich, 2010).

4.3. The relevance and reliability of the criterion should be considered
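To see why group variability matters, the sketch below uses constructed, hypothetical data in which a criterion follows the test score plus a fixed ±3 disturbance. Correlating the whole group, and then only a narrow band of mid-range scorers, shows how restricting the range of scores shrinks the validity coefficient. The data and the `pearson_r` helper are illustrative assumptions.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cross / sqrt(ss_x * ss_y)


# Hypothetical data: 20 students whose criterion score is the test score +3 or -3
scores = list(range(20))
criterion = [s + (3 if s % 2 == 0 else -3) for s in scores]

# Full, heterogeneous group
full_r = pearson_r(scores, criterion)

# Restricted group: only students scoring 8-11 on the test
band = [(s, c) for s, c in zip(scores, criterion) if 8 <= s <= 11]
restricted_r = pearson_r([s for s, _ in band], [c for _, c in band])

print(f"full group r       = {full_r:.2f}")
print(f"restricted group r = {restricted_r:.2f}")
```

In the full group the spread of test scores outweighs the disturbance and r is high; among the four mid-range students the disturbance dominates and the relationship nearly disappears.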

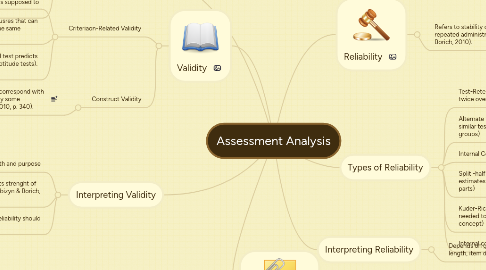

5. Types of Reliability

5.1. Test-Retest (the same test given twice to the same group, with a time interval between administrations)

5.2. Alternate-form estimates (two equivalent forms of a test given to the same group)

5.3. Internal Consistency (see below)

5.4. Split-half and odd-even estimates (test divided into two halves that are scored separately)

5.5. Kuder-Richardson (a single administration of one test estimates how consistently its items measure the same concept)

5.6. Internal consistency estimates are not appropriate for speeded tests, where they overestimate reliability
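The internal consistency methods listed above can be sketched from a single administration's item scores. The example assumes dichotomously scored items (1 = correct, 0 = incorrect) for six hypothetical students. It computes an odd-even split-half correlation, steps it up to full-test length with the Spearman-Brown formula (the usual correction for split-half estimates), and computes KR-20, one of the Kuder-Richardson formulas. The data and function names are hypothetical; KR-20 is shown with the population variance of total scores, and some texts use the sample variance instead.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cross / sqrt(ss_x * ss_y)


def odd_even_reliability(responses):
    """Split-half (odd-even) reliability, stepped up with the Spearman-Brown formula."""
    # Items 1, 3, 5, ... sit at list indices 0, 2, 4, ...
    odd_totals = [sum(row[0::2]) for row in responses]
    even_totals = [sum(row[1::2]) for row in responses]
    r_half = pearson_r(odd_totals, even_totals)
    # Each half is only half as long as the full test, so correct upward
    return 2 * r_half / (1 + r_half)


def kr20(responses):
    """Kuder-Richardson formula 20 for items scored 1 (correct) or 0 (incorrect)."""
    n_students = len(responses)
    k = len(responses[0])
    totals = [sum(row) for row in responses]
    mean_total = sum(totals) / n_students
    var_total = sum((t - mean_total) ** 2 for t in totals) / n_students  # population variance
    # p = proportion answering an item correctly, q = 1 - p
    sum_pq = 0.0
    for item in range(k):
        p = sum(row[item] for row in responses) / n_students
        sum_pq += p * (1 - p)
    return (k / (k - 1)) * (1 - sum_pq / var_total)


# Hypothetical 0/1 item scores: one row per student, one column per item
responses = [
    [1, 1, 1, 1, 1, 1],
    [1, 1, 1, 1, 1, 0],
    [1, 1, 1, 1, 0, 0],
    [1, 1, 1, 0, 0, 0],
    [1, 1, 0, 0, 0, 0],
    [1, 0, 0, 0, 0, 0],
]

print(f"odd-even split-half (Spearman-Brown): {odd_even_reliability(responses):.2f}")
print(f"KR-20: {kr20(responses):.2f}")
```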

6. Reliability

6.1. Refers to the stability of test scores over repeated administrations (Kubiszyn & Borich, 2010).

7. Interpreting Reliability

7.1. Depends on group size, test length, and item difficulty
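The effect of test length can be made concrete with the Spearman-Brown prophecy formula, a standard way to predict reliability when a test is lengthened or shortened by a factor k using comparable items. The function name and the starting reliability of 0.60 are illustrative assumptions, not values from the source.

```python
def spearman_brown(reliability, length_factor):
    """Predicted reliability when a test is made `length_factor` times as long.

    Assumes the added (or removed) items are comparable to the existing ones.
    """
    if not 0 <= reliability <= 1 or length_factor <= 0:
        raise ValueError("reliability must be in [0, 1] and length_factor must be positive")
    k, r = length_factor, reliability
    return k * r / (1 + (k - 1) * r)


# Hypothetical test with reliability 0.60, shortened, unchanged, doubled, tripled
for factor in (0.5, 1, 2, 3):
    print(f"length x{factor}: predicted reliability = {spearman_brown(0.60, factor):.2f}")
```

Doubling the test raises the predicted reliability from 0.60 to 0.75, and halving it lowers it to about 0.43.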