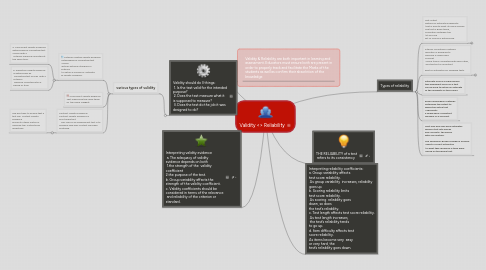

1. Validity asks three questions: 1. Is the test valid for the intended purpose? 2. Does the test measure what it is supposed to measure? 3. Does the test do the job it was designed to do?

1.1. Various types of validity

1.1.1. Criterion-related validity evidence: established by correlating test scores with an external standard or criterion to obtain a numerical estimate of validity evidence.

1.1.1.1. a. Concurrent validity evidence is determined by correlating test scores with a criterion measure collected at the same time.

1.1.1.2. b. Predictive validity evidence is determined by correlating test scores with a criterion measure collected after a period of time.
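Both kinds of criterion-related evidence come down to the same calculation, a correlation between test scores and criterion scores; only the timing of the criterion differs. The sketch below assumes the Pearson product-moment correlation is the coefficient used, and all scores, ratings, and grades are hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length score lists with at least 2 entries")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of cross-products of deviations from the means.
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    # Sums of squared deviations for each variable.
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("scores with no variability cannot be correlated")
    return cross / math.sqrt(ss_x * ss_y)


# Hypothetical scores for the same five students, listed in the same order.
test_scores = [52, 61, 70, 78, 90]
# Concurrent criterion: gathered at the same time as the test (hypothetical ratings).
ratings_now = [2, 3, 3, 4, 5]
# Predictive criterion: gathered after a period of time (hypothetical later grades).
grades_later = [60, 58, 72, 80, 85]

concurrent_validity = pearson_r(test_scores, ratings_now)
predictive_validity = pearson_r(test_scores, grades_later)
print(f"concurrent: {concurrent_validity:.2f}, predictive: {predictive_validity:.2f}")
```

The resulting coefficient is the "numerical estimate of validity evidence": values near 1 mean the test ranks students much as the criterion does.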

1.1.2. Concurrent validity evidence: a test measuring the same thing as its name suggests.

1.1.3. Content validity evidence: content validity evidence is the most important kind for achievement tests, because the “job” of an achievement test is to measure how well content has been mastered.

1.1.3.1. The best way to ensure that a test has content validity evidence is to ensure that its items match or measure the instructional objectives.
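Matching items to objectives can be checked mechanically once each item is tagged with the objective it is meant to measure. This is a minimal sketch under that assumption; the function name `check_blueprint`, the objectives, and the item IDs are all hypothetical.

```python
def check_blueprint(objectives, item_map):
    """Return (objectives with no items, items tied to no listed objective)."""
    covered = set(item_map.values())
    # Objectives that no test item measures.
    uncovered = [obj for obj in objectives if obj not in covered]
    # Items that measure something outside the instructional objectives.
    unmatched = [item for item, obj in item_map.items() if obj not in objectives]
    return uncovered, unmatched


# Hypothetical instructional objectives for a reading unit.
objectives = ["identify main idea", "infer word meaning", "summarize passage"]
# Hypothetical mapping from each test item to the objective it measures.
item_map = {
    "item1": "identify main idea",
    "item2": "identify main idea",
    "item3": "infer word meaning",
    "item4": "spelling",  # not one of the instructional objectives
}

uncovered, unmatched = check_blueprint(objectives, item_map)
print("objectives with no items:", uncovered)
print("items outside the objectives:", unmatched)
```

Here "summarize passage" has no items and "item4" measures something that was not taught as an objective; both are gaps in content validity evidence.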

2. Interpreting validity evidence

a. The adequacy of validity evidence depends on both (1) the strength of the validity coefficient and (2) the purpose of the test.

b. Group variability affects the strength of the validity coefficient.

c. Validity coefficients should be considered in terms of the relevance and reliability of the criterion or standard.
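Point b can be seen with a small numeric illustration: when only a narrow, less variable slice of students is included, the same test and criterion tend to correlate less strongly. The sketch below uses hypothetical scores and keeps only the top five test scorers; it illustrates the effect with one data set and is not a general proof.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cross = sum((a - mx) * (b - my) for a, b in zip(x, y))
    ss_x = sum((a - mx) ** 2 for a in x)
    ss_y = sum((b - my) ** 2 for b in y)
    return cross / math.sqrt(ss_x * ss_y)


# Hypothetical test and criterion scores for ten students.
test = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
criterion = [2, 1, 4, 3, 6, 5, 8, 7, 10, 9]

# Validity coefficient in the full, more variable group.
r_full = pearson_r(test, criterion)

# Keep only the five highest test scorers (a less variable group).
top = [(t, c) for t, c in zip(test, criterion) if t > 5]
r_restricted = pearson_r([t for t, _ in top], [c for _, c in top])

print(f"full group r = {r_full:.2f}, restricted group r = {r_restricted:.2f}")
```

With these numbers the full-group coefficient is about 0.94 and the restricted-group coefficient about 0.82, even though the test and criterion are unchanged.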

3. Validity and reliability are both important in learning and assessment. Educators must ensure both are present in order to track and support students' marks properly and to confirm that students have absorbed the knowledge.

4. Types of reliability

4.1. Test-retest: a method of estimating reliability that is exactly what its name implies. The test is given twice, and the correlation between the first and second sets of scores is determined.


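4.2. Internal consistency method: if the test is designed to measure a single basic concept, items that measure that concept should correlate with each other, and the test is then internally consistent. Internal consistency estimates are not suitable for speeded tests, because they tend to overestimate reliability there.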

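The notes do not name a specific internal-consistency statistic; one common choice is Cronbach's alpha, which for right/wrong (1/0) items is equivalent to KR-20. The sketch below assumes alpha and uses hypothetical answers, with rows as students and columns as items.

```python
from statistics import pvariance


def cronbach_alpha(item_scores):
    """Cronbach's alpha; rows are students, columns are items."""
    k = len(item_scores[0])
    if k < 2:
        raise ValueError("need at least 2 items")
    # Variance of each item across students (population variance used throughout).
    item_vars = [pvariance([row[j] for row in item_scores]) for j in range(k)]
    # Variance of the students' total scores.
    total_var = pvariance([sum(row) for row in item_scores])
    if total_var == 0:
        raise ValueError("total scores have no variance")
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical right/wrong (1/0) answers: 5 students x 4 items.
answers = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 0],
]

alpha = cronbach_alpha(answers)
print(f"alpha = {alpha:.2f}")
```

With these answers alpha is about 0.75. Items that all rise and fall together push alpha toward 1; as the note above says, the estimate should not be used for speeded tests.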