1. IAM

1.1. Users & Groups

1.1.1. IAM = Identity and Access Management, Global Service

1.1.2. The root account is created by default; it shouldn't be used or shared

1.1.3. Users are people within your organization and can be grouped

1.1.4. Groups can only contain users, not other groups

1.1.5. Users don't have to belong to a group, and a user can belong to multiple groups

1.2. Permissions

1.2.1. Users or Groups can be assigned JSON documents called policies

1.2.2. These policies define the permissions of the users

1.2.3. In AWS you apply the least privilege principle, don't give more permissions than a user needs
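
As an illustration of a least-privilege policy document, here is a minimal Python sketch that builds the JSON for read-only access to a single S3 bucket. The function name and the bucket name are hypothetical; the `Version`, `Statement`, `Effect`, `Action` and `Resource` keys follow the standard IAM policy grammar.

```python
import json


def read_only_bucket_policy(bucket_name: str) -> dict:
    """Build a policy that only allows listing and reading one bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # Only the two read actions, nothing else (least privilege).
                "Action": ["s3:GetObject", "s3:ListBucket"],
                "Resource": [
                    f"arn:aws:s3:::{bucket_name}",      # the bucket (for ListBucket)
                    f"arn:aws:s3:::{bucket_name}/*",    # its objects (for GetObject)
                ],
            }
        ],
    }


if __name__ == "__main__":
    # "example-reports-bucket" is a made-up bucket name.
    print(json.dumps(read_only_bucket_policy("example-reports-bucket"), indent=2))
```

The resulting document could be attached to a group rather than to individual users, in line with the best practices in 1.5.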

1.3. How can users access AWS?

1.3.1. AWS Management Console - protected by password + MFA

1.3.2. AWS Command Line Interface - protected by access keys

1.3.3. AWS Software Development Kit - protected by access keys

1.3.4. Access Keys are generated through the AWS Console

1.3.5. Users manage their own access keys

1.3.6. Access Keys are secret just like a password

1.4. IAM Roles for Services

1.4.1. Some AWS services will need to perform actions on your behalf

1.4.2. To do so, we will assign permissions to AWS services with IAM Roles

1.4.3. Common Roles

1.4.3.1. EC2 Instance Roles

1.4.3.2. Lambda Function Roles

1.4.3.3. Roles for CloudFormation

1.4.4. Security Tools

1.4.4.1. IAM Credentials Report (account-level)

1.4.4.1.1. A report that lists all your account's users and the status of their various credentials

1.4.4.2. IAM Access Advisor (user-level)

1.4.4.2.1. Access Advisor shows the service permissions granted to a user and when those services were last accessed

1.4.4.2.2. You can use this information to revise your policies

1.5. Guidelines & Best Practices

1.5.1. Don't use the root account except for AWS account setup

1.5.2. One physical user = One AWS user

1.5.3. Assign users to groups and assign permissions to groups

1.5.4. Create a strong password policy

1.5.5. Use and enforce the use of MFA

1.5.6. Create and use Roles for giving permissions to AWS services

1.5.7. Use Access Keys for Programmatic Access

1.5.8. Audit permissions of your account using IAM Credentials Report & IAM Access Advisor

1.5.9. Never share IAM users & Access Keys

2. KMS

3. EC2

3.1. EC2 User Data

3.1.1. It is possible to bootstrap our instances using an EC2 User data script

3.1.2. Bootstrapping means launching commands when a machine starts

3.1.3. That script is only run once, at the instance's first start

3.1.4. EC2 user data is used to automate boot tasks

3.1.5. The EC2 User Data Script runs with the root user

3.2. Instance Types

3.2.1. General Purpose

3.2.1.1. Great for a diversity of workloads such as web servers

3.2.1.2. Balance between

3.2.1.2.1. Compute

3.2.1.2.2. Memory

3.2.1.2.3. Networking

3.2.2. Compute Optimized

3.2.2.1. Great for compute-intensive tasks that require high performance processors

3.2.2.1.1. Batch processing workloads

3.2.2.1.2. Media transcoding

3.2.2.1.3. High performance web servers

3.2.2.1.4. High performance computing

3.2.2.1.5. Scientific modeling & machine learning

3.2.2.1.6. Dedicated gaming servers

3.2.3. Memory Optimized

3.2.3.1. Fast performance for workloads that process large data sets in memory

3.2.3.2. Use cases

3.2.3.2.1. High performance, relational/non-relational databases

3.2.3.2.2. Distributed web scale cache stores

3.2.3.2.3. In-memory databases optimized for Business Intelligence

3.2.3.2.4. Applications performing real-time processing of big unstructured data

3.2.4. Storage Optimized

3.2.4.1. Great for storage-intensive tasks that require high, sequential read and write access to large data sets on local storage

3.2.4.2. Use cases

3.2.4.2.1. High frequency online transaction processing (OLTP) systems

3.2.4.2.2. Relational & NoSQL databases

3.2.4.2.3. Cache for in-memory databases (Redis)

3.2.4.2.4. Data warehousing applications

3.2.4.2.5. Distributed file systems

3.3. Security Groups

3.3.1. Control how traffic is allowed into or out of our EC2 instances

3.3.2. Only contain allow rules

3.3.3. Rules can reference by IP or by security group

3.3.4. Act as a firewall on EC2 instances

3.3.5. They regulate

3.3.5.1. Access to ports

3.3.5.2. Authorized IP ranges - IPv4, IPv6

3.3.5.3. Control of inbound network (from other to the instance)

3.3.5.4. Control of outbound network (from the instance to other)

3.3.6. Good to know

3.3.6.1. Can be attached to multiple instances

3.3.6.2. Locked down to a region / VPC combination

3.3.6.3. Lives "outside" the EC2 instance - if traffic is blocked, the EC2 instance won't see it

3.3.6.4. It's good to maintain one separate security group for SSH access

3.3.6.5. If your application is not accessible (the connection times out), it's most likely a security group issue

3.3.6.6. If your application gives a "connection refused" error, then it's an application error or it's not launched

3.3.6.7. All inbound traffic is blocked by default

3.3.6.8. All outbound traffic is authorized by default
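
The rules above (allow rules only, inbound blocked by default, outbound authorized by default) can be sketched as a small in-memory model. This is a simplified illustration, not how AWS implements security groups: the `Rule` and `SecurityGroup` classes and the `ALL_PORTS` marker are hypothetical names, rules match on a single port and one CIDR range, and references to other security groups are left out.

```python
from dataclasses import dataclass, field
from ipaddress import ip_address, ip_network
from typing import List

ALL_PORTS = -1  # hypothetical marker meaning "any port"


@dataclass(frozen=True)
class Rule:
    port: int  # a single port, or ALL_PORTS
    cidr: str  # authorized IP range, e.g. "203.0.113.0/24"

    def matches(self, ip: str, port: int) -> bool:
        return self.port in (ALL_PORTS, port) and ip_address(ip) in ip_network(self.cidr)


@dataclass
class SecurityGroup:
    # No inbound rules by default: all inbound traffic is blocked.
    inbound: List[Rule] = field(default_factory=list)
    # One allow-all outbound rule by default: all outbound traffic is authorized.
    outbound: List[Rule] = field(
        default_factory=lambda: [Rule(ALL_PORTS, "0.0.0.0/0")]
    )

    def allows_inbound(self, source_ip: str, port: int) -> bool:
        # There are only allow rules: traffic passes if any rule matches.
        return any(rule.matches(source_ip, port) for rule in self.inbound)

    def allows_outbound(self, dest_ip: str, port: int) -> bool:
        return any(rule.matches(dest_ip, port) for rule in self.outbound)
```

Adding `Rule(22, "203.0.113.0/24")` to `inbound` would open SSH to that range only; every other inbound connection would still be dropped, which the client sees as a timeout.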

3.4. EC2 Instances Purchasing Options

3.4.1. On-Demand Instances - short workload, predictable pricing, pay by second

3.4.1.1. Pay for what you use

3.4.1.2. Has the highest cost but no upfront payment

3.4.1.3. No long-term commitment

3.4.1.4. Recommended for short-term and un-interrupted workloads, where you can't predict how the application will behave

3.4.2. Reserved (1 & 3 years)

3.4.2.1. Reserved Instances - long workloads

3.4.2.2. Convertible Reserved Instances - long workloads with flexible instances

3.4.2.3. Up to 72% discount compared to On-demand

3.4.2.4. You reserve specific instance attributes (Instance Type, Region, Tenancy, OS)

3.4.2.5. Reservation Period - 1 year or 3 years

3.4.2.6. Payment Options - No Upfront, Partial Upfront, All Upfront

3.4.2.7. Reserved Instance's Scope - Regional or Zonal (reserve capacity in an AZ)

3.4.2.8. Recommended for steady-state usage applications (think database)

3.4.2.9. You can buy and sell in the reserved instance marketplace

3.4.2.10. Convertible Reserved Instance

3.4.2.10.1. Can change the EC2 instance type, instance family, OS, scope and tenancy

3.4.2.10.2. Up to 66% discount

3.4.3. Savings Plans (1 & 3 years) - commitment to an amount of usage, long workload

3.4.3.1. Get a discount based on long-term usage (up to 72% - same as RIs). Commit to a certain type of usage ($10/hour for 1 or 3 years)

3.4.3.2. Usage beyond EC2 Savings Plans is billed at the On-Demand price

3.4.3.3. Locked to a specific instance family & AWS region (e.g. M5 in us-east-1)

3.4.3.4. Flexible across

3.4.3.4.1. Instance Size

3.4.3.4.2. OS

3.4.3.4.3. Tenancy

3.4.4. Spot Instances - short workloads, cheap, can lose instances (less reliable)

3.4.4.1. Can get a discount of up to 90% compared to On-Demand

3.4.4.2. Instances that you can "lose" at any point in time if your max price is less than the current spot price

3.4.4.3. The most cost-efficient instances in AWS

3.4.4.4. Useful for workloads that are resilient to failure

3.4.4.4.1. Batch jobs

3.4.4.4.2. Data analysis

3.4.4.4.3. Image processing

3.4.4.4.4. Any distributed workloads

3.4.4.4.5. Workloads with a flexible start and end time

3.4.4.5. Not suitable for critical jobs or databases

3.4.5. Dedicated Hosts - book an entire physical server, control instance placement

3.4.5.1. A physical server with EC2 instance capacity fully dedicated to your use

3.4.5.2. Allows you to address compliance requirements and use your existing server-bound software licenses

3.4.5.3. Purchasing Options

3.4.5.3.1. On-demand - pay per second for active dedicated host

3.4.5.3.2. Reserved - 1 or 3 years (No Upfront, Partial Upfront, All Upfront)

3.4.5.4. The most expensive option

3.4.5.5. Useful for software that has a complicated licensing model (Bring Your Own License)

3.4.5.6. Or for companies that have strong regulatory or compliance needs

3.4.6. Dedicated Instances - no other customers will share your hardware

3.4.6.1. Instances run on hardware that's dedicated to you

3.4.6.2. May share hardware with other instances in same account

3.4.6.3. No control over instance placement (can move hardware after stop / start)

3.4.7. Capacity Reservations - reserve capacity in a specific AZ for any duration

3.4.7.1. Reserve On-Demand instances capacity in a specific AZ for any duration

3.4.7.2. You always have access to EC2 capacity when you need it

3.4.7.3. No time commitment (create/cancel anytime), no billing discounts

3.4.7.4. Combine with Regional Reserved Instances and Savings Plans to benefit from billing discounts

3.4.7.5. You're charged at On-Demand rate whether you run instances or not

3.4.7.6. Suitable for short-term, uninterrupted workloads that need to be in a specific AZ

3.4.8. Which purchasing option is right?

3.4.8.1. On-Demand - coming and staying in a resort whenever we like, we pay the full price

3.4.8.2. Reserved - like planning ahead and if we plan to stay for a long time, we may get a good discount

3.4.8.3. Savings Plans - pay a certain amount per hour for certain period and stay in any room type

3.4.8.4. Spot Instances - the hotel allows people to bid for the empty rooms and the highest bidder keeps the rooms. You can get kicked out at any time.

3.4.8.5. Dedicated Hosts - we book an entire building of the resort

3.4.8.6. Capacity Reservations - you book a room for a period at full price, even if you don't stay in it

3.5. EC2 Spot Instance Requests

3.5.1. Can get a discount of up to 90% compared to On-Demand

3.5.2. Define a max spot price and get the instance while current spot price < max

3.5.2.1. The hourly spot price varies based on offer and capacity

3.5.2.2. If the current spot price > your max price, you can choose to stop or terminate your instance with a 2-minute grace period.

3.5.3. Other strategy - Spot Block

3.5.3.1. "Block" a spot instance during a specified time frame (1 to 6 hours) without interruptions

3.5.3.2. In rare situations, the instance may be reclaimed

3.5.4. Used for batch jobs, data analysis, or workloads that are resilient to failures

3.5.5. Not great for critical jobs or databases

3.6. Spot Fleets - set of Spot Instances + (optional) On-Demand Instances

3.6.1. The Spot Fleet will try to meet the target capacity with price constraints

3.6.1.1. Define possible launch pools - instance type, OS, AZ

3.6.1.2. Can have multiple launch pools, so that the fleet can choose

3.6.1.3. Spot Fleet stops launching instances when reaching capacity or max cost

3.6.2. Strategies to allocate Spot Instances

3.6.2.1. lowestPrice - from the pool with the lowest price (cost optimization, short workload)

3.6.2.2. diversified - distributed across all pools (great for availability, long workloads)

3.6.2.3. capacityOptimized - pool with the optimal capacity for the number of instances

3.6.2.4. priceCapacityOptimized (recommended) - pools with highest capacity available, then select the pool with the lowest price (best choice for most workloads)

3.6.2.5. Spot Fleets allow us to automatically request Spot Instances with the lowest price
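
To make the difference between the `lowestPrice` and `diversified` strategies concrete, here is a deliberately simplified sketch. It assumes every pool has unlimited capacity and a fixed price, which the real Spot Fleet does not; the function names, pool names and prices are all made up for illustration.

```python
from typing import Dict


def lowest_price(pools: Dict[str, float], target: int) -> Dict[str, int]:
    """Put the whole target capacity in the cheapest pool."""
    cheapest = min(pools, key=pools.get)
    return {cheapest: target}


def diversified(pools: Dict[str, float], target: int) -> Dict[str, int]:
    """Spread the target capacity as evenly as possible across all pools."""
    names = sorted(pools)
    base, extra = divmod(target, len(names))
    # The first `extra` pools (in name order) take one additional instance.
    return {name: base + (1 if i < extra else 0) for i, name in enumerate(names)}


if __name__ == "__main__":
    # Hypothetical launch pools (instance type / AZ) with made-up hourly prices.
    pools = {
        "m5.large/us-east-1a": 0.035,
        "c5.large/us-east-1b": 0.031,
        "r5.large/us-east-1c": 0.040,
    }
    print(lowest_price(pools, 6))
    print(diversified(pools, 7))
```

With `lowestPrice`, losing the single cheapest pool interrupts the whole fleet at once; `diversified` limits the impact of any one pool being reclaimed, which is why the notes associate it with availability and long workloads.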

3.7. Elastic IPs

3.7.1. If you need to have a fixed public IP for your instance, you need an Elastic IP

3.7.2. An Elastic IP is a public IPv4 address you own as long as you don't delete it

3.7.3. You can attach it to one instance at a time

3.7.4. With an Elastic IP address, you can mask the failure of an instance or software by rapidly remapping the address to another instance in your account

3.7.5. You can only have 5 Elastic IPs in your account (you can ask AWS to increase that)

3.7.6. Overall, try to avoid using Elastic IPs

3.7.6.1. They often reflect poor architectural decisions

3.7.6.2. Instead use a random public IP and register a DNS name to it

3.7.6.3. Or, as we'll see later, use a Load Balancer and don't use a public IP

3.8. Placement Groups

3.8.1. Cluster - clusters instances into a low-latency group in a single AZ

3.8.1.1. Pros - Great network (10 Gbps bandwidth between instances with Enhanced Networking enabled - recommended)

3.8.1.2. Cons - if the AZ fails, all instances fail at the same time

3.8.1.3. Use case

3.8.1.3.1. Big Data job that needs to complete fast

3.8.1.3.2. Application that needs extremely low latency and high network throughput

3.8.2. Spread - spreads instances across underlying hardware (max 7 instances per group per AZ)

3.8.2.1. Pros

3.8.2.1.1. Can span across AZ

3.8.2.1.2. Reduced risk of simultaneous failure

3.8.2.1.3. EC2 instances are on different physical hardware

3.8.2.2. Cons

3.8.2.2.1. Limited to 7 instances per AZ per placement group

3.8.2.3. Use Case

3.8.2.3.1. Application that needs to maximize high availability

3.8.2.3.2. Critical Applications where each instance must be isolated from failure from each other

3.8.3. Partition - spreads instances across many different partitions (which rely on different sets of racks) within an AZ. Scales to 100s of EC2 instances per group (Hadoop, Cassandra, Kafka)

3.8.3.1. Up to 7 partitions per AZ

3.8.3.2. Can span across multiple AZs in the same region

3.8.3.3. Up to 100s of EC2 instances

3.8.3.4. The instances in a partition do not share racks with the instances in the other partitions

3.8.3.5. A partition failure can affect many EC2 instances but won't affect other partitions

3.8.3.6. EC2 instances get access to the partition information as metadata

3.8.3.7. Use Cases

3.8.3.7.1. HDFS, HBase, Cassandra, Kafka

3.9. Elastic Network Interfaces

3.9.1. Logical component in a VPC that represents a virtual network card

3.9.2. The ENI can have the following attributes

3.9.2.1. Primary private IPv4, one or more secondary IPv4

3.9.2.2. One Elastic IP per private IPv4

3.9.2.3. One Public IPv4

3.9.2.4. One or more security groups

3.9.2.5. A MAC address

3.9.3. You can create ENIs independently and attach them on the fly to EC2 instances for failover

3.9.4. Bound to a specific AZ

3.10. EC2 Hibernate

3.10.1. In-memory state is preserved

3.10.2. The instance boot is much faster

3.10.3. Under the hood the RAM state is written to a file in the root EBS volume

3.10.4. The root EBS volume must be encrypted

3.10.5. Use cases

3.10.5.1. Long-running processing

3.10.5.2. Saving the RAM state

3.10.5.3. Services that take time to initialize

3.10.6. Good to know

3.10.6.1. Supported Instance Families - C3, C4, C5, I3, M3, M4, R3, R4, T2, T3

3.10.6.2. Instance RAM Size - must be less than 150 GB

3.10.6.3. Instance Size - not supported for bare metal instances

3.10.6.4. AMI - Amazon Linux 2, Linux AMI, Ubuntu, RHEL, CentOS & Windows

3.10.6.5. Root Volume - must be EBS, encrypted, not instance store, and large

3.10.6.6. Available for On-Demand, Reserved and Spot Instances

3.10.6.7. An instance cannot be hibernated for more than 60 days

4. EBS & EFS

4.1. EBS

4.1.1. An Elastic Block Store Volume is a network drive you can attach to your instances while they run

4.1.2. It allows your instances to persist data, even after their termination

4.1.3. They can only be mounted to one instance at a time

4.1.4. They are bound to a specific AZ

4.1.5. It uses the network to communicate with the instance, which means there might be a bit of latency

4.1.6. It can be detached from an EC2 instance and attached to another one quickly

4.1.7. It's locked to an AZ

4.1.7.1. To move a volume across AZs, you first need to snapshot it

4.1.8. Have provisioned capacity (size in GBs, and IOPS)

4.1.8.1. You get billed for all provisioned capacity

4.1.8.2. You can increase the capacity of the drive over time

4.1.9. Delete on Termination attribute

4.1.9.1. Controls the EBS behaviour when an EC2 instance terminates

4.1.9.1.1. By default, the root EBS volume is deleted (attribute enabled)

4.1.9.1.2. By default, any other attached EBS volume is not deleted (attribute disabled)

4.1.9.2. This can be controlled by the AWS console / AWS CLI

4.1.9.3. Use case - preserve root volume when instance is terminated

4.1.10. Snapshots

4.1.10.1. Make a backup of your EBS volume at a point in time

4.1.10.2. Not necessary to detach the volume to take a snapshot, but recommended

4.1.10.3. Can copy snapshots across AZ or Region

4.1.10.4. Features

4.1.10.4.1. EBS Snapshot Archive

4.1.10.4.2. Recycle Bin for EBS snapshots

4.1.10.4.3. Fast Snapshot Restore (FSR)

4.1.11. Volume Types

4.1.11.1. gp2 / gp3 - General purpose SSD volume that balances price and performance for a wide variety of workloads

4.1.11.1.1. Cost-effective storage, low latency

4.1.11.1.2. System boot volumes, virtual desktop, development and test environments

4.1.11.1.3. 1 GB - 16 TB

4.1.11.1.4. gp3

4.1.11.1.5. gp2

4.1.11.2. io1 / io2 Block Express - highest performance SSD volume for mission-critical low-latency or high-throughput workloads

4.1.11.2.1. Critical business applications with sustained IOPS performance

4.1.11.2.2. Or applications that need more than 16k IOPS

4.1.11.2.3. Great for databases workloads (sensistive to storage perf and consistency)

4.1.11.2.4. io1 (4GB - 16TB)

4.1.11.2.5. io2 Block Express (4 GB - 64 TB)

4.1.11.2.6. Supports EBS multi-attach

4.1.11.3. st1 - low cost HDD volume designed for frequently accessed, throughput intensive workloads

4.1.11.3.1. Big Data, Data warehouses, log processing

4.1.11.3.2. Max throughput 500 MB/s - max IOPS 500

4.1.11.3.3. 125 GB to 16 TB

4.1.11.4. sc1 - lowest cost HDD volume designed for less frequently accessed workloads

4.1.11.4.1. For data that is infrequently accessed

4.1.11.4.2. Scenarios where lowest cost is important

4.1.11.4.3. Max throughput 250 MB/s - max IOPS 250

4.1.11.4.4. 125 GB to 16 TB

4.1.12. EBS Multi-Attach - io1/io2 family

4.1.12.1. Attach the same EBS volume to multiple EC2 instances in the same AZ

4.1.12.2. Each instance has full read & write permissions to the high-performance volume

4.1.12.3. Use case

4.1.12.3.1. Achieve higher application availability in clustered applications

4.1.12.3.2. Application must manage concurrent write operations

4.1.12.3.3. Up to 16 EC2 instances at a time

4.1.12.3.4. Must use a file system that's cluster aware (not XFS, EXT4, etc)

4.1.13. EBS Volumes are characterized by Size | Throughput | IOPS (I/O Ops Per Sec)

4.1.14. Only gp2/gp3 and io1/io2 Block Express can be used as boot volumes

4.1.15. EBS Encryption

4.1.15.1. When you create an encrypted EBS volume, you get the following

4.1.15.1.1. Data at rest is encrypted inside the volume

4.1.15.1.2. All the data in flight moving between the instance and the volume is encrypted

4.1.15.1.3. All snapshots are encrypted

4.1.15.1.4. All volumes created from the snapshot are encrypted

4.1.15.2. Encryption and decryption are handled transparently

4.1.15.3. Encryption has minimal impact on latency

4.1.15.4. EBS Encryption leverages keys from KMS (AES-256)

4.1.15.5. Copying an unencrypted snapshot allows encryption

4.1.15.6. Snapshots of encrypted volumes are encrypted

4.1.15.7. Encryption - encrypt an unencrypted EBS volume

4.1.15.7.1. Create an EBS snapshot of the volume

4.1.15.7.2. Encrypt the EBS snapshot (using copy)

4.1.15.7.3. Create a new EBS volume from the snapshot (the volume will also be encrypted)

4.1.15.7.4. Now attach the encrypted volume to the original instance
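
The four steps above can be sketched with a boto3-style EC2 client. This is a sketch under assumptions rather than a hardened procedure: `ec2` is expected to behave like `boto3.client("ec2")`, the function name and the `device` default are hypothetical, the copy uses the account's default KMS key, and detaching the old volume and error handling are left out.

```python
def encrypt_volume(ec2, volume_id, instance_id, availability_zone, region,
                   device="/dev/sdf"):
    """Replace an unencrypted EBS volume with an encrypted copy.

    `ec2` is assumed to be a boto3 EC2 client (or an object with the same
    methods). Returns the ID of the new encrypted volume.
    """
    # 1. Create an EBS snapshot of the (unencrypted) volume.
    snapshot = ec2.create_snapshot(VolumeId=volume_id,
                                   Description="pre-encryption snapshot")
    ec2.get_waiter("snapshot_completed").wait(SnapshotIds=[snapshot["SnapshotId"]])

    # 2. Encrypt the snapshot by copying it with Encrypted=True.
    copy = ec2.copy_snapshot(SourceSnapshotId=snapshot["SnapshotId"],
                             SourceRegion=region,
                             Encrypted=True)
    ec2.get_waiter("snapshot_completed").wait(SnapshotIds=[copy["SnapshotId"]])

    # 3. Create a new volume from the encrypted snapshot (it is encrypted too).
    #    It must be in the same AZ as the instance it will be attached to.
    volume = ec2.create_volume(SnapshotId=copy["SnapshotId"],
                               AvailabilityZone=availability_zone)
    ec2.get_waiter("volume_available").wait(VolumeIds=[volume["VolumeId"]])

    # 4. Attach the encrypted volume to the original instance.
    ec2.attach_volume(VolumeId=volume["VolumeId"],
                      InstanceId=instance_id,
                      Device=device)
    return volume["VolumeId"]
```

A call might look like `encrypt_volume(boto3.client("ec2"), "vol-...", "i-...", "us-east-1a", "us-east-1")`, where the IDs are placeholders for your own resources.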

4.2. AMI

4.2.1. AMI is a customization of an EC2 instance

4.2.2. You add your own software, configuration, operating system, monitoring

4.2.3. Faster boot / configuration time because all your software is pre-packaged

4.2.4. AMI is built for a specific region (and can be copied across regions)

4.2.5. You can launch EC2 instances from

4.2.5.1. A public AMI - AWS provided

4.2.5.2. Your own AMI - you make and maintain it yourself

4.2.5.3. An AWS Marketplace AMI - an AMI someone else made (and potentially sells)

4.3. EC2 Instance Store

4.3.1. EBS volumes are network drives with good but limited performance

4.3.2. If you need a high-performance hardware disk, use EC2 Instance Store

4.3.3. Better I/O performance

4.3.4. EC2 Instance Store volumes lose their data if the instance is stopped (ephemeral)

4.3.5. Good for buffer / cache /scratch data / temporary content

4.3.6. Risk of data loss if hardware fails

4.3.7. Backups and Replication are your responsibility

4.4. EFS

4.4.1. Uses NFSv4.1 protocol

4.4.2. Uses security groups to control access to EFS

4.4.3. Compatible with Linux-based AMIs (not Windows)

4.4.4. Encryption at rest using KMS

4.4.5. POSIX file system that has standard file API

4.4.6. File system scales automatically, pay-per-use, no capacity planning!

4.4.7. Performance & Storage Classes

4.4.7.1. EFS Scale

4.4.7.1.1. Grow to petabyte-scale network file system, automatically

4.4.7.1.2. 1000s of concurrent NFS clients, 10 GB/s throughput

4.4.7.2. Performance Mode (set at EFS creation time)

4.4.7.2.1. General purpose (default) - latency-sensitive use cases (web server, CMS, etc)

4.4.7.2.2. Max I/O - higher latency, higher throughput, highly parallel (big data, media processing)

4.4.7.3. Throughput Mode

4.4.7.3.1. Bursting - 1 TB = 50MB/s + burst of up to 100 MB/s

4.4.7.3.2. Provisioned - set your throughput regardless of storage size, e.g. 1 GB/s for 1 TB storage

4.4.7.3.3. Elastic - automatically scales throughput up or down based on your workload

4.4.8. Storage Classes

4.4.8.1. Storage Tiers (lifecycle management feature - move file after N days)

4.4.8.1.1. Standard - for frequently accessed files

4.4.8.1.2. Infrequent access (EFS-IA) - cost to retrieve files, lower price to store

4.4.8.1.3. Archive - rarely accessed data (few times each year), 50% cheaper

4.4.8.1.4. Implement lifecycle policies to move files between storage tiers

4.4.8.2. Availability and durability

4.4.8.2.1. Standard - Multi-AZ, great for prod

4.4.8.2.2. One Zone - one AZ, great for dev, backup enabled by default, compatible with IA (EFS One Zone-IA)

4.4.8.3. Over 90% in cost savings

4.5. EBS vs EFS

4.5.1. EBS volumes

4.5.1.1. one instance (except multi-attach io1/io2)

4.5.1.2. are locked at the AZ level

4.5.1.3. gp2 - IO increases if the disk size increases

4.5.1.4. gp3 & io1 - can increase IO independently

4.5.1.5. To migrate an EBS volume across AZ

4.5.1.5.1. Take a snapshot

4.5.1.5.2. Restore the snapshot to another AZ

4.5.1.5.3. EBS backups use IO and you shouldn't run them while your application is handling a lot of traffic

4.5.1.6. Root EBS volumes get deleted by default when the EC2 instance is terminated (you can disable that)
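
The gp2 point above (IO increases with disk size) can be made concrete with a small calculation. The figures used here are not from these notes: they are assumed from the AWS documentation at the time of writing, which describes gp2 baseline performance as 3 IOPS per GiB with a floor of 100 IOPS and a ceiling of 16,000 IOPS. The function name is hypothetical.

```python
def gp2_baseline_iops(size_gib: int) -> int:
    """Baseline IOPS of a gp2 volume, which is tied to its size.

    Assumed figures: 3 IOPS per GiB, minimum 100, maximum 16,000,
    for volume sizes between 1 GiB and 16 TiB (16,384 GiB).
    """
    if not 1 <= size_gib <= 16384:
        raise ValueError("gp2 volume size must be between 1 and 16384 GiB")
    return min(max(3 * size_gib, 100), 16000)


if __name__ == "__main__":
    for size in (10, 100, 1000, 5334, 16384):
        print(f"{size:>6} GiB -> {gp2_baseline_iops(size):>6} IOPS")
```

With gp3 and io1 there is no such coupling: IOPS are provisioned separately from the size, which is what "can increase IO independently" refers to.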

4.5.2. EFS

4.5.2.1. Mounting 100s of instances across AZ

4.5.2.2. EFS share website files (WordPress)

4.5.2.3. Only for Linux instances (POSIX)

4.5.2.4. EFS has a higher price point than EBS

4.5.2.5. Can leverage Storage Tiers for cost savings

4.5.3. Remember EFS vs EBS vs Instance Store

5. Elastic Load Balancers

5.1. Why use a load balancer

5.1.1. Spread load across multiple downstream instances

5.1.2. Expose a single point of access to your application

5.1.3. Seamlessly handle failures of downstream instances

5.1.4. Do regular health checks to your instances

5.1.5. Provide SSL termination for your websites

5.1.6. Enforce stickiness with cookies

5.1.7. High availability across zones

5.1.8. Separate public traffic from private traffic

5.1.9. AWS guarantees that it will be working

5.1.10. AWS takes care of upgrades, maintenance, high availability

5.1.11. AWS provides only a few configuration knobs

5.1.12. It costs less to set up your own load balancer, but it will be a lot more effort on your end

5.2. Types of load balancer on AWS

5.2.1. Classic Load Balancer - v1 old generation - 2009 - CLB

5.2.1.1. HTTP, HTTPS, TCP, SSL, (secure TCP)

5.2.1.2. Health checks are TCP or HTTP based

5.2.1.3. Fixed hostname xxx.region.elb.amazonaws.com

5.2.2. Application Load Balancer - v2 - new generation - 2016 - ALB

5.2.2.1. HTTP, HTTPS, WebSocket

5.2.2.2. Load Balancing to multiple HTTP applications across machines

5.2.2.3. Load balancing to multiple applications on the same machine

5.2.2.4. Supports redirects (HTTP to HTTPS for example)

5.2.2.5. Routing tables to different target groups

5.2.2.5.1. Routing based on path in URL

5.2.2.5.2. Routing based on hostname in URL

5.2.2.5.3. Routing based on query strings and headers

5.2.2.6. ALBs are a great fit for microservices & container-based applications

5.2.2.7. Has a port mapping feature to redirect to a dynamic port in ECS

5.2.2.8. In comparison, we'd need multiple Classic Load Balancers, one per application

5.2.2.9. Target Groups

5.2.2.9.1. EC2 instances (can be managed by an Auto Scaling Group) - HTTP

5.2.2.9.2. ECS tasks (managed by ECS itself) - HTTP

5.2.2.9.3. Lambda functions - HTTP request is translated into a JSON event

5.2.2.9.4. IP Addresses - must be private IPs

5.2.2.9.5. ALB can route to multiple target groups

5.2.2.9.6. Health checks are at the target group level

5.2.2.10. Good to know

5.2.2.10.1. Fixed hostname xxx.region.elb.amazonaws.com

5.2.2.10.2. The application servers don't see the IP of the client directly

5.2.3. Network Load Balancer - v2 - new generation - 2017 - NLB

5.2.3.1. TCP, TLS (secure TCP), UDP

5.2.3.2. Allow to

5.2.3.2.1. Forward TCP & UDP traffic to your instances

5.2.3.2.2. Handle millions of requests per second

5.2.3.2.3. Ultra-low latency

5.2.3.3. NLB has one static IP per AZ and supports assigning Elastic IP

5.2.3.4. NLB are used for extreme performance, TCP or UDP traffic

5.2.3.5. Not included in the AWS free tier

5.2.3.6. Target Groups

5.2.3.6.1. EC2 instances

5.2.3.6.2. IP Addresses - must be private IPs

5.2.3.6.3. Application Load Balancer

5.2.3.6.4. Health Checks support the TCP, HTTP and HTTPS protocols

5.2.4. Gateway Load Balancer - 2020 - GWLB

5.2.4.1. Operates at layer 3 (Network Layer) - IP Protocol

5.2.4.2. Deploy, scale and manage a fleet of 3rd party network virtual appliances in AWS

5.2.4.2.1. Firewall, Intrusion Detection and Prevention Systems, Deep Packet Inspection Systems, payload manipulation

5.2.4.3. Combines the following functions

5.2.4.3.1. Transparent Network Gateway - single entry / exit for all traffic

5.2.4.3.2. Load Balancer- distributes traffic to your virtual appliances

5.2.4.4. Uses the GENEVE protocol on port 6081

5.2.4.5. Target Groups

5.2.4.5.1. EC2 Instances

5.2.4.5.2. IP Addresses - must be private IPs

5.2.5. Overall it is recommended to use the newer generation load balancers as they provide more features

5.2.6. Some load balancers can be set up as internal or external ELBs

5.3. It is integrated with many AWS offerings / services

5.3.1. EC2, EC2 Auto Scaling Groups, Amazon ECS

5.3.2. AWS Certificate Manager, CloudWatch

5.3.3. Route 53, AWS WAF, AWS Global Accelerator

5.4. Sticky Sessions (Session Affinity)

5.4.1. It is possible to implement stickiness so that the same client is always redirected to the same instance behind a load balancer

5.4.2. This works for CLB, ALB, NLB

5.4.3. For CLB and ALB, the cookie used for stickiness has an expiration date you control

5.4.4. Use case: make sure the user doesn't lose their session data

5.4.5. Enabling stickiness may bring imbalance to the load over the backend EC2 instances

5.4.6. Cookie Names

5.4.6.1. Application-based Cookies

5.4.6.1.1. Custom cookie

5.4.6.1.2. Application cookie

5.4.6.2. Duration-based Cookies

5.4.6.2.1. Cookie generated by the load balancer

5.4.6.2.2. Cookie name is AWSALB for ALB, AWSELB for CLB

5.5. Cross-Zone Load Balancing

5.5.1. With - each load balancer node distributes traffic evenly across all registered instances in all AZs

5.5.2. Without - requests are distributed only among the instances in the AZ of the receiving load balancer node

5.5.3. ALB

5.5.3.1. Enabled by default (can be disabled at the Target Group level)

5.5.3.2. No charges for inter AZ data

5.5.4. NLB & GWLB

5.5.4.1. Disabled by default

5.5.4.2. You pay charges ($) for inter AZ data if enabled

5.5.5. CLB

5.5.5.1. Disabled by default

5.5.5.2. No charges for inter AZ data if enabled
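
The effect of cross-zone load balancing is easiest to see with numbers. The sketch below computes the share of total traffic that each instance receives, under simplifying assumptions that are not from the notes: clients are spread evenly over one load balancer node per AZ, every AZ has at least one instance, and each node balances evenly over the instances it can reach. The function name and AZ labels are hypothetical.

```python
from typing import Dict


def traffic_share_per_instance(instances_per_az: Dict[str, int],
                               cross_zone: bool) -> Dict[str, float]:
    """Fraction of total traffic received by ONE instance in each AZ."""
    total_instances = sum(instances_per_az.values())
    az_count = len(instances_per_az)
    shares = {}
    for az, count in instances_per_az.items():
        if cross_zone:
            # Every node spreads its traffic over all instances in all AZs.
            shares[az] = 1 / total_instances
        else:
            # Each node gets 1/az_count of the traffic and keeps it in its own AZ.
            shares[az] = (1 / az_count) / count
    return shares


if __name__ == "__main__":
    layout = {"az-a": 2, "az-b": 8}  # made-up, deliberately unbalanced layout
    print(traffic_share_per_instance(layout, cross_zone=True))
    print(traffic_share_per_instance(layout, cross_zone=False))
```

With 2 instances in one AZ and 8 in the other, cross-zone balancing gives every instance 10% of the traffic; without it, each of the 2 instances handles 25% while each of the 8 handles 6.25%.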

5.6. SSL Certificates

5.6.1. The load balancer uses an X.509 certificate (SSL/TLS server certificate)

5.6.2. You can manage certificates using ACM (AWS Certificate Manager)

5.6.3. Alternatively, you can create and upload your own certificates

5.6.4. HTTPS listener

5.6.4.1. You must specify a default certificate

5.6.4.2. You can add an optional list of certs to support multiple domains

5.6.4.3. Clients can use SNI (Server Name Indication) to specify the hostname they reach

5.6.4.4. Ability to specify a security policy to support older versions of SSL / TLS

5.6.5. SNI

5.6.5.1. SNI solves the problem of loading multiple SSL certificates on one web server

5.6.5.2. It's a newer protocol and requires the client to indicate the hostname of the target server in the initial SSL handshake

5.6.5.3. The server will then find the correct certificate, or return the default one

5.6.5.4. Only works for ALB & NLB, CloudFront

5.6.5.5. Does not work for CLB
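
The SNI behaviour described above (pick the certificate matching the hostname sent in the handshake, otherwise fall back to the default) can be sketched as a simple lookup. This only models the selection logic, not TLS itself; the function name, hostnames and certificate labels are hypothetical, and wildcard matching is left out.

```python
from typing import Dict, Optional


def select_certificate(sni_hostname: Optional[str],
                       certificates: Dict[str, str],
                       default_cert: str) -> str:
    """Return the certificate to present for a TLS handshake."""
    if sni_hostname is None:
        # Client did not send SNI: only the default certificate can be used.
        return default_cert
    # Hostnames are case-insensitive; unknown hostnames get the default cert.
    return certificates.get(sni_hostname.lower(), default_cert)


if __name__ == "__main__":
    # Made-up hostnames mapped to made-up certificate labels.
    certs = {
        "www.example.com": "cert-www",
        "api.example.com": "cert-api",
    }
    print(select_certificate("api.example.com", certs, "cert-default"))
    print(select_certificate(None, certs, "cert-default"))
```

A load balancer without SNI support has a single certificate to present, so serving several hostnames with separate certificates takes one load balancer per hostname.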

5.6.6. CLB

5.6.6.1. Support only one SSL certificate

5.6.6.2. Must use multiple CLBs for multiple hostnames with multiple SSL certificates

5.6.7. ALB

5.6.7.1. Supports multiple listeners with multiple SSL certificates

5.6.7.2. Uses SNI to make it work

5.6.8. NLB

5.6.8.1. Supports multiple listeners with multiple SSL certificates

5.6.8.2. Uses SNI to make it work

5.7. Connection Draining

5.7.1. Feature Naming

5.7.1.1. Connection Draining - for CLB

5.7.1.2. Deregistration Delay - for ALB & NLB

5.7.2. Time to complete "in-flight requests" while the instance is de-registering or unhealthy

5.7.3. Stops sending new requests to the EC2 instance which is de-registering

5.7.4. Between 1 to 3600 seconds (default 300)

5.7.5. Can be disabled (set value to 0)

5.7.6. Set to a low value if your requests are short

6. Lambda

7. Auto Scaling Groups

7.1. The goal of an ASG is

7.1.1. Scale out (add EC2 instances) to match an increased load

7.1.2. Scale in (remove EC2 instances) to match a decreased load

7.1.3. Ensure we have a minimum and a maximum number of EC2 instances running

7.1.4. Automatically register new instances to a load balancer

7.1.5. Re-create an EC2 instance in case a previous one is terminated

7.2. ASG are free (you only pay for the underlying EC2 instances)

7.3. Attributes

7.3.1. A Launch Template (older Launch Configurations are deprecated)

7.3.1.1. AMI + Instance Type

7.3.1.2. EC2 user data

7.3.1.3. EBS Volumes

7.3.1.4. Security Groups

7.3.1.5. SSH Key Pair

7.3.1.6. IAM Roles for your EC2 Instances

7.3.1.7. Network + Subnets Information

7.3.1.8. Load Balancer Information

7.3.2. Min Size / Max Size / Initial Capacity

7.4. Scaling policies

7.4.1. Dynamic Scaling

7.4.1.1. Target Tracking Scaling

7.4.1.1.1. Simple to setup

7.4.1.1.2. Example - I want the average ASG CPU to stay at around 40%

7.4.2. Simple / Step Scaling

7.4.2.1. When a CloudWatch alarm is triggered (example CPU >70%) then add 2 units

7.4.2.2. When a CloudWatch alarm is triggered (example CPU < 30%) then remove 1

7.4.3. Scheduled Scaling

7.4.3.1. Anticipate a scaling based on known usage patterns

7.4.3.2. Example: increase the min capacity to 10 at 5 pm on Fridays

7.4.4. Predictive Scaling - continuously forecast load and schedule scaling ahead

7.4.5. Good metrics to scale on

7.4.5.1. CPUUtilization - average CPU utilization across your instances

7.4.5.2. RequestCountPerTarget - to make sure the number of requests per EC2 instances is stable

7.4.5.3. Average Network In/Out - if your application is network bound

7.4.5.4. Any custom metric - that you push using CloudWatch

7.4.6. Scaling Cooldowns

7.4.6.1. After a scaling activity happens, you are in the cooldown period (default 300 seconds)

7.4.6.2. During the cooldown period, the ASG will not launch or terminate additional instances (to allow for metrics to stabilize)

7.4.6.3. Advice - use a ready-to-use AMI to reduce configuration time, so that instances serve requests faster and the cooldown period can be reduced
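
To show how the simple scaling example (add 2 above 70% CPU, remove 1 below 30%) interacts with the min/max size and the cooldown period, here is a small simulation. It is an illustrative model only: the class and method names are hypothetical, time is passed in as plain seconds, and a real ASG reacts to CloudWatch alarms rather than to direct CPU readings.

```python
from dataclasses import dataclass


@dataclass
class AutoScalingGroup:
    min_size: int
    max_size: int
    desired: int
    cooldown_seconds: int = 300                 # default cooldown from the notes
    last_scaling_time: float = float("-inf")    # no scaling activity yet

    def evaluate(self, avg_cpu: float, now: float) -> int:
        """Apply the simple scaling rules and return the desired capacity."""
        # During the cooldown period, no instances are launched or terminated.
        if now - self.last_scaling_time < self.cooldown_seconds:
            return self.desired

        if avg_cpu > 70:
            change = 2       # scale out: add 2 units
        elif avg_cpu < 30:
            change = -1      # scale in: remove 1 unit
        else:
            change = 0

        # Never go below the minimum or above the maximum size.
        new_desired = max(self.min_size, min(self.max_size, self.desired + change))
        if new_desired != self.desired:
            self.desired = new_desired
            self.last_scaling_time = now  # a scaling activity starts the cooldown
        return self.desired
```

For example, starting from `AutoScalingGroup(min_size=1, max_size=5, desired=2)`, a CPU reading of 85% at `now=0` raises the desired capacity to 4, and a second high reading at `now=100` is ignored because the group is still in its cooldown.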

8. RDS

9. S3

9.1. Amazon S3 allows people to store objects in buckets

9.2. Buckets must have a globally unique name

9.3. Buckets are defined at the region level

9.4. S3 looks like a global service but buckets are created in a region

9.5. There's no concept of directories within buckets (although the UI will trick you to think otherwise)

9.6. Object values are the content of the body

9.6.1. Max object size is 5 TB

9.6.2. If uploading more than 5 GB, must use multi-part upload

9.6.3. Metadata

9.6.4. Tags

9.6.5. Version ID
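
The size limits above (5 TB per object, multi-part upload above 5 GB) can be checked before an upload starts. This is a sketch under assumptions: the helper names are hypothetical, and binary units (GiB) are assumed for the limits.

```python
MIB = 1024 ** 2
GIB = 1024 ** 3
SINGLE_UPLOAD_LIMIT = 5 * GIB        # above this, multi-part upload is required
MAX_OBJECT_SIZE = 5 * 1024 * GIB     # 5 TB maximum object size


def needs_multipart(size_bytes: int) -> bool:
    """Return True if the object is too large for a single upload."""
    if size_bytes > MAX_OBJECT_SIZE:
        raise ValueError("object exceeds the 5 TB maximum object size")
    return size_bytes > SINGLE_UPLOAD_LIMIT


def part_count(size_bytes: int, part_size_bytes: int) -> int:
    """Number of parts needed for a given part size (ceiling division)."""
    return -(-size_bytes // part_size_bytes)
```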

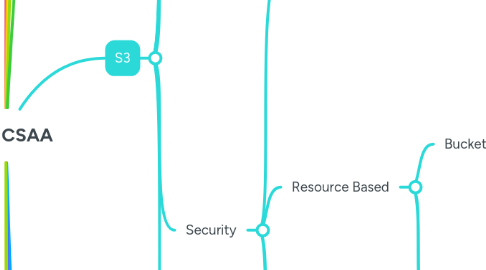

9.7. Security

9.7.1. User-Based

9.7.1.1. IAM Policies - which API calls should be allowed for a specific user from IAM

9.7.2. Resource Based

9.7.2.1. Bucket Policies - bucket wide rules from the S3 console - allows cross account

9.7.2.1.1. JSON based policies

9.7.2.1.2. Use

9.7.2.2. Object Access Control List - finer grain (can be disabled)

9.7.2.3. Bucket Access Control List - less common (can be disabled)

9.7.3. Note - an IAM principal can access an S3 object if

9.7.3.1. The user IAM permissions allow it or the resource policy allows it

9.7.3.2. and there's no explicit deny
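
The access rule in the note above reduces to one boolean expression. The function and parameter names below are hypothetical; real policy evaluation has more inputs, and this sketch covers only the rule as stated.

```python
def can_access(iam_allows: bool, resource_policy_allows: bool, explicit_deny: bool) -> bool:
    """(IAM allows OR resource policy allows) AND there is no explicit deny."""
    return (iam_allows or resource_policy_allows) and not explicit_deny
```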

9.7.4. Encryption - encrypt objects in Amazon S3 using encryption keys

9.8. Static Website Hosting

9.8.1. website url

9.8.1.1. bucket-name.s3-website-aws-region.amazonaws.com

9.8.1.2. bucket-name.s3-website.aws-region.amazonaws.com
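
The two URL shapes above differ only in the separator before the region (dash or dot). A small sketch that builds both; the helper name is hypothetical, and which form applies depends on the region, so check the endpoint shown in the bucket's properties.

```python
from typing import Tuple


def website_endpoints(bucket: str, region: str) -> Tuple[str, str]:
    """Return the dash-style and dot-style website endpoints for a bucket."""
    dash_style = f"{bucket}.s3-website-{region}.amazonaws.com"
    dot_style = f"{bucket}.s3-website.{region}.amazonaws.com"
    return dash_style, dot_style
```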

10. SNS

10.1. The "event producer" only sends message to one SNS topic

10.2. As many "event receivers" (subscriptions) as we want to listen to the SNS topic notifications

10.3. Each subscriber to the topic will get all the messages (note: new feature to filter messages)

10.4. Up to 12,500,000 subscriptions per topic

10.5. 100,000 topics limit

10.6. How to publish

10.6.1. Topic Publish (using the SDK)

10.6.1.1. Create a topic

10.6.1.2. Create a subscription (or many)

10.6.1.3. Publish to the topic

10.6.2. Direct Publish (for mobile apps SDK)

10.6.2.1. Create a platform application

10.6.2.2. Create a platform endpoint

10.6.2.3. Publish to the platform endpoint

10.6.2.4. Works with Google GCM, Apple APNS, Amazon ADM

10.7. Security

10.7.1. Encryption

10.7.1.1. In-flight encryption using HTTPS API

10.7.1.2. At-rest encryption using KMS keys

10.7.1.3. Client-side encryption if the client wants to perform encryption/decryption itself

10.7.2. Access Controls - IAM policies to regulate access to the SNS API

10.7.3. SNS Access Policies - similar to S3 bucket policies

10.7.3.1. Useful for cross-account access to SNS topics

10.7.3.2. Useful for allowing other services to write to an SNS topic

10.8. SNS + SQS = Fan Out

10.8.1. Push once in SNS, receive in all SQS queues that are subscribers

10.8.2. Fully decoupled, no data loss

10.8.3. SQS allows for: data persistence, delayed processing and retries of work

10.8.4. Ability to add more SQS subscribers over time

10.8.5. Make sure your SQS queue access policy allows for SNS to write

10.8.6. Cross-Region Delivery - works with SQS Queues in other regions

10.9. S3 Events to multiple queues

10.9.1. For the same combination of event type and prefix you can only have one S3 Event rule

10.9.2. If you want to send the same S3 event to many SQS queues, use fan-out

10.10. SNS FIFO Topic

10.10.1. Similar features as SQS FIFO

10.10.2. Limited throughput (same throughput as SQS FIFO)

10.10.3. Can have SQS Standard and FIFO queues as subscribers

10.11. Message Filtering

10.11.1. JSON policy used to filter messages sent to SNS topic's subscriptions

10.11.2. If a subscription doesn't have a filter policy, it receives every message
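
A simplified sketch of how a filter policy decides whether a subscription receives a message. It assumes exact string matching on message attributes only; real SNS filter policies support more operators, and the function name `matches` is hypothetical.

```python
from typing import Dict, List, Optional


def matches(filter_policy: Optional[Dict[str, List[str]]],
            attributes: Dict[str, str]) -> bool:
    """Return True if a message with these attributes is delivered."""
    if not filter_policy:
        return True  # no filter policy: the subscription receives every message
    # Every key in the policy must be present with one of the allowed values.
    return all(attributes.get(key) in allowed
               for key, allowed in filter_policy.items())
```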

11. SQS

11.1. Attributes

11.1.1. Unlimited throughput, unlimited number of messages in queue

11.1.2. Default retention of messages: 4 days, maximum of 14 days

11.1.3. Low latency (< 10ms on publish and receive)

11.1.4. Limitation of 256KB per message sent

11.2. Can have duplicate messages (at-least-once delivery, occasionally)

11.3. Can have out of order messages (best effort ordering)

11.4. Producing Messages

11.4.1. Produced to SQS using the SDK (SendMessage API)

11.4.2. The message is persisted in SQS until a consumer deletes it

11.4.3. Message retention: default 4 days, up to 14 days

11.5. Consuming Messages

11.5.1. Consumers (running on EC2 instances, servers, or AWS Lambda)

11.5.2. Poll SQS for messages (receive up to 10 messages at a time)

11.5.3. Process the messages (example: insert the message into an RDS database)

11.5.4. Delete the messages using the DeleteMessage API

11.6. Multiple EC2 Instances

11.6.1. consumers receive and process messages in parallel

11.6.2. At-least-once delivery

11.6.3. Best-effort message ordering

11.6.4. Consumers delete messages after processing them

11.6.5. We can scale consumers horizontally to improve throughput of processing

11.7. Security

11.7.1. Encryption

11.7.1.1. In-flight encryption using HTTPS API

11.7.1.2. At-rest encryption using KMS keys

11.7.1.3. Client-side encryption if the client wants to perform encryption/decryption itself

11.7.2. Access Controls - IAM policies to regulate access to the SQS API

11.7.3. SQS Access Policies - similar to S3 bucket policies

11.7.3.1. Useful for cross-account access to SQS queues

11.7.3.2. Useful for allowing other services to write to an SQS queue

11.8. Message Visibility Timeout

11.8.1. After a message is polled by a consumer, it becomes invisible to other consumers

11.8.2. By default, the "message visibility timeout" is 30 seconds

11.8.3. That means the message has 30 seconds to be processed

11.8.4. After the message visibility timeout is over, the message is "visible" in SQS

11.8.5. If a message is not processed within the visibility timeout, it will be processed twice

11.8.6. A consumer could call the ChangeMessageVisibility API to get more time

11.8.7. If visibility timeout is high and consumer crashes, re-processing will take time

11.8.8. If visibility timeout is too low, we may get duplicates
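
The visibility timeout behavior above can be illustrated with a tiny in-memory model. This is not the SQS API: the class `TinyQueue` and its methods are hypothetical, time is passed in explicitly as seconds, and only one message is returned per receive.

```python
from typing import Dict, Optional, Tuple


class TinyQueue:
    """In-memory model of a queue with a visibility timeout (illustration only)."""

    def __init__(self, visibility_timeout: float = 30.0):
        self.visibility_timeout = visibility_timeout
        self._messages: Dict[int, str] = {}            # message id -> body
        self._invisible_until: Dict[int, float] = {}   # message id -> time it reappears
        self._next_id = 0

    def send(self, body: str) -> int:
        msg_id = self._next_id
        self._next_id += 1
        self._messages[msg_id] = body
        self._invisible_until[msg_id] = 0.0            # visible immediately
        return msg_id

    def receive(self, now: float) -> Optional[Tuple[int, str]]:
        """Return the first visible message and hide it for the timeout."""
        for msg_id, body in self._messages.items():
            if self._invisible_until[msg_id] <= now:
                self._invisible_until[msg_id] = now + self.visibility_timeout
                return msg_id, body
        return None

    def change_visibility(self, msg_id: int, now: float, timeout: float) -> None:
        """Give the current consumer more (or less) time to finish."""
        self._invisible_until[msg_id] = now + timeout

    def delete(self, msg_id: int) -> None:
        self._messages.pop(msg_id, None)
        self._invisible_until.pop(msg_id, None)
```

A message that is received but never deleted comes back after the timeout, which is exactly how a slow consumer causes a message to be processed twice.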

11.9. Long Polling

11.9.1. When a consumer requests messages from the queue, it can optionally "wait" for messages to arrive if there are none in the queue

11.9.2. This is called Long Polling

11.9.3. Long Polling decreases the number of API calls made to SQS while increasing the efficiency and reducing the latency of your application

11.9.4. The wait time can be between 1 and 20 seconds

11.9.5. Long Polling is preferable to Short Polling

11.9.6. Long polling can be enabled at the queue level or at the API level using WaitTimeSeconds

11.10. FIFO Queue

11.10.1. Limited throughput: 300 msg/s without batching, 3,000 msg/s with batching

11.10.2. Exactly-once send capability (by removing duplicates using Deduplication ID)

11.10.3. Messages are processed in order by the consumer

11.10.4. Ordering by Message Group ID (all messages in the same group are ordered) - mandatory parameter
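
A sketch of the two FIFO properties above: deduplication by Deduplication ID and ordering within a message group. The class `TinyFifo` is hypothetical and in-memory. The 5-minute deduplication interval is the documented SQS FIFO behavior rather than something stated in these notes.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Optional

DEDUP_WINDOW_SECONDS = 300  # SQS FIFO deduplication interval (5 minutes)


class TinyFifo:
    """In-memory model of FIFO deduplication and per-group ordering."""

    def __init__(self) -> None:
        self._groups: Dict[str, Deque[str]] = defaultdict(deque)  # group id -> bodies
        self._seen: Dict[str, float] = {}                         # dedup id -> accept time

    def send(self, body: str, group_id: str, dedup_id: str, now: float) -> bool:
        """Accept the message unless its dedup id was seen within the window."""
        first_seen = self._seen.get(dedup_id)
        if first_seen is not None and now - first_seen < DEDUP_WINDOW_SECONDS:
            return False                      # duplicate: silently dropped
        self._seen[dedup_id] = now
        self._groups[group_id].append(body)
        return True

    def receive(self, group_id: str) -> Optional[str]:
        """Return the oldest message of the group, preserving send order."""
        queue = self._groups[group_id]
        return queue.popleft() if queue else None
```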

12. Kinesis

12.1. Kinesis Data Streams

12.1.1. Collect and store streaming data in real-time

12.1.2. Retention up to 365 days

12.1.3. Ability to reprocess (replay) data by consumers

12.1.4. Data can't be deleted from Kinesis (until it expires)

12.1.5. Data up to 1 MB (typical use case is a lot of "small" real-time data)

12.1.6. Data ordering guarantee for data with the same "Partition Key"

12.1.7. At-rest KMS encryption, in-flight HTTPS encryption

12.1.8. Kinesis Producer Library (KPL) to write an optimized producer application

12.1.9. Kinesis Client Library (KCL) to write an optimized consumer application

12.1.10. Capacity Modes

12.1.10.1. Provisioned mode

12.1.10.1.1. Choose number of shards

12.1.10.1.2. Each shard gets 1 MB/s in (or 1000 records per second)

12.1.10.1.3. Each shard gets 2 MB/s out

12.1.10.1.4. Scale manually to increase or decrease the number of shards

12.1.10.1.5. You pay per shard provisioned per hour
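
Given the per-shard limits above (1 MB/s or 1000 records/s in, 2 MB/s out), a rough shard count for provisioned mode can be estimated. The function name is hypothetical, and the estimate assumes traffic is spread evenly across shards.

```python
import math


def shards_needed(in_mb_per_s: float, records_per_s: float, out_mb_per_s: float) -> int:
    """Smallest shard count that satisfies all three per-shard limits."""
    return max(
        1,                                   # a stream has at least one shard
        math.ceil(in_mb_per_s / 1.0),        # 1 MB/s in per shard
        math.ceil(records_per_s / 1000),     # 1000 records/s in per shard
        math.ceil(out_mb_per_s / 2.0),       # 2 MB/s out per shard
    )
```

Whichever limit is hit first determines the shard count, so a stream of many tiny records can need more shards than its byte volume suggests.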

12.1.10.2. On-demand mode

12.1.10.2.1. No need to provision or manage the capacity

12.1.10.2.2. Default capacity provisioned (4 MB/s in or 4000 records per second)

12.1.10.2.3. Scales automatically based on observed throughput peak during the last 30 days

12.1.10.2.4. Pay per stream per hour & data in/out per GB

12.2. Amazon Data Firehose (Kinesis Data Firehose)

12.2.1. Fully managed Service

12.2.1.1. Amazon Redshift / Amazon S3 / Amazon OpenSearch Service

12.2.1.2. 3rd Party: Splunk / MongoDB / Datadog / NewRelic / ...

12.2.1.3. Custom HTTP Endpoint

12.2.2. Automatic scaling, serverless, pay for what you use

12.2.3. Near real-time with buffering capability based on size / time

12.2.4. Supports CSV, JSON, Parquet, Avro, Raw Text, Binary data

12.2.5. Conversions to Parquet / ORC, compressions with gzip / snappy

12.2.6. Custom data transformations using AWS Lambda (ex: CSV to JSON)

12.3. Kinesis Data Streams vs Firehose

12.3.1. Kinesis Data Streams

12.3.1.1. Streaming data collection

12.3.1.2. Producer & Consumer code

12.3.1.3. Real-Time

12.3.1.4. Provisioned / On-Demand mode

12.3.1.5. Data Storage up to 365 days

12.3.1.6. Replay Capability

12.4. Amazon Data Firehose

12.4.1. Load Streaming data into S3 / Redshift / OpenSearch / 3rd Party / custom HTTP

12.4.2. Fully managed

12.4.3. Near real-time

12.4.4. Automatic scaling

12.4.5. No data storage

12.4.6. Doesn't support replay capability

12.5. SQS vs SNS vs Kinesis

12.5.1. SQS

12.5.1.1. Consumer "pull data"

12.5.1.2. Data is deleted after being consumed

12.5.1.3. Can have as many workers (consumers) as we want

12.5.1.4. No need to provision throughput

12.5.1.5. Ordering guarantees only FIFO queues

12.5.1.6. Individual message delay capability

12.5.2. SNS

12.5.2.1. Push data to many subscribers

12.5.2.2. Up to 12,500,000 subscribers

12.5.2.3. Data is not persisted (lost if not delivered)

12.5.2.4. Pub / Sub

12.5.2.5. Up to 100,000 topics

12.5.2.6. No need to provision throughput

12.5.2.7. Integrates with SQS for fanout architecture

12.5.2.8. FIFO capability for SQS FIFO

12.5.3. Kinesis

12.5.3.1. Standard pull data

12.5.3.1.1. 2 MB per shard

12.5.3.2. Enhanced fanout - push data

12.5.3.2.1. 2 MB per shard per consumer

12.5.3.3. Possibility to replay data

12.5.3.4. Meant for real-time big data, analytics and ETL

12.5.3.5. Ordering at the shard level

12.5.3.6. Data expires after X days

12.5.3.7. Provisioned mode or on-demand capacity mode

13. Global Accelerator

13.1. Leverage the AWS internal network to route to your application

13.2. 2 Anycast IPs are created for your application

13.3. The Anycast IPs send traffic directly to Edge Locations

13.4. The Edge Locations send the traffic to your application

13.5. Works with Elastic IP, EC2 instances, ALB, NLB, public or private

13.6. Consistent Performance

13.6.1. Intelligent routing to lowest latency and fast regional failover

13.6.2. No issue with client cache (because the IP doesn't change)

13.6.3. Internal AWS network

13.7. Health checks

13.7.1. Global Accelerator performs a health check of your applications

13.7.2. Helps make your application global (failover less than 1 minute for unhealthy)

13.7.3. Great for disaster recovery (thanks to the health checks)

13.8. Security

13.8.1. Only 2 external IPs need to be whitelisted

13.8.2. DDoS protection thanks to AWS Shield

13.9. Global Accelerator vs CloudFront

13.9.1. They both use the AWS global network and its edge locations around the world

13.9.2. Both services integrate with AWS Shield for DDoS protection

13.9.2.1. CloudFront

13.9.2.1.1. Improves performance for both cacheable content (such as images and videos)

13.9.2.1.2. and dynamic content (such as API acceleration and dynamic site delivery)

13.9.2.1.3. Content is served at the edge

13.9.2.2. Global Accelerator

13.9.2.2.1. Improves performance for a wide range of applications over TCP or UDP

13.9.2.2.2. Proxying packets at the edge to applications running in one or more AWS Regions

13.9.2.2.3. Good fit for non-HTTP use cases, such as gaming (UDP), IoT (MQTT) or VoIP

13.9.2.2.4. Good for HTTP use cases that require static IP addresses

13.9.2.2.5. Good for HTTP use cases that require deterministic, fast regional failover

14. Route 53

15. CloudFront

15.1. CDN

15.2. Improves read performance, content is cached at the edge

15.3. Improves user experience

15.4. 216 Points of Presence globally (edge locations)

15.5. DDoS protection (because worldwide), integration with Shield, AWS Web Application Firewall

15.6. Origins

15.6.1. S3 Bucket

15.6.1.1. For distributing files and caching them at the edge

15.6.1.2. For uploading files to S3 through CloudFront

15.6.1.3. Secured using Origin Access Control (OAC)

15.6.2. VPC Origin

15.6.2.1. For applications hosted in VPC private subnets

15.6.2.2. Application Load Balancer / Network Load Balancer / EC2 Instances

15.6.2.2.1. Allows you to deliver content from your applications hosted in your VPC private subnets (no need to expose them on the Internet)

15.6.2.3. Deliver traffic to private

15.6.2.3.1. ALB

15.6.2.3.2. NLB

15.6.2.3.3. EC2 Instances

15.6.3. Custom Origin (HTTP)

15.6.3.1. S3 website (must first enable the bucket as a static website)

15.6.3.2. Any public HTTP backend you want

15.7. CloudFront vs S3 Cross Region Replication

15.7.1. CloudFront

15.7.1.1. Global Edge Network

15.7.1.2. Files are cached for a TTL (maybe a day)

15.7.1.3. Great for static content that must be available everywhere

15.7.2. S3 Cross Region Replication

15.7.2.1. Must be setup for each region you want replication to happen

15.7.2.2. Files are updated in near real-time

15.7.2.3. Read only

15.7.2.4. Great for dynamic content that needs to be available at low-latency in few regions

15.8. Geo Restriction

15.8.1. You can restrict who can access your distribution

15.8.1.1. Allowlist - allow your users to access your content only if they're in one of the countries on a list of approved countries

15.8.1.2. Blocklist - prevent your users from accessing your content if they're in one of the countries on a list of banned countries

15.8.2. The country is determined using a 3rd party geo-ip database

15.8.3. Use case - Copyright Laws to control access to content
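
The allowlist and blocklist modes above are opposite membership tests on the viewer's country. A minimal sketch; the function name and the mode strings are assumptions, and the country is assumed to have already been resolved from the geo-IP lookup.

```python
from typing import Set


def is_request_allowed(country: str, mode: str, countries: Set[str]) -> bool:
    """Decide access for a viewer country under a geo restriction."""
    if mode == "allowlist":
        return country in countries       # only approved countries get through
    if mode == "blocklist":
        return country not in countries   # everyone except banned countries
    raise ValueError(f"unknown mode: {mode}")
```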

15.9. Pricing

15.9.1. CloudFront Edge locations are all around the world

15.9.2. The cost of data out per edge location varies

15.9.3. Price classes

15.9.3.1. You can reduce the number of edge locations for cost reduction

15.9.3.1.1. Three price classes

15.10. Cache Invalidations

15.10.1. If you update the backend origin, CloudFront doesn't know about it and will only get the refreshed content after the TTL has expired

15.10.2. However, you can force an entire or partial cache refresh (thus bypassing the TTL) by performing a CloudFront Invalidation

15.10.3. You can invalidate all files (*) or a specific path (/images/*)
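
A sketch of which cached objects an invalidation path would cover. It assumes the wildcard appears only as the last character of the path, as in the examples above (`/*`, `/images/*`). The function name is hypothetical.

```python
def is_invalidated(object_path: str, invalidation_path: str) -> bool:
    """Return True if the cached object falls under the invalidation path."""
    if invalidation_path.endswith("*"):
        # Trailing wildcard: match everything sharing the prefix before it.
        return object_path.startswith(invalidation_path[:-1])
    return object_path == invalidation_path   # no wildcard: exact path only
```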

16. VPC

16.1. General

16.1.1. All new AWS accounts have a default VPC

16.1.2. Default VPC has Internet connectivity and all EC2 instances inside it have public IPv4 addresses

16.1.3. New EC2 instances are launched into the default VPC if no subnet is specified

16.1.4. We also get public and private IPv4 DNS names

16.1.5. You can have multiple VPCs in an AWS region (max 5 per region - soft limit)

16.1.6. Your VPC CIDR should not overlap with your other networks

16.2. Max CIDR per VPC is 5, for each CIDR

16.2.1. Min size is /28 (16 IP addresses)

16.2.2. Max size is /16 (65536 IP addresses)
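
The /28 and /16 bounds above can be verified with Python's standard `ipaddress` module. The helper name `vpc_cidr_is_valid_size` is hypothetical.

```python
import ipaddress


def vpc_cidr_is_valid_size(cidr: str) -> bool:
    """Return True if the prefix length is between /16 and /28 inclusive."""
    network = ipaddress.ip_network(cidr)
    return 16 <= network.prefixlen <= 28


smallest = ipaddress.ip_network("10.0.0.0/28").num_addresses   # 16 addresses
largest = ipaddress.ip_network("10.0.0.0/16").num_addresses    # 65536 addresses
```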

16.3. Because VPC is private, only the Private IPv4 ranges are allowed

16.3.1. 10.0.0.0 - 10.255.255.255

16.3.2. 172.16.0.0 - 172.31.255.255

16.3.3. 192.168.0.0 - 192.168.255.255
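
The three ranges above correspond to the CIDR blocks 10.0.0.0/8, 172.16.0.0/12 and 192.168.0.0/16. A short check that a candidate VPC CIDR sits inside one of them; the function name is hypothetical.

```python
import ipaddress

PRIVATE_BLOCKS = [
    ipaddress.ip_network("10.0.0.0/8"),      # 10.0.0.0 - 10.255.255.255
    ipaddress.ip_network("172.16.0.0/12"),   # 172.16.0.0 - 172.31.255.255
    ipaddress.ip_network("192.168.0.0/16"),  # 192.168.0.0 - 192.168.255.255
]


def is_private_range(cidr: str) -> bool:
    """Return True if the CIDR is fully contained in a private IPv4 block."""
    network = ipaddress.ip_network(cidr)
    return any(network.subnet_of(block) for block in PRIVATE_BLOCKS)
```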

16.4. Subnets

16.4.1. AWS reserves 5 IP addresses (first 4 and last 1) in each subnet

16.4.2. These 5 IP addresses are not available for use and can't be assigned to an EC2 instance

16.4.2.1. 10.0.0.0 - Network Address

16.4.2.2. 10.0.0.1 - reserved by AWS for the VPC router

16.4.2.3. 10.0.0.2 - reserved by AWS to Amazon provided DNS

16.4.2.4. 10.0.0.3 - reserved by AWS for future use

16.4.2.5. 10.0.0.255 - Network Broadcast Address. AWS does not support broadcast in a VPC, therefore the address is reserved.
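
The reserved addresses listed above are for the example subnet 10.0.0.0/24. For any subnet CIDR they can be computed as follows; the function names are hypothetical.

```python
import ipaddress
from typing import List


def reserved_addresses(subnet_cidr: str) -> List[str]:
    """Return the first four addresses and the last one of the subnet."""
    network = ipaddress.ip_network(subnet_cidr)
    first_four = [str(network.network_address + offset) for offset in range(4)]
    return first_four + [str(network.broadcast_address)]


def usable_address_count(subnet_cidr: str) -> int:
    """Addresses left for your resources after the 5 reserved ones."""
    return ipaddress.ip_network(subnet_cidr).num_addresses - 5
```

This matters for sizing: a /28 subnet has 16 addresses but only 11 are usable.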

16.5. Internet Gateway

16.5.1. Allows resources in a VPC to connect to the internet

16.5.2. It scales horizontally and is highly available and redundant

16.5.3. Must be created separately from a VPC

16.5.4. One VPC can only be attached to one IGW and vice versa

16.5.5. Internet Gateways on their own do not allow Internet access

16.5.6. Route tables must also be edited

16.6. NAT Instance

16.6.1. Network Address Translation

16.6.2. Allows EC2 instances in private subnets to connect to the Internet

16.6.3. Must be launched in a public subnet

16.6.4. Must disable EC2 setting - source/ destination check

16.6.5. Must have Elastic IP attached to it

16.6.6. Comments

16.6.6.1. Pre-configured Amazon Linux AMI is available

16.6.6.2. Not highly available / resilient setup out of the box

16.6.6.2.1. You need to create an ASG in multi-AZ + resilient user-data script

16.6.6.3. Internet traffic bandwidth depends on EC2 instance type

16.6.6.4. You must manage Security Groups & Rules

16.6.6.4.1. Inbound

16.6.6.4.2. Outbound

16.7. NAT Gateway

16.7.1. AWS-managed NAT, higher bandwidth, high availability, no administration

16.7.2. Pay per hour for usage and bandwidth

16.7.3. NATGW is created in a specific AZ and uses an Elastic IP

16.7.4. Can't be used by EC2 instances in the same subnet (only from other subnets)

16.7.5. Requires an IGW (Private subnet -> NATGW -> IGW)

16.7.6. 5 Gbps of bandwidth with automatic scaling up to 100 Gbps

16.7.7. No Security Groups to manage / required

16.7.8. NATGW is resilient within a single AZ

16.7.9. Must create multiple NAT Gateways in multiple AZs for fault-tolerance

16.7.10. There is no cross-AZ failover needed because if an AZ goes down, it doesn't need NAT

16.8. Network Access Control List (NACL)

16.8.1. NACLs are like a firewall that controls traffic from and to subnets

16.8.2. One NACL per subnet, new subnets are assigned the Default NACL

16.8.3. You define NACL Rules

16.8.3.1. Rules have a number (1-32766), higher precedence with a lower number

16.8.3.2. First rule match will drive the decision

16.8.3.3. The last rule is an asterisk and denies a request in case of no rule match

16.8.3.4. AWS recommends adding rules by increment of 100
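
The rule evaluation above (lowest number first, first match wins, final asterisk rule denies) can be sketched as follows. This is a simplification that matches on source IP only, ignoring protocol and ports. The `Rule` type and `evaluate` function are hypothetical.

```python
import ipaddress
from typing import List, NamedTuple


class Rule(NamedTuple):
    number: int    # rule number, lower means higher precedence
    cidr: str      # source CIDR the rule applies to
    allow: bool    # True = ALLOW, False = DENY


def evaluate(rules: List[Rule], source_ip: str) -> bool:
    """Return True if traffic from source_ip is allowed."""
    address = ipaddress.ip_address(source_ip)
    for rule in sorted(rules, key=lambda r: r.number):
        if address in ipaddress.ip_network(rule.cidr):
            return rule.allow          # first matching rule drives the decision
    return False                       # the final "*" rule denies everything else
```

Numbering in increments of 100 leaves room to slot a DENY for a single IP in front of a broad ALLOW later.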

16.8.4. Newly created NACLs will deny everything

16.8.5. NACL are a great way of blocking a specific IP address at the subnet level

16.8.6. Default NACL

16.8.6.1. Accepts everything inbound/outbound with the subnets it's associated with

16.8.6.2. Do not modify the Default NACL, instead create custom NACLs

16.9. Security Groups vs NACLs

16.9.1. Security Group

16.9.1.1. Operates at the instance level

16.9.1.2. Supports allow rules only

16.9.1.3. Stateful - return traffic is automatically allowed, regardless of any rules

16.9.1.4. All rules are evaluated before deciding whether to allow traffic

16.9.1.5. Applies to an EC2 instance when specified by someone

16.9.2. NACL

16.9.2.1. Operates at the subnet level

16.9.2.2. Supports allow and deny rules

16.9.2.3. Stateless - return traffic must be explicitly allowed by rules (think of ephemeral ports)

16.9.2.4. Rules are evaluated in order (lowest to highest) when deciding whether to allow traffic, first match wins

16.9.2.5. Automatically applies to all EC2 instances in the subnet that it's associated with

16.10. VPC Peering

16.10.1. Privately connect two VPCs using AWS network

16.10.2. Make them behave as if they were in the same network

16.10.3. Must not have overlapping CIDRs

16.10.4. VPC Peering connection is not transitive (must be established for each pair of VPCs that need to communicate with one another)

16.10.5. You must update route tables in each VPC's subnets to ensure EC2 instances can communicate with each other

16.10.6. Good to know

16.10.6.1. You can create VPC Peering connection between VPCs in different AWS accounts / regions

16.10.6.2. You can reference a security group in a peered VPC (works cross accounts - same region)
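
Two of the peering constraints above, non-overlapping CIDRs and non-transitivity, can be checked with a short sketch. The function names and the VPC labels are hypothetical.

```python
import ipaddress
from typing import FrozenSet, Set


def cidrs_overlap(cidr_a: str, cidr_b: str) -> bool:
    """Return True if the two CIDRs share any address (peering not possible)."""
    return ipaddress.ip_network(cidr_a).overlaps(ipaddress.ip_network(cidr_b))


def can_talk(peerings: Set[FrozenSet[str]], vpc_a: str, vpc_b: str) -> bool:
    """Two VPCs can talk only through a direct peering, never via a third VPC."""
    return frozenset((vpc_a, vpc_b)) in peerings
```

With peerings A-B and B-C, `can_talk` returns False for A and C: a separate A-C peering is required.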

16.11. VPC Endpoints

16.11.1. Every service is publicly exposed (public URL)

16.11.2. VPC Endpoints (powered by AWS PrivateLink) allow you to connect to AWS services using a private network instead of using the public Internet

16.11.3. They're redundant and scale horizontally

16.11.4. They remove the need of IGW, NATGW to access AWS Services

16.11.5. In case of issues

16.11.5.1. Check DNS Setting Resolution in your VPC

16.11.5.2. Check Route Tables

16.11.6. Types of endpoints

16.11.6.1. Interface Endpoints (powered by PrivateLink)

16.11.6.1.1. Provisions an ENI (private IP address) as an entry point (must attach a Security Group)

16.11.6.1.2. Supports most AWS services

16.11.6.1.3. $ per hour + $ per GB of data processed

16.11.6.2. Gateway Endpoints

16.11.6.2.1. Provisions a gateway and must be used as a target in a route table (does not use security groups)

16.11.6.2.2. Supports both S3 and DynamoDB

16.11.6.2.3. Free

16.11.6.3. Gateway or Interface Endpoint for S3

16.11.6.3.1. Gateway is most likely going to be preferred all the time at the exam

16.11.6.3.2. Cost - free for Gateway, $ for interface endpoint

16.11.6.3.3. Interface Endpoint is preferred when access is required from on-premises (Site-to-Site VPN or Direct Connect), a different VPC or a different region

16.11.7. Lambda in VPC accessing DynamoDB

16.11.7.1. DynamoDB is a public service from AWS

16.11.7.2. Option 1 - access from the public internet

16.11.7.2.1. Because Lambda is in a VPC, it needs a NAT Gateway in a public subnet and an internet gateway

16.11.7.3. Option 2 - (better & free) - Access from the private VPC network

16.11.7.3.1. Deploy a VPC Gateway endpoint for DynamoDB

16.11.7.3.2. Change the Route Tables

16.12. VPC Flow Logs

16.12.1. Capture information about IP traffic going into your interfaces

16.12.1.1. VPC Flow Logs

16.12.1.2. Subnet Flow Logs

16.12.1.3. Elastic Network Interface Flow Logs

16.12.2. Helps to monitor & troubleshoot connectivity issues

16.12.3. Flow logs data can go to S3, CloudWatch Logs and Kinesis Data Firehose

16.12.4. Captures network information from AWS managed interfaces too - ELB, RDS, ElastiCache, Redshift, WorkSpaces, NATGW, Transit Gateway

16.12.5. Architectures

16.12.5.1. VPC Flow Logs -> CloudWatch Logs -> CloudWatch Contributor Insights -> Top 10 IP addresses

16.12.5.2. VPC Flow Logs -> CloudWatch Logs -> CloudWatch Alarm -> Alert Amazon SNS

16.12.5.3. VPC Flow Logs -> S3 Bucket -> Amazon Athena -> Amazon QuickSight

16.13. AWS Site-to-Site VPN

16.13.1. Virtual Private Gateway (VGW)

16.13.1.1. VPN concentrator on the AWS side of the VPN connection

16.13.1.2. VGW is created and attached to the VPC from which you want to create the Site-to-Site VPN connection

16.13.1.3. Possibility to customize the ASN (Autonomous System Number)

16.13.2. Customer Gateway (CGW)

16.13.2.1. Software application or physical device on customer side of the VPN connection

16.13.2.2. What IP address to use?

16.13.2.2.1. Public Internet-routable IP address for your Customer Gateway device

16.13.2.2.2. If it's behind a NAT device that's enabled for NAT traversal (NAT-T), use the public IP address of the NAT

16.13.2.3. Important step - enable Route Propagation for the Virtual Private Gateway in the route table that is associated with your subnets

16.13.2.4. If you need to ping your EC2 instances from on-premises, make sure you add the ICMP protocol on the inbound of your security groups

16.14. AWS VPN CloudHub

16.14.1. Provide secure communication between multiple sites, if you have multiple VPN connections

16.14.2. Low-cost hub-and-spoke model for primary or secondary network connectivity between different locations (VPN only)

16.14.3. It's a VPN connection so it goes over the public Internet

16.14.4. To set it up, connect multiple VPN connections on the same VGW, set up dynamic routing and configure route tables.

16.15. Direct Connect (DX)

16.15.1. Provides a dedicated private connection from a remote network to your VPC

16.15.2. Dedicated connection must be setup between your DC and AWS Direct Connect locations

16.15.3. You need to setup a Virtual Private Gateway on your VPC

16.15.4. Access public resources and private on same connection

16.15.5. Use Cases

16.15.5.1. Increase bandwidth throughput - working with large data sets - lower cost

16.15.5.2. More consistent network experience - applications using real-time data feeds

16.15.5.3. Hybrid Environments (on prem + cloud)

16.15.6. Supports both IPv4 and IPv6

16.15.7. Direct Connect Gateway

16.15.7.1. If you want to setup a Direct Connect to one or more VPC in many different regions (same account), you must use a Direct Connect Gateway

16.15.8. Connection Types

16.15.8.1. Dedicated Connections - 1 Gbps, 10 Gbps and 100 Gbps capacity

16.15.8.1.1. Physical ethernet port dedicated to a customer

16.15.8.1.2. Request made to AWS first, then completed by AWS Direct Connect Partners

16.15.8.2. Hosted Connections - 50 Mbps, 500 Mbps to 10 Gbps

16.15.8.2.1. Connection requests are made via AWS Direct Connect Partners

16.15.8.2.2. Capacity can be added or removed on demand

16.15.8.2.3. 1, 2, 5, 10 Gbps available at select AWS Direct Connect Partners

16.15.8.2.4. Lead times are often longer than 1 month to establish a new connection

16.15.9. Encryption

16.15.9.1. Data in transit is not encrypted but is private

16.15.9.2. AWS Direct Connect + VPN provides an IPsec-encrypted private connection

16.15.9.3. Good for an extra level of security, but slightly more complex to put in place

16.15.10. Resiliency

16.15.10.1. Maximum resilience is achieved by separate connections terminating on separate devices in more than one location.

16.15.11. Site-to-Site VPN connection as a backup

16.15.11.1. In case Direct Connect fails you can set up a backup Direct Connect connection (expensive) or a Site-to-Site VPN connection

16.16. Transit Gateway

16.16.1. For having transitive peering between thousands of VPCs and on-premises, hub-and-spoke (star) connection

16.16.2. Regional resource, can work cross-region

16.16.3. Share cross-account using Resource Access Manager (RAM)

16.16.4. You can peer Transit Gateways across regions

16.16.5. Route Tables - limit which VPC can talk with other VPC

16.16.6. Works with Direct Connect Gateway, VPN connections

16.16.7. Supports IP Multicast (not supported by any other AWS service)

16.16.8. Site-to-Site VPN ECMP

16.16.8.1. ECMP - Equal-cost multi-path routing

16.16.8.2. Routing strategy that allows forwarding a packet over multiple best paths

16.16.8.3. Use case - create multiple Site-to-Site VPN connections to increase the bandwidth of your connection to AWS
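
One common way to realize ECMP is to hash each flow onto one of the equal-cost paths, so that packets of the same flow stay on the same path while different flows spread out. The sketch below illustrates that idea only; the hash choice, the `Flow` tuple layout and the function name are assumptions, not how Transit Gateway is implemented.

```python
import hashlib
from typing import Tuple

# Hypothetical flow key: source IP, source port, destination IP, destination port, protocol
Flow = Tuple[str, int, str, int, str]


def pick_tunnel(flow: Flow, tunnel_count: int) -> int:
    """Map a flow to one of `tunnel_count` equal-cost VPN tunnels."""
    if tunnel_count < 1:
        raise ValueError("at least one tunnel is required")
    key = "|".join(str(part) for part in flow).encode()
    digest = hashlib.sha256(key).digest()
    # The same flow always hashes to the same tunnel index.
    return int.from_bytes(digest[:8], "big") % tunnel_count
```

Because a single flow sticks to one tunnel, the extra bandwidth shows up as aggregate throughput across many flows rather than as a faster single connection.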

16.17. Traffic Mirroring

16.17.1. Allows you to capture and inspect network traffic in your VPC

16.17.2. Route the traffic to security appliances that you manage.

16.17.3. Capture the traffic

16.17.3.1. From (Source) - ENIs

16.17.3.2. To (Targets) - an ENI or a Network Load Balancer

16.17.4. Capture all packets or capture the packets of your interest (optionally, truncate packets)

16.17.5. Source and Target can be in the same VPC or different VPCs (VPC Peering)

16.17.6. Use cases - content inspection, threat monitoring, troubleshooting

16.18. IPv6 in VPC

16.18.1. IPv4 cannot be disabled for your VPC and subnets

16.18.2. You can enable IPv6 (they're public IP addresses) to operate in dual-stack mode

16.18.3. Your EC2 instances will get at least a private internal IPv4 and a public IPv6

16.18.4. They can communicate using either IPv4 or IPv6 to the internet through an Internet Gateway

16.19. IPv4 Troubleshooting

16.19.1. IPv4 cannot be disabled for your VPC and subnets

16.19.2. So if you cannot launch an EC2 instance in your subnet

16.19.2.1. It's not because it cannot acquire an IPv6 (the space is very large)

16.19.2.2. It's because there are no available IPv4 in your subnet

16.19.3. Solution - add a new IPv4 CIDR to your VPC and create a new subnet in it

16.20. Egress-only Internet Gateway

16.20.1. Used for IPv6 only (similar to a NAT Gateway but for IPv6)

16.20.2. Allows instances in your VPC outbound connections over IPv6 while preventing the internet from initiating an IPv6 connection to your instances

16.20.3. You must update the Route Tables

16.21. VPC Summary

16.21.1. CIDR - IP Range

16.21.2. VPC - Virtual Private Cloud -> we define a list of IPv4 and IPv6 CIDR

16.21.3. Subnets - tied to an AZ, we define a CIDR

16.21.4. Internet Gateway - at the VPC level, provide IPv4 & IPv6 Internet Access

16.21.5. Route Tables - must be edited to add routes from subnets to the IGW, VPC Peering Connections, VPC Endpoints

16.21.6. Bastion Host - public EC2 instance to SSH into, that has SSH connectivity to EC2 instances in private subnets

16.21.7. NAT Instances - gives Internet access to EC2 instances in private subnets. Old, must be setup in a public subnet, disable Source / Destination check flag

16.21.8. NAT Gateway - managed by AWS, provides scalable Internet access to private EC2 instances, when the target is an IPv4 address

16.21.9. NACL - stateless, subnet rules for inbound and outbound, don't forget Ephemeral Ports

16.21.10. Security Groups - stateful, operate at the EC2 instance level

16.21.11. VPC Peering - connect two VPCs with non overlapping CIDR, non-transitive

16.21.12. VPC Endpoints - provide private access to AWS Services (S3, DynamoDB, CloudFormation, SSM) within a VPC

16.21.13. VPC Flow Logs - can be set up at the VPC / Subnet / ENI Level for Accept and Reject traffic, helps identify attacks, analyze using Athena or CloudWatch Logs Insights

16.21.14. Site-to-Site VPN - setup a Customer Gateway on DC, a Virtual Private Gateway on VPC and site-to-site VPN over public internet

16.21.15. AWS VPN CloudHub - hub-and-spoke VPN model to connect your sites

16.21.16. Direct Connect - setup a Virtual Private Gateway on VPC and establish a direct private connection to an AWS Direct Connect Location

16.21.17. Direct Connect Gateway - setup a Direct Connect to many VPCs in different AWS regions

16.21.18. AWS PrivateLink / VPC Endpoint Services

16.21.18.1. Connect services privately from your service VPC to customers VPC

16.21.18.2. Doesn't need VPC Peering, public Internet, NAT Gateway, Route Tables

16.21.18.3. Must be used with Network Load Balancer & ENI

16.21.19. ClassicLink - connect EC2-Classic EC2 instances privately to your VPC

16.21.20. Transit Gateway - transitive peering connections for VPC, VPN & DX

16.21.21. Traffic Mirroring - copy network traffic from ENIs for further analysis

16.21.22. Egress-only Internet Gateway - like a NAT gateway but IPv6 targets

16.22. Network Costs in AWS per GB - Simplified

16.22.1. Use Private IP instead of Public IP for good savings and better network performance

16.22.2. Use same AZ for maximum savings (at the cost of high availability)

16.23. Minimizing egress traffic network cost

16.23.1. Egress traffic - outbound traffic (from AWS to outside)

16.23.2. Ingress traffic - inbound traffic from outside to AWS (typically free)

16.23.3. Try to keep as much internet traffic within AWS to minimize costs

16.23.4. Direct Connect locations that are co-located in the same AWS Region result in lower cost for egress network

16.24. Network Protection on AWS

16.24.1. Network Access Control Lists

16.24.2. Amazon VPC Security Groups

16.24.3. AWS WAF (protect against malicious requests)

16.24.4. AWS Shield & AWS Shield Advanced

16.24.5. AWS Firewall Manager (to manage them across accounts)

16.24.6. AWS Network Firewall

16.24.6.1. Protect your entire VPC

16.24.6.2. From Layer 3-7

16.24.6.3. Any direction you can inspect

16.24.6.3.1. VPC to VPC traffic

16.24.6.3.2. Outbound to internet

16.24.6.3.3. Inbound from internet

16.24.6.3.4. To / from Direct Connect & Site-to-Site VPN

16.24.6.3.5. Internally the AWS Network Firewall uses the AWS Gateway Load Balancer

16.24.6.3.6. Rules can be centrally managed cross-account by AWS Firewall Manager to apply to many VPCs

16.24.6.3.7. Fine Grained Controls

17. Common Scenarios

17.1. Domain 1 - Design Resilient Architectures

17.1.1. Set up asynchronous data replication to another RDS DB instance hosted in another AWS Region

17.1.1.1. Create a Read Replica

17.1.2. A parallel file system for "hot" (frequently accessed) data

17.1.2.1. Amazon FSx for Lustre

17.1.3. Implement synchronous data replication across AZs with automatic failover in Amazon RDS

17.1.3.1. Enable Multi-AZ deployment in Amazon RDS

17.1.4. Needs a storage service to host "cold" (infrequently accessed) data

17.1.4.1. Amazon S3 Glacier

17.1.5. Set up a relational db and a disaster recovery plan with an RPO of 1 second and RTO of less than a minute

17.1.5.1. Use Amazon Aurora Global Database

17.1.6. Monitor database metrics and send email notifications if a specific threshold has been breached

17.1.6.1. Create an SNS topic and add the topic in the CloudWatch alarm
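
A minimal sketch of what the alarm settings could look like, assuming an RDS instance monitored on CPUUtilization. The instance name, topic ARN, threshold and period values are hypothetical placeholders. The dictionary keys follow the parameters of the CloudWatch PutMetricAlarm API, so the result could be passed to boto3 as `cloudwatch.put_metric_alarm(**params)`; the block itself only builds and prints the parameters.

```python
import json


def build_alarm_params(db_instance_id, topic_arn, threshold_percent=80.0):
    """Build PutMetricAlarm parameters that notify an SNS topic on breach."""
    return {
        "AlarmName": f"{db_instance_id}-high-cpu",  # hypothetical naming scheme
        "Namespace": "AWS/RDS",
        "MetricName": "CPUUtilization",
        "Dimensions": [
            {"Name": "DBInstanceIdentifier", "Value": db_instance_id}
        ],
        "Statistic": "Average",
        "Period": 300,             # seconds per datapoint (assumed value)
        "EvaluationPeriods": 2,    # consecutive breaching periods (assumed value)
        "Threshold": threshold_percent,
        "ComparisonOperator": "GreaterThanThreshold",
        # The SNS topic goes here; email subscribers of the topic get notified.
        "AlarmActions": [topic_arn],
    }


# Hypothetical identifiers, for illustration only.
params = build_alarm_params(
    "example-db",
    "arn:aws:sns:us-east-1:123456789012:example-db-alerts",
)
print(json.dumps(params, indent=2))
```

The email addresses themselves are subscriptions on the SNS topic, not part of the alarm.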

17.1.7. Set up a DNS failover to a static website

17.1.7.1. Use Route 53 with the failover option to a static S3 website bucket or CloudFront distribution

17.1.8. Implement an automated backup for all the EBS volumes

17.1.8.1. Use Amazon Data Lifecycle Manager to automate the creation of EBS snapshots

17.1.9. Monitor the available swap space of your EC2 instances

17.1.9.1. Install the CloudWatch agent and monitor the SwapUtilization metric

17.1.10. Implement a 90-day backup retention policy on Amazon Aurora

17.1.10.1. Use AWS Backup

17.2. Domain 2 - Design High-Performing Architectures

17.2.1. Implement fanout messaging

17.2.1.1. Create an SNS topic with a message filtering policy and configure multiple SQS queues to subscribe to the topic
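
The fanout idea can be illustrated with a small local simulation. This is not the SNS API: it is a sketch that models only exact string matching on message attributes, which is a subset of what SNS filter policies support. The queue names and the `event_type` attribute are hypothetical.

```python
def matches(filter_policy, attributes):
    """Return True if every policy key is present with an allowed value.

    An empty policy matches every message, like a subscription
    without a filter policy.
    """
    return all(
        attributes.get(key) in allowed
        for key, allowed in filter_policy.items()
    )


def fan_out(message_attributes, subscriptions):
    """Return the names of the queues that would receive the message."""
    return [
        queue
        for queue, policy in subscriptions.items()
        if matches(policy, message_attributes)
    ]


# One topic, three subscribed queues, each with its own filter policy.
subscriptions = {
    "orders-queue": {"event_type": ["order_placed", "order_cancelled"]},
    "billing-queue": {"event_type": ["order_placed"]},
    "audit-queue": {},  # no filter: receives everything
}

delivered = fan_out({"event_type": "order_cancelled"}, subscriptions)
print(delivered)  # ['orders-queue', 'audit-queue']
```

Each queue gets its own copy of a matching message, so consumers can process at their own pace.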

17.2.2. A db that has a read replication latency of less than 1 second

17.2.2.1. Amazon Aurora cross region replicas

17.2.3. A specific type of ELB that uses UDP as the protocol for communication between clients and thousands of game servers around the world

17.2.3.1. Use NLB for TCP/UDP protocols

17.2.4. Monitor the memory and disk space utilization of an EC2 instance

17.2.4.1. Install Amazon CloudWatch agent on the instance

17.2.5. Retrieve a subset of data from a large CSV file stored in the S3 bucket

17.2.5.1. Perform an S3 Select operation based on the bucket's name and object's key

17.2.6. Upload a 1 TB file to an S3 bucket

17.2.6.1. Use Amazon S3 multipart upload API to upload large objects in parts
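
A rough sketch of how the parts could be planned for a large object. It relies on the documented multipart limits (at most 10,000 parts, each between 5 MiB and 5 GiB, except that the last part may be smaller). The 100 MiB preferred part size and the function name are assumptions made for illustration.

```python
MIB = 1024 ** 2
GIB = 1024 ** 3

MIN_PART_SIZE = 5 * MIB   # minimum size of every part except the last
MAX_PART_SIZE = 5 * GIB   # maximum size of a single part
MAX_PARTS = 10_000        # maximum number of parts per upload


def ceil_div(a, b):
    """Integer ceiling division, avoiding float rounding on large sizes."""
    return -(-a // b)


def plan_parts(object_size, preferred_part_size=100 * MIB):
    """Pick a part size that keeps the upload within the part-count limit."""
    part_size = max(
        preferred_part_size,
        MIN_PART_SIZE,
        ceil_div(object_size, MAX_PARTS),
    )
    if part_size > MAX_PART_SIZE:
        raise ValueError("object is too large for one multipart upload")
    return part_size, ceil_div(object_size, part_size)


# 1 TB (10**12 bytes) with the assumed 100 MiB preferred part size.
part_size, part_count = plan_parts(10 ** 12)
print(part_size, part_count)  # 104857600 9537
```

Parts can be uploaded in parallel and retried individually, which is the main reason to use multipart for files this large.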

17.2.7. Improve performance of the application by reducing the response times from milliseconds to microseconds

17.2.7.1. Use Amazon DynamoDB Accelerator

17.2.8. Retrieve instance ID, public keys and public IP address of an EC2 instance

17.2.8.1. Access the URL 169.254.169.254/latest/meta-data/ from within the EC2 instance
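
A minimal sketch of reading these values from inside an instance. It uses the token-based IMDSv2 flow, which is an addition to the note above (the plain URL access it describes corresponds to IMDSv1). `fetch_metadata` only works on an EC2 instance, so the example below just builds the requests without sending them; the function names are hypothetical.

```python
import urllib.request

METADATA_BASE = "http://169.254.169.254/latest"

# Metadata paths for the values mentioned above.
PATHS = {
    "instance ID": "instance-id",
    "public IP": "public-ipv4",
    "public keys": "public-keys/",
}


def token_request(ttl_seconds=21600):
    """Build the PUT request that asks for an IMDSv2 session token."""
    return urllib.request.Request(
        f"{METADATA_BASE}/api/token",
        method="PUT",
        headers={"X-aws-ec2-metadata-token-ttl-seconds": str(ttl_seconds)},
    )


def metadata_request(path, token):
    """Build the GET request for one metadata path."""
    return urllib.request.Request(
        f"{METADATA_BASE}/meta-data/{path}",
        headers={"X-aws-ec2-metadata-token": token},
    )


def fetch_metadata(path, timeout=2):
    """Fetch one value. Only reachable from inside an EC2 instance."""
    with urllib.request.urlopen(token_request(), timeout=timeout) as resp:
        token = resp.read().decode()
    with urllib.request.urlopen(
        metadata_request(path, token), timeout=timeout
    ) as resp:
        return resp.read().decode()


# Safe to run anywhere: shows the URLs without contacting the service.
for label, path in PATHS.items():
    print(label, "->", metadata_request(path, "TOKEN").full_url)
```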

17.2.9. Route the traffic to the resources based on the location of the user

17.2.9.1. Use Route 53 Geolocation Routing policy

17.2.10. A fully managed ETL service provided by AWS

17.2.10.1. AWS Glue

17.2.11. A fully managed, petabyte-scale data warehouse service

17.2.11.1. Amazon Redshift

17.3. Domain 3 - Design Secure Applications and Architectures

17.3.1. Encrypt EBS volumes restored from unencrypted EBS snapshots

17.3.1.1. Copy the snapshot and enable encryption with a new symmetric CMK while creating an EBS volume using the snapshot

17.3.2. Limit the maximum number of requests from a single IP address

17.3.2.1. Create a rate-based rule in AWS WAF and set the rate limit
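
A sketch of what such a rule could look like as a WAFv2 rule definition, assuming the goal is to block an IP once it exceeds the limit. The rule name, priority, metric name and the limit of 1000 are hypothetical. By default the limit is counted per source IP over a trailing five-minute window.

```python
import json


def rate_limit_rule(name, limit, priority=1):
    """Build a WAFv2 rule that blocks IPs exceeding `limit` requests."""
    return {
        "Name": name,
        "Priority": priority,
        "Statement": {
            "RateBasedStatement": {
                "Limit": limit,              # requests per window, per key
                "AggregateKeyType": "IP",    # count per source IP address
            }
        },
        "Action": {"Block": {}},
        "VisibilityConfig": {
            "SampledRequestsEnabled": True,
            "CloudWatchMetricsEnabled": True,
            "MetricName": name,
        },
    }


# Hypothetical values, for illustration only.
rule = rate_limit_rule("limit-per-ip", 1000)
print(json.dumps(rule, indent=2))
```

The rule would sit in the `Rules` list of a web ACL that is associated with the resource to protect.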

17.3.3. Grant the bucket owner full access to all uploaded objects in the S3 bucket

17.3.3.1. Create a bucket policy that requires users to set the object's ACL to bucket-owner-full-control
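
One common way to write this policy is a Deny statement on uploads that do not carry the required ACL. The sketch below builds that policy document; the bucket name and the `Sid` are hypothetical.

```python
import json


def owner_full_control_policy(bucket_name):
    """Deny any PutObject that does not grant the bucket owner full control."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "RequireBucketOwnerFullControl",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:PutObject",
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
                "Condition": {
                    "StringNotEquals": {
                        "s3:x-amz-acl": "bucket-owner-full-control"
                    }
                },
            }
        ],
    }


# Hypothetical bucket name, for illustration only.
policy = owner_full_control_policy("example-shared-bucket")
print(json.dumps(policy, indent=2))
```

Uploaders then have to send the `x-amz-acl: bucket-owner-full-control` header with each PutObject request, otherwise the request is denied.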

17.3.4. Protect objects in the S3 bucket from accidental deletion or overwrite

17.3.4.1. Enable versioning and MFA delete

17.3.5. Access resources on both on-premises and AWS using on-premises credentials that are stored in AD

17.3.5.1. Set up SAML 2.0-based federation by using Microsoft Active Directory Federation Services (AD FS)

17.3.6. Secure the sensitive data stored in EBS volumes

17.3.6.1. Enable EBS encryption

17.3.7. Ensure that the data-in-transit and data-at-rest of the Amazon S3 bucket is always encrypted

17.3.7.1. Enable Amazon S3 Server-Side Encryption or use Client-Side Encryption

17.3.8. Secure the web application by allowing multiple domains to serve SSL traffic over the same IP address

17.3.8.1. Use AWS Certificate Manager to generate an SSL certificate. Associate the certificate to the CloudFront distribution and enable Server Name Indication (SNI)

17.3.9. Control the access for several S3 buckets by using a gateway endpoint to allow access to trusted buckets

17.3.9.1. Create an endpoint policy for trusted S3 buckets
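
A sketch of an endpoint policy that only allows traffic through the gateway endpoint to a list of trusted buckets. The bucket names and the `Sid` are hypothetical, and allowing `s3:*` is an assumption; a real policy would likely narrow the actions.

```python
import json


def trusted_buckets_endpoint_policy(bucket_names):
    """Allow S3 access through the endpoint only for the listed buckets."""
    resources = []
    for name in bucket_names:
        resources.append(f"arn:aws:s3:::{name}")    # bucket-level actions
        resources.append(f"arn:aws:s3:::{name}/*")  # object-level actions
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowTrustedBucketsOnly",
                "Effect": "Allow",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": resources,
            }
        ],
    }


# Hypothetical bucket names, for illustration only.
endpoint_policy = trusted_buckets_endpoint_policy(
    ["example-trusted-a", "example-trusted-b"]
)
print(json.dumps(endpoint_policy, indent=2))
```

Requests through the endpoint to any bucket not listed fall outside the Allow statement and are rejected.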

17.3.10. Enforce strict compliance by tracking all the configuration changes made to any AWS services

17.3.10.1. Set up a rule in AWS Config to identify compliant and non-compliant services

17.3.11. Provide short-lived access tokens that act as temporary security credentials to allow access to AWS resources

17.3.11.1. Use AWS Security Token Service

17.3.12. Encrypt and rotate all the db credentials, API Keys, and other secrets on a regular basis

17.3.12.1. Use AWS Secrets Manager and enable automatic rotation of credentials

17.4. Domain 4 - Design Cost-Optimized Architectures

17.4.1. A cost-effective solution for over-provisioning of resources

17.4.1.1. Configure a target tracking scaling policy in the Auto Scaling group (ASG)

17.4.2. The application data is stored in a tape backup solution. The backup data must be preserved for up to 10 years

17.4.2.1. Use AWS Storage Gateway to back up the data directly to S3 Glacier Deep Archive

17.4.3. Accelerate the transfer of historical records from on-premises to AWS over the Internet in a cost-effective manner

17.4.3.1. Use AWS DataSync and select Amazon S3 Glacier Deep Archive as the destination

17.4.4. Globally deliver the static contents and media files to customers around the world with low latency

17.4.4.1. Store the files in S3 and create a CloudFront distribution. Select S3 as the origin

17.4.5. An application must be hosted on two EC2 instances and should continuously run for three years. The CPU utilization of the EC2 instances is expected to be stable and predictable

17.4.5.1. Deploy the application to Reserved Instances

17.4.6. Implement a cost-effective solution for S3 objects that are accessed less frequently

17.4.6.1. Create an Amazon S3 lifecycle policy to move the objects to Amazon S3 Standard-IA
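
A sketch of what the lifecycle configuration could look like. The rule ID and the empty prefix (apply to every object) are assumptions. The 30-day value is the minimum object age S3 accepts for a transition to Standard-IA. The dictionary has the shape expected by the S3 PutBucketLifecycleConfiguration API.

```python
import json

MIN_DAYS_BEFORE_STANDARD_IA = 30  # S3 rejects earlier transitions to Standard-IA


def standard_ia_lifecycle(days=30, prefix=""):
    """Build a lifecycle configuration that moves objects to Standard-IA."""
    if days < MIN_DAYS_BEFORE_STANDARD_IA:
        raise ValueError("transition to STANDARD_IA needs at least 30 days")
    return {
        "Rules": [
            {
                "ID": "move-to-standard-ia",   # hypothetical rule name
                "Status": "Enabled",
                "Filter": {"Prefix": prefix},  # empty prefix: all objects
                "Transitions": [
                    {"Days": days, "StorageClass": "STANDARD_IA"}
                ],
            }
        ]
    }


lifecycle = standard_ia_lifecycle()
print(json.dumps(lifecycle, indent=2))
```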

17.4.7. Minimize the data transfer costs between two EC2 instances

17.4.7.1. Deploy the EC2 instances in the same region

17.4.8. Import the SSL / TLS certificate of the application

17.4.8.1. Import the certificate into AWS Certificate Manager or upload it to AWS IAM