1. Transparent

1.1. Failures are notified immediately

1.2. Everybody on the team can look at failures and attempt fixes

2. Fast feedback

2.1. Whole test suite should run in < 10 mins

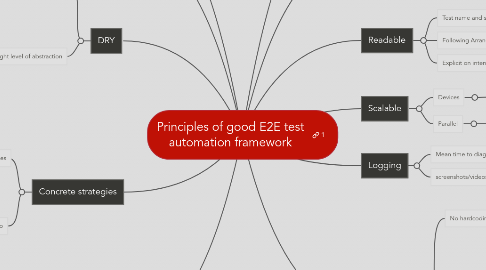

3. DRY

3.1. Minimal

3.1.1. Have only a few high-level smoke tests for E2E scenarios

3.2. Right level of abstraction

3.2.1. Knowing what is and what isn't a good test at each level of testing

3.2.2. Shared understanding of

3.2.2.1. Unit tests

3.2.2.2. Functional tests

3.2.2.3. Partial integration tests

3.2.2.4. E2E/Smoke/Integration/UI tests

4. Concrete strategies

4.1. Integrated into build pipelines

4.1.1. PR jobs for code merge

4.1.2. Large daily jobs

4.1.3. Build validation tests

4.2. Same dev repo

4.2.1. Same set of coding standards as dev code

4.2.2. Contributions from devs

4.2.3. Re-use helpers and locators

5. Maintainable

5.1. Organized

5.1.1. By

5.1.1.1. Components

5.1.1.2. Time to run

5.1.1.3. Size of the tests

5.1.1.4. Ignored/Flaky

5.2. Flexible

5.2.1. Subsets of the tests can be run individually

5.2.2. Using various data arguments

6. Reliable

6.1. When a test fails, it should mean something inadvertent happened in the product, not in the test

6.2. Flakiness is the enemy

7. Repeatable

7.1. They can be run from

7.1.1. Local

7.1.2. Build machine

7.1.3. Cloud labs

7.2. Repeated runs establish that a test is not flaky
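
One way to check repeatability is to run the same test many times and record every failing attempt. The sketch below assumes tests are plain callables that raise `AssertionError` on failure; `find_flaky_runs` and both sample tests are hypothetical names used only for illustration.

```python
def find_flaky_runs(test_fn, runs=20):
    """Run `test_fn` `runs` times and return the indices of the attempts that failed."""
    failed_attempts = []
    for attempt in range(runs):
        try:
            test_fn()
        except AssertionError:
            failed_attempts.append(attempt)
    return failed_attempts


def stable_test():
    # Depends only on its own inputs, so every run behaves the same.
    assert sorted([3, 1, 2]) == [1, 2, 3]


def make_state_leaking_test():
    """Build a test that fails on every third call because state leaks between runs."""
    calls = {"count": 0}

    def test():
        calls["count"] += 1
        assert calls["count"] % 3 != 0, "shared state leaked between runs"

    return test
```

An empty result from `find_flaky_runs` does not prove a test is reliable, but a non-empty one is a cheap early signal that the test should be fixed or quarantined before it joins the main suite.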

8. Readable

8.1. Test name and steps should be high-level

8.2. Following Arrange/Act/Assert/Cleanup

8.3. Explicit on intent with expected and actual
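
The Arrange/Act/Assert/Cleanup layout can be sketched with Python's standard `unittest` module. `ShoppingCart` is a hypothetical system under test, defined inline only so the example is self-contained.

```python
import unittest


class ShoppingCart:
    """Hypothetical system under test, defined here only for illustration."""

    def __init__(self):
        self.items = {}

    def add(self, name, price, quantity=1):
        self.items[name] = (price, quantity)

    def total(self):
        return sum(price * qty for price, qty in self.items.values())

    def clear(self):
        self.items.clear()


class CartTotalTest(unittest.TestCase):
    def test_total_sums_price_times_quantity(self):
        # Arrange: build the state the test needs.
        cart = ShoppingCart()
        self.addCleanup(cart.clear)  # Cleanup: runs even if the assertion fails.
        cart.add("pen", price=2, quantity=3)
        cart.add("notebook", price=5)

        # Act: perform the single behaviour under test.
        actual = cart.total()

        # Assert: state expected and actual explicitly.
        expected = 11
        self.assertEqual(expected, actual, f"expected total {expected}, got {actual}")


def run_suite():
    """Load and run the test case, returning the unittest result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(CartTotalTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

The test name reads as a high-level statement of behaviour, and the named `expected` and `actual` values make the intent visible in the failure message.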

9. Scalable

9.1. Devices

9.1.1. Same set of tests can be run on multiple devices, browser sizes, etc.

9.2. Parallel

9.2.1. Tests can be run in parallel

10. Logging

10.1. Mean time to diagnose

10.2. Screenshots/videos/bug reporting

11. Data driven

11.1. No hardcoding

11.1.1. Locators

11.1.2. Strings

11.2. Same tests can run for different

11.2.1. User accounts

11.2.2. Environments

11.2.2.1. OTE

11.2.2.2. Stg

11.2.2.3. Prod

11.2.3. Browsers/devices

11.2.3.1. Chrome

11.2.3.2. Firefox

11.2.3.3. IE

11.2.3.4. Devices, if using Appium
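
A minimal sketch of keeping environments and browsers as data rather than hardcoding them in tests. The base URLs are placeholders, and `RunConfig` and `build_matrix` are hypothetical names chosen for illustration; user accounts could be added as a third dimension in the same way.

```python
from dataclasses import dataclass
from itertools import product

# Placeholder base URLs (assumptions); replace with the real endpoint of each environment.
ENVIRONMENTS = {
    "ote": "https://ote.example.com",
    "stg": "https://stg.example.com",
    "prod": "https://www.example.com",
}

BROWSERS = ["chrome", "firefox", "ie"]


@dataclass(frozen=True)
class RunConfig:
    """Everything a test needs to know about where and how it runs."""
    environment: str
    base_url: str
    browser: str


def build_matrix(environments=ENVIRONMENTS, browsers=BROWSERS):
    """Return one RunConfig per (environment, browser) combination."""
    return [
        RunConfig(env, url, browser)
        for (env, url), browser in product(environments.items(), browsers)
    ]
```

A test that receives a `RunConfig` never mentions a concrete URL or browser, so adding a new environment or browser means editing the data only, not the tests.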