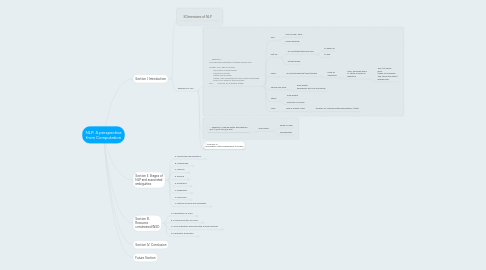

1. Section I. Introduction

1.1. 3 Dimensions of NLP

1.1.1. Languages

1.1.1.1. Hindi

1.1.1.2. English

1.1.1.3. ...

1.1.2. Algorithms

1.1.2.1. HMM

1.1.2.2. MEMM

1.1.2.3. CRF

1.1.3. Problems / Foundational NLP Tasks

1.1.3.1. Morphology

1.1.3.2. POS tagging

1.1.3.3. Named Entity Recognition

1.1.3.4. Shallow Parsing

1.1.3.5. Deep Parsing

1.1.3.6. Semantics Extraction

1.1.3.7. Pragmatics Processing

1.1.3.8. Discourse Processing

1.2. Examples of NLP

1.2.1. Example 1: Conversation between a mother and her son. Mother: "Son, get up quickly. The school is open today. Should you bunk? Father will be angry. Father John complained to your father yesterday. Aren't you afraid of the principal?" Son: "Mummy, it's a holiday today!"

1.2.1.1. Son

1.2.1.1.1. Son of God: Jesus

1.2.1.1.2. Male offspring

1.2.1.2. Get up

1.2.1.2.1. as a multiword/phrasal verb

1.2.1.2.2. as two words

1.2.1.3. Open

1.2.1.3.1. as Noun/Adjective/Verb/Adverb

1.2.1.4. Should you bunk

1.2.1.4.1. Bunk what? Ambiguous with respect to POS and sense (noun: a bed; verb: to skip school or class)

1.2.1.5. Father

1.2.1.5.1. male parent

1.2.1.5.2. principal of the school

1.2.1.6. John

1.2.1.6.1. John is a proper noun

1.2.2. Example 2: Named Entity Recognition: "पूजा ने पूजा के लिए फूल तोड़ा." (Pooja ne pooja ke liye phool toda, roughly "Pooja plucked a flower for pooja")

1.2.2.1. The second "Pooja":

1.2.2.1.1. name of a girl

1.2.2.1.2. worship (puja)

1.2.3. Example 2.1: "Washington voted Washington to power"

2. Section II. Stages of NLP and associated ambiguities

2.1. A. Phonology and Phonetics

2.1.1. Processing of sound: two common problems

2.1.1.1. 1. Homophony: similar-sounding words

2.1.1.1.1. 1. Exact homophony: examples

2.1.1.1.2. 2. Near homophony: examples

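2.1.1.2. 2. Word boundary recognition: the case of rapid speech

2.1.1.2.1. Example 3: आजायेंगे (can be split as आ जायेंगे, "will come", or as आज आयेंगे, "will come today")

2.1.1.2.2. Example 3.1: "I got [uhp] late" (heard as "I got up late" or as "I got a plate")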
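Word boundary ambiguity becomes concrete with a dictionary-based segmenter that lists every way of splitting an unsegmented string into known words. The following is a minimal sketch. It assumes a romanized transcription of आजायेंगे ("aajaayenge") and a tiny hypothetical lexicon (`LEXICON`). A real system would score the alternatives rather than simply list them.

```python
from functools import lru_cache

# Hypothetical toy lexicon of romanized Hindi words (an assumption for illustration).
LEXICON = frozenset({"aa", "aaj", "aayenge", "jaayenge"})


def segmentations(text: str, lexicon: frozenset = LEXICON) -> list[list[str]]:
    """Return every split of `text` into a sequence of lexicon words."""

    @lru_cache(maxsize=None)
    def split_from(start: int) -> tuple[tuple[str, ...], ...]:
        # An empty suffix has exactly one (empty) segmentation.
        if start == len(text):
            return ((),)
        results = []
        # Try every prefix of the remaining text that is a known word.
        for end in range(start + 1, len(text) + 1):
            word = text[start:end]
            if word in lexicon:
                for rest in split_from(end):
                    results.append((word,) + rest)
        return tuple(results)

    return [list(seg) for seg in split_from(0)]


print(segmentations("aajaayenge"))
# [['aa', 'jaayenge'], ['aaj', 'aayenge']]
```

Memoizing `split_from` keeps the enumeration cheap when many prefixes share suffixes. The number of returned segmentations can still grow exponentially with input length.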

2.2. B. Morphology

2.2.1. Processing of word forms

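2.2.1.1. Example 4 (Marathi): जाई (jaai): "going" or "flower" (a jasmine)

2.2.1.1.1. तो जाई पर्यंत मी राहील ("I will stay until he goes")

2.2.1.1.2. मधूप जाई पर्यंत जाऊन परत आला ("The bee (madhup) went up to the jasmine flower and came back")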

2.3. C. Lexicon

2.3.1. Storage of words and associated knowledge

2.3.2. Used for: question answering, information extraction, information retrieval, detection, etc., and in situations of homography, polysemy, ...

2.4. D. Parsing

2.4.1. Processing of structure: uncovering the hierarchical structure of a sentence

2.4.1.1. Example: "flight from Mumbai to Delhi via Jaipur on Air India"

2.4.1.1.1. NP4: "flight from Mumbai to Delhi via Jaipur on Air India"

2.4.1.2. Example 5 (scope ambiguity): Old men and women were taken to safe locations.

2.4.1.3. Example 6 (scope ambiguity): No smoking areas will allow hookahs inside.

2.4.1.4. Example 7 (attachment ambiguity): I saw the boy with a telescope.

2.4.1.5. Example 8 (in Hindi): दूरबीन से लड़के को देखा (durbeen se ladke ko dekha, "[I] saw the boy with a telescope")

2.4.1.6. Example 9 (attachment ambiguity; phrase): I saw a tiger running across the field

2.4.1.7. Example 10 (attachment ambiguity; clause): I told the child that₁ I liked that₂ he came to the playground early.

2.4.2. Parsing rules of the grammar:
S -> NP VP
NP -> NP PP
NP -> NN
PP -> P NP
VP -> VP PP
VP -> V NP
VP -> V
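The following sketch shows how this grammar produces the attachment ambiguity of Example 7. It counts parse trees with a CKY-style chart. It assumes a hypothetical part-of-speech lexicon (`LEXICON`) and drops the determiners, because the grammar has no rule for them. Under these assumptions, "I saw boy with telescope" gets two parses. One uses NP -> NP PP, so the boy has the telescope. The other uses VP -> VP PP, so the seeing is done with the telescope.

```python
from collections import defaultdict

# Grammar rules from the outline above.
BINARY_RULES = [
    ("S", "NP", "VP"),
    ("NP", "NP", "PP"),
    ("PP", "P", "NP"),
    ("VP", "VP", "PP"),
    ("VP", "V", "NP"),
]
UNARY_RULES = [("NP", "NN"), ("VP", "V")]

# Hypothetical lexicon (an assumption): each word gets a single preterminal tag.
LEXICON = {"I": "NN", "saw": "V", "boy": "NN", "with": "P", "telescope": "NN"}


def count_parses(words: list[str], start: str = "S") -> int:
    """Count distinct parse trees of `words` rooted in `start` (CKY-style chart)."""
    n = len(words)
    # chart[(i, j)][label] = number of distinct subtrees spanning words[i:j]
    chart = defaultdict(lambda: defaultdict(int))

    def apply_unary(i: int, j: int) -> None:
        # One pass suffices here: this grammar has no chains of unary rules.
        for parent, child in UNARY_RULES:
            chart[(i, j)][parent] += chart[(i, j)][child]

    # Spans of length 1: lexical tags, then unary rules (NP -> NN, VP -> V).
    for i, word in enumerate(words):
        chart[(i, i + 1)][LEXICON[word]] = 1
        apply_unary(i, i + 1)

    # Longer spans: combine two adjacent sub-spans with each binary rule.
    for length in range(2, n + 1):
        for i in range(n - length + 1):
            j = i + length
            for k in range(i + 1, j):
                left, right = chart[(i, k)], chart[(k, j)]
                for parent, b, c in BINARY_RULES:
                    if left[b] and right[c]:
                        chart[(i, j)][parent] += left[b] * right[c]
            apply_unary(i, j)

    return chart[(0, n)][start]


print(count_parses(["I", "saw", "boy", "with", "telescope"]))  # 2
```

Each additional PP multiplies the attachment choices, so the parse count grows quickly with sentence length.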

2.5. E. Semantics

2.5.1. Processing of meaning

2.5.1.1. Example 11: Visiting aunts can be trying

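2.5.1.2. Example 12: आपको मुझे मिठाई खिलानी पड़ेगी. (aapko mujhe mithaai khilaani padegi: ambiguous between "You will have to treat me to sweets" and "I will have to treat you to sweets")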

2.5.1.2.1. Word-order variants:
1. आपको मुझे मिठाई खिलानी पड़ेगी.
2. आपको मिठाई खिलानी पड़ेगी मुझे.
3. मुझे मिठाई खिलानी पड़ेगी आपको.
4. मुझे आपको मिठाई खिलानी पड़ेगी.
5. मिठाई खिलानी पड़ेगी आपको मुझे.
6. मिठाई खिलानी पड़ेगी मुझे आपको.
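The six sentences are exactly the 3! = 6 orderings of three chunks: आपको, मुझे, and the verb group मिठाई खिलानी पड़ेगी. The small sketch below regenerates them. It assumes this three-way chunking, and `orderings` is a hypothetical helper.

```python
from itertools import permutations

# The three chunks of Example 12 (the chunking itself is an assumption).
CHUNKS = ("आपको", "मुझे", "मिठाई खिलानी पड़ेगी")


def orderings(chunks: tuple[str, ...]) -> list[str]:
    """Return every word-order variant obtained by permuting the chunks."""
    return [" ".join(p) + "." for p in permutations(chunks)]


for sentence in orderings(CHUNKS):
    print(sentence)
```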

2.6. F. Pragmatics

2.6.1. Processing of user intention, modeling, etc.

2.6.1.1. Example 12: Tourist Waiter Conversation

2.7. G. Discourse

2.7.1. Processing of connected text: Building a shared world

2.7.1.1. Example 13: Who is John?

2.7.1.1.1. Sentence 1: "John was coming dejected from the school." (Who is John? Most likely a student.)

2.7.1.1.2. Sentence 2: "He could not control the class." (Who is John now? Most likely the teacher.)

2.7.1.1.3. Sentence 3: "Teacher should not have made him responsible." (Who is John now? Most likely a student again, albeit a special student: the monitor.)

2.7.1.1.4. Sentence 4: "After all he is just a janitor." (All previous hypotheses are thrown away!)

2.8. H. Textual Humour and Ambiguity

2.8.1. Example 14 (Humour and lexical ambiguity): The "parking fine" joke

2.8.2. Example 15 (Humour and structural ambiguity): Teacher-student conversation joke

3. Section III. Resource-Constrained WSD (Word Sense Disambiguation)

3.1. A. Parameters for WSD

3.1.1. Domain specific sense distributions

3.1.2. WordNet-dependent parameters

3.1.2.1. belongingness-to-dominant-concept

3.1.2.2. conceptual-distance

3.1.2.3. semantic-distance

3.1.3. Corpus-dependent parameters

3.1.3.1. corpus co-occurrences.

3.2. B. Scoring Function for WSD:

3.2.1. Equation (1): $$S^{*} = \arg\max_{i} \Big( \theta_i V_i + \sum_{j \in J} W_{ij} V_i U_j \Big)$$

3.2.1.1. arg max runs over $i$, and $\Sigma$ runs over $j \in J$

3.2.1.2. $\theta_i V_i$: static corpus sense

3.2.1.3. $W_{ij} V_i U_j$: score of a sense in sentential context
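Equation (1) can be computed directly once its ingredients are known. The following is a minimal sketch. It assumes that the values θ, V, U and W have already been derived from the WordNet-dependent and corpus-dependent parameters listed above. The function name `best_sense` and all the numbers are hypothetical.

```python
def best_sense(theta: list[float], v: list[float],
               w: list[list[float]], u: list[float]) -> int:
    """Return the index i maximizing theta[i]*v[i] + sum_j w[i][j]*v[i]*u[j].

    i ranges over candidate senses; j ranges over the context set J,
    represented here by the positions of `u`.
    """
    def score(i: int) -> float:
        static = theta[i] * v[i]  # static corpus sense term
        contextual = sum(w[i][j] * v[i] * u[j] for j in range(len(u)))  # sentential context
        return static + contextual

    return max(range(len(theta)), key=score)


# Hypothetical values for a word with two candidate senses and two context words.
theta = [0.2, 0.6]
v = [0.7, 0.3]
w = [[0.1, 0.0], [0.9, 0.8]]
u = [1.0, 0.5]
print(best_sense(theta, v, w, u))  # 1
```

Here sense 0 scores 0.14 + 0.07 = 0.21 and sense 1 scores 0.18 + 0.39 = 0.57. The sentential-context term can therefore override the sense with the higher prior V_i.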

3.3. C. WSD Algorithm employing the scoring function

3.3.1. Algorithm 1: performIterativeWSD(sentence)
1. Tag all monosemous words in the sentence.
2. Iteratively disambiguate the remaining words in the sentence in increasing order of their degree of polysemy.
3. At each stage, select the sense of the word that maximizes the score given by Equation (1).
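Algorithm 1 can be sketched as follows. This is a minimal illustration, not the original implementation. The callables `theta`, `prob` and `weight` are hypothetical stand-ins for θ_i, V_i (and U_j) and W_ij. The sketch also assumes that the context set J is the set of words disambiguated so far, and that U_j is the probability of the sense already assigned to word j.

```python
from typing import Callable

Sense = str


def perform_iterative_wsd(
    candidates: dict[str, list[Sense]],
    theta: Callable[[Sense], float],
    prob: Callable[[str, Sense], float],
    weight: Callable[[Sense, Sense], float],
) -> dict[str, Sense]:
    """Iterative WSD following the three steps of Algorithm 1.

    candidates maps each word of the sentence to its candidate senses.
    theta(sense), prob(word, sense) and weight(sense_i, sense_j) are hypothetical
    stand-ins for theta_i, V_i (and U_j), and W_ij in Equation (1).
    """
    assigned: dict[str, Sense] = {}

    # Step 1: tag all monosemous words.
    for word, senses in candidates.items():
        if len(senses) == 1:
            assigned[word] = senses[0]

    def equation_1(word: str, sense: Sense) -> float:
        v_i = prob(word, sense)
        static = theta(sense) * v_i
        # Assumption: J is the set of words disambiguated so far, and U_j is
        # the probability of the sense already assigned to word j.
        contextual = sum(
            weight(sense, s_j) * v_i * prob(w_j, s_j)
            for w_j, s_j in assigned.items()
        )
        return static + contextual

    # Step 2: handle the remaining words in increasing order of polysemy.
    remaining = sorted(
        (word for word in candidates if word not in assigned),
        key=lambda word: len(candidates[word]),
    )
    for word in remaining:
        # Step 3: pick the sense that maximizes Equation (1).
        assigned[word] = max(candidates[word], key=lambda s: equation_1(word, s))

    return assigned


# Hypothetical toy input: "river" is monosemous, "bank" has two senses.
candidates = {
    "bank": ["bank#sloping_land", "bank#financial_institution"],
    "river": ["river#1"],
}
related = {("bank#sloping_land", "river#1")}
result = perform_iterative_wsd(
    candidates,
    theta=lambda s: 0.5,
    prob=lambda w, s: 1 / len(candidates[w]),
    weight=lambda a, b: 1.0 if (a, b) in related else 0.1,
)
print(result["bank"])  # bank#sloping_land
```

Disambiguating the least polysemous words first means the harder words are scored against a larger context of already-fixed senses.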

3.3.2. Our Work at CFILT IIT Bombay:

3.4. D. Parameter Projection

3.4.1. Question: can we avoid the effort required in (1) constructing multiple WordNets and (2) collecting sense-marked corpora in multiple languages?

3.4.2. Structure of MultiDict

3.4.3. Example 16: concept of “boy” in Multilingual IndoWordNet

3.4.4. Example 17: two senses of the Marathi word सागर (saagar)

3.4.5. Example 18: sense distribution of Marathi word ठिकान (thikaan)
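The idea behind parameter projection can be sketched for a sense distribution like the one in Example 18. This is a hedged illustration, not the exact CFILT procedure. It assumes three things:

- Each Marathi sense is linked through MultiDict to a Hindi synset.
- A Hindi sense-tagged corpus is available.
- The Marathi sense distribution is estimated from the sense-tagged counts of the Hindi words in the linked synsets.

The function name, synset ids, Hindi words and counts are all hypothetical.

```python
from collections import Counter


def project_sense_distribution(
    linked_hindi_words: dict[str, list[str]],
    hindi_sense_counts: Counter,
) -> dict[str, float]:
    """Estimate P(sense | Marathi word) from a Hindi sense-tagged corpus.

    linked_hindi_words maps each candidate sense (synset id) of the Marathi word
    to the Hindi words in the cross-linked synset. hindi_sense_counts maps
    (hindi_word, synset_id) pairs to their frequency in the Hindi corpus.
    """
    # Total Hindi evidence for each sense (missing pairs count as 0).
    mass = {
        sense: sum(hindi_sense_counts[(h, sense)] for h in words)
        for sense, words in linked_hindi_words.items()
    }
    total = sum(mass.values())
    if total == 0:
        # No evidence: fall back to a uniform distribution (a design choice of this sketch).
        return {sense: 1 / len(mass) for sense in mass}
    return {sense: m / total for sense, m in mass.items()}


# Hypothetical data: two senses, linked to Hindi words h1, h2 and h3.
linked = {"synset_A": ["h1", "h2"], "synset_B": ["h3"]}
counts = Counter({("h1", "synset_A"): 20, ("h2", "synset_A"): 10, ("h3", "synset_B"): 10})
print(project_sense_distribution(linked, counts))  # {'synset_A': 0.75, 'synset_B': 0.25}
```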