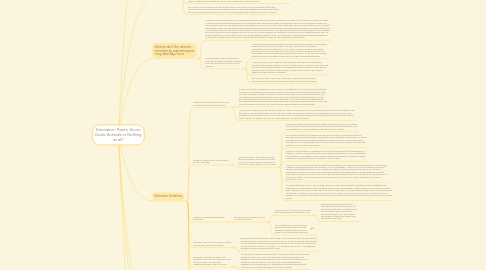

1. Intro

1.1. First question: why do we need estimates at all?

1.2. Here are some common arguments in favour of estimation:

1.2.1. Estimates allow us to predict when we expect to deliver something.

1.2.2. Estimates are a critical input for roadmaps/flightplans, i.e., an important part of capacity planning and stakeholder communication, and they feed into the prioritisation process.

1.2.3. Estimates help us to de-risk work of uncertain size, complexity and risk, and work out if the work needs to be broken down further.

1.2.4. Estimated work can be traded in and out of focus for other work of similar size. Without estimates you can't trade with any accuracy.

1.2.5. The process of estimation provides clarity and builds confidence that the work has been thought through. When we estimate we discuss work in more detail, and gain a better and shared understanding of what is needed.

1.2.6. We can use the estimate to assess whether the value of the outcomes of the work outweighs the cost of creating that value, and decide whether to proceed.

1.3. And here are some common arguments against estimation:

1.3.1. Estimates are rarely accurate. All you are doing when you estimate a piece of work is to set false expectations.

1.3.2. In practice, estimation is seen as a commitment, not as a best guess. Every time you make an estimate, you make a rod for your own back.

1.3.3. Estimation is time consuming and wasteful – the time that a team spends estimating is time that could have been spent on value delivery.

1.3.4. It's the actual time taken rather than the estimate that really matters – metrics for actual delivery are valuable; metrics for predicted delivery don't count for much (other than as avenues for misinformation).

1.3.5. If we overinvest in estimating to gain a higher level of confidence, we increase risk and waste: we assume more certainty than we really have, and we spend time that isn't creating value.

1.4. My intention in posing the question above and detailing some pros and cons of estimation isn't to encourage you to stop estimating, but rather to point out that estimation is useful and, at the same time, often wasteful and misused.

1.5. With that in mind, the subject of this article is why we do estimation a bit differently in the Agile space.

2. The Agile Answer

2.1. Relative estimation by the people actually doing the work, with units that don't directly relate to time

2.2. It's all in the title: relative estimation is the basis for estimation in Agile (see also reference class forecasting on Wikipedia, the basis for relative estimation) and we avoid using units that directly relate to time. These units come in all shapes and forms (as a quick Google search will attest): points, t-shirt sizes, animals, flavours of gummy bears, etc. – literally anything that tickles the fancy of the team. The key constraint is to avoid a direct relation to time. At Suncorp, most teams use points as the unit for story estimation and t-shirt sizes for features (this standardisation is useful to create a lingua franca and we do recommend that you start with that).

2.3. OK, so that's all well and good, but the question then comes back to: why relative estimation? Why non-time units? And how does that help address some of the disadvantages of estimation mentioned above? But before we talk about that, let's talk about the estimation approach we don't like much...

3. What we don't like: absolute estimation by external experts using ideal days/hours

3.1. Typically, when one estimates work using absolute estimation techniques, one considers the number of hours/days it will take. For a piece of work, we decompose the problem into all the tasks that we would need to do and sum the time that we expect for each one. This can take some time and thought and, unfortunately, is notorious for its inaccuracy, even when we bring in the experts! In fact, we have many years of data from projects across many industries telling us that, on average, we can expect our estimates at the start of a project to be accurate only to within 400% (of actual time/cost, that is). This led Steve McConnell to famously state "It isn't possible to be more accurate; it's only possible to be more lucky". So why do we persist with estimation in the face of such depressing news? Well, the answer's pretty obvious: we need to know how long something is going to take us for the sake of intentional planning and forecasting. In other words, we may be bad at it, but we need at least something, as it helps us make more informed decisions.

3.2. And the problem can be made worse when the estimates are made by people who don't actually do the work. This is because:

3.2.1. Productivity between different team members can be wildly different. Some studies suggest that the range can be as much as 200-fold in software development, just as an example. As such, even if an outside estimator can accurately estimate the time it would take them to do the work, that figure is unlikely to be anywhere near the actual figure for the people who will actually do it. It's an all-too-common practice to bring in the experts to ensure that we get 'competitive' estimates...

3.2.2. Following from that, imagine the person/people being held accountable to someone else's estimate. Given the sort of variation there is, they are most likely to end up at one extreme: feeling trapped or feeling unchallenged (and more likely the former, as experts typically sit in the upper echelon of the productivity curve and are 'expected' to give optimistic estimates).

3.2.3. We know motivation comes from autonomy, mastery and purpose, and estimating for someone else contravenes all three of these intrinsic motivators.

4. Estimation Guidelines

4.1. Guideline 1: The people who do the work should be the ones who estimate it!

4.1.1. It should be simple: the people who do the work should estimate it. If you don't trust the estimates coming from the people doing the work (enough to drive you to externalise the estimation), then you have a deeper problem! Addressing that problem requires people leadership, not more management and additional process. The cousin to this antipattern is to get the expert (or the most available person) in the team to do the estimates. This is only slightly better than getting someone external to the team to estimate, because the person on the inside will at least have greater context, but it is still subject to the negative effects mentioned earlier.

4.1.2. Also, we encourage you to estimate as a team, or at least with those members who could contribute to the work. Why? Because the discussions around the estimate of a work item often lead to important insights into new dependencies, risks, etc., and the resulting estimate is more accurate due to the 'wisdom of the crowd'. There are a bunch of techniques for estimating as a group, like Planning Poker, Relative Mass/Affinity, etc. (please check out our Work Estimation JIT for more details).

4.2. Guideline 2: Make use of non-time units for your estimates

4.2.1. The next guideline recommends that you divorce estimates from actual time units (i.e., use a unit that doesn't directly relate to time, like points, animals, etc.), because:

4.2.1.1. Stakeholders often confuse estimates in ideal hours/days with actual hours/days, which can lead to false expectations and inaccurate deadlines (admittedly such misconceptions can be managed, but why leave the door open?).

4.2.1.2. Hours/days are seen as an expense and hence the tendency is to want to reduce or eliminate them whenever possible. This tendency works against the importance of having estimates that are as honest and transparent as possible and also favours the view of cost reduction over maximising customer value (clearly we want to favour the latter over the former, not the other way around).

4.2.1.3. There is a natural reflex for managers to want to use the ideal day/hour estimates as a means to judge the productivity of individuals against their actuals. This is an antipattern – unfortunately, it's an effective way to reduce quality and demotivate a team by creating competition and time pressures on individuals within a team.

4.2.1.4. There is a natural reflex for domain/portfolio/project managers to want to use the ideal day/hour estimates as a means to compare the productivity of teams. This is an antipattern – even if you could compare the work that one team does against another (this is actually very difficult in practice because a greater quantity of work doesn't necessarily mean more valuable work), generating competition between teams in a knowledge work domain where teams collaborate to deliver work often has stronger negative effects than positive ones (the subject of a whole other article...). NB: comparison can still be made with non-time units as well, but there is usually less inclination to do so.

4.2.1.5. As mentioned earlier, there is often a large variation in productivity between members, and hence setting timing expectations on people doesn't help the team dynamic (e.g., some people in teams may play a hub role and support other members, which is critical to the health of the team, but in itself doesn't weigh nicely when looking at the time figures). Note that a comparison can obviously still happen with points, exotic birds, etc., but there is less of a tendency to do that, since hours/days seem to invoke an accountancy hat and gummy bears less so, for some reason.

4.3. Guideline 3: Use relative estimation techniques

4.3.1. We like relative estimation for two compelling reasons:

4.3.1.1. It's quick to do, i.e., it has a low cost once you have defined your reference cards.

4.3.1.1.1. Absolute estimation requires you to deconstruct the work and count all the bits; relative estimation just requires you to find a match, which plays to the cognitive strength of our brain as a good pattern matcher/comparer (and one that is less good at reduction).

4.3.1.2. It's consistently more accurate than absolute estimation; there is strong anecdotal evidence and some early research which backs this hypothesis.

4.4. Guideline 4: Don't try to be more accurate than the error tolerance suggests

4.4.1. To quote Steve McConnell again, "It isn't possible to be more accurate; it's only possible to be more lucky", so don't fall into the trap of 'tuning' your estimates to a level of precision that defies the accuracy tolerance. This directly points to why we use the Fibonacci sequence (1, 2, 3, 5, 8, 13, ...) in allocating story points – the bigger the estimate, the less accuracy we should claim.
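As a rough illustration of that point, the sketch below snaps a raw "gut feel" number onto the Fibonacci scale and lists the gaps between neighbouring values. The helper names, the cap at 13 and the rule that ties round up to the larger value are assumptions made for the example, not something this article prescribes.

```python
# Illustrative sketch only: names, the cap at 13 and the tie rule are assumptions.
FIBONACCI_SCALE = [1, 2, 3, 5, 8, 13]


def snap_to_scale(raw_estimate, scale=FIBONACCI_SCALE):
    """Return the scale value closest to raw_estimate.

    When two values are equally close, the larger one wins
    (an assumed, deliberately conservative tie rule).
    """
    return min(scale, key=lambda value: (abs(value - raw_estimate), -value))


def gaps(scale=FIBONACCI_SCALE):
    """Differences between consecutive scale values, i.e. the resolution at each size."""
    return [bigger - smaller for smaller, bigger in zip(scale, scale[1:])]


if __name__ == "__main__":
    print(snap_to_scale(6))   # closest value on the scale to 6
    print(snap_to_scale(10))  # closest value on the scale to 10
    print(gaps())             # the gaps widen as the values grow
```

The widening gaps are the point: a scale like this makes it impossible to argue about whether a large item is a 9 or a 10, which is precision the estimate cannot support anyway.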

4.5. Guideline 5: Consider the end-to-end activities of the work item and not just the 'obvious' ones in your estimate (everything needed to get it to Done)

4.5.1. This guideline is typically less important in relative estimation, as one usually compares similar work items, so 'everything' is already factored into the reference card's index value. However, it's a common error in estimation to 'forget' the lower profile work, e.g., testing in software development, integration in service delivery, etc. Remember that the estimate needs to take into account everything that gets the work item to Done.

4.6. Guideline 6: Agree on a threshold above which the team breaks the work item down further

4.6.1. This guideline highlights one of the other key values of estimating, apart from its role in helping to guide capacity planning: the importance of the estimation process in ensuring that the work has been sufficiently understood/de-risked. Setting a threshold is a common and important point in many teams' Definition of Ready.

4.7. Advanced guideline: Card counting is a cheap and surprisingly accurate approach

4.7.1. I define this one as an advanced guideline as it's mostly applicable to mature teams. It's something I typically recommend either to teams that are just starting out in introducing estimation or, on the other end of the spectrum, to teams that have a high maturity in work breakdown. I'm often pleasantly surprised by how accurate this approach is. An important success factor is having a large number of work items in the counting period (e.g., Iteration or Kanban replenishment cadence), because as the sample size increases the variance decreases. How does that relate to mature teams? Well, mature teams are good at slicing their work thin and typically already have a lower variance in size between work items, so they score on both fronts and hence get an accurate capacity figure with little effort.
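A minimal sketch of card counting, assuming the team simply records how many cards it finished in each past counting period: the forecast is the number of remaining cards divided by the average throughput, rounded up. The function name and the history figures are made up for illustration.

```python
import math
from statistics import mean


def forecast_iterations(cards_done_per_iteration, cards_remaining):
    """Forecast how many iterations the remaining cards need.

    Uses the average number of cards finished per past iteration
    and ignores card size entirely, which is the essence of card counting.
    """
    throughput = mean(cards_done_per_iteration)
    if throughput <= 0:
        raise ValueError("need at least one completed card to forecast")
    return math.ceil(cards_remaining / throughput)


if __name__ == "__main__":
    history = [18, 22, 20, 19, 21]  # made-up counts of cards finished per iteration
    print(forecast_iterations(history, 65))  # average is 20 cards, so 65 cards need 4 iterations
```

Note how little input this needs. The trade-off is that it only holds up when cards are numerous and of similar size, as described above.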

5. How to Set Up Relative Estimation

5.1. Define reference cards and fix a threshold (what is too big for an iteration)

5.2. Elements of Complexity, Risk and Effort

6. Other Resources

6.1. Agile Estimation And Planning

7. FAQ

7.1. How do you reconcile non-time unit estimates with roadmaps/flightplans that are invariably time-based (e.g., time is usually along the X axis)?

7.1.1. Estimates that are completely divorced from time units obviously aren’t very useful, so there needs to be a means to map any estimate system to time. At the feature level, t-shirt sizes (e.g., S, M, L, etc.) map directly to the number of iterations (or days) that the team thinks it would take to deliver the feature. At the story level, story points (e.g., 5, 8, 13, etc.) are summed for the forecasted stories and compared to a forecasted average team velocity, e.g., 50 points per 2-week iteration. Iterations can then roll up into estimates along a timeline. In both cases (feature and story level), we look to use relative estimation, which means that we match/compare the work to a previous piece of work to set the estimate (rather than find an estimate by reduction). If we need to bootstrap the relative estimation process, i.e., we have no previous data to work with or it’s a type of work that we haven’t done before, then we need to baseline against time to be able to set the reference cards. However, once we have the baseline, we no longer need to talk about time in our estimates, as that would mean that we are doing absolute estimates rather than relative estimates. There’s of course nothing inherently bad about absolute estimates; they just typically require more time to come up with and are likely to be less accurate.
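The story-level mapping described above can be sketched as follows, reusing the 50 points per 2-week iteration from the example. The backlog values, the function names and the t-shirt mapping are hypothetical. Each team would set its own figures.

```python
import math

# Hypothetical feature-level mapping from t-shirt size to iterations.
TSHIRT_TO_ITERATIONS = {"S": 1, "M": 2, "L": 3}


def iterations_needed(story_points, velocity_per_iteration):
    """Sum the story points and divide by the forecasted velocity, rounding up."""
    if velocity_per_iteration <= 0:
        raise ValueError("velocity must be positive")
    return math.ceil(sum(story_points) / velocity_per_iteration)


def weeks_needed(story_points, velocity_per_iteration, iteration_weeks):
    """Convert the iteration count into calendar weeks for a roadmap."""
    return iterations_needed(story_points, velocity_per_iteration) * iteration_weeks


if __name__ == "__main__":
    backlog = [13, 8, 8, 5, 5, 13, 8, 13, 5, 8, 13, 8, 5, 3, 5]  # made-up stories, 120 points in total
    print(iterations_needed(backlog, 50))   # 120 / 50 = 2.4, rounded up to 3 iterations
    print(weeks_needed(backlog, 50, 2))     # 3 iterations of 2 weeks = 6 weeks
    print(TSHIRT_TO_ITERATIONS["M"] * 2)    # a hypothetical M feature: 2 iterations = 4 weeks
```

Time only enters at the last step, through the velocity and the iteration length. The estimates themselves stay in points and t-shirt sizes.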

7.2. How do you keep estimates comparable/consistent between teams?

7.2.1. We highly recommend (read: prescribe) that all teams at Suncorp use t-shirt sizes as the unit of estimation for features and story points for stories. We also highly recommend that teams that work together use the same iteration cadence (timing and length). However, we do NOT recommend that teams use the same baseline for story points, e.g., 50 points in one team doesn’t need to correspond to 50 points in another team. If you follow Guideline 1 (the people who do the work should be the ones who estimate it), then you shouldn’t have any problems, as each team looks after its own backlog (and estimates). So it’s possible that one team that achieves 1000 points in an iteration delivers the equivalent of another team that achieves 20 points. We don’t like comparison because, when competition is initiated between teams on a common baseline, we have seen more detrimental effects than positive ones on overall team performance (we have at least a good lot of anecdotal evidence).

7.3. How often should you reestimate or refine your estimates (over time)?

7.3.1. As part of the PPG process (the way we do projects at Suncorp), features are not broken down into stories until the project is in the Delivery phase. Within the Delivery phase, features are typically broken down into stories once one is looking to deliver the feature within the next few iterations and, in the worst case, for the whole MVP. As an output of the Discovery phase, we recommend that features are small enough to be deliverable in a few iterations. As such, in an ideal world you would only get stale estimates for stories of features that weren’t delivered when the first version of the feature was delivered (i.e., stories that got pushed back to a later MVP). You can always get a request from stakeholders (e.g., the business sponsor) for a more accurate estimate. My response is that if your features are already broken down to something that you can deliver in a few iterations, the estimate should already be sufficiently accurate for a decision to be made. If more accuracy is required, then maybe this points to a deeper smell and a false economy in trying to create certainty for something that is uncertain. I typically try to talk in terms of ranges to get the audience back to the fact that it remains a WAG.

7.4. How do you deal with the interruptions of calls for estimates?

7.4.1. You should be working on the most valuable thing that the team can deliver, so if giving an estimate is the next most valuable piece of work for the team, then that’s what you should do; if not, then you shouldn’t. This then leads to the question of doing this estimation stuff on the side, since “it’s not real work”. The advice I give is: context switch too much at your own peril. To quote a favourite slogan of mine, “Multi-tasking: the art of messing up many things at once”.

7.5. How do you ensure that your team doesn’t waste time on estimating things that will never become a reality?

7.5.1. One of the reasons for giving estimates is to clarify the cost aspect of doing a piece of work. If the benefits don’t outweigh the cost, then hopefully you won’t be implementing it. In other words, estimates are important information for the calculation of value. If, however, you find yourselves estimating many red herrings, then yes, it can be wasteful, but it also shows that your input is valuable in the discussion. Obviously finding the balance is important and that’s a conversation…